Video Topic Detection and Tracking - Demo Issue Summary 1.1 - Video Processing

Posted 小羊小羊小羊羊羊


Goal: video → audio → text (speech recognition)

The moviepy library can convert an MP4 file to an MP3 file.
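As a rough illustration of that step, here is a minimal sketch using moviepy's `VideoFileClip`. The helper names `mp3_path_for` and `extract_audio` are hypothetical, and the import location is an assumption that depends on the installed moviepy version (1.x uses `moviepy.editor`, 2.x the package root), so the code tries both.

```python
from pathlib import Path


def mp3_path_for(video_path: str) -> Path:
    """Return the MP3 path next to the video, e.g. talk.mp4 -> talk.mp3."""
    return Path(video_path).with_suffix(".mp3")


def extract_audio(video_path: str) -> Path:
    """Write the video's audio track to an MP3 file and return its path."""
    # Assumption: moviepy 2.x exports VideoFileClip from the package root,
    # while moviepy 1.x keeps it in moviepy.editor.
    try:
        from moviepy import VideoFileClip
    except ImportError:
        from moviepy.editor import VideoFileClip

    out_path = mp3_path_for(video_path)
    clip = VideoFileClip(video_path)
    try:
        if clip.audio is None:
            raise ValueError(f"{video_path} has no audio track")
        clip.audio.write_audiofile(str(out_path))
    finally:
        clip.close()  # release the file handles held by the reader
    return out_path
```

Calling `extract_audio("demo.mp4")` (a placeholder file name) would write `demo.mp3` next to the video.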

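The pydub library can convert MP3 files to FLAC, but FFmpeg must be installed first.

On its own, pydub only handles WAV files; FFmpeg is what adds support for the many other audio formats.

Download the FFmpeg archive from the official site, extract it, and add its bin folder to the system PATH environment variable. After that, install pydub and it works normally.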
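Before running any conversion it can help to confirm that the FFmpeg binaries are really visible on the PATH. The sketch below uses only the standard library; `find_tool` is a hypothetical helper name, and checking `ffprobe` alongside `ffmpeg` is an assumption based on pydub using it to inspect input files.

```python
import shutil


def find_tool(name: str):
    """Return the full path of an executable on PATH, or None if it is missing."""
    return shutil.which(name)


if __name__ == "__main__":
    # Both binaries live in FFmpeg's bin folder.
    for tool in ("ffmpeg", "ffprobe"):
        location = find_tool(tool)
        print(f"{tool}: {location or 'NOT FOUND on PATH'}")
```

If either tool is reported as missing, reopen the terminal (or IDE) so that it picks up the updated environment variable, then check again.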

Once FFmpeg is installed, pydub can also convert MP4 files to MP3.

The following example comes from GitHub:

    import os
    import glob
    from pydub import AudioSegment

    video_dir = '/home/johndoe/downloaded_videos/'  # Path where the videos are located
    extension_list = ('*.mp4', '*.flv')

    os.chdir(video_dir)
    for extension in extension_list:
        for video in glob.glob(extension):
            mp3_filename = os.path.splitext(os.path.basename(video))[0] + '.mp3'
            AudioSegment.from_file(video).export(mp3_filename, format='mp3')
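The MP3-to-FLAC step mentioned earlier follows the same pydub pattern. This is a minimal sketch, assuming FFmpeg is on the PATH; `flac_path_for` and `mp3_to_flac` are hypothetical helper names, not part of pydub.

```python
from pathlib import Path


def flac_path_for(audio_path: str) -> Path:
    """Return the FLAC path next to the input, e.g. talk.mp3 -> talk.flac."""
    return Path(audio_path).with_suffix(".flac")


def mp3_to_flac(mp3_path: str) -> Path:
    """Decode an MP3 file with pydub and re-export it as FLAC."""
    # Imported lazily so the path helper works without pydub installed.
    from pydub import AudioSegment

    out_path = flac_path_for(mp3_path)
    audio = AudioSegment.from_file(mp3_path, format="mp3")  # needs FFmpeg
    audio.export(str(out_path), format="flac")
    return out_path
```

Calling `mp3_to_flac("demo.mp3")` (a placeholder file name) would produce `demo.flac` in the same folder.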

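The speech_recognition library performs the speech recognition, but it does not accept MP3 files, which is why the MP3 file has to be converted to FLAC first.

Audio file types supported by SpeechRecognition:

WAV: must be in PCM/LPCM format

AIFF

AIFF-C

FLAC: must be native FLAC format; OGG-FLAC is not supported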
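A small guard in front of the loading step makes the format restriction explicit. This is only a sketch: the extension set is my own assumed mapping of the formats above (the library inspects file contents, not the extension), and `is_supported` and `load_audio` are hypothetical helper names. `sr.AudioFile` and `Recognizer.record` are the library calls used to read a file.

```python
from pathlib import Path

# Assumed extensions for WAV, AIFF, AIFF-C and FLAC files.
SUPPORTED_EXTENSIONS = {".wav", ".aiff", ".aif", ".aifc", ".flac"}


def is_supported(path: str) -> bool:
    """Cheap pre-check based on the file extension only."""
    return Path(path).suffix.lower() in SUPPORTED_EXTENSIONS


def load_audio(path: str):
    """Read a whole audio file into a SpeechRecognition AudioData object."""
    import speech_recognition as sr  # imported lazily; needs SpeechRecognition

    if not is_supported(path):
        raise ValueError(f"{path}: convert to WAV, AIFF or FLAC first")
    recognizer = sr.Recognizer()
    with sr.AudioFile(path) as source:
        return recognizer.record(source)  # reads until the end of the file
```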

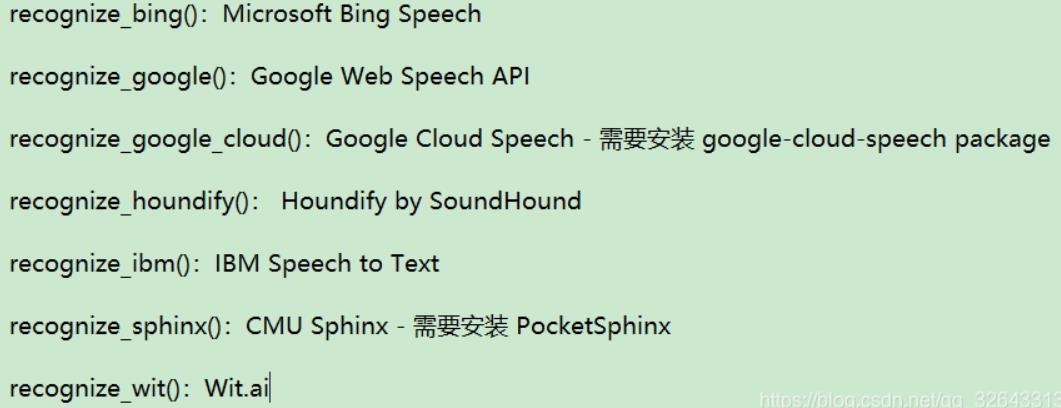

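SpeechRecognition's Recognizer class offers seven recognition methods.

Of these, only recognize_sphinx() works offline, using the CMU Sphinx engine; the other six need an internet connection because they call remote APIs. SpeechRecognition also ships with a default API key for the Google Web Speech API, so that service can be used right away.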

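I chose recognize_sphinx. While installing its Sphinx backend I found that wheel and SWIG are required: wheel can be installed directly with pip, but SWIG cannot.

Download the SWIG archive from the official site, extract it, and add it to the PATH environment variable; after that Sphinx installs normally. The installation works from cmd, but PyCharm still reports an error.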
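I did not track down why PyCharm behaves differently. One possible cause (an assumption, not something confirmed here) is that PyCharm uses a different interpreter, or a PATH captured before the SWIG folder was added. The following standard-library sketch prints both values so they can be compared between cmd and PyCharm; `environment_report` is a hypothetical helper name.

```python
import shutil
import sys


def environment_report() -> dict:
    """Collect the interpreter path and the SWIG location seen by this process."""
    return {
        "python": sys.executable,       # interpreter running this script
        "swig": shutil.which("swig"),   # None if SWIG is not on PATH
    }


if __name__ == "__main__":
    for name, value in environment_report().items():
        print(f"{name}: {value or 'NOT FOUND on PATH'}")
```

If the two environments print different interpreters, installing with that interpreter's own pip (`python -m pip install ...`) is worth trying.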

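Once SpeechRecognition and the Sphinx backend (pocketsphinx) are both installed, find the pocketsphinx-data folder inside the SpeechRecognition installation directory and create a new folder named "zh-CN" in it. This folder holds the Chinese acoustic model, language model, and dictionary file, and Chinese recognition only works once they are in place.

The Chinese language and acoustic models that pocketsphinx needs

Download: http://sourceforge.net/projects/cmusphinx/files/Acoustic%20and%20Language%20Models/

Download cmusphinx-zh-cn-5.2.tar.gz, extract it, and copy the contents into the zh-CN folder. Then rename the zh_cn.cd_cont_5000 folder to acoustic-model, rename zh_cn.lm.bin to language-model.lm.bin, and change the extension of zh_cn.dic from .dic to .dict (SpeechRecognition looks for this file under the name pronounciation-dictionary.dict, so that is the safest name to use).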
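The copy-and-rename steps can be scripted. The sketch below is one way to do it with the standard library (Python 3.8+); `install_zh_cn_model` and `RENAMES` are hypothetical names. The target name `pronounciation-dictionary.dict` (spelled that way) is, to my knowledge, what `recognize_sphinx` looks for, so treat it as an assumption and check it against the installed version.

```python
import shutil
from pathlib import Path

# Name in the extracted archive -> name expected inside the zh-CN folder.
RENAMES = {
    "zh_cn.cd_cont_5000": "acoustic-model",
    "zh_cn.lm.bin": "language-model.lm.bin",
    "zh_cn.dic": "pronounciation-dictionary.dict",  # assumed target name
}


def install_zh_cn_model(extracted_dir: str, pocketsphinx_data_dir: str) -> Path:
    """Copy the Chinese model files into <pocketsphinx-data>/zh-CN."""
    source = Path(extracted_dir)
    target = Path(pocketsphinx_data_dir) / "zh-CN"
    target.mkdir(parents=True, exist_ok=True)
    for old_name, new_name in RENAMES.items():
        src, dst = source / old_name, target / new_name
        if src.is_dir():
            shutil.copytree(src, dst, dirs_exist_ok=True)  # acoustic model folder
        else:
            shutil.copy2(src, dst)  # single model or dictionary file
    return target
```

The data folder can usually be located with `Path(speech_recognition.__file__).parent / "pocketsphinx-data"`. After the files are in place, Chinese recognition is requested with `recognizer.recognize_sphinx(audio, language="zh-CN")`.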

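However, the recognition accuracy does not seem very good.

Using the Google Web Speech API instead fails with the error "A connection attempt failed because the connected party did not properly respond after a period of time, or established connection failed because connected host has failed to respond."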
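Since the online recognizer can fail at the network level, one option is to try it first and fall back to the offline one. The sketch below shows that pattern; `first_successful` and `default_engines` are hypothetical helper names, and the broad `except Exception` is a deliberate simplification because the failure may surface as a library error or as a plain socket timeout.

```python
def first_successful(audio, engines):
    """Try each (name, recognize) pair in order; return (name, text) of the first success."""
    errors = []
    for name, recognize in engines:
        try:
            return name, recognize(audio)
        except Exception as error:  # network timeouts, unintelligible audio, ...
            errors.append(f"{name}: {error}")
    raise RuntimeError("all recognizers failed: " + "; ".join(errors))


def default_engines(recognizer, language="zh-CN"):
    """Online recognizer first, offline Sphinx as the fallback."""
    return [
        ("google", lambda audio: recognizer.recognize_google(audio, language=language)),
        ("sphinx", lambda audio: recognizer.recognize_sphinx(audio, language=language)),
    ]
```

With a `speech_recognition.Recognizer()` instance `r` and recorded `audio`, the call would be `first_successful(audio, default_engines(r))`.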

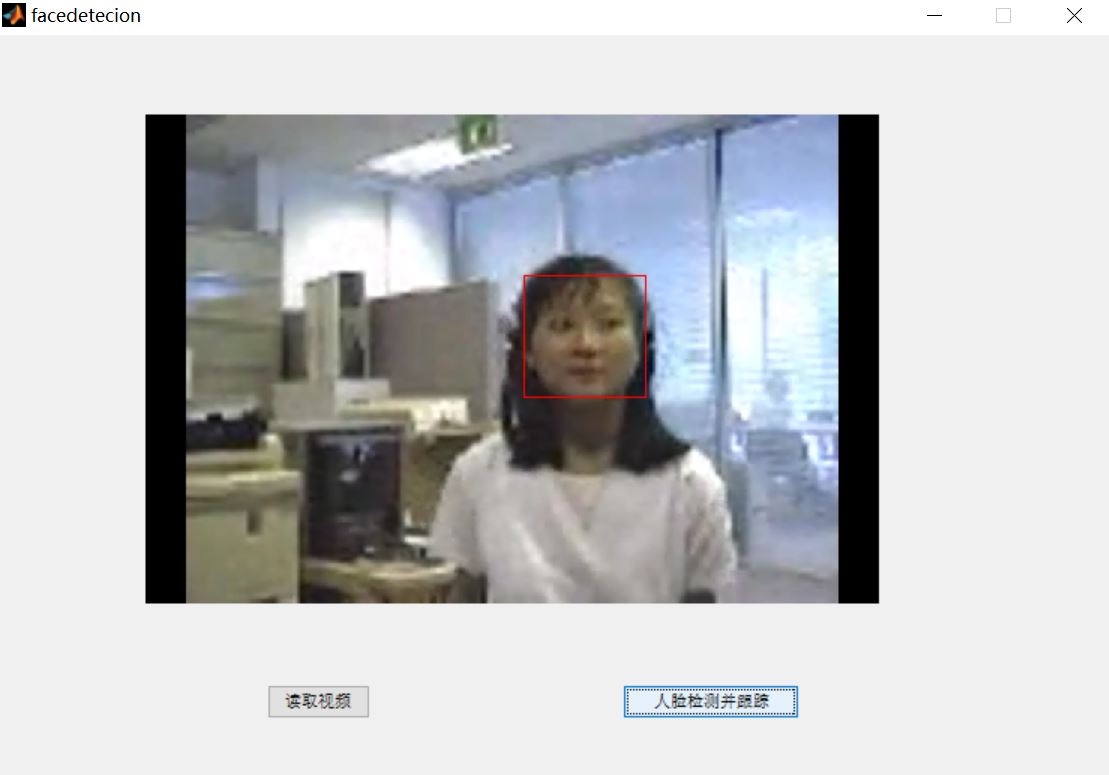

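[Face Recognition] Real-Time Face Detection and Tracking with a MATLAB GUI (with MATLAB Source Code), Issue 673

1. Introduction

This part covers how to detect faces in a video stream and how to track them. Object detection and tracking matter in many computer vision applications, including activity recognition, automotive safety, and surveillance, so it summarizes face detection and tracking in MATLAB.

First, the pipeline: face detection → facial feature extraction → face tracking.

2. Source Code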

function varargout = facedetecion(varargin)

% FACEDETECION MATLAB code for facedetecion.fig

% FACEDETECION, by itself, creates a new FACEDETECION or raises the existing

% singleton*.

%

% H = FACEDETECION returns the handle to a new FACEDETECION or the handle to

% the existing singleton*.

%

% FACEDETECION('CALLBACK',hObject,eventData,handles,...) calls the local

% function named CALLBACK in FACEDETECION.M with the given input arguments.

%

% FACEDETECION('Property','Value',...) creates a new FACEDETECION or raises the

% existing singleton*. Starting from the left, property value pairs are

% applied to the GUI before facedetecion_OpeningFcn gets called. An

% unrecognized property name or invalid value makes property application

% stop. All inputs are passed to facedetecion_OpeningFcn via varargin.

%

% *See GUI Options on GUIDE's Tools menu. Choose "GUI allows only one

% instance to run (singleton)".

%

% See also: GUIDE, GUIDATA, GUIHANDLES

% Edit the above text to modify the response to help facedetecion

% Last Modified by GUIDE v2.5 01-May-2017 19:18:42

% Begin initialization code - DO NOT EDIT

gui_Singleton = 1;

gui_State = struct('gui_Name', mfilename, ...

'gui_Singleton', gui_Singleton, ...

'gui_OpeningFcn', @facedetecion_OpeningFcn, ...

'gui_OutputFcn', @facedetecion_OutputFcn, ...

'gui_LayoutFcn', [] , ...

'gui_Callback', []);

if nargin && ischar(varargin{1})

gui_State.gui_Callback = str2func(varargin{1});

end

if nargout

[varargout{1:nargout}] = gui_mainfcn(gui_State, varargin{:});

else

gui_mainfcn(gui_State, varargin{:});

end

% End initialization code - DO NOT EDIT

% --- Executes just before facedetecion is made visible.

function facedetecion_OpeningFcn(hObject, eventdata, handles, varargin)

% This function has no output args, see OutputFcn.

% hObject handle to figure

% eventdata reserved - to be defined in a future version of MATLAB

% handles structure with handles and user data (see GUIDATA)

% varargin command line arguments to facedetecion (see VARARGIN)

% Choose default command line output for facedetecion

handles.output = hObject;

% Update handles structure

guidata(hObject, handles);

% UIWAIT makes facedetecion wait for user response (see UIRESUME)

% uiwait(handles.figure1);

% --- Outputs from this function are returned to the command line.

function varargout = facedetecion_OutputFcn(hObject, eventdata, handles)

% varargout cell array for returning output args (see VARARGOUT);

% hObject handle to figure

% eventdata reserved - to be defined in a future version of MATLAB

% handles structure with handles and user data (see GUIDATA)

% Get default command line output from handles structure

varargout{1} = handles.output;

% --- Executes on button press in pushbutton1.

function pushbutton1_Callback(hObject, eventdata, handles)

% hObject handle to pushbutton1 (see GCBO)

% eventdata reserved - to be defined in a future version of MATLAB

% handles structure with handles and user data (see GUIDATA)

global myvideo myvideo1;

[fileName,pathName] = uigetfile('*.*','Please select a video'); % file dialog: choose a file

if(fileName)

fileName = strcat(pathName,fileName);

fileName = lower(fileName); % normalize to lowercase

else

% J = 0; % records the regions obtained by region-growing segmentation

msgbox('Please select a video');

return; % exit

end

end

% boxlnserter = vision.ShapeInserter('BorderColor','Custom','CustomBorderColor',[255 0 0]);

% videoOut = step(boxlnserter,videoFrame,bbox);

myvideo = VideoReader(fileName);

nFrames = myvideo.NumberOfFrames

vidHeight = myvideo.Height

vidWidth = myvideo.Width

mov(1:nFrames) = struct('cdata',zeros(vidHeight,vidWidth,3,'uint8'),'colormap',[]);

B_K = read(myvideo,1);

axes(handles.axes1);

imshow(B_K);

% myvideo = VideoReader(fileName);

% nFrames = myvideo.NumberOfFrames

% vidHeight = myvideo.Height

% vidWidth = myvideo.Width

% mov(1:nFrames) = struct('cdata',zeros(vidHeight,vidWidth,3,'uint8'),'colormap',[]);

% B_K = read(myvideo,1);

% axes(handles.axes1);

% imshow(B_K);

% --- Executes on button press in pushbutton2.

function pushbutton2_Callback(hObject, eventdata, handles)

% hObject handle to pushbutton2 (see GCBO)

% eventdata reserved - to be defined in a future version of MATLAB

% handles structure with handles and user data (see GUIDATA)

global myvideo myvideo1;

nFrames = myvideo.NumberOfFrames

vidHeight = myvideo.Height

vidWidth = myvideo.Width

mov(1:nFrames) = struct('cdata',zeros(vidHeight,vidWidth,3,'uint8'),'colormap',[]);

faceDetector = vision.CascadeObjectDetector();

% videoFileReader = vision.VideoFileReader(fileName);

% videoFrame = step(videoFileReader);

3. Results
