Hive实战 --- 电子商务消费行为分析

Posted 你∈我

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了Hive实战 --- 电子商务消费行为分析相关的知识,希望对你有一定的参考价值。

目录

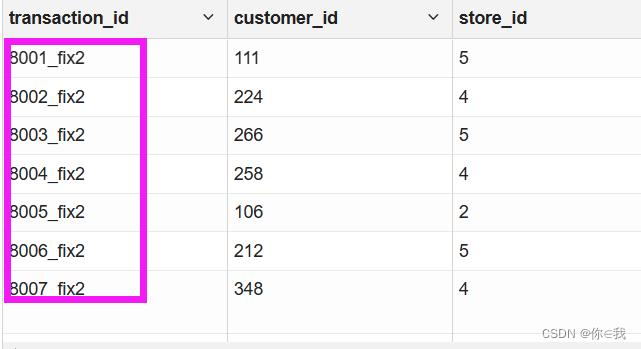

对transaction_details中的重复数据生成新ID

找出PII (personal information identification‘个人信息识别’) 数据进行加密

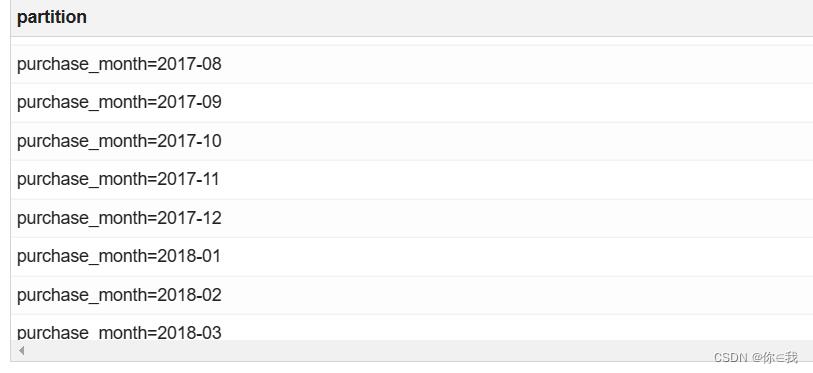

重新组织transaction数据按照日期YYYY-MM(按月)做分区

1.在ext_store_review中找出存在冲突的交易映射关系

数据结构

Customer表

| customer_details | details |

|---|---|

| customer_id | Int, 1 - 500 |

| first_name | string |

| last_name | string |

| | string, such as willddy@gmail.com |

| gender | string, Male or female |

| address | string |

| country | string |

| language | string |

| job | string, job title/position |

| credit_type | string, credit card type, such as visa |

| credit_no | string, credit card number |

Transaction表

| transaction_details | details |

|---|---|

| transaction_id | Int, 1 - 1000 |

| customer_id | Int, 1 - 500 |

| store_id | Int, 1 - 5 |

| price | decimal, such as 5.08 |

| product | string, things bought |

| date | string, when to purchase |

| time | string, what time to purchase |

Store表

| store_details | details |

|---|---|

| store_id | Int, 1 - 5 |

| store_name | string |

| employee_number | Int, 在store有多少employee |

Review表

| store_review | details |

|---|---|

| stransaction_id | Int, 1 - 8000 |

| store_id | Int, 1 - 5 |

| review_store | Int, 1 - 5 |

上传数据

创建目录用于存放数据

%sh

hdfs dfs -mkdir -p /shopping/data/customer

hdfs dfs -mkdir -p /shopping/data/store

hdfs dfs -mkdir -p /shopping/data/review

hdfs dfs -mkdir -p /shopping/data/transaction把本地文件上传到HDFS上

%sh

cd /opt/stufile/storetransaction

ls -al

hdfs dfs -put ./customer_details.csv /shopping/data/customer

hdfs dfs -put ./store_details.csv /shopping/data/store

hdfs dfs -put ./store_review.csv /shopping/data/review

hdfs dfs -put ./transaction_details.csv /shopping/data/transaction创建外部表

创建数据库

%hive

-- 如果存在此表则删除

drop database if exists shopping cascade;

--创建数据库

create database if not exists shopping;创建表

%hive

create external table if not exists ext_customer_detail

(

customer_id string,

first_name string,

last_name string,

email string,

gender string,

address string,

country string,

language string,

job string,

credit_type string,

credit_no string

)

row format serde 'org.apache.hadoop.hive.serde2.OpenCSVSerde'

location '/shopping/data/customer'

tblproperties ('skip.header.line.count' = '1');

create external table if not exists ext_transaction_details

(

transaction_id string,

customer_id string,

store_id string,

price decimal(8, 2),

product string,

purchase_date string,

purchase_time string

) row format serde 'org.apache.hadoop.hive.serde2.OpenCSVSerde'

location '/shopping/data/transaction'

tblproperties ('skip.header.line.count' = '1');

drop table ext_store_details;

create external table if not exists ext_store_details

(

store_id string,

store_name string,

employee_number string

) row format serde 'org.apache.hadoop.hive.serde2.OpenCSVSerde'

location '/shopping/data/store'

tblproperties ('skip.header.line.count' = '1');

drop table ext_store_review;

create external table if not exists ext_store_review

(

store_id string,

transaction_id string,

review_score string

) row format serde 'org.apache.hadoop.hive.serde2.OpenCSVSerde'

location '/shopping/data/review'

tblproperties ('skip.header.line.count' = '1');TIP:

OpenCSVSerde 默认的分隔符 (separator)、quote 以及逃逸字符(escape characters )分别为 、" 以及 '

如果我们查看表结构的时候,我们会发现如果 row format serde 为 org.apache.hadoop.hive.serde2.OpenCSVSerde,不管你建表的时候指定字段是什么类型,其显示的都是 string 类型

tblproperties ('skip.header.line.count' = '1');从外部表导入数据跳过(忽略)首行。

数据清洗

对transaction_details中的重复数据生成新ID

%hive

use shopping;

with basetb as (

select row_number() over (partition by transaction_id order by transaction_id) as rn

, transaction_id

, customer_id

, store_id

, price

, product

, purchase_date

, purchase_time

, substr(purchase_date, 0, 7) as purchase_month

from ext_transaction_details),

basetb2 as (

select `if`(rn = 1, transaction_id, concat(transaction_id, '_fix', rn)) transaction_id

, customer_id

, store_id

, price

, product

, purchase_date

, purchase_time

, purchase_month

from basetb)

select *

from basetb2

where transaction_id like '%fix%';因为查询的数据比较多,显示不全所有要在后面加一个查询

过滤掉store_review中没有评分的数据

查出有评分的数据

%hive

use shopping;

create view if not exists vm_store_review as

select *

from ext_store_review

where review_score <> '';

select * from vm_store_review;

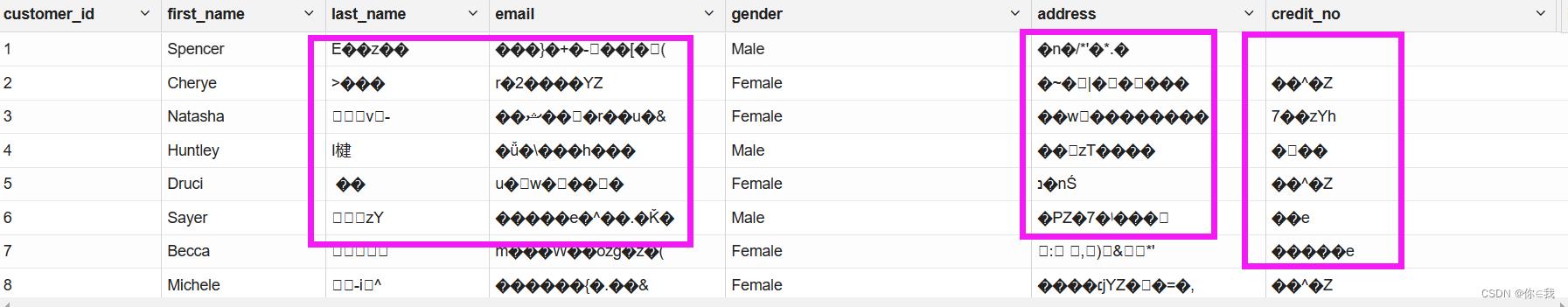

找出PII (personal information identification‘个人信息识别’) 数据进行加密

%hive

use shopping;

drop view customer_detail;

create view vm_customer_details as

select

customer_id,first_name,unbase64(last_name)as last_name,unbase64(email) as email,

gender,unbase64(address) as address,

unbase64(concat(unbase64(credit_no),'hello')) as credit_no --二次加密

from ext_customer_details;

重新组织transaction数据按照日期YYYY-MM(按月)做分区

%hive

use shopping;

set hive.exec.dynamic.partition.mode=nonstrict;

with basetb as (

select row_number() over (partition by transaction_id order by transaction_id) as rn

, transaction_id

, customer_id

, store_id

, price

, product

, purchase_date

, purchase_time

, substr(purchase_date, 0, 7) as purchase_month

from ext_transaction_details)

insert overwrite table transaction_details partition (purchase_month)

select `if`(rn = 1, transaction_id, concat(transaction_id, '_fix', rn)) transaction_id

, customer_id

, store_id

, price

, product

, purchase_date

, purchase_time

, purchase_month

from basetb;

show partitions transaction_details;

Customer分析

1.找出顾客最常用的信用卡

%hive

use shopping;

select credit_type, max(credit_type) counts

from ext_customer_details

group by credit_type

order by counts desc;

2.找出户客资料中排名前五的职位名称

select job, count(job) counts

from ext_customer_details

group by job

order by counts desc

limit 5;

3.在美国女性最常用的信用卡

%hive

use shopping;

select credit_type, count(credit_type) counts

from ext_customer_details

where gender = 'Female' and country='United States'

group by credit_type

order by counts desc;

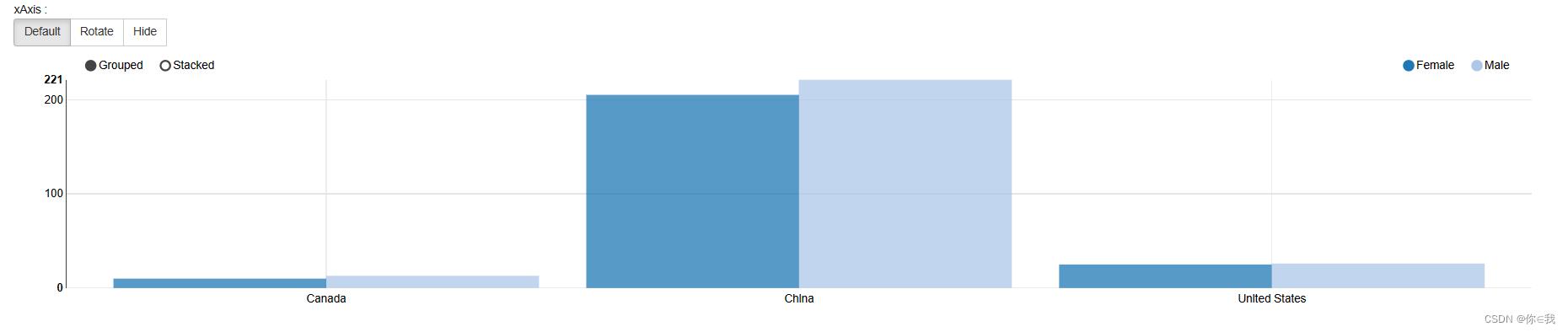

4.按性别和国家进行客户统计

%hive

use shopping;

select country, gender,count(customer_id)

from ext_customer_details

group by gender, country;

Transaction

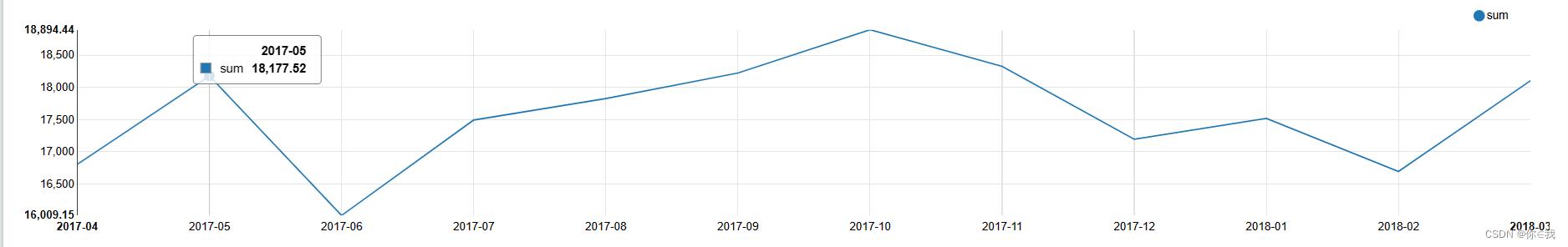

1.计算每月总收入

%hive

use shopping;

select substr(purchase_date,0,7) month,round(sum(price),2) sum

from ext_transaction_details

group by substr(purchase_date,0,7);

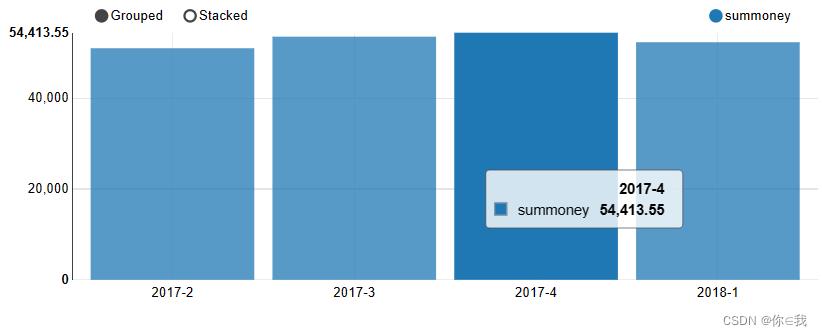

2.计算每个季度的总收入

with basetb as (

select concat_ws('-', cast(year(purchase_date) as string),

cast(quarter(purchase_date) as string)) as year_quarter,

price

from transaction_details)

select year_quarter ,sum(price) sumMoney from basetb group by year_quarter;

3.按年计算总收入

%hive

use shopping;

select year(purchase_date) years , round(sum(price),2) sum

from ext_transaction_details

group by year(purchase_date);

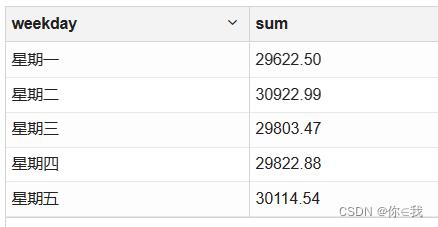

4.按工作日计算总收入

%hive

use shopping;

with basetb as (

select `dayofweek`(purchase_date) weekday, price

from transaction_details)

select case

when (weekday - 1) = 1 then '星期一'

when (weekday - 1) = 2 then '星期二'

when (weekday - 1) = 3 then '星期三'

when (weekday - 1) = 4 then '星期四'

when (weekday - 1) = 5 then '星期五'

end as weekday,

sum(price) sum

from basetb

group by weekday

having weekday between 2 and 6;

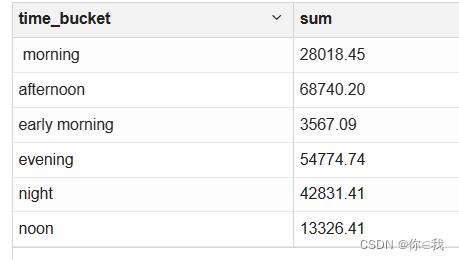

5.按时间段计算总收入(需要清理数据)

%hive

use shopping;

with basetb1 as (

select price,

purchase_time,

case

when purchase_time like '%AM' then split(purchase_time, '\\\\s+')[0]

when purchase_time like '%PM' then concat_ws(':',cast(`if`(

(cast(split(purchase_time, ':')[0] as int) + 12) == 24,0, (cast(split(purchase_time, ':')[0] as int) + 12)) as string)

, split(split(purchase_time, ':')[1], '\\\\s+')[0])

else purchase_time

end time_format

from transaction_details),

basetb2 as (select price,

purchase_time,

(cast(split(time_format, ':')[0] as decimal(4, 2)) +

cast(split(time_format, ':')[1] as decimal(4, 2)) / 60) purchase_time_num

from basetb1),

basetb3 as (select price,

purchase_time,

`if`(purchase_time_num > 5 and purchase_time_num <= 8, 'early morning',

`if`(purchase_time_num > 8 and purchase_time_num <= 11, ' morning',

`if`(purchase_time_num > 11 and purchase_time_num <= 13, 'noon',

`if`(purchase_time_num > 13 and purchase_time_num <= 18, 'afternoon',

`if`(purchase_time_num > 18 and purchase_time_num <= 22, 'evening','night'))))) as time_bucket

from basetb2)

select time_bucket, sum(price) sum

from basetb3

group by time_bucket;

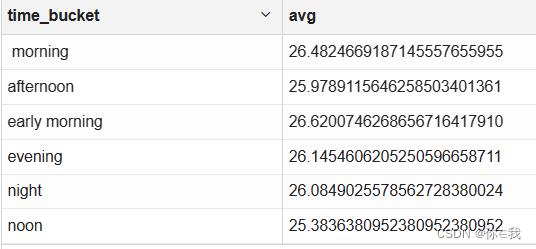

6.按时间段计算平均消费

%hive

use shopping;

with basetb1 as (

select price,

purchase_time,

case

when purchase_time like '%AM' then split(purchase_time, '\\\\s+')[0]

when purchase_time like '%PM' then concat_ws(':',cast(`if`(

(cast(split(purchase_time, ':')[0] as int) + 12) == 24,0, (cast(split(purchase_time, ':')[0] as int) + 12)) as string)

, split(split(purchase_time, ':')[1], '\\\\s+')[0])

else purchase_time

end time_format

from transaction_details),

basetb2 as (select price,

purchase_time,

(cast(split(time_format, ':')[0] as decimal(4, 2)) +

cast(split(time_format, ':')[1] as decimal(4, 2)) / 60) purchase_time_num

from basetb1),

basetb3 as (select price,

purchase_time,

`if`(purchase_time_num > 5 and purchase_time_num <= 8, 'early morning',

`if`(purchase_time_num > 8 and purchase_time_num <= 11, ' morning',

`if`(purchase_time_num > 11 and purchase_time_num <= 13, 'noon',

`if`(purchase_time_num > 13 and purchase_time_num <= 18, 'afternoon',

`if`(purchase_time_num > 18 and purchase_time_num <= 22, 'evening','night'))))) as time_bucket

from basetb2)

select time_bucket, avg(price) avg

from basetb3

group by time_bucket;

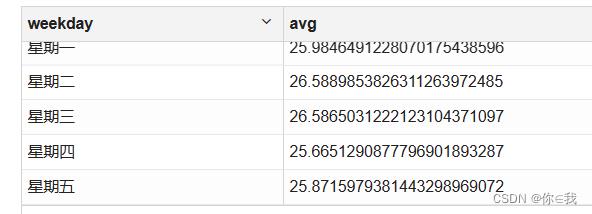

7.按工作日计算平均消费

%hive

use shopping;

with basetb as (

select `dayofweek`(purchase_date) weekday, price

from transaction_details)

select case

when (weekday - 1) = 1 then '星期一'

when (weekday - 1) = 2 then '星期二'

when (weekday - 1) = 3 then '星期三'

when (weekday - 1) = 4 then '星期四'

when (weekday - 1) = 5 then '星期五'

end weekday,

avg(price) avg

from basetb

group by weekday

having weekday between 2 and 6;

8.计算年、月、日的交易总数

%hive

use shopping;

select distinct purchase_date,

purchase_month,

year(purchase_date),

count(1) over (partition by year(purchase_date)) years,

count(1) over (partition by year(purchase_date),month(purchase_date)) months,

count(1) over (partition by year(purchase_date),month(purchase_date),day(purchase_date)) days

from transaction_details;

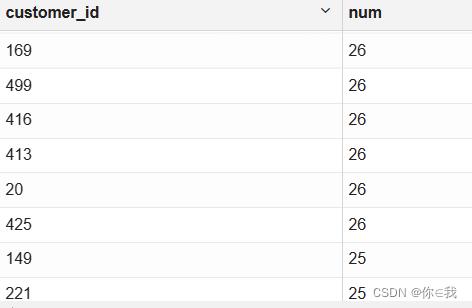

9.找出交易量最大的10个客户

%hive

use shopping;

select

customer_id,count(1) as num

from transaction_details

group by customer_id

order by num desc

limit 10

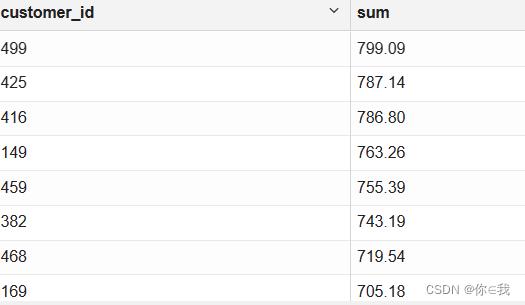

10.找出消费最多的前10位顾客

%hive

use shopping;

select customer_id,

sum(price) as sum

from transaction_details

group by customer_id

order by sum desc

limit 10

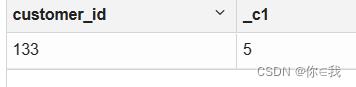

11.计该期间交易数量最少的用户

use shopping;

select customer_id, count(transaction_id)

from transaction_details

group by customer_id

order by count(transaction_id)

limit 1;

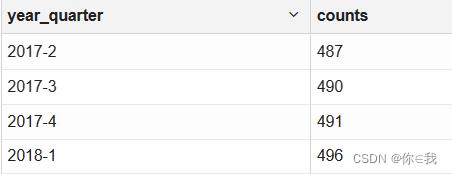

12.算每个季度的独立客户总数

%hive

use shopping;

with basetb as (

select distinct concat_ws('-', cast(year(purchase_date) as string),

cast(quarter(purchase_date) as string)) as year_quarter,

customer_id

from transaction_details)

select year_quarter, count(customer_id) counts

from basetb

group by year_quarter;

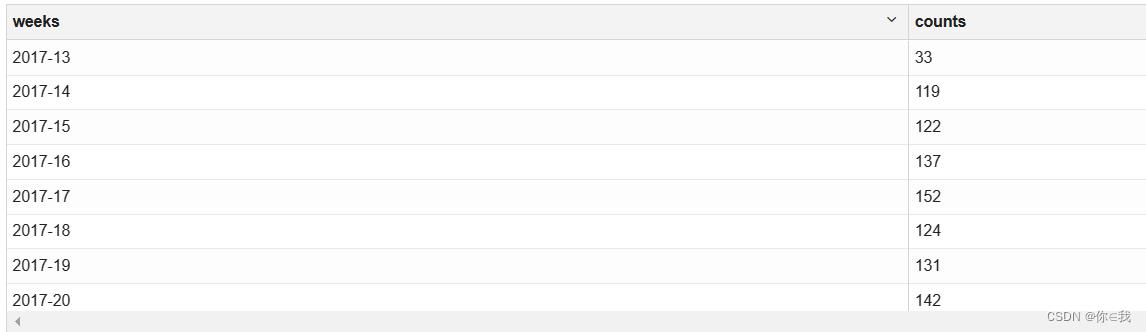

13.算每周的独立客户总数

%hive

use shopping;

with basetb as (

select distinct concat(cast(year(purchase_date) as string), '-', cast(weekofyear(purchase_date)as string)) weeks,

customer_id

from transaction_details)

select weeks, count(customer_id) counts

from basetb

group by weeks;

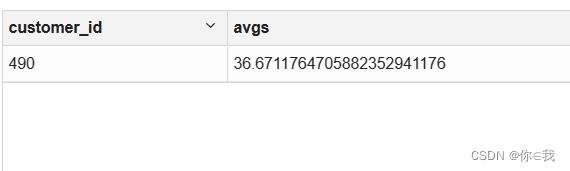

14.算整个活动客户平均花费的最大值

%hive

use shopping;

select customer_id,avg(price) avgs

from transaction_details

group by customer_id

order by avgs desc

limit 1;

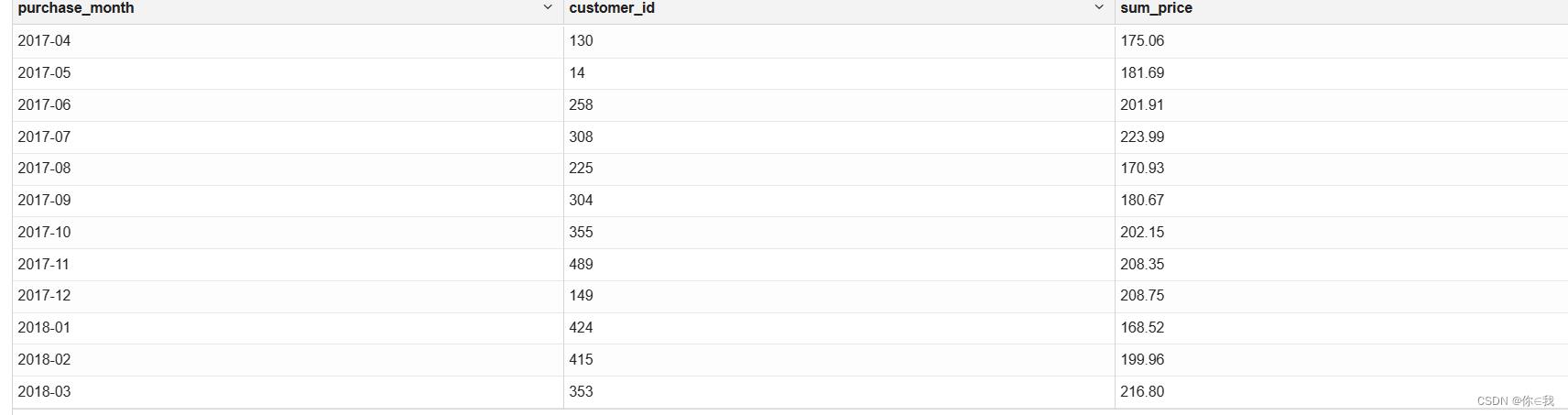

15.计每月花费最多的客户

%hive

use shopping;

with basetb as (

select purchase_month,

customer_id,

sum(price) sum_price

from transaction_details

group by purchase_month, customer_id),

basetb2 as (

select purchase_month,

customer_id,

sum_price,

row_number() over (partition by purchase_month order by sum_price desc ) rn

from basetb)

select purchase_month, customer_id, sum_price

from basetb2

where rn = 1;

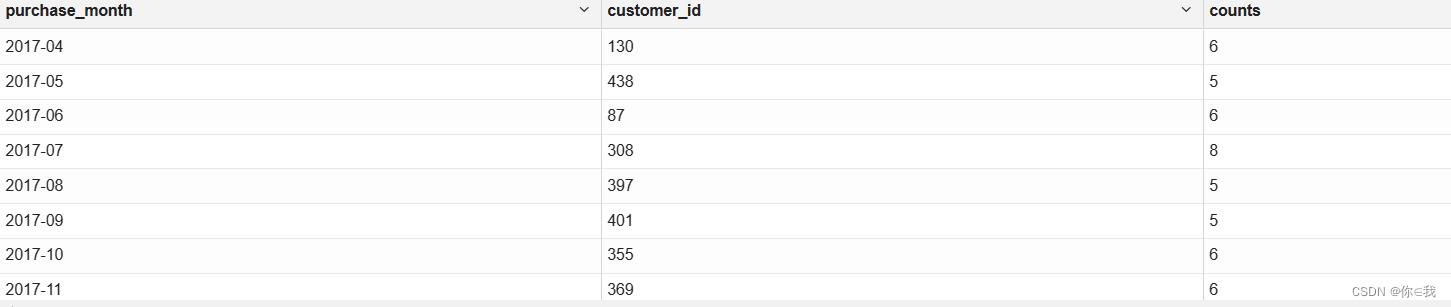

16.计每月访问次数最多的客户

%hive

use shopping;

with basetb as (

select purchase_month,

customer_id,

count(customer_id) counts

from transaction_details

group by purchase_month, customer_id),

basetb2 as (

select purchase_month,

customer_id,

counts,

row_number() over (partition by purchase_month order by counts desc ) rn

from basetb)

select purchase_month, customer_id, counts

from basetb2

where rn = 1;

17.总价找出最受欢迎的5种产品

select product,sum(price) sum

from transaction_details

group by product

order by sum desc

limit 5;

18.据购买频率找出最畅销的5种产品

select product,

count(1) counts

from transaction_details

group by product

order by counts desc

limit 5;

19.据客户数量找出最受欢迎的5种产品

select product,

count(distinct customer_id) counts

from transaction_details

group by product

order by counts desc

limit 5Store分析

1.按客流量找出最受欢迎的商店

%hive

use shopping;

select store_name, count(distinct customer_id) counts

from transaction_details td

join ext_store_details esd on td.store_id = esd.store_id

group by store_name

order by counts desc;

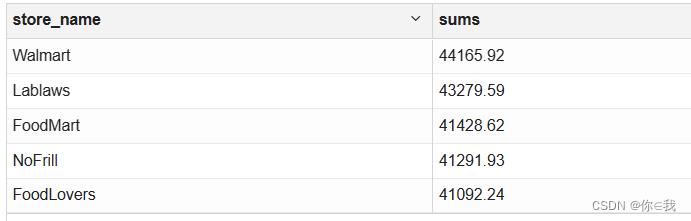

2.根据顾客消费价格找出最受欢迎的商店

%hive

use shopping;

select store_name, sum(price) sums

from transaction_details td

join ext_store_details esd on td.store_id = esd.store_id

group by store_name

order by sums desc;3.根据顾客交易情况找出最受欢迎的商店

%hive

use shopping;

select store_name, count(td.store_id) counts

from transaction_details td

join ext_store_details esd on td.store_id = esd.store_id

group by store_name

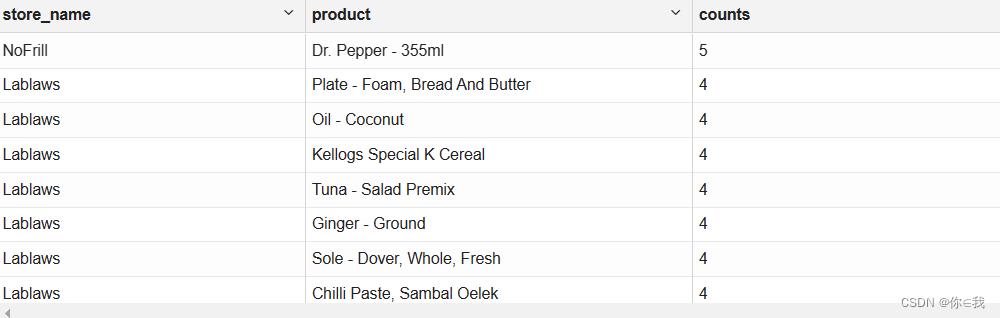

order by counts desc;4.根据商店和唯一的顾客id获取最受欢迎的产品

%hive

use shopping;

with basetb as (

select store_id, product, count(distinct customer_id) counts

from transaction_details

group by store_id, product),

basetb2 as (

select store_id,

product,

counts,

rank() over (partition by store_id order by counts desc ) as rn

from basetb)

select store_name, product, counts

from basetb2 tb2

join ext_store_details esd on tb2.store_id = esd.store_id

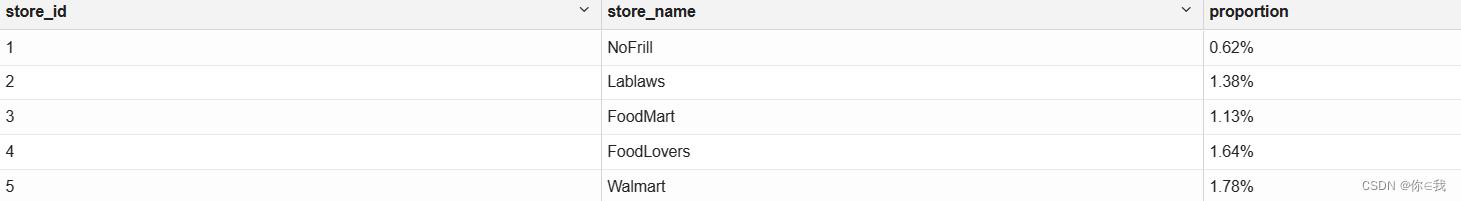

where rn = 1;5.获取每个商店的员工与顾客比

%hive

use shopping;

with t1 as (select count(1) c1, store_id

from transaction_details td

group by td.store_id)

select t1.store_id,

esd.store_name,

concat(substring(cast(esd.employee_number / t1.c1 as decimal(9, 8)) * 100.0, 0, 4), '%') proportion

from t1

join ext_store_details esd on t1.store_id = esd.store_id;

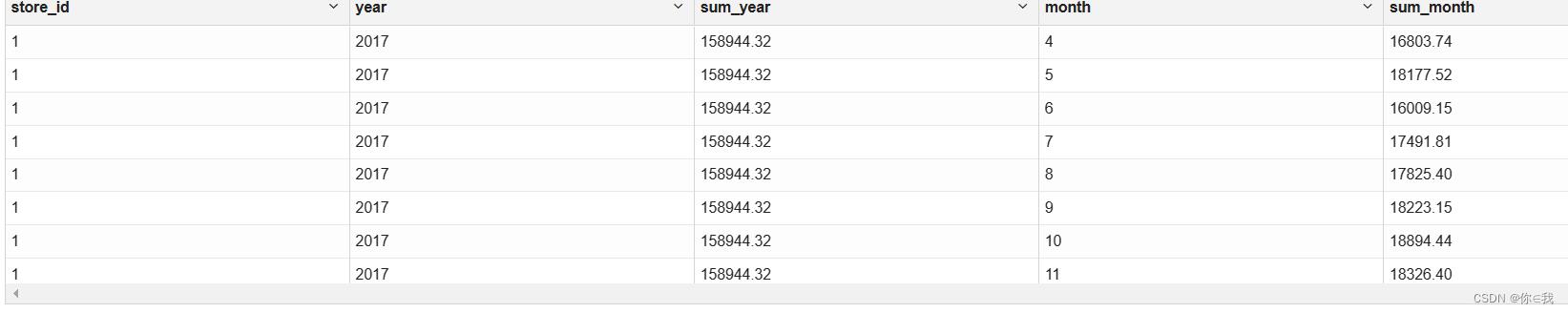

6.按年和月计算每家店的收入

%hive

use shopping;

select distinct *

from (

select store_id,

year(purchase_date) year,

sum(price) over (partition by year(purchase_date)) sum_year,

month(purchase_date) month,

sum(price) over (partition by year(purchase_date),month(purchase_date)) sum_month

from transaction_details

) tb;

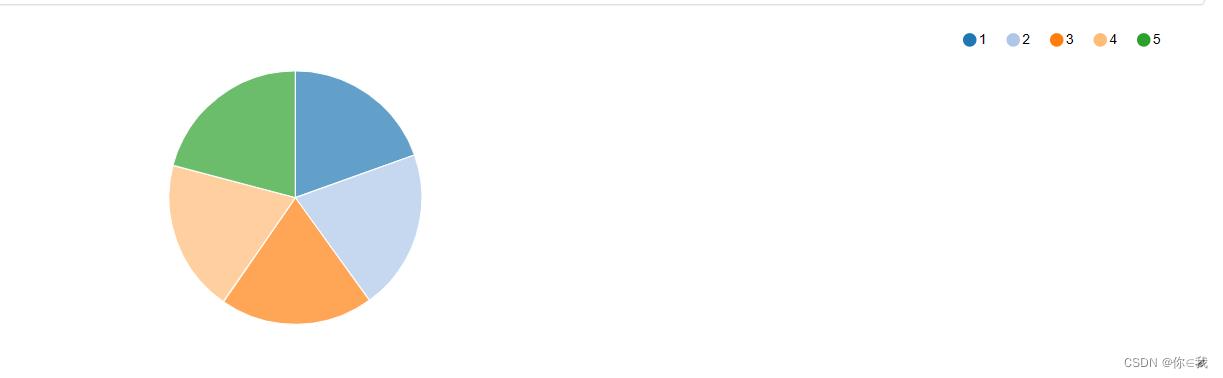

7.按店铺制作总收益饼图

%hive

use shopping;

select store_id,sum(price)

from transaction_details

group by store_id

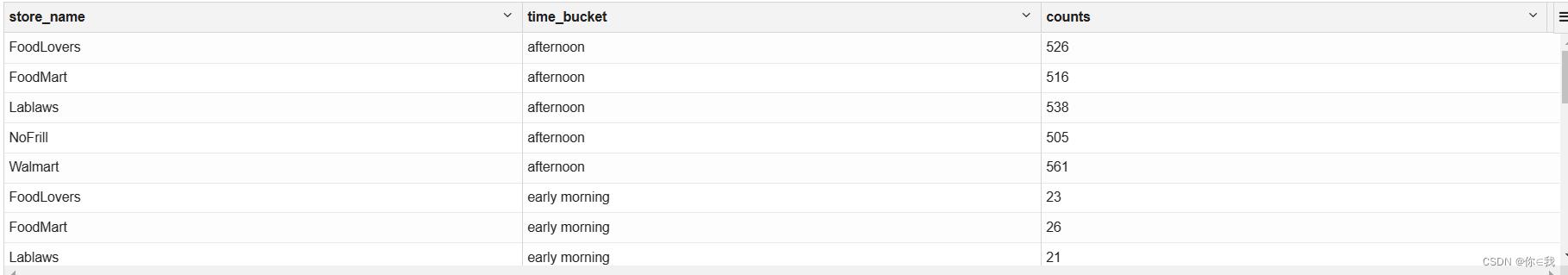

8.找出每个商店最繁忙的时间段

%hive

use shopping;

with basetb1 as (

select store_id,

customer_id,

purchase_time,

case

when purchase_time like '%AM' then split(purchase_time, '\\\\s+')[0]

when purchase_time like '%PM' then concat_ws(':',

cast(`if`(

(cast(split(purchase_time, ':')[0] as int) + 12) == 24,

0,

(cast(split(purchase_time, ':')[0] as int) + 12)) as string)

, split(split(purchase_time, ':')[1], '\\\\s+')[0])

else purchase_time

end time_format

from transaction_details),

basetb2 as (select store_id,

customer_id,

purchase_time,

(cast(split(time_format, ':')[0] as decimal(4, 2)) +

cast(split(time_format, ':')[1] as decimal(4, 2)) / 60) purchase_time_num

from basetb1),

basetb3 as (select store_id,

customer_id,

purchase_time,

`if`(purchase_time_num > 5 and purchase_time_num <= 8, 'early morning',

`if`(purchase_time_num > 8 and purchase_time_num <= 11, 'morning',

`if`(purchase_time_num > 11 and purchase_time_num <= 13, 'noon',

`if`(purchase_time_num > 13 and purchase_time_num <= 18, 'afternoon',

`if`(purchase_time_num > 18 and purchase_time_num <= 22, 'evening',

'night'))))) as time_bucket

from basetb2)

select esd.store_name,

tb3.time_bucket,

count(customer_id) counts

from basetb3 tb3

join ext_store_details esd on tb3.store_id = esd.store_id

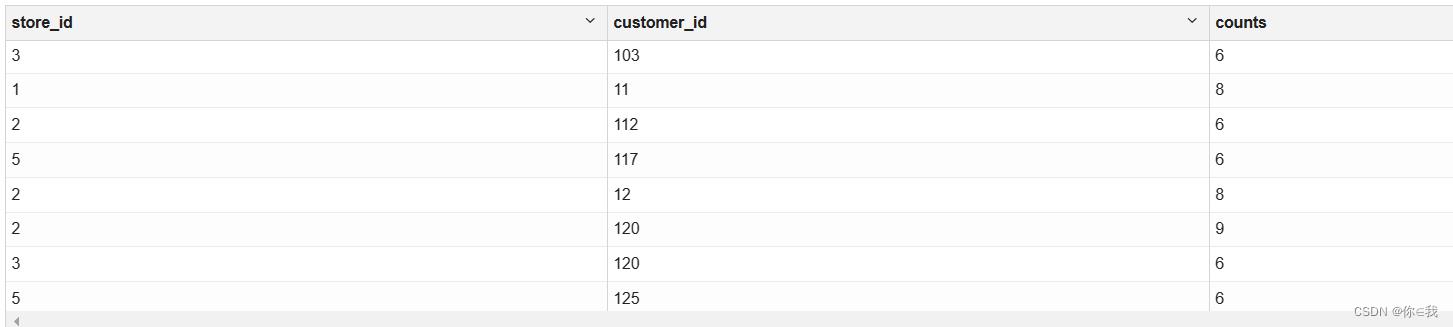

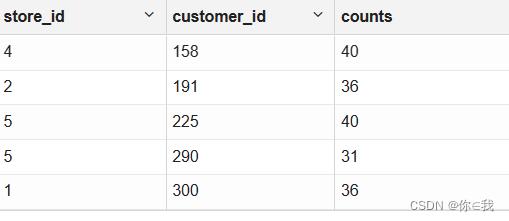

group by esd.store_name, time_bucket;9.找出每家店的忠实顾客

购买次数大于5,认为他是忠实粉丝

%hive

use shopping;

select *

from (

select store_id, customer_id, count(1) counts

from transaction_details

group by store_id, customer_id) tb

where tb.counts > 5;

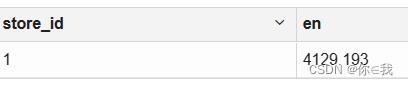

10.根据每位员工的最高收入找出明星商店

%hive

use shopping;

with base as

(

select store_id, sum(price) s

from transaction_details

group by store_id

)

select base.store_id,

base.s / store.employee_number en

from base

join ext_store_details store

on base.store_id = store.store_id

order by en desc

limit 1;Review分析

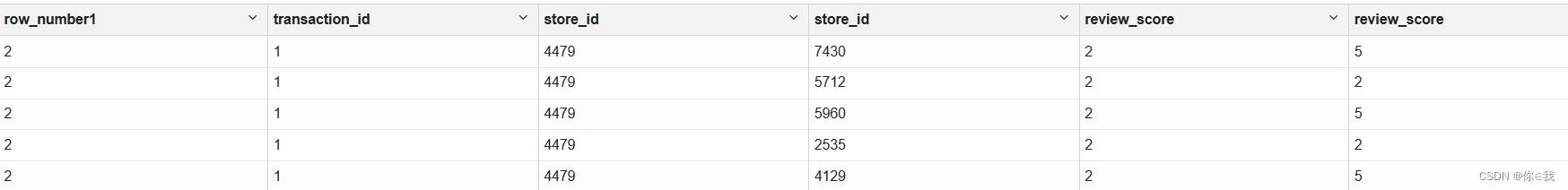

1.在ext_store_review中找出存在冲突的交易映射关系

%hive

use shopping;

with basetb as (

select row_number() over (partition by transaction_id) as row_number1, * from vm_store_review

)

select row_number1, a.transaction_id, a.store_id, b.store_id, a.review_score, b.review_score

from basetb a

join vm_store_review b on a.transaction_id = b.transaction_id

where row_number1 > 1;

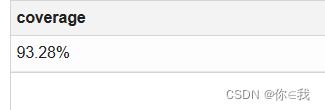

2.了解客户评价的覆盖率

%hive

use shopping;

with t1 as (

select count(1) c1

from ext_store_review

where review_score <> ''

),

t2 as (

select count(1) c2

from ext_store_review

where review_score = ''

)

select concat(cast((c1 - c2) / c1 * 100 as decimal(4, 2)), '%') Coverage

from t1 join t2;

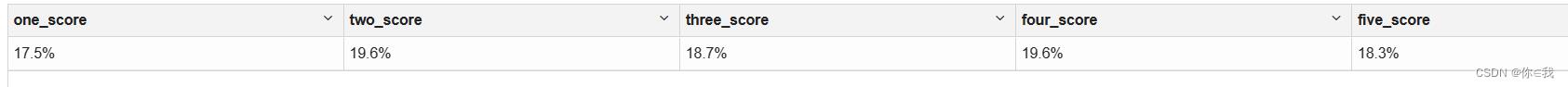

3.根据评分了解客户的分布情况

%hive

use shopping;

select concat(round(sum(case review_score when '1' then 1 else 0 end) / count(*) * 100, 2), '%') as one_score,

concat(round(sum(case review_score when '2' then 1 else 0 end) / count(*) * 100, 2), '%') as two_score,

concat(round(sum(case review_score when '3' then 1 else 0 end) / count(*) * 100, 2), '%') as three_score,

concat(round(sum(case review_score when '4' then 1 else 0 end) / count(*) * 100, 2), '%') as four_score,

concat(round(sum(case review_score when '5' then 1 else 0 end) / count(*) * 100, 2), '%') as five_score

from ext_store_review;

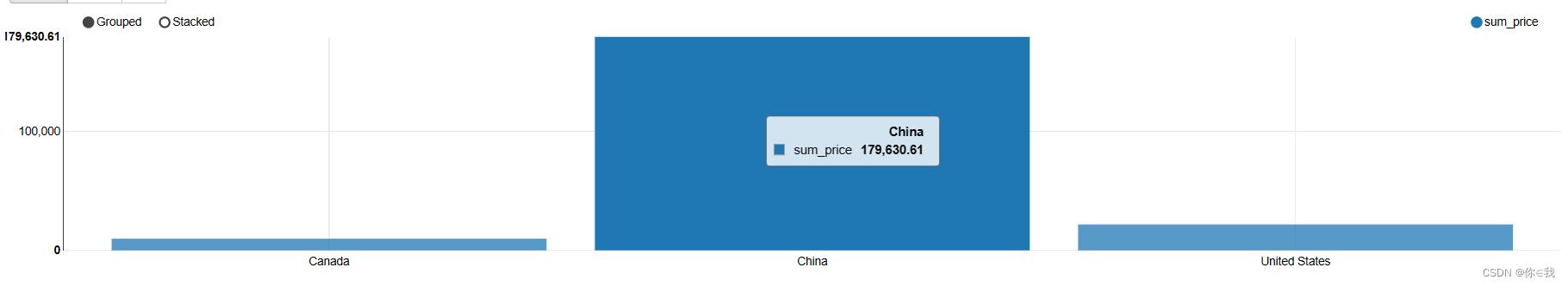

4.根据交易了解客户的分布情况

根据总金额

%hive

use shopping;

select country,

sum(price) sum_price

from transaction_details td

join ext_customer_details cd on td.customer_id = cd.customer_id

group by cd.country;

5.客户给出的最佳评价是否总是同一家门店

%hive

use shopping;

select store_id, customer_id, count(customer_id) counts

from transaction_details td

join ext_store_review esr

on esr.transaction_id = td.transaction_id

where esr.review_score = 5

group by td.store_id, td.customer_id;

以上是关于Hive实战 --- 电子商务消费行为分析的主要内容,如果未能解决你的问题,请参考以下文章