Operating an HDFS Server with the Java API

Posted by 幻世纪


Preface: this article was compiled by the editors of 小常识网 (cha138.com) and covers how to operate an HDFS server through the Java API.

1. Preparation

Development environment: Eclipse

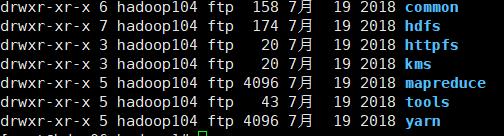

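Dependencies: the JAR files under share/hadoop/common and share/hadoop/hdfs in the hadoop-2.7.7.tar.gz distribution, together with the third-party JARs in their lib/ subdirectories. The @Test methods below also need JUnit on the classpath.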

2. Connecting to the HDFS server

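```java
// Imports used by the test methods in this article
import java.io.File;
import java.io.FileInputStream;
import java.io.FileOutputStream;
import java.io.IOException;
import java.net.URI;
import java.net.URISyntaxException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FSDataOutputStream;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IOUtils;
import org.junit.Test;

/**
 * Connect to the HDFS server and print the status of one file
 */
@Test
public void connectHDFS() {
    // Client-side configuration
    Configuration conf = new Configuration();
    // NameNode address and port
    conf.set("fs.defaultFS", "hdfs://192.168.124.26:9000");
    // try-with-resources closes the FileSystem even if an exception is thrown
    try (FileSystem fileSystem = FileSystem.get(conf)) {
        FileStatus fileStatus = fileSystem.getFileStatus(new Path("/upload/word.txt"));
        System.out.println(fileStatus.isFile());
        System.out.println(fileStatus.isDirectory());
        System.out.println(fileStatus.getPath()); // fully qualified hdfs:// path
        System.out.println(fileStatus.getLen());  // size in bytes
    } catch (IOException e) {
        e.printStackTrace();
    }
}
```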
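The value passed to fs.defaultFS is an ordinary URI: its scheme tells FileSystem.get which file system implementation to load (hdfs selects the HDFS client), and its host and port give the NameNode's address. The sketch below only parses that string with java.net.URI to show the parts; it does not contact a cluster, and the class name DefaultFsUri is made up for illustration.

```java
import java.net.URI;

// Illustration only: split an fs.defaultFS value into the parts the HDFS
// client cares about. No connection to a cluster is made.
class DefaultFsUri {
    static String describe(String defaultFs) {
        URI uri = URI.create(defaultFs);
        // scheme -> which FileSystem implementation; host:port -> NameNode address
        return "scheme=" + uri.getScheme() + ", host=" + uri.getHost() + ", port=" + uri.getPort();
    }

    public static void main(String[] args) {
        System.out.println(describe("hdfs://192.168.124.26:9000"));
        // prints: scheme=hdfs, host=192.168.124.26, port=9000
    }
}
```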

3. Renaming (in this setup, create, delete, and modify operations must connect as the root user)

```java
/**
 * Rename a file
 */
@Test
public void mv() {
    Configuration conf = new Configuration();
    // Renaming needs write permission, so connect to HDFS as the "root" user
    try (FileSystem fileSystem = FileSystem.get(new URI("hdfs://192.168.124.26:9000"), conf, "root")) {
        boolean renamed = fileSystem.rename(new Path("/upload/word.txt"), new Path("/upload/word2.txt"));
        // rename reports failure through its return value
        System.out.println(renamed ? "Rename succeeded" : "Rename failed");
    } catch (IOException | URISyntaxException | InterruptedException e) {
        e.printStackTrace();
    }
}
```

4. Creating a directory

```java
/**
 * Create a directory
 */
@Test
public void mkdir() {
    Configuration conf = new Configuration();
    try (FileSystem fileSystem = FileSystem.get(new URI("hdfs://192.168.124.26:9000"), conf, "root")) {
        // mkdirs also creates any missing parent directories
        fileSystem.mkdirs(new Path("/user"));
    } catch (IOException | URISyntaxException | InterruptedException e) {
        e.printStackTrace();
    }
}
```
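fileSystem.mkdirs follows the semantics of Unix `mkdir -p`: missing parent directories are created, and a directory that already exists is not an error. As a local-disk analogy that runs without a cluster, java.nio.file.Files.createDirectories behaves the same way. The helper name mkdirsLocal is hypothetical and nothing here touches HDFS.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

// Local-disk analogy only: mirrors the "mkdir -p" behaviour of Hadoop's
// FileSystem.mkdirs using java.nio, without contacting HDFS.
class MkdirsAnalogy {
    // Hypothetical helper: create dir plus any missing parents, then report success.
    static boolean mkdirsLocal(Path dir) {
        try {
            Files.createDirectories(dir); // no error if the directory already exists
            return Files.isDirectory(dir);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        Path nested = Paths.get(System.getProperty("java.io.tmpdir"),
                "mkdirs-demo-" + System.nanoTime(), "user", "root");
        System.out.println(mkdirsLocal(nested)); // parents are created on the first call
        System.out.println(mkdirsLocal(nested)); // calling again on an existing directory also succeeds
    }
}
```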

5. Uploading a file (copying through a byte[] buffer)

```java
/**
 * Upload a file by copying through a byte[] buffer
 */
@Test
public void upload() {
    Configuration conf = new Configuration();
    // Resources are closed in reverse order: in, then out, then fileSystem
    try (FileSystem fileSystem = FileSystem.get(new URI("hdfs://192.168.124.26:9000"), conf, "root");
         FSDataOutputStream out = fileSystem.create(new Path("/upload/words.txt"));
         FileInputStream in = new FileInputStream(new File("E:\\words.txt"))) {
        byte[] b = new byte[1024];
        int len;
        // read() returns -1 at end of file; write only the bytes actually read
        while ((len = in.read(b)) != -1) {
            out.write(b, 0, len);
        }
    } catch (IOException | URISyntaxException | InterruptedException e) {
        e.printStackTrace();
    }
}
```
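FSDataOutputStream (returned by fileSystem.create) and FSDataInputStream (returned by fileSystem.open) extend the standard java.io stream classes, so the byte[] loop can be written once as a helper over InputStream and OutputStream. The sketch below exercises such a helper against in-memory streams so it runs without a cluster; the names StreamCopy and copy are illustrative, not part of Hadoop.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;

// The byte[] copy loop from the upload example, factored into a reusable helper.
class StreamCopy {
    // Copies all bytes from in to out and returns how many were copied.
    // The caller stays responsible for closing both streams.
    static long copy(InputStream in, OutputStream out) throws IOException {
        byte[] buffer = new byte[1024];
        long total = 0;
        int len;
        while ((len = in.read(buffer)) != -1) { // -1 means end of stream
            out.write(buffer, 0, len);           // write only the bytes actually read
            total += len;
        }
        return total;
    }

    public static void main(String[] args) throws IOException {
        byte[] data = "hello hdfs".getBytes(StandardCharsets.UTF_8);
        // try-with-resources closes both streams even if copy() throws
        try (InputStream in = new ByteArrayInputStream(data);
             ByteArrayOutputStream out = new ByteArrayOutputStream()) {
            long n = copy(in, out);
            System.out.println(n + " bytes: " + out.toString("UTF-8")); // 10 bytes: hello hdfs
        }
    }
}
```

With a real cluster, the same helper could be called as `StreamCopy.copy(in, out)` inside the try block of upload().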

6. Uploading a file (using the IOUtils utility class)

```java
/**
 * Upload a file, using IOUtils to simplify the copy.
 * IOUtils here is org.apache.hadoop.io.IOUtils from hadoop-common, not commons-io.
 */
@Test
public void upload2() {
    Configuration conf = new Configuration();
    try (FileSystem fileSystem = FileSystem.get(new URI("hdfs://192.168.124.26:9000"), conf, "root")) {
        FSDataOutputStream out = fileSystem.create(new Path("/upload/words2.txt"));
        FileInputStream in = new FileInputStream(new File("E:/words.txt"));
        // Copies using a buffer of io.file.buffer.size bytes, then closes both streams
        IOUtils.copyBytes(in, out, conf);
    } catch (IOException | InterruptedException | URISyntaxException e) {
        e.printStackTrace();
    }
}
```
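IOUtils.copyBytes(in, out, conf) takes its buffer size from the io.file.buffer.size setting (4096 by default) and closes both streams when it finishes, which is why upload2 never calls close() on them. If your JDK is Java 9 or later (an assumption, since older Hadoop 2.x setups often run on Java 8), the standard library has a comparable call, InputStream.transferTo. The sketch below wraps it so both streams are also closed afterwards; the class name TransferToCopy is hypothetical.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;

// Stdlib counterpart of IOUtils.copyBytes(in, out, conf): copy everything,
// then close both streams. Requires Java 9+ for InputStream.transferTo.
class TransferToCopy {
    static long copyAndClose(InputStream in, OutputStream out) throws IOException {
        // try-with-resources closes out first, then in, even if transferTo throws
        try (InputStream src = in; OutputStream dst = out) {
            return src.transferTo(dst); // returns the number of bytes copied
        }
    }

    public static void main(String[] args) throws IOException {
        ByteArrayOutputStream sink = new ByteArrayOutputStream();
        long n = copyAndClose(new ByteArrayInputStream("words".getBytes(StandardCharsets.UTF_8)), sink);
        System.out.println(n + " bytes copied: " + sink.toString("UTF-8")); // 5 bytes copied: words
    }
}
```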

7. Downloading a file

```java
/**
 * Download a file
 */
@Test
public void download() {
    Configuration conf = new Configuration();
    // Resources are closed in reverse order: out, then in, then fileSystem
    try (FileSystem fileSystem = FileSystem.get(new URI("hdfs://192.168.124.26:9000"), conf);
         FSDataInputStream in = fileSystem.open(new Path("/upload/word2.txt"));
         FileOutputStream out = new FileOutputStream(new File("E:/word2.txt"))) {
        byte[] b = new byte[1024];
        int len;
        while ((len = in.read(b)) != -1) {
            out.write(b, 0, len);
        }
    } catch (IOException | URISyntaxException e) {
        e.printStackTrace();
    }
}
```

8. Implementing the lsr command (the older name for `hadoop fs -ls -R`): recursively listing directories and files

```java
/**
 * Implement the lsr command: recursively list directories and files
 */
@Test
public void lsr() {
    Configuration conf = new Configuration();
    try (FileSystem fileSystem = FileSystem.get(new URI("hdfs://192.168.124.26:9000"), conf)) {
        isDir(fileSystem, new Path("/"));
    } catch (IOException | URISyntaxException e) {
        e.printStackTrace();
    }
}

// Print every entry under path, descending into subdirectories (depth-first)
public void isDir(FileSystem fileSystem, Path path) {
    try {
        for (FileStatus status : fileSystem.listStatus(path)) {
            // Columns: path, length in bytes, owner, group
            String info = status.getPath() + "\t" + status.getLen() + "\t"
                    + status.getOwner() + "\t" + status.getGroup();
            // The FileStatus already says whether this is a directory; no extra server call needed
            if (status.isDirectory()) {
                System.out.println("Directory: " + info);
                isDir(fileSystem, status.getPath());
            } else {
                System.out.println("File: " + info);
            }
        }
    } catch (IOException e) {
        e.printStackTrace();
    }
}
```
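The recursion in isDir is a plain depth-first walk: list a directory, report each child, and call the same method again on every child that is itself a directory. The sketch below applies the same pattern to the local disk with java.io.File, where listFiles stands in for fileSystem.listStatus, so it runs without a cluster. It collects lines instead of printing them and sorts the children because listFiles does not guarantee any order; LocalLsr and walk are hypothetical names.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Local-disk version of the recursive lsr listing; it does not use HDFS.
class LocalLsr {
    // Appends one line per entry under dir, indenting two spaces per level.
    static void walk(File dir, String indent, List<String> out) {
        File[] children = dir.listFiles();
        if (children == null) {
            return; // not a directory, or it could not be read
        }
        Arrays.sort(children); // listFiles() returns entries in no particular order
        for (File child : children) {
            if (child.isDirectory()) {
                out.add(indent + "Directory: " + child.getName());
                walk(child, indent + "  ", out); // recurse into the subdirectory
            } else {
                out.add(indent + "File: " + child.getName() + "\t" + child.length());
            }
        }
    }

    public static void main(String[] args) {
        List<String> lines = new ArrayList<>();
        walk(new File(args.length > 0 ? args[0] : "."), "", lines);
        lines.forEach(System.out::println);
    }
}
```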
