Java crawler: a simple program for scraping a novel from web pages

Posted by 香吗


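Preface: This article was compiled by the editors of 小常识网 (cha138.com). It introduces a simple Java crawler that scrapes a novel chapter by chapter from web pages, and we hope it serves as a useful reference.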

```java
package PaChong;

import org.jsoup.Jsoup;
import org.jsoup.nodes.Document;
import org.jsoup.nodes.Element;
import org.jsoup.nodes.Node;
import org.jsoup.nodes.TextNode;

import java.io.BufferedOutputStream;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.net.URL;
import java.net.URLConnection;
import java.nio.charset.StandardCharsets;
import java.util.List;

public class Main {

    /**
     * Crawls the book's chapters, assuming the pages are UTF-8 encoded.
     * @param strURL base URL of the book's chapter directory
     */
    public static void is(String strURL) {
        is(strURL, "utf-8");
    }

    /**
     * @param strURL  base URL of the book's chapter directory
     * @param charset character encoding of the pages
     */
    public static void is(String strURL, String charset) {
        getContentFromUrl(strURL, charset);
    }

    /**
     * Downloads chapter pages start..end and appends each title and body to 1234.txt.
     * @param myUrl   base URL, e.g. "https://www.biquges.com/11_11744/"
     * @param charset character encoding of the pages
     */
    public static void getContentFromUrl(String myUrl, String charset) {
        int start = 7449572; // page ID of the first chapter
        int end = 7450351;   // page ID of the last chapter
        System.out.println("Start");
        // try-with-resources closes the output file even if an error occurs
        try (BufferedOutputStream bs = new BufferedOutputStream(new FileOutputStream("1234.txt", true))) {
            for (int q = start; q <= end; q++) {
                String urlAddress = myUrl + q + ".html";
                try {
                    URLConnection urlConnection = new URL(urlAddress).openConnection();
                    Document document;
                    // Close each page's stream as soon as it has been parsed
                    try (InputStream is = urlConnection.getInputStream()) {
                        document = Jsoup.parse(is, charset, urlAddress);
                    }
                    // The chapter title is in the first <h1>
                    Element bookname = document.getElementsByTag("h1").get(0);
                    bs.write(bookname.text().getBytes(StandardCharsets.UTF_8));
                    bs.write("\r\n".getBytes(StandardCharsets.UTF_8));
                    System.out.println(bookname.text());
                    // The chapter body is text inside <div id="content">, separated by <br> tags
                    Element content = document.getElementById("content");
                    List<Node> nodes = content.childNodes();
                    // As in the original, the last child node is skipped
                    // (presumably a trailing non-text element on this site)
                    for (int i = 0; i < nodes.size() - 1; i++) {
                        Node cc = nodes.get(i);
                        // Keep only text nodes; <br> and other elements are ignored
                        if (cc instanceof TextNode) {
                            // Drop the non-breaking spaces (&nbsp;) used for indentation
                            String line = ((TextNode) cc).text().replace("\u00A0", "").trim();
                            if (!line.isEmpty()) {
                                bs.write(line.getBytes(StandardCharsets.UTF_8));
                                bs.write("\r\n".getBytes(StandardCharsets.UTF_8));
                            }
                        }
                    }
                    bs.write("\r\n".getBytes(StandardCharsets.UTF_8));
                } catch (Exception e) {
                    // Skip chapters that fail to download or parse
                    System.out.println(urlAddress + " ====>>> request failed...");
                }
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
        System.out.println("End");
    }

    public static void main(String[] args) {
        // Usage example
        Main.is("https://www.biquges.com/11_11744/");
        // Another site the author tried:
        // Main.is("http://www.022003.com/9_9198/", "utf-8");
    }
}
```
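The node filter inside `getContentFromUrl` is the part most tied to the site's page layout. As a minimal stdlib-only sketch, assuming the chapter body consists of plain text runs separated by `<br>` tags and indented with `&nbsp;` (the layout the original filter appears to target), the same cleanup can be tried offline without a network connection or jsoup. `ChapterTextCleaner` and `cleanChapterHtml` are hypothetical names, and other HTML entities such as `&amp;` are deliberately not decoded here.

```java
import java.util.ArrayList;
import java.util.List;

public class ChapterTextCleaner {

    /**
     * Hypothetical helper: turns the inner HTML of a chapter's content div
     * (text runs separated by <br> tags, indented with &nbsp;) into plain lines.
     * Assumes no other markup appears inside the div; other entities are left as-is.
     */
    static List<String> cleanChapterHtml(String contentHtml) {
        List<String> lines = new ArrayList<>();
        // Split on <br>, <br/> and <br /> in any letter case
        for (String part : contentHtml.split("(?i)<br\\s*/?>")) {
            String line = part
                    .replace("&nbsp;", "")  // entity form of the indentation
                    .replace("\u00A0", "")  // already-decoded non-breaking spaces
                    .trim();
            // Skip the empty runs between consecutive <br> tags
            if (!line.isEmpty()) {
                lines.add(line);
            }
        }
        return lines;
    }

    public static void main(String[] args) {
        String html = "&nbsp;&nbsp;&nbsp;&nbsp;First paragraph.<br /><br />"
                + "&nbsp;&nbsp;&nbsp;&nbsp;Second paragraph.<br>";
        for (String line : cleanChapterHtml(html)) {
            System.out.println(line);
        }
    }
}
```

Running `main` prints `First paragraph.` and `Second paragraph.` on separate lines. Working on the raw HTML string keeps the sketch dependency-free; in the crawler itself, filtering jsoup `TextNode`s is more robust because jsoup has already decoded entities and split out the `<br>` elements.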

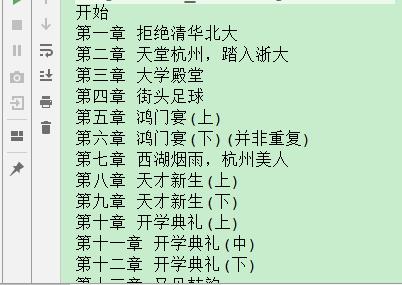

Result:
