数据规整:聚合合并和重塑

Posted 派大星先生c

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了数据规整:聚合合并和重塑相关的知识,希望对你有一定的参考价值。

目录

一、层次化索引

层次化索引(hierarchical indexing)是pandas的一项重要功能,它使你能在一个轴上拥有多个(两个以上)索引级别。抽象点说,它使你能以低维度形式处理高维度数据。我们先来看一个简单的例子:创建一个Series,并用一个由列表或数组组成的列表作为索引:

In [9]: data = pd.Series(np.random.randn(9),

...: index=[['a', 'a', 'a', 'b', 'b', 'c', 'c', 'd', 'd'],

...: [1, 2, 3, 1, 3, 1, 2, 2, 3]])

In [10]: data

Out[10]:

a 1 -0.204708

2 0.478943

3 -0.519439

b 1 -0.555730

3 1.965781

c 1 1.393406

2 0.092908

d 2 0.281746

3 0.769023

dtype: float64

看到的结果是经过美化的带有MultiIndex索引的Series的格式。索引之间的“间隔”表示“直接使用上面的标签”:

In [11]: data.index

Out[11]:

MultiIndex(levels=[['a', 'b', 'c', 'd'], [1, 2, 3]],

labels=[[0, 0, 0, 1, 1, 2, 2, 3, 3], [0, 1, 2, 0, 2, 0, 1, 1, 2]])

对于一个层次化索引的对象,可以使用所谓的部分索引,使用它选取数据子集的操作更简单:

In [12]: data['b']

Out[12]:

1 -0.555730

3 1.965781

dtype: float64

In [13]: data['b':'c']

Out[13]:

b 1 -0.555730

3 1.965781

c 1 1.393406

2 0.092908

dtype: float64

In [14]: data.loc[['b', 'd']]

Out[14]:

b 1 -0.555730

3 1.965781

d 2 0.281746

3 0.769023

dtype: float64

有时甚至还可以在“内层”中进行选取

In [15]: data.loc[:, 2]

Out[15]:

a 0.478943

c 0.092908

d 0.281746

dtype: float64

对于一个DataFrame,每条轴都可以有分层索引:

In [18]: frame = pd.DataFrame(np.arange(12).reshape((4, 3)),

....: index=[['a', 'a', 'b', 'b'], [1, 2, 1, 2]],

....: columns=[['Ohio', 'Ohio', 'Colorado'],

....: ['Green', 'Red', 'Green']])

In [19]: frame

Out[19]:

Ohio Colorado

Green Red Green

a 1 0 1 2

2 3 4 5

b 1 6 7 8

2 9 10 11

各层都可以有名字(可以是字符串,也可以是别的Python对象)。如果指定了名称,它们就会显示在控制台输出中:

In [20]: frame.index.names = ['key1', 'key2']

In [21]: frame.columns.names = ['state', 'color']

In [22]: frame

Out[22]:

state Ohio Colorado

color Green Red Green

key1 key2

a 1 0 1 2

2 3 4 5

b 1 6 7 8

2 9 10 11

重排与分级排序

有时,你需要重新调整某条轴上各级别的顺序,或根据指定级别上的值对数据进行排序。swaplevel接受两个级别编号或名称,并返回一个互换了级别的新对象(但数据不会发生变化):

In [24]: frame.swaplevel('key1', 'key2')

Out[24]:

state Ohio Colorado

color Green Red Green

key2 key1

1 a 0 1 2

2 a 3 4 5

1 b 6 7 8

2 b 9 10 11

而sort_index则根据单个级别中的值对数据进行排序。交换级别时,常常也会用到sort_index,这样最终结果就是按照指定顺序进行字母排序了:

In [25]: frame.sort_index(level=1)

Out[25]:

state Ohio Colorado

color Green Red Green

key1 key2

a 1 0 1 2

b 1 6 7 8

a 2 3 4 5

b 2 9 10 11

In [26]: frame.swaplevel(0, 1).sort_index(level=0)

Out[26]:

state Ohio Colorado

color Green Red Green

key2 key1

1 a 0 1 2

b 6 7 8

2 a 3 4 5

b 9 10 11

根据级别汇总统计

许多对DataFrame和Series的描述和汇总统计都有一个level选项,它用于指定在某条轴上求和的级别。再以上面那个DataFrame为例,我们可以根据行或列上的级别来进行求和:

In [27]: frame.sum(level='key2')

Out[27]:

state Ohio Colorado

color Green Red Green

key2

1 6 8 10

2 12 14 16

In [28]: frame.sum(level='color', axis=1)

Out[28]:

color Green Red

key1 key2

a 1 2 1

2 8 4

b 1 14 7

2 20 10

DataFrame的set_index函数会将其一个或多个列转换为行索引,并创建一个新的DataFrame:

In [29]: frame = pd.DataFrame('a': range(7), 'b': range(7, 0, -1),

....: 'c': ['one', 'one', 'one', 'two', 'two',

....: 'two', 'two'],

....: 'd': [0, 1, 2, 0, 1, 2, 3])

In [30]: frame

Out[30]:

a b c d

0 0 7 one 0

1 1 6 one 1

2 2 5 one 2

3 3 4 two 0

4 4 3 two 1

5 5 2 two 2

6 6 1 two 3

默认情况下,那些列会从DataFrame中移除,但也可以将其保留下来:

In [33]: frame.set_index(['c', 'd'], drop=False)

Out[33]:

a b c d

c d

one 0 0 7 one 0

1 1 6 one 1

2 2 5 one 2

two 0 3 4 two 0

1 4 3 two 1

2 5 2 two 2

3 6 1 two 3

reset_index的功能跟set_index刚好相反,层次化索引的级别会被转移到列里面:

In [34]: frame2.reset_index()

Out[34]:

c d a b

0 one 0 0 7

1 one 1 1 6

2 one 2 2 5

3 two 0 3 4

4 two 1 4 3

5 two 2 5 2

6 two 3 6 1

二、合并数据集

pandas对象中的数据可以通过一些方式进行合并:

- pandas.merge可根据一个或多个键将不同DataFrame中的行连接起来。SQL或其他关系型数据库的用户对此应该会比较熟悉,因为它实现的就是数据库的join操作。

- pandas.concat可以沿着一条轴将多个对象堆叠到一起。

- 实例方法combine_first可以将重复数据拼接在一起,用一个对象中的值填充另一个对象中的缺失值。

我将分别对它们进行讲解,并给出一些例子。本书剩余部分的示例中将经常用到它们。

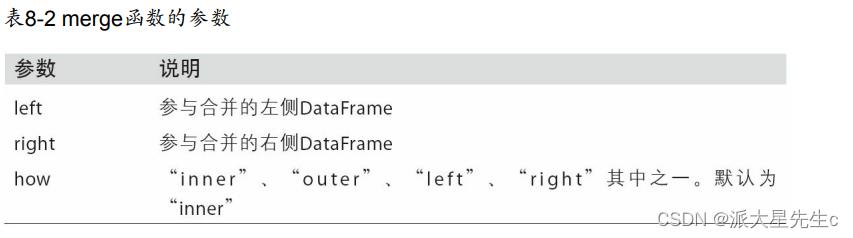

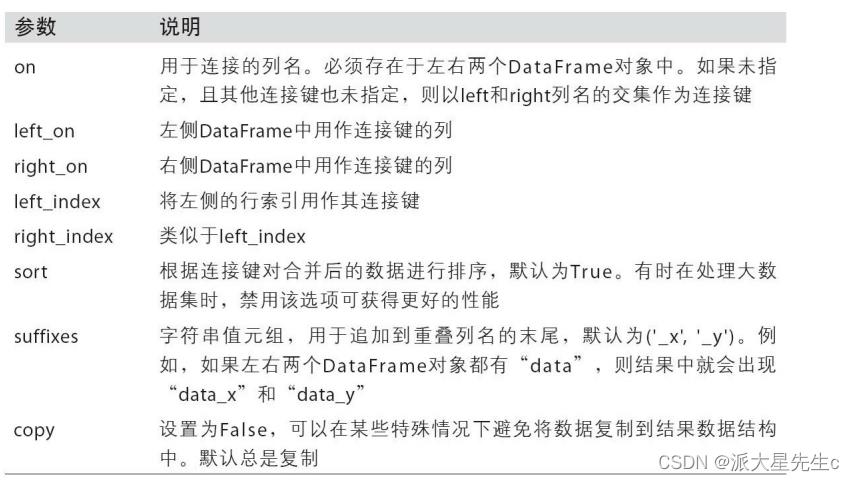

数据库风格的DataFrame合并

数据集的合并(merge)或连接(join)运算是通过一个或多个键将行连接起来的。这些运算是关系型数据库(基于SQL)的核心。pandas的merge函数是对数据应用这些算法的主要切入点。

以一个简单的例子开始:

In [35]: df1 = pd.DataFrame('key': ['b', 'b', 'a', 'c', 'a', 'a', 'b'],

....: 'data1': range(7))

In [36]: df2 = pd.DataFrame('key': ['a', 'b', 'd'],

....: 'data2': range(3))

In [37]: df1

Out[37]:

data1 key

0 0 b

1 1 b

2 2 a

3 3 c

4 4 a

5 5 a

6 6 b

In [38]: df2

Out[38]:

data2 key

0 0 a

1 1 b

2 2 d

这是一种多对一的合并。df1中的数据有多个被标记为a和b的行,而df2中key列的每个值则仅对应一行。对这些对象调用merge即可得到:

In [39]: pd.merge(df1, df2)

Out[39]:

data1 key data2

0 0 b 1

1 1 b 1

2 6 b 1

3 2 a 0

4 4 a 0

5 5 a 0

注意,我并没有指明要用哪个列进行连接。如果没有指定,merge就会将重叠列的列名当做键。不过,最好明确指定一下:

In [40]: pd.merge(df1, df2, on='key')

Out[40]:

data1 key data2

0 0 b 1

1 1 b 1

2 6 b 1

3 2 a 0

4 4 a 0

5 5 a 0

如果两个对象的列名不同,也可以分别进行指定:

In [41]: df3 = pd.DataFrame('lkey': ['b', 'b', 'a', 'c', 'a', 'a', 'b'],

....: 'data1': range(7))

In [42]: df4 = pd.DataFrame('rkey': ['a', 'b', 'd'],

....: 'data2': range(3))

In [43]: pd.merge(df3, df4, left_on='lkey', right_on='rkey')

Out[43]:

data1 lkey data2 rkey

0 0 b 1 b

1 1 b 1 b

2 6 b 1 b

3 2 a 0 a

4 4 a 0 a

5 5 a 0 a

可能你已经注意到了,结果里面c和d以及与之相关的数据消失了。默认情况下,merge做的是“内连接”;结果中的键是交集。其他方式还有"left"、“right"以及"outer”。外连接求取的是键的并集,组合了左连接和右连接的效果:

In [44]: pd.merge(df1, df2, how='outer')

Out[44]:

data1 key data2

0 0.0 b 1.0

1 1.0 b 1.0

2 6.0 b 1.0

3 2.0 a 0.0

4 4.0 a 0.0

5 5.0 a 0.0

6 3.0 c NaN

7 NaN d 2.0

多键合并

In [51]: left = pd.DataFrame('key1': ['foo', 'foo', 'bar'],

....: 'key2': ['one', 'two', 'one'],

....: 'lval': [1, 2, 3])

In [52]: right = pd.DataFrame('key1': ['foo', 'foo', 'bar', 'bar'],

....: 'key2': ['one', 'one', 'one', 'two'],

....: 'rval': [4, 5, 6, 7])

In [53]: pd.merge(left, right, on=['key1', 'key2'], how='outer')

Out[53]:

key1 key2 lval rval

0 foo one 1.0 4.0

1 foo one 1.0 5.0

2 foo two 2.0 NaN

3 bar one 3.0 6.0

4 bar two NaN 7.0

对于合并运算需要考虑的最后一个问题是对重复列名的处理。虽然你可以手工处理列名重叠的问题(查看前面介绍的重命名轴标签),但merge有一个更实用的suffixes选项,用于指定附加到左右两个DataFrame对象的重叠列名上的字符串:

In [54]: pd.merge(left, right, on='key1')

Out[54]:

key1 key2_x lval key2_y rval

0 foo one 1 one 4

1 foo one 1 one 5

2 foo two 2 one 4

3 foo two 2 one 5

4 bar one 3 one 6

5 bar one 3 two 7

In [55]: pd.merge(left, right, on='key1', suffixes=('_left', '_right'))

Out[55]:

key1 key2_left lval key2_right rval

0 foo one 1 one 4

1 foo one 1 one 5

2 foo two 2 one 4

3 foo two 2 one 5

4 bar one 3 one 6

5 bar one 3 two 7

索引上的合并

有时候,DataFrame中的连接键位于其索引中。在这种情况下,你可以传入left_index=True或right_index=True(或两个都传)以说明索引应该被用作连接键:

In [56]: left1 = pd.DataFrame('key': ['a', 'b', 'a', 'a', 'b', 'c'],

....: 'value': range(6))

In [57]: right1 = pd.DataFrame('group_val': [3.5, 7], index=['a', 'b'])

In [58]: left1

Out[58]:

key value

0 a 0

1 b 1

2 a 2

3 a 3

4 b 4

5 c 5

In [59]: right1

Out[59]:

group_val

a 3.5

b 7.0

In [60]: pd.merge(left1, right1, left_on='key', right_index=True)

Out[60]:

key value group_val

0 a 0 3.5

数据规整化:清理转换合并重塑

写在前面的话:

实例中的所有数据都是在GitHub上下载的,打包下载即可。

地址是:http://github.com/pydata/pydata-book

还有一定要说明的:

我使用的是Python2.7,书中的代码有一些有错误,我使用自己的2.7版本调通。

# coding: utf-8

from pandas import Series, DataFrame

import pandas as pd

import numpy as np

df1 = DataFrame('key':['b','b','a','c','a','a','b'],'data1':range(7))

df2 = DataFrame('key':['a','b','d'],'data2':range(3))

df1

df2

pd.merge(df1,df2)

pd.merge(df1,df2,on='key')

df3 = DataFrame('lkey':['b','b','a','c','a','a','b'],'data1':range(7))

df4 = DataFrame('rkey':['a','b','d'],'data2':range(3))

pd.merge(df3,df4,left_on='lkey',right_on='rkey')

pd.merge(df1,df2,how='outer')

df5 = DataFrame('key':['b','b','a','c','a','b'],'data1':range(6))

df6 = DataFrame('key':['a','b','a','b','d'],'data2':range(5))

df5

df6

pd.merge(df5,df6,on='key',how='left')

pd.merge(df5,df6,how='inner')

left = DataFrame('key1':['foo','foo','bar'],'key2':['one','two','one'],'lval':[1,2,3])

right = DataFrame('key1':['foo','foo','bar','bar'],'key2':['one','one','one','two'],'lval':[4,5,6,7])

pd.merge(left,right,on=['key1','key2'],how='outer')

pd.merge(left,right,on='key1')

pd.merge(left,right,on='key1',suffixes=('_left','_right'))

left1 = DataFrame('key':['a','b','a','a','b','c'],'value':range(6))

right1 = DataFrame('group_val':[3.5,7],index=['a','b'])

left1

right1

pd.merge(left1,right1,left_on='key',right_index=True)

pd.merge(left1,right1,left_on='key',right_index=True,how='outer')

lefth = DataFrame('key1':['Ohio','Ohio','Ohio','Nevada','Nevada'],'key2':[2000,2001,2002,2001,2002],'data':np.arange(5.))

righth = DataFrame(np.arange(12).reshape((6,2)),index=[['Nevada','Nevada','Ohio','Ohio','Ohio','Ohio'],[2001,2000,2000,2000,2001,2002]],columns=['event1','event2'])

lefth

righth

pd.merge(lefth,righth,left_on=['key1','key2'],right_index=True)

pd.merge(lefth,righth,left_on=['key1','key2'],right_index=True,how='outer')

left2 = DataFrame([[1.,2.],[3.,4.],[5.,6.]],index=['a','c','e'],columns=['Ohio','Nevada'])

right2 = DataFrame([[7.,8.],[9.,10.],[11.,12.],[13.,14.]],index=['b','c','d','e'],columns=['Missouri','Alabama'])

left2

right2

pd.merge(left2,right2,how='outer',left_index=True,right_index=True)

left2.join(right2,how='outer')

left1.join(right1,on='key')

another = DataFrame([[7.,8.],[9.,10.],[11.,12.],[16.,17.]],index=['a','c','e','f'],columns=['New York','Oregon'])

left2.join([right2,another])

left2.join([right2,another],how='outer')

arr = np.arange(12).reshape((3,4))

arr

np.concatenate([arr,arr],axis=1)

s1 = Series([0,1],index=['a','b'])

s2 = Series([2,3,4],index=['c','d','e'])

s3 = Series([5,6],index=['f','g'])

pd.concat([s1,s2,s3])

pd.concat([s1,s2,s3],axis=1)

s4 = pd.concat([s1 * 5,s3])

pd.concat([s1,s4],axis=1)

pd.concat([s1,s4],axis=1,join='inner')

pd.concat([s1,s4],axis=1,join_axes=[['a','c','b','e']])

result = pd.concat([s1,s1,s3],keys=['one','two','three'])

result

result.unstack()

pd.concat([s1,s2,s3],axis=1,keys=['one','two','three'])

df1 = DataFrame(np.arange(6).reshape(3,2),index=['a','b','c'],columns=['one','two'])

df2 = DataFrame(5 + np.arange(4).reshape(2,2),index=['a','c'],columns=['three','four'])

pd.concat([df1,df2],axis=1,keys=['level1','level2'])

pd.concat('level1':df1,'level2':df2,axis=1)

pd.concat([df1,df2],axis=1,keys=['level1','level2'],names=['upper','lower'])

df1 = DataFrame(np.arange(12).reshape(3,4),columns=['a','b','c','d'])

df2 = DataFrame(np.arange(6).reshape(2,3),columns=['b','d','a'])

df1

df2

pd.concat([df1,df2],ignore_index=True)

a = Series([np.nan,2.5,np.nan,3.5,4.5,np.nan],index=['f','e','d','c','b','a'])

b = Series(np.arange(len(a),dtype=np.float64),index=['f','e','d','c','b','a'])

b[-1] = np.nan

a

b

np.where(pd.isnull(a),b,a)

b[:-2].combine_first(a[2:])

df1 = DataFrame('a':[1,np.nan,5,np.nan],

'b':[np.nan,2,np.nan,6],

'c':range(2,18,4))

df2 = DataFrame('a':[5,4,np.nan,3,7],

'b':[np.nan,3,4,6,8])

df1.combine_first(df2)

data = DataFrame(np.arange(6).reshape((2,3)),index=pd.Index(['Ohio','Colorado'],name='state'),columns=pd.Index(['one','two','three'],name='number'))

data

result = data.stack()

result

result.unstack()

result.unstack(0)

result.unstack('state')

s1 = Series([0,1,2,3],index=['a','b','c','d'])

s2 = Series([4,5,6],index=['c','d','e'])

data2 = pd.concat([s1,s2],keys=['one','two'])

data2.unstack()

data2.unstack().stack(dropna=False)

df = DataFrame('left':result,'right':result + 5,columns=pd.Index(['left','right'],name='side'))

df

df.unstack('state')

df.unstack('state').stack('side')

ldata = DataFrame('date':['03-31','03-31','03-31','06-30','06-30','06-30'],

'item':['real','infl','unemp','real','infl','unemp'],'value':['2710.','000.','5.8','2778.','2.34','5.1'])

ldata

pivoted = ldata.pivot('date','item','value')

pivoted.head()

ldata['value2'] = np.random.randn(len(ldata))

ldata[:10]

pivoted = ldata.pivot('date','item')

pivoted[:5]

pivoted['value'][:5]

unstacked = ldata.set_index(['date','item']).unstack('item')

unstacked[:7]

data = DataFrame('k1':['one'] * 3 + ['two'] *4,'k2':[1,1,2,3,3,4,4])

data

data.duplicated()

data.drop_duplicates()

data['v1'] = range(7)

data.drop_duplicates(['k1'])

data.drop_duplicates(['k1','k2'],take_last=True)

data = DataFrame('food':['bacon','pulled pork','bacon','Pastrami','corned beef','Bacon','pastrami','honey ham','nova lox'],

'ounces':[4,3,12,6,7.5,8,3,5,6])

data

meat_to_animal = 'bacon':'pig',

'pulled pork':'pig',

'pastrami':'cow',

'corned beef':'cow',

'honey ham':'pig',

'nova lox':'salmon'

data['animal'] = data['food'].map(str.lower).map(meat_to_animal)

data

data['food'].map(lambda x: meat_to_animal[x.lower()])

data = Series([1.,-999.,2.,-999.,-1000.,3.])

data

data.replace(-999,np.nan)

data.replace([-999,-1000],[np.nan,666])

data.replace(-999:np.nan, -1000:0)

data = DataFrame(np.arange(12).reshape((3,4)),index=['Ohio','Colorado','New York',],columns=['one','two','three','four'])

data.index = data.index.map(str.upper)

data

data.rename(index=str.title,columns=str.upper)

data.rename(index='OHIO':'INDIANA',columns='three':'peekaboo')

ages = [20,22,25,27,21,23,37,31,61,45,51,41,32]

bins = [18,25,35,60,100]

cats = pd.cut(ages,bins)

cats

cats.labels

cats.levels

pd.value_counts(cats)

pd.cut(ages,[18,26,36,61,100],right=False)

group_names = ['Youth','YouthAdult','MiddleAge','Senior']

pd.cut(ages,bins,labels=group_names)

data = np.random.rand(20)

pd.cut(data,4,precision=2)

data = np.random.rand(1000)

cats = pd.qcut(data,4)

cats

pd.value_counts(cats)

pd.qcut(data,[0,0.1,0.5,0.9,1.])

pd.value_counts(cats)

np.random.seed(12345)

data = DataFrame(np.random.randn(1000,4))

data.describe()

col = data[3]

col[np.abs(col)>3]

data[(np.abs(data)>3).any(1)]

data[np.abs(data)>3] = np.sign(data) * 3

data.describe()

df = DataFrame(np.arange(5*4).reshape((5,4)))

sampler = np.random.permutation(5)

sampler

df

df.take(sampler)

df.take(np.random.permutation(len(df))[:3])

bag = np.array([5,7,-1,6,4])

sampler = np.random.randint(0,len(bag),size=10)

sampler

draws = bag.take(sampler)

draws

df = DataFrame('key':['b','b','a','c','a','b'],'data1':range(6))

pd.get_dummies(df['key'])

dummies = pd.get_dummies(df['key'],prefix='key')

df_with_dummy = df[['data1']].join(dummies)

df_with_dummy

mnames = ['movie_id','title','genres']

movies = pd.read_table('D:\\Source Code\\pydata-book-master\\ch02\\movielens\\movies.dat',sep='::',header=None,names=mnames)

movies[:10]

genre_iter = (set(x.split('|')) for x in movies.genres)

genres = sorted(set.union(*genre_iter))

dummies = DataFrame(np.zeros((len(movies),len(genres))),columns=genres)

for i,gen in enumerate(movies.genres):

dummies.ix[i,gen.split('|')] = 1

movies_windic = movies.join(dummies.add_prefix('Genre_'))

movies_windic.ix[0]

values = np.random.rand(10)

values

bins = [0,0.2,0.4,0.6,0.8,1]

pd.get_dummies(pd.cut(values,bins))

val = 'a,b, guido'

val.split(',')

pieces = [x.strip() for x in val.split(',')]

pieces

first,second,thrid = pieces

first + '::' + second + '::' + thrid

'::'.join(pieces)

'guido' in val

val.index(',')

val.find(':')

val.count(',')

val.replace(',','::')

val.replace(',', '')

import re

text = "foo bar\\t baz \\tqux"

re.split('\\s+',text)

regex = re.compile('\\s+')

regex.split(text)

regex.findall(text)

text = """Dave dave@aa.com

Steve steve@aa.com

Rob rob@gmail.com

Ryan ryan@yahoo.com

"""

pattern = r'[A-Z0-9._%+-]+@[A-Z0-9.-]+\\.[A-Z]2,4'

regex = re.compile(pattern,flags=re.IGNORECASE)

regex.findall(text)

m = regex.search(text)

m

text[m.start():m.end()]

print regex.match(text)

print regex.sub('REDACTED',text)

pattern = r'([A-Z0-9._%+-]+)@([A-Z0-9.-]+)\\.([A-Z]2,4)'

regex = re.compile(pattern,flags=re.IGNORECASE)

m = regex.match('wes@shjdjs.net')

m.groups()

regex.findall(text)

print regex.sub(r'Username:\\1,domain:\\2,suffix:\\3',text)

data = 'Dave':'dave@aa.com','Steve':'steve@aa.com','Rob':'rob@gmail.com','Ryan':'ryan@yahoo.com','Wes':np.nan

data = Series(data)

data

data.isnull()

data.str.contains('gmail')

pattern

data.str.findall(pattern,flags=re.IGNORECASE)

matches = data.str.match(pattern,flags=re.IGNORECASE)

matches

matches.str.get(1)

matches.str[0]

data.str[:5]

import json

db = json.load(open('D:\\Source Code\\pydata-book-master\\ch07\\\\foods-2011-10-03.json'))

len(db)

db[0].keys()

db[0]['nutrients'][0]

nutrients = DataFrame(db[0]['nutrients'])

nutrients[:7]

info_keys = ['description','group','id','manufacturer']

info = DataFrame(db,columns=info_keys)

info[:5]

info

pd.value_counts(info.group)[:10]

nutrients = []

for rec in db:

fnuts = DataFrame(rec['nutrients'])

fnuts['id'] = rec['id']

nutrients.append(fnuts)

nutrients = pd.concat(nutrients,ignore_index=True)

nutrients

nutrients.duplicated().sum()

nutrients = nutrients.drop_duplicates()

col_mapping = 'description':'food','group':'fgroup'

info = info.rename(columns=col_mapping,copy=False)

info

col_mapping = 'description':'nutrient','group':'nutgroup'

nutrients = nutrients.rename(columns=col_mapping,copy=False)

nutrients

ndata = pd.merge(nutrients,info,on='id',how='outer')

ndata

ndata.ix[30000]

result = ndata.groupby(['nutrient','fgroup'])['value'].quantile(0.5)

result['Zinc, Zn'].order().plot(kind='barh')

by_nutrient = ndata.groupby(['nutgroup','nutrient'])

get_max = lambda x : x.xs(x.value.idxmax())

get_min = lambda x : x.xs(x.value.idxmin())

max_foods = by_nutrient.apply(get_max)[['value','food']]

max_foods = max_foods.food.str[:50]

max_foods.ix['Amino Acids']

以上是关于数据规整:聚合合并和重塑的主要内容,如果未能解决你的问题,请参考以下文章