Question for the experts: how much current flows when the green and black wires in a PC's ATX power supply are shorted together?


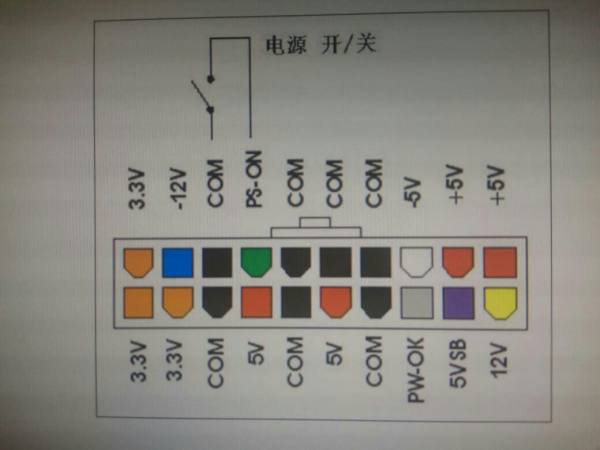


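Practically none. Shorting the green and black wires of an ATX power supply draws only a tiny signal current.

That is how an ATX supply is designed: the green wire (PS_ON#) is an active-low control signal, and pulling it down to a black wire (ground) tells the supply to switch on.

The supply will start even with no load attached. With no load, the output rails also deliver essentially no current.

The jumper itself carries only the small current that flows through the supply's internal pull-up resistor on the green wire, nowhere near the current of the main output rails. That is why shorting these two wires (the well-known "paperclip test") is a safe way to switch a supply on for testing.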
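Strictly speaking, a very small signal current does flow through the jumper. Its size is set by Ohm's law across the supply's internal pull-up resistor on the green (PS_ON#) wire. As a worked estimate, assuming a pull-up to roughly 5 V and a purely hypothetical 10 kΩ resistor (real values differ between supplies):

$$
I = \frac{V_{\text{pull-up}}}{R_{\text{pull-up}}} \approx \frac{5\,\text{V}}{10\,\text{k}\Omega} = 0.5\,\text{mA}
$$

Whatever the exact resistor value, this is a signal-level current, many orders of magnitude below what the output rails deliver under load.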

Cropped video in iOS shows a weird green line around it

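【Original English title】: Crop video in iOS see weird green line around video

【Posted】: 2015-04-08 03:53:34

【Question】: Hi everyone, I'm cropping a video taken with the iPhone camera and then playing the cropped result, as shown below. However, when I do this I see a weird green line along the bottom and right edges of the video. I have no idea why this happens or how to fix it. Here is how I crop: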

```objc
- (UIImageOrientation)getVideoOrientationFromAsset:(AVAsset *)asset
{
    AVAssetTrack *videoTrack = [[asset tracksWithMediaType:AVMediaTypeVideo] objectAtIndex:0];
    CGSize size = [videoTrack naturalSize];
    CGAffineTransform txf = [videoTrack preferredTransform];

    if (size.width == txf.tx && size.height == txf.ty)
        return UIImageOrientationLeft;   // UIInterfaceOrientationLandscapeLeft
    else if (txf.tx == 0 && txf.ty == 0)
        return UIImageOrientationRight;  // UIInterfaceOrientationLandscapeRight
    else if (txf.tx == 0 && txf.ty == size.width)
        return UIImageOrientationDown;   // UIInterfaceOrientationPortraitUpsideDown
    else
        return UIImageOrientationUp;     // UIInterfaceOrientationPortrait
}

- (AVAssetExportSession *)applyCropToVideoWithAsset:(AVAsset *)asset
                                             AtRect:(CGRect)cropRect
                                        OnTimeRange:(CMTimeRange)cropTimeRange
                                        ExportToUrl:(NSURL *)outputUrl
                              ExistingExportSession:(AVAssetExportSession *)exporter
                                     WithCompletion:(void (^)(BOOL success, NSError *error, NSURL *videoUrl))completion
{
    // Get the video track of our asset
    AVAssetTrack *clipVideoTrack = [[asset tracksWithMediaType:AVMediaTypeVideo] objectAtIndex:0];

    // Create a video composition and preset some settings
    AVMutableVideoComposition *videoComposition = [AVMutableVideoComposition videoComposition];
    videoComposition.frameDuration = CMTimeMake(1, 30);

    CGFloat cropOffX = cropRect.origin.x;
    CGFloat cropOffY = cropRect.origin.y;
    CGFloat cropWidth = cropRect.size.width;
    CGFloat cropHeight = cropRect.size.height;

    // The output frame is exactly the size of the crop rectangle
    videoComposition.renderSize = CGSizeMake(cropWidth, cropHeight);

    // Create a video instruction covering the requested time range
    AVMutableVideoCompositionInstruction *instruction = [AVMutableVideoCompositionInstruction videoCompositionInstruction];
    instruction.timeRange = cropTimeRange;

    AVMutableVideoCompositionLayerInstruction *transformer =
        [AVMutableVideoCompositionLayerInstruction videoCompositionLayerInstructionWithAssetTrack:clipVideoTrack];

    UIImageOrientation videoOrientation = [self getVideoOrientationFromAsset:asset];

    CGAffineTransform t1 = CGAffineTransformIdentity;
    CGAffineTransform t2 = CGAffineTransformIdentity;

    // Shift the crop origin to (0, 0), then rotate into display orientation
    switch (videoOrientation) {
        case UIImageOrientationUp:
            t1 = CGAffineTransformMakeTranslation(clipVideoTrack.naturalSize.height - cropOffX, 0 - cropOffY);
            t2 = CGAffineTransformRotate(t1, M_PI_2);
            break;
        case UIImageOrientationDown:
            // not fixed: width is the real height when upside down
            t1 = CGAffineTransformMakeTranslation(0 - cropOffX, clipVideoTrack.naturalSize.width - cropOffY);
            t2 = CGAffineTransformRotate(t1, -M_PI_2);
            break;
        case UIImageOrientationRight:
            t1 = CGAffineTransformMakeTranslation(0 - cropOffX, 0 - cropOffY);
            t2 = CGAffineTransformRotate(t1, 0);
            break;
        case UIImageOrientationLeft:
            t1 = CGAffineTransformMakeTranslation(clipVideoTrack.naturalSize.width - cropOffX,
                                                  clipVideoTrack.naturalSize.height - cropOffY);
            t2 = CGAffineTransformRotate(t1, M_PI);
            break;
        default:
            NSLog(@"no supported orientation has been found in this video");
            break;
    }

    CGAffineTransform finalTransform = t2;
    [transformer setTransform:finalTransform atTime:kCMTimeZero];

    // Add the transformer layer instruction, then add the instruction to the video composition
    instruction.layerInstructions = @[transformer];
    videoComposition.instructions = @[instruction];

    // Remove any previous video at that path
    [[NSFileManager defaultManager] removeItemAtURL:outputUrl error:nil];

    if (!exporter) {
        exporter = [[AVAssetExportSession alloc] initWithAsset:asset presetName:AVAssetExportPresetHighestQuality];
    }

    // Assign all instructions for the video processing (here, the transformation that crops the video)
    exporter.videoComposition = videoComposition;
    exporter.outputFileType = AVFileTypeQuickTimeMovie;
    if (outputUrl) {
        exporter.outputURL = outputUrl;
    }

    [exporter exportAsynchronouslyWithCompletionHandler:^{
        switch ([exporter status]) {
            case AVAssetExportSessionStatusFailed:
                NSLog(@"crop Export failed: %@", [[exporter error] localizedDescription]);
                if (completion) {
                    dispatch_async(dispatch_get_main_queue(), ^{
                        completion(NO, [exporter error], nil);
                    });
                }
                return;
            case AVAssetExportSessionStatusCancelled:
                NSLog(@"crop Export canceled");
                if (completion) {
                    dispatch_async(dispatch_get_main_queue(), ^{
                        completion(NO, nil, nil);
                    });
                }
                return;
            default:
                break;
        }
        if (completion) {
            dispatch_async(dispatch_get_main_queue(), ^{
                completion(YES, nil, outputUrl);
            });
        }
    }];

    return exporter;
}
```
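`getVideoOrientationFromAsset:` infers orientation by comparing the transform's translation (`tx`, `ty`) against `naturalSize`. A common alternative is to read the rotation part (`a`, `b`, `c`, `d`) of `preferredTransform` directly. The sketch below is plain C, which compiles unchanged inside an Objective-C file. `AffineTransform` is a hypothetical struct that mirrors `CGAffineTransform`'s fields so the sketch builds without Core Graphics, and `rotationDegreesFromTransform` is an illustrative helper, not an Apple API.

```c
#include <stdbool.h>

/* Hypothetical stand-in mirroring CGAffineTransform's fields
   (a, b, c, d, tx, ty) so this sketch builds without Core Graphics. */
typedef struct {
    double a, b, c, d, tx, ty;
} AffineTransform;

/* Tolerant comparison, since transform entries may not be exactly 0 or 1. */
static bool nearlyEqual(double x, double y) {
    double diff = x - y;
    return diff < 1e-6 && diff > -1e-6;
}

/* Returns the rotation (0, 90, 180 or 270 degrees) encoded in the 2x2
   part of the transform, or -1 if it is mirrored or not a multiple of
   90 degrees. A rotation by theta has a = cos(theta), b = sin(theta),
   c = -sin(theta), d = cos(theta); the translation is ignored. */
int rotationDegreesFromTransform(AffineTransform t) {
    if (!nearlyEqual(t.c, -t.b) || !nearlyEqual(t.d, t.a)) {
        return -1; /* mirrored or not a pure rotation */
    }
    if (nearlyEqual(t.a, 1.0) && nearlyEqual(t.b, 0.0))  return 0;
    if (nearlyEqual(t.a, 0.0) && nearlyEqual(t.b, 1.0))  return 90;
    if (nearlyEqual(t.a, -1.0) && nearlyEqual(t.b, 0.0)) return 180;
    if (nearlyEqual(t.a, 0.0) && nearlyEqual(t.b, -1.0)) return 270;
    return -1; /* not a multiple of 90 degrees */
}
```

A portrait iPhone recording commonly carries a transform of the form `(0, 1, -1, 0, tx, 0)`, for which `rotationDegreesFromTransform((AffineTransform){0, 1, -1, 0, 1080, 0})` returns 90. In a real app you would read the same six fields from `videoTrack.preferredTransform`. Because only the rotation entries are checked, the result does not depend on how the translation happens to relate to `naturalSize`.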

Then I call the crop and play the result like this:

```objc
AVAsset *assest = [AVAsset assetWithURL:self.videoURL];

NSString *documentsPath = [NSSearchPathForDirectoriesInDomains(NSDocumentDirectory, NSUserDomainMask, YES) objectAtIndex:0];
NSString *exportPath = [documentsPath stringByAppendingPathComponent:@"croppedvideo.mp4"];
NSURL *exportUrl = [NSURL fileURLWithPath:exportPath];

AVAssetExportSession *exporter = [AVAssetExportSession exportSessionWithAsset:assest presetName:AVAssetExportPresetLowQuality];

[self applyCropToVideoWithAsset:assest
                         AtRect:CGRectMake(self.view.frame.size.width / 2 - 57.5 - 5, self.view.frame.size.height / 2 - 140, 115, 85)
                    OnTimeRange:CMTimeRangeMake(kCMTimeZero, assest.duration) // whole asset; duration.value alone is not a count of seconds
                    ExportToUrl:exportUrl
          ExistingExportSession:exporter
                 WithCompletion:^(BOOL success, NSError *error, NSURL *videoUrl) {
    if (!success) {
        return; // videoUrl is nil when the export failed or was cancelled
    }
    AVPlayer *player = [AVPlayer playerWithURL:videoUrl];
    AVPlayerLayer *layer = [AVPlayerLayer playerLayerWithPlayer:player];
    layer.frame = CGRectMake(125, 365, 115, 115);

    UIView *view = [[UIView alloc] initWithFrame:CGRectMake(0, 0, 400, 400)];
    [view.layer addSublayer:layer];
    [self.view addSubview:view];
    [player play];
}];
```

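If you want to test this, just copy and paste the code and set up a video, and you'll see what I'm talking about.

Thanks for taking the time to help me; I know this is quite a lot of code.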
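A note on the time range passed to the crop method: AVFoundation times are `CMTime` values, a rational number `value / timescale`, so `duration.value` on its own is not a count of seconds. The range for the whole asset is simply `CMTimeRangeMake(kCMTimeZero, asset.duration)`, and `CMTimeGetSeconds` does the division when seconds are actually needed. The plain-C sketch below mirrors that representation with a hypothetical `RationalTime` struct (not the CoreMedia type) to show the arithmetic.

```c
#include <stdint.h>

/* Hypothetical stand-in for CMTime's rational representation:
   the time in seconds is value / timescale. */
typedef struct {
    int64_t value;
    int32_t timescale;
} RationalTime;

/* Converts a rational time to seconds, as CMTimeGetSeconds does for CMTime. */
double rationalTimeSeconds(RationalTime t) {
    return (double)t.value / (double)t.timescale;
}
```

For example, a 30-second clip stored with a timescale of 600 has a value of 18000, and `rationalTimeSeconds((RationalTime){18000, 600})` gives 30. Passing 18000 where seconds are expected would instead request a five-hour range.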

【Question comments】:

【Answer 1】: Either the iOS encoder or the video format itself has a width requirement. Try making the width even, or divisible by 4.

I'm not aware of a similar requirement for the height, but it's worth trying as well.

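I've never found this documented, but requiring evenness makes some sense: H.264 uses 4:2:0 YUV color, in which the U and V planes are half the size (in both dimensions) of the Y plane, which has the video's full dimensions. If those dimensions are odd, the U and V dimensions are not whole numbers.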
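To make the 4:2:0 arithmetic concrete, here is a sketch in plain C (which also compiles inside an Objective-C file). `Plane420` and `planes420` are hypothetical names for illustration. Rounding the chroma size up is one convention, used here so that an odd dimension visibly leaves a half-covered last column or row of chroma samples.

```c
#include <stdbool.h>

/* In 4:2:0 subsampling each chroma (U, V) plane is half the luma (Y)
   plane in both dimensions, so one chroma sample covers a 2x2 pixel block. */
typedef struct {
    int lumaWidth, lumaHeight;
    int chromaWidth, chromaHeight; /* rounded up to whole samples */
    bool evenlyDivisible;          /* true when no partial 2x2 block remains */
} Plane420;

Plane420 planes420(int width, int height) {
    Plane420 p;
    p.lumaWidth = width;
    p.lumaHeight = height;
    p.chromaWidth = (width + 1) / 2;   /* ceil(width / 2) */
    p.chromaHeight = (height + 1) / 2; /* ceil(height / 2) */
    p.evenlyDivisible = (width % 2 == 0) && (height % 2 == 0);
    return p;
}
```

For the question's 115 x 85 crop, `planes420(115, 85)` gives 58 x 43 chroma samples with `evenlyDivisible` false, because the last chroma column and row each cover only one pixel instead of two. A 116 x 86 render size yields the same 58 x 43 chroma grid with every sample fully used.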

P.S. In these cases, the telltale sign is the mysterious green. I think it corresponds to YUV 0,0,0.
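The "YUV 0,0,0 is green" intuition can be checked numerically. U and V are stored offset by 128, so a zero-filled chroma sample is not "no color" but strongly negative chroma. The sketch below uses the full-range BT.601 (JFIF) conversion, and `yuvToRgb` is an illustrative helper rather than any iOS API. Video-range coefficients give a similar green.

```c
/* 8-bit RGB triple. */
typedef struct { int r, g, b; } RGB8;

/* Clamp to the 8-bit range, then round to the nearest integer. */
static int clampToByte(double x) {
    if (x < 0.0) return 0;
    if (x > 255.0) return 255;
    return (int)(x + 0.5);
}

/* Full-range BT.601 (JFIF) YCbCr -> RGB. U and V are stored offset by 128,
   so "no color" is U = V = 128, not 0. */
RGB8 yuvToRgb(int y, int u, int v) {
    double cb = u - 128.0;
    double cr = v - 128.0;
    RGB8 out;
    out.r = clampToByte(y + 1.402 * cr);
    out.g = clampToByte(y - 0.344136 * cb - 0.714136 * cr);
    out.b = clampToByte(y + 1.772 * cb);
    return out;
}
```

`yuvToRgb(0, 0, 0)` comes out as (0, 135, 0), a medium green, because the red and blue terms are driven negative and clamped to 0 while the green term becomes positive. By contrast, `yuvToRgb(128, 128, 128)` is the neutral gray (128, 128, 128).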

【Discussion】:

It has to be done for both the width and the height, but wow, that's strange. Cool. Is it a multiple of 2 or of 4?

【Answer 2】: @Rhythmic's answer saved my day.

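In my app I needed square videos sized to the screen width: 320 on the iPhone 5 and 375 on the iPhone 6.

So I ran into the same green-line problem at the iPhone 6 size, because its screen width of 375 is divisible by neither 2 nor 4.

To fix it, we made the following change: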

```objc
AVMutableVideoComposition *MainCompositionInst = [AVMutableVideoComposition videoComposition];
MainCompositionInst.instructions = [NSArray arrayWithObject:MainInstruction];
MainInstruction.timeRange = range;
MainCompositionInst.frameDuration = VideoFrameDuration; // Constants
MainCompositionInst.renderScale = VideoRenderScale;     // Constants

if ((int)SCREEN_WIDTH % 2 == 0)
    MainCompositionInst.renderSize = CGSizeMake(SCREEN_WIDTH, SCREEN_WIDTH);
else // This does the trick
    MainCompositionInst.renderSize = CGSizeMake(SCREEN_WIDTH + 1, SCREEN_WIDTH + 1);
```

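Adding just one more pixel makes the size even. For 375 that gives 376, which happens to be divisible by both 2 and 4. In general, adding 1 to an odd number only guarantees divisibility by 2.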
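If you want the render size to be a multiple of 4 on every screen (the stricter of the two requirements suggested in Answer 1), rounding up to a chosen alignment generalizes the `+1` trick. This is a minimal plain-C sketch. `alignUp` is a hypothetical helper, and it assumes a non-negative value and a positive alignment.

```c
/* Rounds `value` up to the next multiple of `alignment` (e.g. 2 or 4).
   Assumes value >= 0 and alignment > 0; already-aligned values are unchanged. */
int alignUp(int value, int alignment) {
    return ((value + alignment - 1) / alignment) * alignment;
}
```

In the snippet above this would read `MainCompositionInst.renderSize = CGSizeMake(alignUp((int)SCREEN_WIDTH, 4), alignUp((int)SCREEN_WIDTH, 4));`. 375 becomes 376 and 320 stays 320. Unlike `+1`, it also handles a width such as 413, which rounds up to 416 rather than to 414, which is not divisible by 4.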

【Discussion】:

Clever explanation. I had seen @Rhythmic's answer, and it is actually a good one, but when it comes to a practical fix, you win. ;) So +1 for you.