Audio: Reading and Writing the Sound Card with the Android NDK

Posted 浪游东戴河


Foreword: This article was compiled by the editors of 小常识网 (cha138.com). It covers reading and writing the sound card with the Android NDK, and we hope it is useful to you.

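Native (NDK) code reads from and writes to the sound card through two classes, AudioRecord and AudioTrack. These are the framework's internal C++ classes from libaudioclient, not part of the stable public NDK API, so the program below is built inside the AOSP source tree.

- AudioTrack: outputs (plays) audio data

- AudioRecord: captures (records) audio data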

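The audio types used by these classes are declared in the headers under system/media/audio/include/system:

```
system/media/audio/include/system
├── audio-base.h
├── audio-base-utils.h
├── audio_effect-base.h
├── audio_effect.h
├── audio_effects
├── audio.h
├── audio_policy.h
└── sound_trigger.h
```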

Audio sources:

```c
typedef enum {
    AUDIO_SOURCE_DEFAULT = 0,              // Default input source
    AUDIO_SOURCE_MIC = 1,                  // Microphone audio source
    AUDIO_SOURCE_VOICE_UPLINK = 2,         // Voice call uplink (Tx) audio source
    AUDIO_SOURCE_VOICE_DOWNLINK = 3,       // Voice call downlink (Rx) audio source
    AUDIO_SOURCE_VOICE_CALL = 4,           // Voice call uplink + downlink audio source
    AUDIO_SOURCE_CAMCORDER = 5,            // Microphone audio source tuned for video recording
    AUDIO_SOURCE_VOICE_RECOGNITION = 6,    // Microphone audio source tuned for voice recognition
    AUDIO_SOURCE_VOICE_COMMUNICATION = 7,  // Microphone audio source tuned for voice communications such as VoIP
    AUDIO_SOURCE_REMOTE_SUBMIX = 8,
    AUDIO_SOURCE_UNPROCESSED = 9,
    AUDIO_SOURCE_VOICE_PERFORMANCE = 10,
    AUDIO_SOURCE_ECHO_REFERENCE = 1997,
    AUDIO_SOURCE_FM_TUNER = 1998,
#ifndef AUDIO_NO_SYSTEM_DECLARATIONS
    /**
     * A low-priority, preemptible audio source for background software
     * hotword detection. Same tuning as VOICE_RECOGNITION.
     * Used only internally by the framework.
     */
    AUDIO_SOURCE_HOTWORD = 1999,
#endif // AUDIO_NO_SYSTEM_DECLARATIONS
} audio_source_t;
```

```c
typedef enum {
    AUDIO_SESSION_OUTPUT_STAGE = -1, // (-1)
    AUDIO_SESSION_OUTPUT_MIX = 0,
    AUDIO_SESSION_ALLOCATE = 0,
    AUDIO_SESSION_NONE = 0,
} audio_session_t;
```

```c
// Audio formats (some definitions omitted)
typedef enum {
    AUDIO_FORMAT_INVALID = 0xFFFFFFFFu,
    AUDIO_FORMAT_DEFAULT = 0,
    AUDIO_FORMAT_PCM = 0x00000000u,
    AUDIO_FORMAT_MP3 = 0x01000000u,
    AUDIO_FORMAT_AMR_NB = 0x02000000u,

    /* Subformats */
    AUDIO_FORMAT_PCM_SUB_16_BIT = 0x1u,
    AUDIO_FORMAT_PCM_SUB_8_BIT = 0x2u,
    AUDIO_FORMAT_PCM_SUB_32_BIT = 0x3u,
    AUDIO_FORMAT_PCM_SUB_8_24_BIT = 0x4u,
    AUDIO_FORMAT_PCM_SUB_FLOAT = 0x5u,
    AUDIO_FORMAT_PCM_SUB_24_BIT_PACKED = 0x6u,

    /* Aliases */
    AUDIO_FORMAT_PCM_16_BIT = 0x1u,          // (PCM | PCM_SUB_16_BIT), 16-bit PCM
    AUDIO_FORMAT_PCM_8_BIT = 0x2u,           // (PCM | PCM_SUB_8_BIT), 8-bit PCM
    AUDIO_FORMAT_PCM_32_BIT = 0x3u,          // (PCM | PCM_SUB_32_BIT)
    AUDIO_FORMAT_PCM_8_24_BIT = 0x4u,        // (PCM | PCM_SUB_8_24_BIT)
    AUDIO_FORMAT_PCM_FLOAT = 0x5u,           // (PCM | PCM_SUB_FLOAT)
    AUDIO_FORMAT_PCM_24_BIT_PACKED = 0x6u,   // (PCM | PCM_SUB_24_BIT_PACKED)
    AUDIO_FORMAT_AAC_MAIN = 0x4000001u,      // (AAC | AAC_SUB_MAIN)
    AUDIO_FORMAT_AAC_LC = 0x4000002u,        // (AAC | AAC_SUB_LC)
    AUDIO_FORMAT_AAC_SSR = 0x4000004u,       // (AAC | AAC_SUB_SSR)
} audio_format_t;
```
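The alias comments above show that a format value is built as main format | subformat. The top byte selects the main format (PCM, MP3, AAC, ...), and the low 24 bits select a variant. The minimal sketch below decodes a value into bytes per sample. It assumes the masks 0xFF000000 and 0x00FFFFFF, which AOSP names AUDIO_FORMAT_MAIN_MASK and AUDIO_FORMAT_SUB_MASK. pcm_bytes_per_sample() and is_aac() are hypothetical helpers; in an AOSP build you would normally call audio_bytes_per_sample() from system/audio.h instead.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Local copies of values from the enum above (assumed to match your audio-base.h).
constexpr uint32_t kFormatMainMask = 0xFF000000u;  // main format lives in the top byte
constexpr uint32_t kFormatSubMask  = 0x00FFFFFFu;  // subformat lives in the low 24 bits
constexpr uint32_t kFormatPcm      = 0x00000000u;
constexpr uint32_t kFormatAac      = 0x04000000u;

// Hypothetical helper: true if the main-format byte says AAC.
bool is_aac(uint32_t format) { return (format & kFormatMainMask) == kFormatAac; }

// Hypothetical helper: bytes per sample for linear PCM, 0 for anything else.
size_t pcm_bytes_per_sample(uint32_t format) {
    if ((format & kFormatMainMask) != kFormatPcm) return 0;  // not linear PCM
    switch (format & kFormatSubMask) {
        case 0x1: return 2;  // PCM_SUB_16_BIT
        case 0x2: return 1;  // PCM_SUB_8_BIT
        case 0x3: return 4;  // PCM_SUB_32_BIT
        case 0x4: return 4;  // PCM_SUB_8_24_BIT: 24 data bits stored in a 32-bit word
        case 0x5: return 4;  // PCM_SUB_FLOAT
        case 0x6: return 3;  // PCM_SUB_24_BIT_PACKED
        default:  return 0;  // includes AUDIO_FORMAT_DEFAULT (0)
    }
}
```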

```c
// Channel masks (some definitions omitted)
enum {
    AUDIO_CHANNEL_REPRESENTATION_POSITION = 0x0u,
    AUDIO_CHANNEL_REPRESENTATION_INDEX = 0x2u,
    AUDIO_CHANNEL_NONE = 0x0u,
    AUDIO_CHANNEL_INVALID = 0xC0000000u,
    AUDIO_CHANNEL_OUT_FRONT_LEFT = 0x1u,
    AUDIO_CHANNEL_OUT_FRONT_RIGHT = 0x2u,
    AUDIO_CHANNEL_IN_TOP_RIGHT = 0x400000u,
    AUDIO_CHANNEL_IN_VOICE_UPLINK = 0x4000u,
    AUDIO_CHANNEL_IN_VOICE_DNLINK = 0x8000u,
    AUDIO_CHANNEL_IN_MONO = 0x10u,              // IN_FRONT, mono
    AUDIO_CHANNEL_IN_STEREO = 0xCu,             // IN_LEFT | IN_RIGHT, stereo
    AUDIO_CHANNEL_IN_FRONT_BACK = 0x30u,        // IN_FRONT | IN_BACK
    AUDIO_CHANNEL_IN_6 = 0xFCu,                 // IN_LEFT | IN_RIGHT | IN_FRONT | IN_BACK | IN_LEFT_PROCESSED | IN_RIGHT_PROCESSED
    AUDIO_CHANNEL_IN_2POINT0POINT2 = 0x60000Cu, // IN_LEFT | IN_RIGHT | IN_TOP_LEFT | IN_TOP_RIGHT
    AUDIO_CHANNEL_IN_2POINT1POINT2 = 0x70000Cu, // IN_LEFT | IN_RIGHT | IN_TOP_LEFT | IN_TOP_RIGHT | IN_LOW_FREQUENCY
    AUDIO_CHANNEL_IN_3POINT0POINT2 = 0x64000Cu, // IN_LEFT | IN_CENTER | IN_RIGHT | IN_TOP_LEFT | IN_TOP_RIGHT
    AUDIO_CHANNEL_IN_3POINT1POINT2 = 0x74000Cu, // IN_LEFT | IN_CENTER | IN_RIGHT | IN_TOP_LEFT | IN_TOP_RIGHT | IN_LOW_FREQUENCY
    AUDIO_CHANNEL_IN_5POINT1 = 0x17000Cu,       // IN_LEFT | IN_CENTER | IN_RIGHT | IN_BACK_LEFT | IN_BACK_RIGHT | IN_LOW_FREQUENCY
    AUDIO_CHANNEL_IN_VOICE_UPLINK_MONO = 0x4010u, // IN_VOICE_UPLINK | IN_MONO
    AUDIO_CHANNEL_IN_VOICE_DNLINK_MONO = 0x8010u, // IN_VOICE_DNLINK | IN_MONO
    AUDIO_CHANNEL_IN_VOICE_CALL_MONO = 0xC010u,   // IN_VOICE_UPLINK_MONO | IN_VOICE_DNLINK_MONO
};
```
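In the positional representation, each bit of a channel mask is one channel, so the channel count is the number of set bits. The sample program in this article relies on this when it calls popcount(channelmask). The sketch below makes that explicit. It also rejects masks whose top two bits (the representation field, which is why AUDIO_CHANNEL_INVALID is 0xC0000000) hold neither the position (0) nor the index (2) representation. channel_count_from_mask() and frame_size_bytes() are hypothetical helpers; AOSP's own equivalent for input masks is audio_channel_count_from_in_mask().

```cpp
#include <bitset>
#include <cassert>
#include <cstdint>

// Assumption, as in AOSP: bits 30-31 hold the representation, bits 0-29 the channels.
constexpr int kRepresentationShift = 30;
constexpr uint32_t kChannelBitsMask = (1u << kRepresentationShift) - 1;

// Hypothetical helper: number of channels in a mask, 0 if the representation is unknown.
int channel_count_from_mask(uint32_t mask) {
    uint32_t representation = mask >> kRepresentationShift;
    uint32_t bits = mask & kChannelBitsMask;
    if (representation != 0 && representation != 2) return 0;  // e.g. AUDIO_CHANNEL_INVALID
    return static_cast<int>(std::bitset<32>(bits).count());    // one set bit per channel
}

// Bytes in one frame: one sample for every channel.
int frame_size_bytes(uint32_t mask, int bytes_per_sample) {
    return channel_count_from_mask(mask) * bytes_per_sample;
}
```

For example, AUDIO_CHANNEL_IN_5POINT1 (0x17000C) has six bits set, so a 16-bit frame of it is 12 bytes.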

```c
typedef enum {
    AUDIO_INPUT_FLAG_NONE = 0x0,
    AUDIO_INPUT_FLAG_FAST = 0x1,
    AUDIO_INPUT_FLAG_HW_HOTWORD = 0x2,
    AUDIO_INPUT_FLAG_RAW = 0x4,
    AUDIO_INPUT_FLAG_SYNC = 0x8,
    AUDIO_INPUT_FLAG_MMAP_NOIRQ = 0x10,
    AUDIO_INPUT_FLAG_VOIP_TX = 0x20,
    AUDIO_INPUT_FLAG_HW_AV_SYNC = 0x40,
#ifndef AUDIO_NO_SYSTEM_DECLARATIONS // TODO: Expose at HAL interface, remove FRAMEWORK_FLAGS mask
    AUDIO_INPUT_FLAG_DIRECT = 0x80,
    AUDIO_INPUT_FRAMEWORK_FLAGS = AUDIO_INPUT_FLAG_DIRECT,
#endif
} audio_input_flags_t;
```
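Each input flag is an independent bit, so flags are combined with | and tested with &. The small sketch below uses local copies of a few values from the enum above. It also shows one plausible use of a mask such as AUDIO_INPUT_FRAMEWORK_FLAGS: clearing framework-only bits before the flags are passed further down. has_flag() and strip_framework_flags() are hypothetical helpers, and this reading of the mask is an assumption based on the TODO comment in the header.

```cpp
#include <cassert>
#include <cstdint>

// Local copies of some audio_input_flags_t bits (assumed to match the header).
enum : uint32_t {
    kInputFlagNone   = 0x0,
    kInputFlagFast   = 0x1,
    kInputFlagRaw    = 0x4,
    kInputFlagDirect = 0x80,
};
constexpr uint32_t kInputFrameworkFlags = kInputFlagDirect;  // framework-only bits

// True if every bit of 'flag' is set in 'flags'.
bool has_flag(uint32_t flags, uint32_t flag) { return (flags & flag) == flag; }

// Hypothetical: drop framework-only bits before handing flags to a lower layer.
uint32_t strip_framework_flags(uint32_t flags) { return flags & ~kInputFrameworkFlags; }
```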

```c
enum {
    AUDIO_IO_HANDLE_NONE = 0,
    AUDIO_MODULE_HANDLE_NONE = 0,
    AUDIO_PORT_HANDLE_NONE = 0,
    AUDIO_PATCH_HANDLE_NONE = 0,
};
```

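Data transfer modes (the transfer_type argument of AudioRecord::set() and AudioTrack::set()):

- TRANSFER_CALLBACK: data is exchanged through a callback function (EVENT_MORE_DATA)

- TRANSFER_OBTAIN: the application calls obtainBuffer() and releaseBuffer()

- TRANSFER_SYNC: the application calls the blocking read() (AudioRecord) or write() (AudioTrack)

- TRANSFER_DEFAULT: not set explicitly; the mode is derived from the other parameters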
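The practical difference between TRANSFER_CALLBACK and TRANSFER_SYNC is who drives the loop. With a callback, the audio client's own thread hands each buffer to your function. With sync, your thread calls read() or write() itself. The sketch below models this with a hypothetical FakeCapture class over an in-memory sample array. It illustrates only the control flow and does not use android::AudioRecord.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <utility>
#include <vector>

// Hypothetical stand-in for a capture device; NOT android::AudioRecord.
class FakeCapture {
public:
    explicit FakeCapture(std::vector<int16_t> samples) : samples_(std::move(samples)) {}

    // TRANSFER_SYNC style: the caller pulls data; returns the number of samples copied.
    size_t read(int16_t* dst, size_t max_samples) {
        size_t n = 0;
        while (n < max_samples && pos_ < samples_.size()) dst[n++] = samples_[pos_++];
        return n;
    }

    // TRANSFER_CALLBACK style: the device pushes each chunk to the user callback,
    // similar in spirit to EVENT_MORE_DATA delivering an AudioRecord::Buffer.
    void run_callbacks(size_t chunk, const std::function<void(const int16_t*, size_t)>& cb) {
        std::vector<int16_t> buf(chunk);
        size_t n;
        while ((n = read(buf.data(), chunk)) > 0) cb(buf.data(), n);
    }

private:
    std::vector<int16_t> samples_;
    size_t pos_ = 0;
};
```

With five samples and a chunk size of 2, the callback runs three times (2 + 2 + 1 samples), while a sync caller decides for itself how much to read on each call.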

Project layout:

```
├── Android.mk
├── include
└── src
    └── audio_main.cpp
```

audio_main.cpp:

```cpp
#include <stdio.h>
#include <stdlib.h>   // exit()
#include <string.h>   // strcmp(), memset()
#include <unistd.h>   // sleep(), getuid(), getpid()
#include <pthread.h>
#include <math.h>
#include <system/audio.h>
#include <media/AudioRecord.h>
#include <media/AudioTrack.h>

using namespace android;

sp<AudioRecord> mAudioRecord;
sp<AudioTrack> mAudioTrack;
FILE *g_read_pcm = NULL;    // PCM file played back in "write" mode
FILE *g_write_pcm = NULL;   // PCM file recorded in "read" mode

audio_channel_mask_t channelmask = AUDIO_CHANNEL_IN_MONO;
audio_format_t audio_format = AUDIO_FORMAT_PCM_16_BIT;
int sample_rate = 16000;
int min_buf_size = 0;

// Capture callback: AudioRecord delivers recorded data with EVENT_MORE_DATA
void read_audio_data(int event, void *user, void *info)
{
    if (event != AudioRecord::EVENT_MORE_DATA) {
        printf("%s: event: %d\n", __FUNCTION__, event);
        return;
    }

    AudioRecord::Buffer *buffer = static_cast<AudioRecord::Buffer *>(info);
    if (buffer->size == 0)
        return;

    //printf("%s: buf size: %zu\n", __FUNCTION__, buffer->size);
    fwrite(buffer->raw, buffer->size, 1, g_write_pcm);
}

//read from soundcard and write into file
int ndk_audio_read()
{
    int ret = 0;
    char file[256] = {0};
    size_t frame_count = 0;
    int frame_size = 0;
    String16 strName = String16("reader");   // opPackageName

    mAudioRecord = new AudioRecord(strName);

    status_t result = AudioRecord::getMinFrameCount(&frame_count, sample_rate,
                                                    audio_format, channelmask);
    if (result == NO_ERROR) {
        int channel_count = popcount(channelmask);
        min_buf_size = frame_count * channel_count * (audio_format == AUDIO_FORMAT_PCM_16_BIT ? 2 : 1);
    } else if (result == BAD_VALUE) {
        printf("Invalid param when get min frame count\n");
        return -1;
    } else {
        printf("Failed to get min frame count\n");
        return -1;
    }

    min_buf_size *= 2; // To prevent "buffer overflow" issue
    if (min_buf_size > 0) {
        printf("get min buf size[%d]\n", min_buf_size);
    } else {
        printf("get min buf size failed\n");
        return -1;
    }

    frame_size = popcount(channelmask) * (audio_format == AUDIO_FORMAT_PCM_16_BIT ? 2 : 1);
    frame_count = min_buf_size / frame_size;

    // Argument order follows the Android 9/10-era AudioRecord::set(); check your release
    ret = mAudioRecord->set(
            AUDIO_SOURCE_MIC,               // inputSource
            sample_rate,                    // sampleRate
            audio_format,                   // format
            channelmask,                    // channelMask
            frame_count,                    // frameCount
            read_audio_data,                // cbf
            NULL,                           // user
            0,                              // notificationFrames
            false,                          // threadCanCallJava
            AUDIO_SESSION_ALLOCATE,         // sessionId
            AudioRecord::TRANSFER_CALLBACK, // transferType
            AUDIO_INPUT_FLAG_FAST,          // flags
            getuid(),                       // uid
            getpid(),                       // pid
            NULL,                           // pAttributes
            AUDIO_PORT_HANDLE_NONE);        // selectedDeviceId
    if (ret != NO_ERROR) {
        printf("AudioRecord set failure\n");
        return -1;
    }
    printf("set success\n");

    if (mAudioRecord->initCheck() != NO_ERROR) {
        printf("AudioRecord initialization failed!\n");
        return -1;
    }

    // Open the output file before start() so the callback never writes to NULL
    snprintf(file, sizeof(file), "/data/ndksound.pcm");
    g_write_pcm = fopen(file, "wb");
    if (!g_write_pcm) {
        printf("open file failed\n");
        return -1;
    }

    ret = mAudioRecord->start();
    if (ret != NO_ERROR) {
        printf("Audio Record start failure ret: [%d]\n", ret);
        return -1;
    }
    return 0;
}

// Playback callback: AudioTrack requests more data with EVENT_MORE_DATA
void write_audio_data(int event, void *user, void *info)
{
    if (event != AudioTrack::EVENT_MORE_DATA) {
        printf("soundcard writer event: %d\n", event);
        return;
    }

    AudioTrack::Buffer *buffer = static_cast<AudioTrack::Buffer *>(info);
    if (buffer->size == 0)
        return;

    memset(buffer->raw, 0, buffer->size);   // a short final read is padded with silence
    size_t ret = fread(buffer->raw, 1, buffer->size, g_read_pcm);
    if (ret == 0) {
        printf("%s: no more data\n", __FUNCTION__);
        exit(0);   // end of file: playback finished
    }
}

//read from file and write into soundcard
int ndk_audio_write()
{
    int ret = 0;
    char file[256] = {0};
    size_t frame_count = 0;
    int frame_size = 0;
    const audio_stream_type_t stream = AUDIO_STREAM_VOICE_CALL;

    channelmask = AUDIO_CHANNEL_OUT_MONO;   // playback needs an output channel mask
    mAudioTrack = new AudioTrack();

    // Query the minimum for the same stream type that set() uses
    status_t result = AudioTrack::getMinFrameCount(&frame_count, stream, sample_rate);
    if (result == NO_ERROR) {
        int channel_count = popcount(channelmask);
        min_buf_size = frame_count * channel_count * (audio_format == AUDIO_FORMAT_PCM_16_BIT ? 2 : 1);
    } else if (result == BAD_VALUE) {
        printf("Invalid param when get min frame count\n");
        return -1;
    } else {
        printf("Failed to get min frame count\n");
        return -1;
    }

    if (min_buf_size > 0) {
        printf("get min buf size[%d]\n", min_buf_size);
    } else {
        printf("get min buf size failed\n");
        return -1;
    }

    frame_size = popcount(channelmask) * (audio_format == AUDIO_FORMAT_PCM_16_BIT ? 2 : 1);
    frame_count = min_buf_size / frame_size;

    // Argument order follows the Android 9/10-era AudioTrack::set(); check your release
    ret = mAudioTrack->set(
            stream,                         // streamType
            sample_rate,                    // sampleRate
            audio_format,                   // format
            channelmask,                    // channelMask
            frame_count,                    // frameCount
            AUDIO_OUTPUT_FLAG_FAST,         // flags
            write_audio_data,               // cbf
            NULL,                           // user
            0,                              // notificationFrames
            0,                              // sharedBuffer: none, data is streamed
            false,                          // threadCanCallJava
            AUDIO_SESSION_ALLOCATE,         // sessionId
            AudioTrack::TRANSFER_CALLBACK,  // transferType
            NULL,                           // offloadInfo
            -1);                            // uid: -1, i.e. AUDIO_UID_INVALID
    if (ret != NO_ERROR) {
        printf("mAudioTrack set failure\n");
        return -1;
    }
    printf("set success\n");

    if (mAudioTrack->initCheck() != NO_ERROR) {
        printf("mAudioTrack initialization failed!\n");
        return -1;
    }

    snprintf(file, sizeof(file), "/data/ndksound.pcm");
    g_read_pcm = fopen(file, "rb");
    if (!g_read_pcm) {
        printf("open file failed\n");
        return -1;
    }

    ret = mAudioTrack->start();
    if (ret != NO_ERROR) {
        printf("Audio Track start failure ret: [%d]\n", ret);
        return -1;
    }
    printf("start success\n");
    return 0;
}

int main(int argc, char *argv[])
{
    int ret = 0;

    if (argc < 2) {
        printf("usage: %s read|write\n", argv[0]);
        return -1;
    }

    if (0 == strcmp(argv[1], "read")) {
        printf("read soundcard\n");
        ret = ndk_audio_read();
        if (ret < 0)
            exit(1);
    } else {
        printf("write soundcard\n");
        ret = ndk_audio_write();
        if (ret < 0)
            exit(1);
    }

    // Audio moves on the callback threads; keep the process alive
    // (in read mode, stop recording by killing the process)
    while (1)
        sleep(5);

    // Not reached while the loop above runs forever
    if (g_read_pcm)
        fclose(g_read_pcm);
    if (g_write_pcm)
        fclose(g_write_pcm);
    return 0;
}
```
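The buffer sizing in ndk_audio_read() converts the minimum frame count to bytes, doubles it, and converts back to frames. This sketch reproduces only that arithmetic so it can be checked off-device. The 640-frame minimum used in the check is an arbitrary assumption, because the real value returned by getMinFrameCount() depends on the device and the sample rate. BufferPlan and plan_buffer() are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>

// Mirrors the arithmetic in ndk_audio_read(); min_frames stands in for the
// device-specific value reported by AudioRecord::getMinFrameCount().
struct BufferPlan {
    size_t frame_bytes;    // bytes in one frame (channels * bytes per sample)
    size_t min_buf_bytes;  // minimum buffer in bytes, doubled for headroom
    size_t frame_count;    // value passed to set()
};

BufferPlan plan_buffer(size_t min_frames, int channels, int bytes_per_sample) {
    BufferPlan p{};
    p.frame_bytes = static_cast<size_t>(channels) * bytes_per_sample;
    p.min_buf_bytes = min_frames * p.frame_bytes;
    p.min_buf_bytes *= 2;                              // same as min_buf_size *= 2
    p.frame_count = p.min_buf_bytes / p.frame_bytes;   // works out to 2 * min_frames
    return p;
}
```

For mono 16-bit audio, 640 minimum frames become a 2560-byte buffer and 1280 frames, which at 16000 Hz is 80 ms of audio.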

Android.mk:

```makefile
LOCAL_PATH := $(call my-dir)
include $(CLEAR_VARS)

LOCAL_SRC_FILES += \
    src/audio_main.cpp

LOCAL_C_INCLUDES += \
    bionic \
    external/stlport/stlport \
    external/libcxx/include \
    frameworks/av/include \
    frameworks/av/media/libaudioclient/include \
    frameworks/native/libs/nativebase/include \
    frameworks/native/libs/math/include \
    frameworks/av/media/ndk/include \
    system/core/include \
    system/core/libprocessgroup/include \
    system/core/base/include \
    system/core/libutils/include

LOCAL_CFLAGS := -DANDROID -Wall -Wno-implicit-function-declaration
# -Wl,... is a linker option, so it goes in LOCAL_LDFLAGS rather than LOCAL_CFLAGS
LOCAL_LDFLAGS := -Wl,--unresolved-symbols=ignore-all
LOCAL_MODULE := ndk_audio
LOCAL_LDLIBS := -lm -lmediandk -landroid -laudioclient -lstdc++ -lutils

include $(BUILD_EXECUTABLE)
```

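Read audio from the sound card and write it to a file (/data/ndksound.pcm): ./ndk_audio read

Read audio from the file and write it to the sound card: ./ndk_audio write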
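The file written in read mode is raw PCM with no header. Nothing in the file records its sample rate, channel count, or sample size, so write mode simply assumes the 16000 Hz, mono, 16-bit settings used to record it. The following sketch shows the resulting size arithmetic, with the constants copied from audio_main.cpp. pcm_duration_ms() is a hypothetical helper.

```cpp
#include <cassert>
#include <cstdint>

// Settings copied from audio_main.cpp: 16000 Hz, mono, AUDIO_FORMAT_PCM_16_BIT.
constexpr uint32_t kSampleRate = 16000;
constexpr uint32_t kChannels = 1;
constexpr uint32_t kBytesPerSample = 2;

constexpr uint32_t bytes_per_second() { return kSampleRate * kChannels * kBytesPerSample; }

// Duration in milliseconds of a headerless PCM file of the given size.
uint64_t pcm_duration_ms(uint64_t file_bytes) {
    return file_bytes * 1000 / bytes_per_second();
}
```

At 32000 bytes per second, a 320000-byte recording lasts 10 s. Playing the file back with a different rate or channel mask would change its speed and pitch.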

Linux ALSA Sound Card Drivers, Part 5: ALSA on Mobile Devices (ASoC)

Reposted from http://blog.csdn.net/droidphone/article/details/7165482

1. Origins of ASoC

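ASoC (ALSA System on Chip) is a software framework built on top of the standard ALSA driver layer. Its purpose is to better support the audio codecs found in embedded processors and mobile devices. Before ASoC, the kernel already had some support for SoC audio, but it had several limitations:

- Codec drivers were too tightly coupled to the underlying SoC CPU, which led to duplicated code. For example, the wm8731 alone had separate driver code for four different platforms in Linux at the time.

- There was no standard way to notify the user of audio events such as headphone or microphone insertion, removal, and detection. Such events are very common on mobile devices, and they usually require machine-specific code to reconfigure the audio path.

- During playback or recording, the driver kept the entire codec powered up. That is fine on a PC, but on a mobile device it wastes a great deal of power. There was also no support for saving power by changing the oversampling rate or the bias current.

ASoC was designed to solve these problems and has since been merged into the kernel source tree under sound/soc. ASoC cannot exist on its own: it is built on top of the standard ALSA driver and only works in combination with the standard ALSA driver framework.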

/********************************************************************************************/

Statement: all content on this blog is original work by http://blog.csdn.net/droidphone. Please credit the source when reposting. Thanks!

/********************************************************************************************/

2. Hardware Architecture

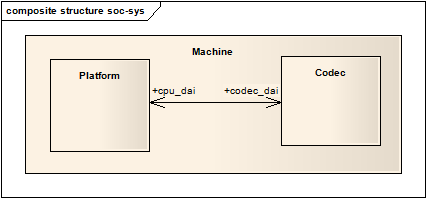

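As with abstraction and reuse in software, the audio system of an embedded device can usually be divided into three parts: the board hardware (Machine), the SoC (Platform), and the Codec, as shown in Figure 2.1:

Figure 2.1 Audio system structure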

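- Machine refers to a specific machine: a particular device, development board, or smartphone. A Machine is therefore almost never reusable. The hardware of each Machine may differ, with a different CPU, a different Codec, and different audio input and output devices. The Machine is the carrier that holds the CPU, the Codec, and the input/output devices.

- Platform generally refers to a particular SoC platform, such as pxaxxx, s3cxxxx, or omapxxx. Its audio-related parts usually include the SoC's clocks, DMA, I2S, PCM interfaces, and so on. Once the SoC is known, we can assume there is a corresponding Platform. It depends only on the SoC and not on the Machine, which lets us abstract the Platform so that the same SoC can be used in different Machines without any changes. In practice, it is easiest to think of the Platform simply as the SoC.

- Codec literally means coder/decoder. A Codec contains an I2S interface, D/A and A/D converters, a Mixer, and a PA (power amplifier). It usually has several inputs (Mic, Line-in, I2S, PCM) and several outputs (headphones, speaker, earpiece, Line-out). Like the Platform, the Codec is a reusable component: the same Codec can be used by different Machines. An embedded Codec's internal registers are usually controlled over I2C.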
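As an illustration of register control over I2C, the sketch below packs one register write for a codec that uses a 7-bit register address followed by a 9-bit value. That is the control-word layout of the wm8731 mentioned earlier; treat it as an assumption and check your own codec's datasheet. encode_codec_write() is a hypothetical helper. How the two bytes are actually sent (for example through the kernel I2C API) is outside the sketch.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

// Assumed layout: 16-bit control word = 7-bit register address (bits 15..9)
// followed by a 9-bit register value (bits 8..0), sent MSB first as two bytes.
std::array<uint8_t, 2> encode_codec_write(uint8_t reg, uint16_t value) {
    assert(reg < 0x80 && value < 0x200);  // 7-bit address, 9-bit data
    return {static_cast<uint8_t>((reg << 1) | ((value >> 8) & 0x1)),  // address + data bit 8
            static_cast<uint8_t>(value & 0xFF)};                       // data bits 7..0
}
```

For example, writing 0x179 to register 0x02 produces the bytes 0x05 0x79.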

3. Software Architecture

At the software level, ASoC likewise divides the audio system of an embedded device into three parts: Machine, Platform, and Codec.

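- Codec driver: an important design principle of ASoC is that Codec drivers must be platform-independent. A Codec driver contains the audio controls, the audio interfaces, the DAPM (Dynamic Audio Power Management) definitions, and some Codec IO functions. To keep it hardware-independent, any platform- or machine-specific code must be moved into the Platform and Machine drivers. Every Codec driver must provide the following:
  - Codec DAI and PCM configuration;
  - the Codec's IO control method (I2C, SPI, etc.);
  - Mixers and other audio controls;
  - the Codec's ALSA audio operations.

  Where needed, it can also provide:
  - DAPM description information;
  - DAPM event handlers;
  - DAC digital mute control.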

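- Platform driver: contains the configuration and control of the SoC platform's audio DMA and audio interfaces (I2S, PCM, AC97, etc.). It must not contain any board- or machine-specific code either.

- Machine driver: handles machine-specific controls and audio events (for example, switching on an amplifier before playback starts). The Platform and Codec drivers cannot work on their own; the Machine driver must bind them together to carry out the audio processing for the whole device.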
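The core idea of DAPM is that a widget (DAC, mixer, amplifier, ...) is powered only while it sits on a complete audio path from an active source to an active sink. This is what addresses the power problem described in section 1. The following hypothetical, heavily simplified model does not use the kernel's snd_soc_dapm_* API. It computes that set with two graph traversals: everything reachable from an active source, intersected with everything that can reach an active sink.

```cpp
#include <cassert>
#include <map>
#include <set>
#include <string>
#include <vector>

// Hypothetical model: widgets are nodes, audio routes are directed edges.
using Graph = std::map<std::string, std::vector<std::string>>;

// Depth-first search collecting every widget reachable from 'from'.
static void reach(const Graph& g, const std::string& from, std::set<std::string>& seen) {
    if (!seen.insert(from).second) return;  // already visited
    auto it = g.find(from);
    if (it == g.end()) return;
    for (const auto& next : it->second) reach(g, next, seen);
}

// A widget is powered if it is downstream of an active source AND upstream of an active sink.
std::set<std::string> powered_widgets(const Graph& routes,
                                      const std::vector<std::string>& active_sources,
                                      const std::vector<std::string>& active_sinks) {
    Graph reverse;  // the same routes, pointing backwards
    for (const auto& kv : routes)
        for (const auto& dst : kv.second) reverse[dst].push_back(kv.first);

    std::set<std::string> downstream, upstream, powered;
    for (const auto& s : active_sources) reach(routes, s, downstream);
    for (const auto& s : active_sinks) reach(reverse, s, upstream);
    for (const auto& w : downstream)
        if (upstream.count(w)) powered.insert(w);  // lies on a source-to-sink path
    return powered;
}
```

With a DAC → Mixer → {HP Amp → Headphone, Speaker Amp → Speaker} graph and only the headphone active, the DAC, Mixer, HP Amp, and Headphone widgets are powered, while the speaker amplifier stays off.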

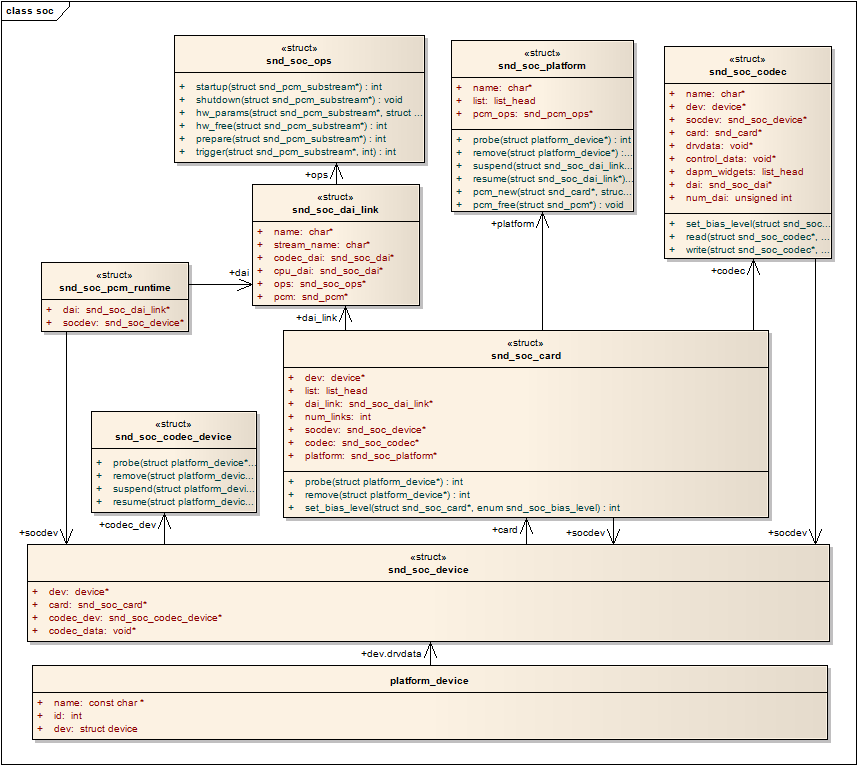

4. Data Structures

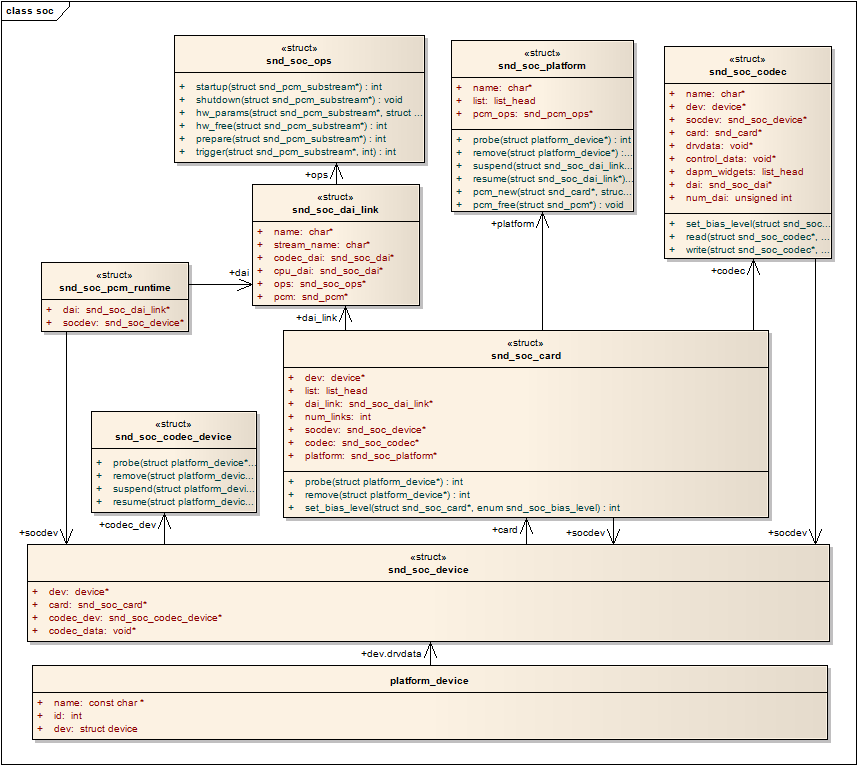

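ASoC is made up of a series of data structures. To understand how ASoC works, you must understand how these structures relate to one another and what each one does. Figure 4.1 shows how the important data structures in ASoC are connected:

Figure 4.1 Static relationships among the ASoC structures in kernel 2.6.35

ASoC implements the sound card as a platform device and uses the dev field of the platform_device structure: dev.drvdata actually points to a snd_soc_device structure. snd_soc_device can be regarded as the root of the entire ASoC data structure. Starting from it, a series of structures describe the various audio features and functions. snd_soc_device leads to two structures, snd_soc_card and snd_soc_codec_device, and snd_soc_card in turn leads to the snd_soc_platform, snd_soc_dai_link, and snd_soc_codec structures. As described above, ASoC is divided into Machine, Platform, and Codec parts. In terms of these data structures, snd_soc_device and snd_soc_card represent the Machine driver, snd_soc_platform represents the Platform driver, snd_soc_codec and snd_soc_codec_device represent the Codec driver, and snd_soc_dai_link connects the Platform and the Codec.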
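The relationships in this paragraph can be mirrored with plain structs. In the hypothetical sketch below, the struct names follow the kernel, but the members are heavily simplified; the real 2.6.35 definitions in include/sound/soc.h carry many more fields. fake_platform_device stands in for the platform device whose dev.drvdata points at the root snd_soc_device, and codec_from_device() is an illustrative helper.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical, stripped-down mirror of the 2.6.35 layout; members are simplified.
struct snd_soc_platform { std::string name; };                // Platform driver side
struct snd_soc_codec    { std::string name; };                // Codec driver side
struct snd_soc_dai_link { std::string cpu_dai, codec_dai; };  // connects Platform and Codec
struct snd_soc_card {                                         // Machine driver side
    snd_soc_platform* platform;
    snd_soc_codec* codec;
    std::vector<snd_soc_dai_link> dai_link;
};
struct snd_soc_device { snd_soc_card* card; };                // root of the tree

// Stand-in for the platform device whose drvdata points at the root.
struct fake_platform_device { void* drvdata = nullptr; };

// Recover the root from the device, then walk card -> codec as the text describes.
const snd_soc_codec* codec_from_device(const fake_platform_device& pdev) {
    auto* socdev = static_cast<const snd_soc_device*>(pdev.drvdata);
    return (socdev && socdev->card) ? socdev->card->codec : nullptr;
}
```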

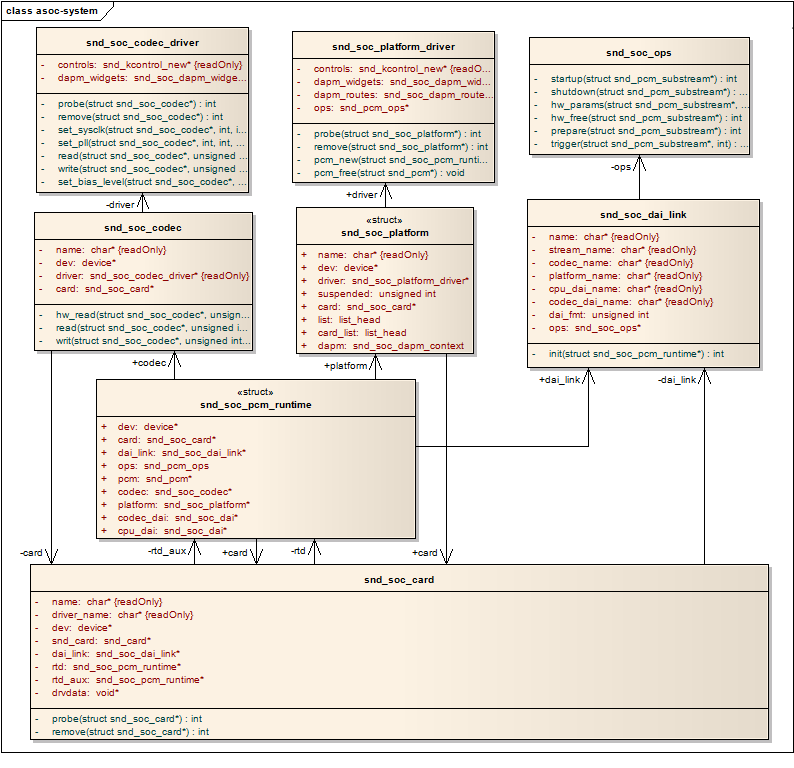

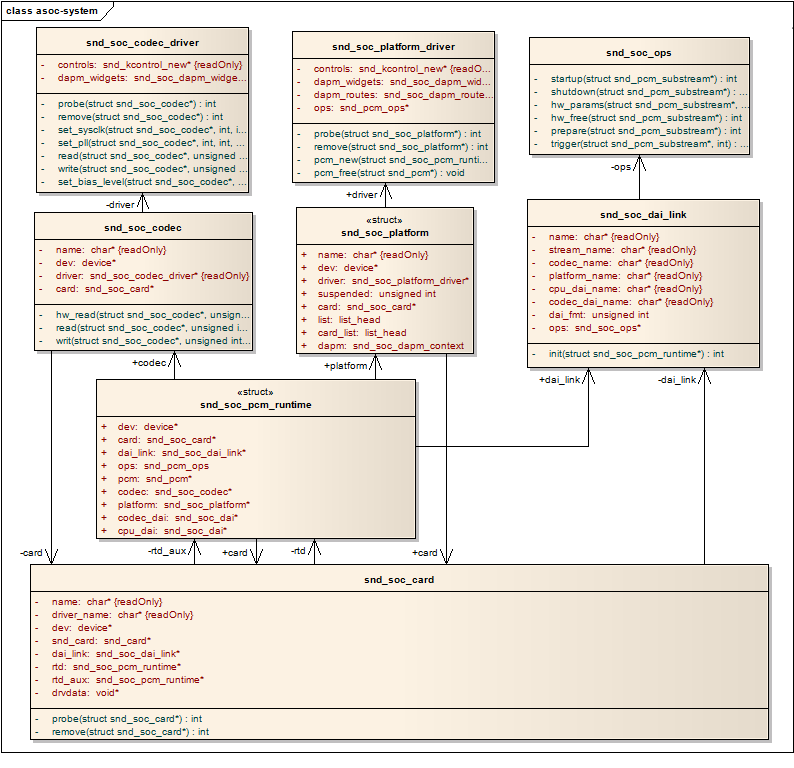

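5. ASoC Improvements in Kernel 3.0

This article was originally written against kernel 2.6.35, but a CSDN reader pointed out that ASoC changed considerably in kernel 3.0. I downloaded the 3.0 source and confirmed that it has indeed changed. Here is the static relationship diagram of its data structures:

Figure 5.1 ASoC data structures in kernel 3.0

As the figure shows, the data structures in 3.0 are more reasonable and clearer. snd_soc_device has been removed and replaced directly by snd_soc_card, and the role of snd_soc_pcm_runtime has been strengthened. Two new structures, snd_soc_codec_driver and snd_soc_platform_driver, have also been added to represent the Codec driver and the Platform driver explicitly.

Later chapters will cover the details of the Machine, Platform, and Codec drivers and how they relate to one another.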
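In 3.0, a snd_soc_dai_link refers to its CPU DAI, codec, and platform by name. The core binds each link to the components that have registered, creating one snd_soc_pcm_runtime per link. The hypothetical sketch below shows only that name-matching idea; dai_link, component, pcm_runtime, and bind_links() are simplified stand-ins, not kernel definitions.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <vector>

// Simplified stand-ins, not the kernel structures.
struct dai_link { std::string name, cpu_dai_name, codec_name, platform_name; };
struct component { std::string name; };
struct pcm_runtime {
    const dai_link* link;
    const component* cpu_dai;
    const component* codec;
    const component* platform;
};

using Registry = std::map<std::string, component>;  // components registered by their drivers

// Fills 'out' with one runtime per link; fails if any named component is missing.
bool bind_links(const std::vector<dai_link>& links, const Registry& cpu_dais,
                const Registry& codecs, const Registry& platforms,
                std::vector<pcm_runtime>& out) {
    out.clear();
    for (const auto& link : links) {
        auto cpu = cpu_dais.find(link.cpu_dai_name);
        auto codec = codecs.find(link.codec_name);
        auto plat = platforms.find(link.platform_name);
        if (cpu == cpu_dais.end() || codec == codecs.end() || plat == platforms.end())
            return false;  // a component is missing: the card cannot be set up yet
        out.push_back({&link, &cpu->second, &codec->second, &plat->second});
    }
    return true;
}
```

Name-based links let the Machine driver describe a card without holding pointers into Codec or Platform drivers. Roughly speaking, the real core attempts card instantiation again as further components register, rather than failing permanently.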
