Fixing the Spark error: Caused by: java.util.concurrent.TimeoutException: Futures timed out after [300 seconds]

Posted by 凿石刻字

Error message:

    09-05-2017 09:58:44 CST xxxx_job_1494294485570174 INFO - at org.apache.spark.sql.catalyst.errors.package$.attachTree(package.scala:49)
    09-05-2017 09:58:44 CST xxxx_job_1494294485570174 INFO - at org.apache.spark.sql.execution.aggregate.TungstenAggregate.doExecute(TungstenAggregate.scala:80)
    09-05-2017 09:58:44 CST xxxx_job_1494294485570174 INFO - at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$5.apply(SparkPlan.scala:132)
    09-05-2017 09:58:44 CST xxxx_job_1494294485570174 INFO - at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$5.apply(SparkPlan.scala:130)
    09-05-2017 09:58:44 CST xxxx_job_1494294485570174 INFO - at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:150)
    09-05-2017 09:58:44 CST xxxx_job_1494294485570174 INFO - at org.apache.spark.sql.execution.SparkPlan.execute(SparkPlan.scala:130)
    09-05-2017 09:58:44 CST xxxx_job_1494294485570174 INFO - at org.apache.spark.sql.execution.Exchange.prepareShuffleDependency(Exchange.scala:164)
    09-05-2017 09:58:44 CST xxxx_job_1494294485570174 INFO - at org.apache.spark.sql.execution.Exchange$$anonfun$doExecute$1.apply(Exchange.scala:254)
    09-05-2017 09:58:44 CST xxxx_job_1494294485570174 INFO - at org.apache.spark.sql.execution.Exchange$$anonfun$doExecute$1.apply(Exchange.scala:248)
    09-05-2017 09:58:44 CST xxxx_job_1494294485570174 INFO - at org.apache.spark.sql.catalyst.errors.package$.attachTree(package.scala:48)
    09-05-2017 09:58:44 CST xxxx_job_1494294485570174 INFO - ... 64 more
    09-05-2017 09:58:44 CST xxxx_job_1494294485570174 INFO - Caused by: java.util.concurrent.TimeoutException: Futures timed out after [300 seconds]
    09-05-2017 09:58:44 CST xxxx_job_1494294485570174 INFO - at scala.concurrent.impl.Promise$DefaultPromise.ready(Promise.scala:219)
    09-05-2017 09:58:44 CST xxxx_job_1494294485570174 INFO - at scala.concurrent.impl.Promise$DefaultPromise.result(Promise.scala:223)
    09-05-2017 09:58:44 CST xxxx_job_1494294485570174 INFO - at scala.concurrent.Await$$anonfun$result$1.apply(package.scala:107)
    09-05-2017 09:58:44 CST xxxx_job_1494294485570174 INFO - at scala.concurrent.BlockContext$DefaultBlockContext$.blockOn(BlockContext.scala:53)
    09-05-2017 09:58:44 CST xxxx_job_1494294485570174 INFO - at scala.concurrent.Await$.result(package.scala:107)
    09-05-2017 09:58:44 CST xxxx_job_1494294485570174 INFO - at org.apache.spark.sql.execution.joins.BroadcastHashJoin.doExecute(BroadcastHashJoin.scala:107)
    09-05-2017 09:58:44 CST xxxx_job_1494294485570174 INFO - at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$5.apply(SparkPlan.scala:132)
    09-05-2017 09:58:44 CST xxxx_job_1494294485570174 INFO - at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$5.apply(SparkPlan.scala:130)
    09-05-2017 09:58:44 CST xxxx_job_1494294485570174 INFO - at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:150)
    09-05-2017 09:58:44 CST xxxx_job_1494294485570174 INFO - at org.apache.spark.sql.execution.SparkPlan.execute(SparkPlan.scala:130)
    09-05-2017 09:58:44 CST xxxx_job_1494294485570174 INFO - at org.apache.spark.sql.execution.Project.doExecute(basicOperators.scala:46)
    09-05-2017 09:58:44 CST xxxx_job_1494294485570174 INFO - at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$5.apply(SparkPlan.scala:132)
    09-05-2017 09:58:44 CST xxxx_job_1494294485570174 INFO - at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$5.apply(SparkPlan.scala:130)
    09-05-2017 09:58:44 CST xxxx_job_1494294485570174 INFO - at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:150)
    09-05-2017 09:58:44 CST xxxx_job_1494294485570174 INFO - at org.apache.spark.sql.execution.SparkPlan.execute(SparkPlan.scala:130)
    09-05-2017 09:58:44 CST xxxx_job_1494294485570174 INFO - at org.apache.spark.sql.execution.aggregate.TungstenAggregate$$anonfun$doExecute$1.apply(TungstenAggregate.scala:86)
    09-05-2017 09:58:44 CST xxxx_job_1494294485570174 INFO - at org.apache.spark.sql.execution.aggregate.TungstenAggregate$$anonfun$doExecute$1.apply(TungstenAggregate.scala:80)
    09-05-2017 09:58:44 CST xxxx_job_1494294485570174 INFO - at org.apache.spark.sql.catalyst.errors.package$.attachTree(package.scala:48)
    09-05-2017 09:58:44 CST xxxx_job_1494294485570174 INFO - ... 73 more
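Because every frame in a trace like this carries the scheduler's log header, the root cause is easy to overlook. The Python sketch below is an illustration, not part of the original post: it splits such a flattened log into records, takes the last `Caused by` line, and reports the first `org.apache.spark` frame beneath it. The header regex is an assumption inferred from this one sample, and `split_records`, `root_cause`, and `SAMPLE` are hypothetical names.

```python
import re
from typing import List, Optional, Tuple

# Log header prepended to every record, e.g.
# "09-05-2017 09:58:44 CST xxxx_job_1494294485570174 INFO - ".
# ASSUMPTION: this pattern is inferred from a single sample log.
HEADER = re.compile(r"\d{2}-\d{2}-\d{4} \d{2}:\d{2}:\d{2} \w+ \S+ INFO - ")


def split_records(flat_log: str) -> List[str]:
    """Split a flattened log into one record per header."""
    parts = HEADER.split(flat_log)
    # Rejoin records that were wrapped across lines and drop empty pieces.
    return [" ".join(p.split()) for p in parts if p.strip()]


def root_cause(records: List[str]) -> Tuple[Optional[str], Optional[str]]:
    """Return the last 'Caused by' message and the first Spark frame after it."""
    last = None
    for i, rec in enumerate(records):
        if rec.startswith("Caused by:"):
            last = i
    if last is None:
        return None, None
    cause = records[last][len("Caused by:"):].strip()
    # The first org.apache.spark frame below the cause names the waiting operator.
    for rec in records[last + 1:]:
        if rec.startswith("at org.apache.spark."):
            return cause, rec[len("at "):]
    return cause, None


# An abridged excerpt of the trace, including one record wrapped mid-line.
SAMPLE = (
    "09-05-2017 09:58:44 CST xxxx_job_1494294485570174 INFO - ... 64 more "
    "09-05-2017 09:58:44 CST xxxx_job_1494294485570174 INFO - Caused by: "
    "java.util.concurrent.TimeoutException: Futures timed out after [300 seconds] "
    "09-05-2017 09:58:44 CST xxxx_job_1494294485570174 INFO - at scala.concurrent.Await$.result(package.scala:107) "
    "09-05-2017 09:58:44 CST xxxx_job_1494294485570174 INFO - at\n"
    "org.apache.spark.sql.execution.joins.BroadcastHashJoin.doExecute(BroadcastHashJoin.scala:107)"
)

if __name__ == "__main__":
    cause, frame = root_cause(split_records(SAMPLE))
    print(cause)  # java.util.concurrent.TimeoutException: Futures timed out after [300 seconds]
    print(frame)  # org.apache.spark.sql.execution.joins.BroadcastHashJoin.doExecute(BroadcastHashJoin.scala:107)
```

Taking the *last* `Caused by` matters because nested exceptions are printed outermost first, so the deepest cause appears at the bottom.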

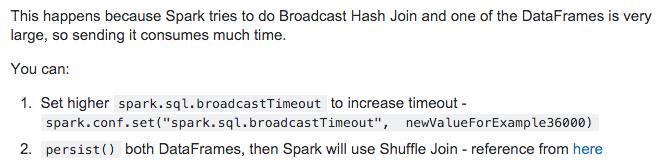

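Searching on the last Caused by message and the stack trace showed that the job timed out in the broadcast stage: the frame right after scala.concurrent.Await$.result is BroadcastHashJoin.doExecute, so Spark was waiting for the broadcast side of a join and gave up after 300 seconds. Solution: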
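A common remedy for this timeout, offered here as an assumption rather than as the author's exact fix, is either to raise `spark.sql.broadcastTimeout` (in seconds, default 300, which matches the `[300 seconds]` in the trace) or to set `spark.sql.autoBroadcastJoinThreshold` to `-1` so Spark stops choosing broadcast joins automatically. The Python sketch below only assembles the matching `--conf` flags for `spark-submit`; the value `1200`, the helper `conf_args`, the option dictionaries, and `my_job.py` are hypothetical.

```python
import shlex
from typing import Dict, List

# Real Spark SQL configuration keys; the values are illustrative, not tuned.
BROADCAST_FIX_OPTION_A = {
    # Wait up to 1200 seconds for the broadcast instead of the default 300.
    "spark.sql.broadcastTimeout": "1200",
}
BROADCAST_FIX_OPTION_B = {
    # -1 disables automatic broadcast joins, so a shuffle-based join is planned.
    "spark.sql.autoBroadcastJoinThreshold": "-1",
}


def conf_args(settings: Dict[str, str]) -> List[str]:
    """Turn {key: value} into ['--conf', 'key=value', ...] for spark-submit."""
    args: List[str] = []
    # Sort keys so the generated command line is deterministic.
    for key, value in sorted(settings.items()):
        args += ["--conf", f"{key}={value}"]
    return args


if __name__ == "__main__":
    # "my_job.py" is a hypothetical application file.
    cmd = ["spark-submit", *conf_args(BROADCAST_FIX_OPTION_A), "my_job.py"]
    print(shlex.join(cmd))  # spark-submit --conf spark.sql.broadcastTimeout=1200 my_job.py
```

The same keys can also be set inside the application, for example with `sqlContext.setConf(...)` in Spark 1.x (which this trace appears to come from) or `spark.conf.set(...)` in Spark 2.x and later. Raising the timeout suits a broadcast side that is merely slow to build; disabling automatic broadcasting suits a side that is too large to broadcast at all.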

以上是关于解决spark程序报错:Caused by: java.util.concurrent.TimeoutException: Futures timed out after [300 seconds](的主要内容,如果未能解决你的问题,请参考以下文章