Deploying a MinIO tenant cluster in Kubernetes with the MinIO Operator

Posted catoop


1. Overview

MinIO is a Kubernetes-native, high-performance object store that is compatible with the Amazon S3 API.

The MinIO Operator is a tool that extends the Kubernetes API. It lets you deploy MinIO Tenants on public and private clouds.

This article walks step by step through deploying a MinIO cluster in an existing Kubernetes cluster.

2. Deploying the MinIO cluster

2.1 Deploy the MinIO Operator

This article deploys MinIO tenant clusters with the MinIO Operator, so the first step is to install the operator.

2.1.1 Install the kubectl-minio plugin

Install the MinIO plugin (kubectl-minio) with the following commands. The next step uses this plugin to install the MinIO Operator.

wget http://xxxxx/kubernetes/kubectl-plugin/kubectl-minio_linux_amd64.zip

unzip kubectl-minio_linux_amd64.zip "kubectl-minio" -d /usr/local/bin/

kubectl minio version

If you see output like the following, the kubectl-minio plugin was installed successfully:

[root@nccztsjb-node-23 ~]# kubectl minio version

v4.4.16

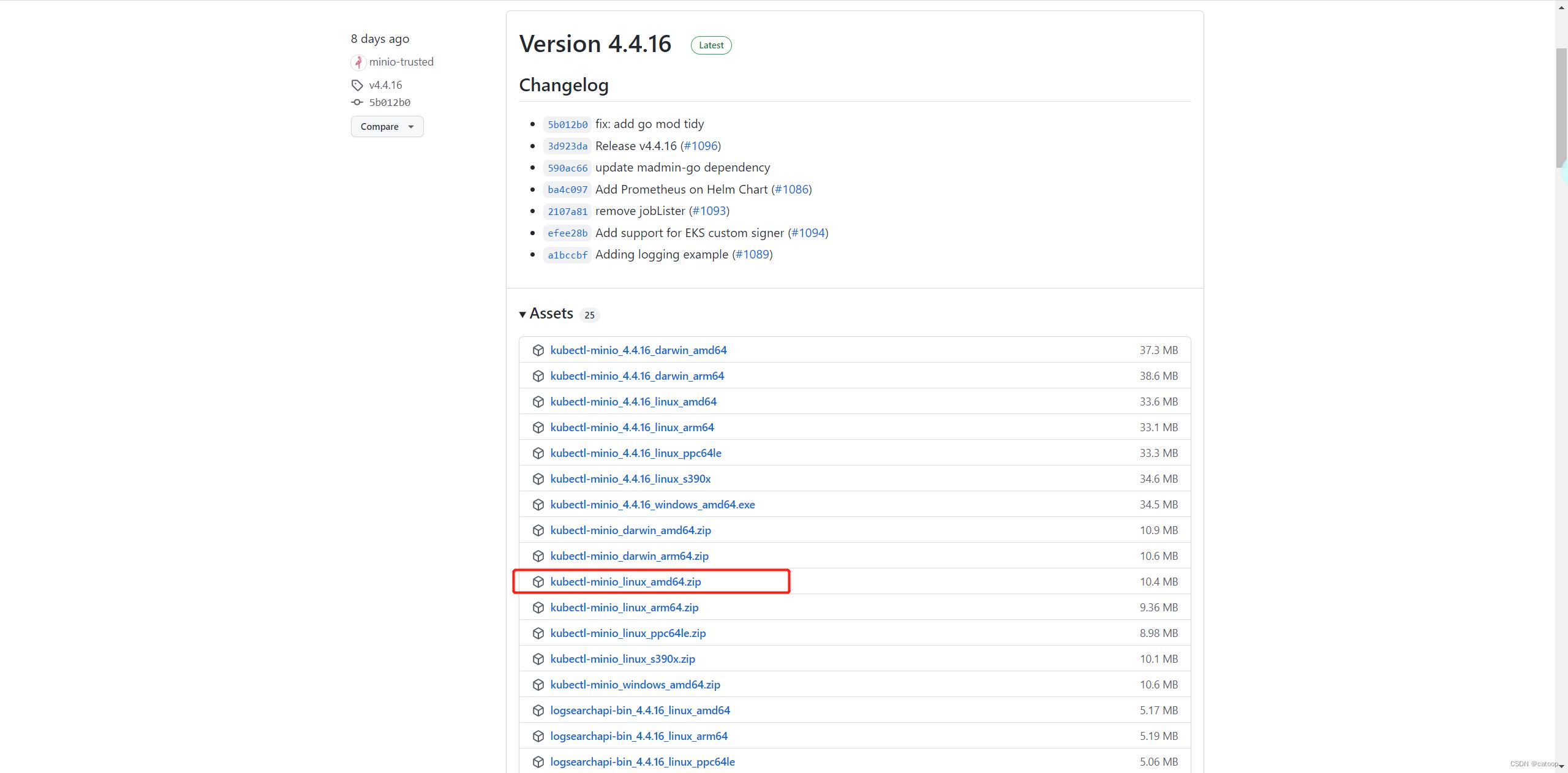

Note: here the kubectl-minio plugin archive had already been downloaded to a local server. You can also get the version you need from GitHub:

https://github.com/minio/operator/releases

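Recommendation: download the released zip archive, which contains a prebuilt binary, rather than building the plugin from source. Check the output of kubectl minio version. If it shows something like the following instead of a concrete version: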

[root@b125 ~]# kubectl minio version

DEVELOPMENT.GOGET

[root@b125 ~]#

then the later initialization and installation steps may fail, and the Operator console may be missing features once you open it.
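You can catch a development build right after installation with a small check. This is a minimal sketch in POSIX sh. It assumes the plugin prints a bare tag such as v4.4.16, as shown above. The function name is_release_version is hypothetical.

```shell
# Hypothetical helper: succeeds only if the argument looks like a release
# tag such as v4.4.16; fails for development builds (e.g. DEVELOPMENT.GOGET).
is_release_version() {
  printf '%s\n' "$1" | grep -Eq '^v[0-9]+\.[0-9]+\.[0-9]+$'
}

# Usage (requires the plugin on PATH):
#   if is_release_version "$(kubectl minio version)"; then
#     echo "release build"
#   else
#     echo "not a release build, reinstall from the release archive" >&2
#   fi
```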

2.1.2 Initialize the MinIO Operator

After the kubectl-minio plugin is installed, initialize the operator. This step uses the kubectl minio command to install the MinIO Operator service.

a. Run the following command to initialize:

kubectl minio init \
  --image=1xxxx/minio/operator:v4.4.16 \
  --console-image=xxxx/minio/console:v0.15.13

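Note: you can push the images to a local Harbor registry in advance. Having them locally saves time when you deploy in different environments. If the environment has Internet access, you can omit the --image and --console-image parameters.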
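The images can be mirrored into the local registry with docker. This is a minimal sketch with several assumptions:

- The upstream images are published on Docker Hub as minio/operator, minio/console and minio/minio.
- docker is installed, and you have already logged in to the registry.
- The registry address harbor.example.local is a hypothetical placeholder. Replace it with your own Harbor address.
- The function names mirror_target and mirror_image are also hypothetical.

```shell
# Hypothetical registry address: replace with your Harbor host/project path.
REGISTRY="harbor.example.local"

# Prints the name an upstream image gets in the local registry,
# e.g. minio/operator:v4.4.16 -> harbor.example.local/minio/operator:v4.4.16
mirror_target() {
  printf '%s/%s\n' "$2" "$1"
}

# Pulls an upstream image, retags it for the local registry and pushes it.
# Requires docker and a prior "docker login" to the registry.
mirror_image() {
  target=$(mirror_target "$1" "$2")
  docker pull "$1" && docker tag "$1" "$target" && docker push "$target"
}

# Usage (image tags taken from this article):
#   for img in minio/operator:v4.4.16 minio/console:v0.15.13 \
#              minio/minio:RELEASE.2022-04-16T04-26-02Z; do
#     mirror_image "$img" "$REGISTRY"
#   done
```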

Installation output:

[root@nccztsjb-node-23 ~]# kubectl minio init \
> --image=xxxx/minio/operator:v4.4.16 \
> --console-image=1xxxx/minio/console:v0.15.13

namespace/minio-operator created

serviceaccount/minio-operator created

clusterrole.rbac.authorization.k8s.io/minio-operator-role created

clusterrolebinding.rbac.authorization.k8s.io/minio-operator-binding created

customresourcedefinition.apiextensions.k8s.io/tenants.minio.min.io created

service/operator created

deployment.apps/minio-operator created

serviceaccount/console-sa created

clusterrole.rbac.authorization.k8s.io/console-sa-role created

clusterrolebinding.rbac.authorization.k8s.io/console-sa-binding created

configmap/console-env created

service/console created

deployment.apps/console created

-----------------

To open Operator UI, start a port forward using this command:

kubectl minio proxy -n minio-operator

-----------------

[root@nccztsjb-node-23 ~]#

b. Verify the installation. The MinIO Operator is installed in the minio-operator namespace:

[root@nccztsjb-node-23 ~]# kubectl get all -n minio-operator

NAME READY STATUS RESTARTS AGE

pod/console-7796fcb6c4-q8tf4 1/1 Running 0 64s

pod/minio-operator-5d95d44c5-lffkr 1/1 Running 0 64s

pod/minio-operator-5d95d44c5-q7zdv 1/1 Running 0 64s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/console ClusterIP 10.105.37.171 <none> 9090/TCP,9443/TCP 64s

service/operator ClusterIP 10.100.4.192 <none> 4222/TCP,4221/TCP 64s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/console 1/1 1 1 64s

deployment.apps/minio-operator 2/2 2 2 64s

NAME DESIRED CURRENT READY AGE

replicaset.apps/console-7796fcb6c4 1 1 1 64s

replicaset.apps/minio-operator-5d95d44c5 2 2 2 64s

[root@nccztsjb-node-23 ~]#

When all pods are in the Running state, the services are ready.
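The readiness check can also be scripted instead of done by eye. This is a minimal sketch. It assumes the default kubectl get pods column layout (NAME READY STATUS RESTARTS AGE). The helper name all_pods_ready is hypothetical. kubectl wait, shown in the usage comment, is an alternative that blocks until the pods report Ready.

```shell
# Hypothetical helper: reads "kubectl get pods --no-headers" output on stdin
# and succeeds only if there is at least one pod and every pod is Running
# with all of its containers ready (READY column n/n).
all_pods_ready() {
  awk '
    { split($2, r, "/"); if ($3 != "Running" || r[1] != r[2]) bad = 1; n++ }
    END { exit (n == 0 || bad) }
  '
}

# Usage:
#   kubectl get pods -n minio-operator --no-headers | all_pods_ready && echo "operator ready"
# Or block until ready with kubectl itself:
#   kubectl wait --for=condition=Ready pod --all -n minio-operator --timeout=300s
```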

c. Log in to the Operator console

kubectl minio proxy -n minio-operator

Output:

[root@nccztsjb-node-23 ~]# kubectl minio proxy -n minio-operator

Starting port forward of the Console UI.

To connect open a browser and go to http://localhost:9090

Current JWT to login: eyJhbGciOiJxxxxxx

Forwarding from 0.0.0.0:9090 -> 9090

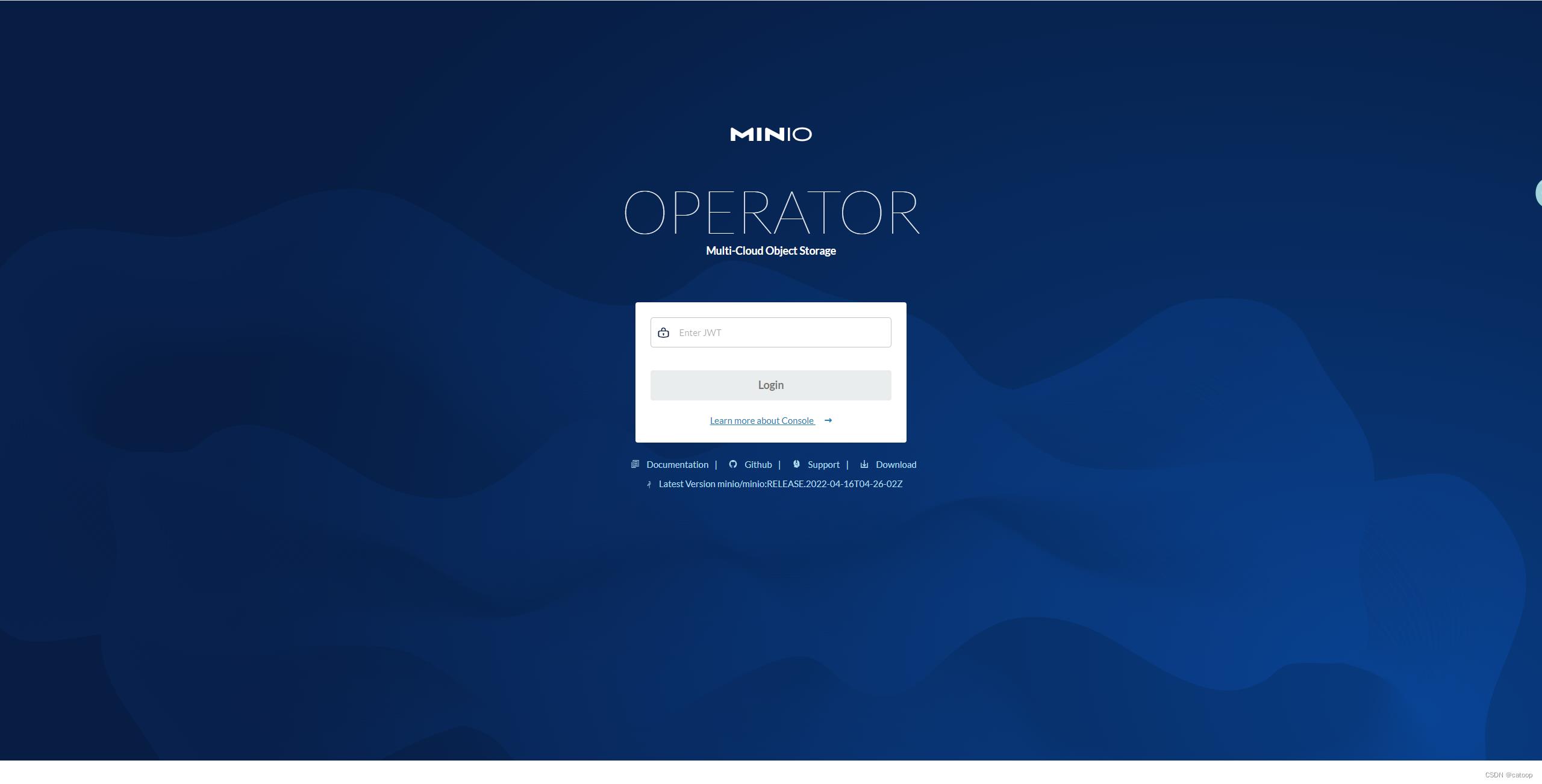

In a browser, open port 9090 on the host where you ran the command: http://<host IP>:9090

Enter the JWT. This is the token printed when the command above ran, shown here as xxxxxxxx.

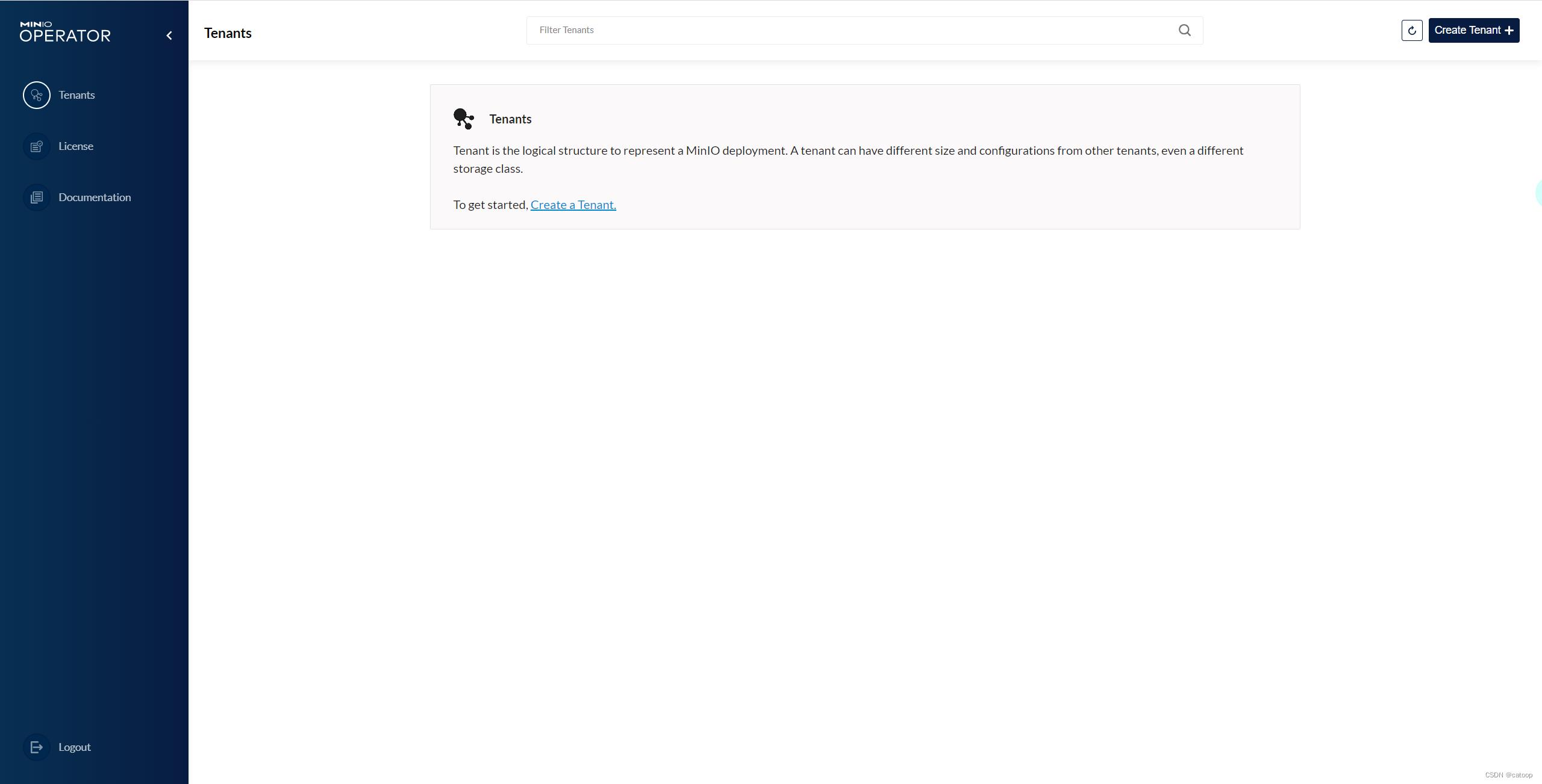

You are now logged in to the console. You could create a MinIO cluster from this UI, but this article deploys it from the command line, as described below.

2.2 Deploy the MinIO tenant cluster

The MinIO Operator and its plugin tool kubectl minio are now deployed. This part deploys the tenant cluster.

2.2.1 Create a StorageClass

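MinIO's local persistent storage is provided by PVs that are bound through a StorageClass. Create the PVs first. When you then create the MinIO tenant, it automatically creates PVCs, and this StorageClass binds them to the local PVs.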

Create the StorageClass with the following command:

kubectl apply -f - <<EOF
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: minio-local-storage
provisioner: kubernetes.io/no-provisioner
reclaimPolicy: Retain
volumeBindingMode: WaitForFirstConsumer
EOF

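Note: volumeBindingMode must be set to WaitForFirstConsumer. With this mode, a PVC is not bound to a PV until a pod that uses the PVC is scheduled. The PV is therefore chosen according to where its consumer runs, rather than arbitrarily.

With arbitrary binding, a pod can end up with PVs on different hosts and then fail to start.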

[root@nccztsjb-node-23 ~]# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

minio-local-storage kubernetes.io/no-provisioner Retain WaitForFirstConsumer false 28h

[root@nccztsjb-node-23 ~]#
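A script can verify the binding mode instead of reading the table. This is a minimal sketch. The helper name require_wffc is hypothetical. It reads the StorageClass's top-level volumeBindingMode field through kubectl's jsonpath output.

```shell
# Hypothetical helper: fails with a message unless the given
# volumeBindingMode value is WaitForFirstConsumer.
require_wffc() {
  if [ "$1" != "WaitForFirstConsumer" ]; then
    echo "volumeBindingMode is '$1', expected WaitForFirstConsumer" >&2
    return 1
  fi
}

# Usage:
#   require_wffc "$(kubectl get sc minio-local-storage -o jsonpath='{.volumeBindingMode}')"
```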

2.2.2 Create the PVs

MinIO persists its data on PVs of type local.

On each node, create the directories that will back the local PVs:

mkdir -p /data/minio/{pv1,pv2,pv3,pv4}

There are 3 nodes here with 4 PVs on each node, for 12 volumes in total.

Create the local PVs with the following command:

kubectl apply -f - <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nccztsjb-node-23-01
spec:
  capacity:
    storage: 10Gi
  volumeMode: Filesystem
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: minio-local-storage
  local:
    path: /data/minio/pv1
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - nccztsjb-node-23
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nccztsjb-node-23-02
spec:
  capacity:
    storage: 10Gi
  volumeMode: Filesystem
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: minio-local-storage
  local:
    path: /data/minio/pv2
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - nccztsjb-node-23
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nccztsjb-node-23-03
spec:
  capacity:
    storage: 10Gi
  volumeMode: Filesystem
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: minio-local-storage
  local:
    path: /data/minio/pv3
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - nccztsjb-node-23
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nccztsjb-node-23-04
spec:
  capacity:
    storage: 10Gi
  volumeMode: Filesystem
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: minio-local-storage
  local:
    path: /data/minio/pv4
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - nccztsjb-node-23
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nccztsjb-node-24-01
spec:
  capacity:
    storage: 10Gi
  volumeMode: Filesystem
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: minio-local-storage
  local:
    path: /data/minio/pv1
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - nccztsjb-node-24
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nccztsjb-node-24-02
spec:
  capacity:
    storage: 10Gi
  volumeMode: Filesystem
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: minio-local-storage
  local:
    path: /data/minio/pv2
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - nccztsjb-node-24
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nccztsjb-node-24-03
spec:
  capacity:
    storage: 10Gi
  volumeMode: Filesystem
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: minio-local-storage
  local:
    path: /data/minio/pv3
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - nccztsjb-node-24
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nccztsjb-node-24-04
spec:
  capacity:
    storage: 10Gi
  volumeMode: Filesystem
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: minio-local-storage
  local:
    path: /data/minio/pv4
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - nccztsjb-node-24
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nccztsjb-node-25-01
spec:
  capacity:
    storage: 10Gi
  volumeMode: Filesystem
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: minio-local-storage
  local:
    path: /data/minio/pv1
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - nccztsjb-node-25
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nccztsjb-node-25-02
spec:
  capacity:
    storage: 10Gi
  volumeMode: Filesystem
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: minio-local-storage
  local:
    path: /data/minio/pv2
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - nccztsjb-node-25
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nccztsjb-node-25-03
spec:
  capacity:
    storage: 10Gi
  volumeMode: Filesystem
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: minio-local-storage
  local:
    path: /data/minio/pv3
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - nccztsjb-node-25
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nccztsjb-node-25-04
spec:
  capacity:
    storage: 10Gi
  volumeMode: Filesystem
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: minio-local-storage
  local:
    path: /data/minio/pv4
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - nccztsjb-node-25
EOF

Each PV is of type local and is pinned to a specific node through node affinity.
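The twelve manifests differ only in node name and directory, so you can also generate them. This is a minimal sketch in POSIX sh. The function gen_pv_manifests and the variables NODES, PV_DIRS, PV_SIZE and STORAGE_CLASS are hypothetical names. Their values are copied from this article.

```shell
# Hypothetical layout variables, copied from this article; adjust as needed.
NODES="nccztsjb-node-23 nccztsjb-node-24 nccztsjb-node-25"
PV_DIRS="pv1 pv2 pv3 pv4"
PV_SIZE="10Gi"
STORAGE_CLASS="minio-local-storage"

# Hypothetical generator: prints one local PersistentVolume per node and
# directory, named <node>-01 .. <node>-04 like the hand-written YAML.
gen_pv_manifests() {
  for node in $NODES; do
    idx=0
    for dir in $PV_DIRS; do
      idx=$((idx + 1))
      num=$(printf '%02d' "$idx")
      cat <<EOF
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: ${node}-${num}
spec:
  capacity:
    storage: ${PV_SIZE}
  volumeMode: Filesystem
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: ${STORAGE_CLASS}
  local:
    path: /data/minio/${dir}
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - ${node}
EOF
    done
  done
}

# Usage: gen_pv_manifests | kubectl apply -f -
```

Adding a node or a disk then means changing one variable rather than copying another 20-line block.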

[root@nccztsjb-node-23 ~]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

nccztsjb-node-23-01 10Gi RWO Retain Available minio-local-storage 3s

nccztsjb-node-23-02 10Gi RWO Retain Available minio-local-storage 3s

nccztsjb-node-23-03 10Gi RWO Retain Available minio-local-storage 3s

nccztsjb-node-23-04 10Gi RWO Retain Available minio-local-storage 3s

nccztsjb-node-24-01 10Gi RWO Retain Available minio-local-storage 3s

nccztsjb-node-24-02 10Gi RWO Retain Available minio-local-storage 3s

nccztsjb-node-24-03 10Gi RWO Retain Available minio-local-storage 3s

nccztsjb-node-24-04 10Gi RWO Retain Available minio-local-storage 3s

nccztsjb-node-25-01 10Gi RWO Retain Available minio-local-storage 3s

nccztsjb-node-25-02 10Gi RWO Retain Available minio-local-storage 3s

nccztsjb-node-25-03 10Gi RWO Retain Available minio-local-storage 3s

nccztsjb-node-25-04 10Gi RWO Retain Available minio-local-storage 3s

[root@nccztsjb-node-23 ~]#

All 12 PVs are now created.

Important: storageClassName must be minio-local-storage, the StorageClass created in the first step. Otherwise, the PVCs will not bind to these PVs correctly when the cluster is created!
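Before you create the tenant, check that all the PVs are Available and use the right StorageClass. This is a minimal sketch. It reads kubectl custom-columns output in the form NAME STORAGECLASS STATUS. The helper name count_available_pvs is hypothetical. For this article's layout, the expected count is 12.

```shell
# Hypothetical helper: reads "NAME STORAGECLASS STATUS" lines on stdin and
# prints how many PVs of the given StorageClass are in phase Available.
count_available_pvs() {
  awk -v sc="$1" '$2 == sc && $3 == "Available" { n++ } END { print n + 0 }'
}

# Usage:
#   kubectl get pv --no-headers \
#     -o custom-columns=NAME:.metadata.name,SC:.spec.storageClassName,STATUS:.status.phase \
#     | count_available_pvs minio-local-storage
```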

2.2.3 Create a namespace

The MinIO tenant must be placed in a dedicated namespace:

kubectl create ns minio-tenant-1

2.2.4 Create the MinIO tenant cluster

With local storage in place, create the MinIO tenant cluster:

kubectl minio tenant create minio-tenant-1 \
  --servers 3 \
  --volumes 12 \
  --capacity 120Gi \
  --storage-class minio-local-storage \
  --namespace minio-tenant-1 \
  --image xxxxxx/minio/minio:RELEASE.2022-04-16T04-26-02Z

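This cluster has 3 nodes (--servers 3) and 12 volumes (--volumes 12). At 10Gi per PV, that gives a total capacity of 120Gi (--capacity 120Gi). Note that --storage-class must be minio-local-storage. If the environment has Internet access, you can also omit --image.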
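The sizing arithmetic can be checked before you run the command. Each volume gets capacity / volumes. With 120Gi over 12 volumes, that is 10Gi per volume. A PVC only binds to a PV whose capacity is at least the requested size, so this value must not exceed the 10Gi PVs created earlier.

This is a minimal sketch. The helper name tenant_volume_size_gi is hypothetical. It works in whole Gi. It also assumes every server gets the same number of volumes, as in this article's 3 × 4 layout. Uneven splits are simply rejected rather than rounded.

```shell
# Hypothetical helper. Arguments: servers volumes capacity_in_Gi.
# Prints the per-volume size in Gi, or fails if the layout does not split
# evenly (an assumption of this sketch, matching the 3 x 4 layout above).
tenant_volume_size_gi() {
  servers=$1 volumes=$2 capacity=$3
  if [ $((volumes % servers)) -ne 0 ]; then
    echo "volumes ($volumes) is not a multiple of servers ($servers)" >&2
    return 1
  fi
  if [ $((capacity % volumes)) -ne 0 ]; then
    echo "capacity (${capacity}Gi) does not divide evenly over $volumes volumes" >&2
    return 1
  fi
  echo $((capacity / volumes))
}

# Usage with this article's values (prints 10, i.e. 10Gi per volume):
#   tenant_volume_size_gi 3 12 120
```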

Output:

[root@nccztsjb-node-23 ~]# kubectl minio tenant create minio-tenant-1 \
> --servers 3 \
> --volumes 12 \
> --capacity 120Gi \
> --storage-class minio-local-storage \
> --namespace minio-tenant-1 \
> --image xxxx/minio/minio:RELEASE.2022-04-16T04-26-02Z

Tenant 'minio-tenant-1' created in 'minio-tenant-1' Namespace

Username: admin

Password: xxxxxxx-02a3-4366-a2a4-5b74cb26a185

Note: Copy the credentials to a secure location. MinIO will not display these again.

+-------------+------------------------+----------------+--------------+--------------+

| APPLICATION | SERVICE NAME | NAMESPACE | SERVICE TYPE | SERVICE PORT |

+-------------+------------------------+----------------+--------------+--------------+

| MinIO | minio | minio-tenant-1 | ClusterIP | 443 |

| Console | minio-tenant-1-console | minio-tenant-1 | ClusterIP | 9443 |

+-------------+------------------------+----------------+--------------+--------------+

[root@nccztsjb-node-23 ~]#

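The output includes the MinIO admin username and password. Store them somewhere safe, because MinIO does not display them again.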

Check the pod status:

[root@nccztsjb-node-23 ~]# kubectl get all -n minio-tenant-1

NAME READY STATUS RESTARTS AGE

pod/minio-tenant-1-ss-0-0 1/1 Running 0 43s

pod/minio-tenant-1-ss-0-1 1/1 Running 1 (35s ago) 43s

pod/minio-tenant-1-ss-0-2 1/1 Running 0 42s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/minio ClusterIP 10.104.251.7 <none> 443/TCP 43s

service/minio-tenant-1-console ClusterIP 10.102.87.98 <none> 9443/TCP 43s

service/minio-tenant-1-hl ClusterIP None <none> 9000/TCP 43s

NAME READY AGE

statefulset.apps/minio-tenant-1-ss-0 3/3 43s

[root@nccztsjb-node-23 ~]#

All pods are Running.

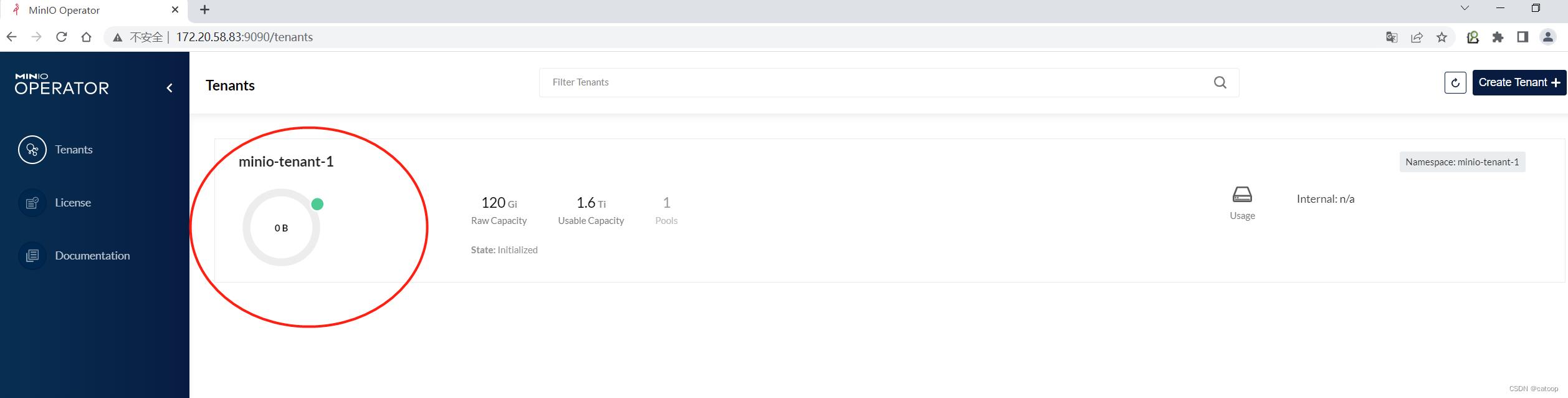

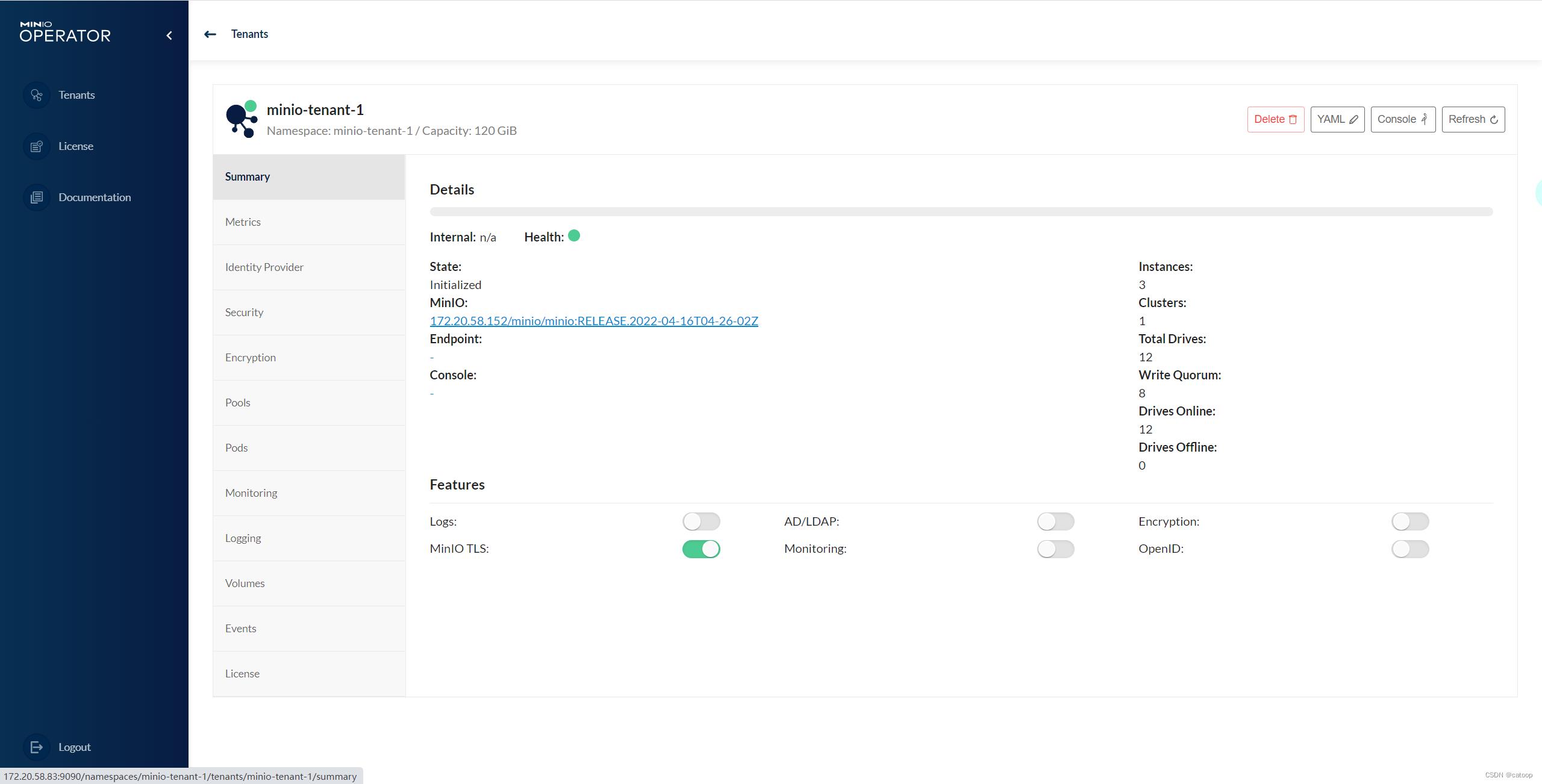

Also check the cluster status in the Operator console.

When the tenant shows as healthy, the cluster has been initialized and is working correctly.

That completes the installation of a MinIO cluster with the MinIO Operator.

The MinIO Operator also supports many cluster management operations.

3. Common kubectl minio operations

3.1 List the current tenants

[root@nccztsjb-node-23 ~]# kubectl minio tenant list

Tenant 'minio-tenant-1', Namespace 'minio-tenant-1', Total capacity 120 GiB

Current status: Initialized

MinIO version: 172.20.58.152/minio/minio:RELEASE.2022-04-16T04-26-02Z

[root@nccztsjb-node-23 ~]#

3.2 Delete the operator

kubectl minio delete

3.3 Delete a tenant

kubectl minio tenant delete minio-tenant-1 --namespace minio-tenant-1

3.4 Delete the PVCs and PVs

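kubectl delete pvc --all -n minio-tenant-1

kubectl delete pv --all

Note: PVs are cluster-scoped, so the -n option has no effect on them. kubectl delete pv --all deletes every PV in the cluster, not only the MinIO ones, so on a shared cluster delete the MinIO PVs by name instead. Because these PVs use the Retain reclaim policy, deleting them does not remove the data under /data/minio on the nodes (see section 4).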
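To remove only this deployment's PVs, you can select them by StorageClass name. This is a minimal sketch. The filter reads kubectl custom-columns output (NAME STORAGECLASS). The helper name pvs_in_storage_class is hypothetical. xargs -r is the GNU option that skips the command when there is no input.

```shell
# Hypothetical helper: reads "NAME STORAGECLASS" lines on stdin and prints
# the names of the PVs that use the given StorageClass.
pvs_in_storage_class() {
  awk -v sc="$1" '$2 == sc { print $1 }'
}

# Usage: delete only the PVs of minio-local-storage.
#   kubectl get pv --no-headers \
#     -o custom-columns=NAME:.metadata.name,SC:.spec.storageClassName \
#     | pvs_in_storage_class minio-local-storage \
#     | xargs -r kubectl delete pv
```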

3.5 Delete the namespace

kubectl delete ns minio-tenant-1

4. Troubleshooting

During deployment, the tenant cluster failed to start and kept reporting the following errors:

API: SYSTEM()

Time: 07:34:57 UTC 04/24/2022

Error: Detected unexpected disk ordering refusing to use the disk - poolID: 1st, found disk mounted at (set=1st, disk=3rd) expected mount at (set=1st, disk=9th): https://minio-tenant-1-pool-0-2.minio-tenant-1-hl.minio-tenant-1.svc.cluster.local:9000/export2(45e46618-72a1-41a4-99f5-91deec0b7208) (*errors.errorString)

1: cmd/erasure-sets.go:451:cmd.newErasureSets.func1.1()

API: SYSTEM()

Time: 07:34:57 UTC 04/24/2022

Error: Detected unexpected disk ordering refusing to use the disk - poolID: 1st, found disk mounted at (set=1st, disk=1st) expected mount at (set=1st, disk=7th): /export2(583c2c4a-05a5-4090-9957-89e3c1f3e622) (*errors.errorString)

1: cmd/erasure-sets.go:451:cmd.newErasureSets.func1.1()

API: SYSTEM()

Time: 07:34:57 UTC 04/24/2022

Error: Detected unexpected disk ordering refusing to use the disk - poolID: 1st, found disk mounted at (set=1st, disk=6th) expected mount at (set=1st, disk=3rd): https://minio-tenant-1-pool-0-2.minio-tenant-1-hl.minio-tenant-1.svc.cluster.local:9000/export0(ed1401e4-6ed9-48de-897f-baa0935a003a) (*errors.errorString)

1: cmd/erasure-sets.go:451:cmd.newErasureSets.func1.1()

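I searched for a long time without finding an answer. Then I realized that this was a test environment in which the PVs and PVCs had been bound and re-bound many times. Since a MinIO tenant is a stateful cluster, I suspected a connection.

The PVs use the Retain reclaim policy and a StorageClass with no provisioner, so deleting PVCs and PVs never cleans the host directories. Indeed, the directory on each node still contained MinIO system data (.minio.sys) from earlier deployments. Its format.json records each drive's position in the erasure set, which most likely no longer matched after the PVs were bound to different pods: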

[root@nccztsjb-node-23 data]# cd minio/

[root@nccztsjb-node-23 minio]# ls

pv1 pv2 pv3 pv4

[root@nccztsjb-node-23 minio]# cd pv1/

[root@nccztsjb-node-23 pv1]# ls

[root@nccztsjb-node-23 pv1]# ls -al

total 0

drwxrwsrwx 3 root 1000 24 Apr 24 10:52 .

drwxrwxrwx 6 root root 50 Apr 22 17:00 ..

drwxrwsrwx 9 adminuser 1000 124 Apr 25 10:36 .minio.sys

[root@nccztsjb-node-23 pv1]# cat .minio.sys/

cat: .minio.sys/: Is a directory

[root@nccztsjb-node-23 pv1]# ls

[root@nccztsjb-node-23 pv1]# cd .minio.sys/

[root@nccztsjb-node-23 .minio.sys]# ls

buckets config format.json ilm multipart pool.bin tmp tmp-old

[root@nccztsjb-node-23 .minio.sys]# cd ..

[root@nccztsjb-node-23 pv1]# ls

[root@nccztsjb-node-23 pv1]# pwd

/data/minio/pv1

[root@nccztsjb-node-23 pv1]#

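On every node, I deleted the /data/minio directory and re-created it along with its pv1 to pv4 subdirectories.

This solved the problem.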

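For test environments, I therefore recommend cleaning up the environment before every deployment to avoid this and other unexpected problems.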
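The cleanup can be scripted for each node. This is a minimal sketch, assuming GNU or busybox find (for -mindepth/-maxdepth) and the /data/minio/pv1 to pv4 layout used above. The function names find_stale_minio_dirs and reset_minio_dirs are hypothetical. reset_minio_dirs is destructive: it deletes all data under the given directory.

```shell
# Hypothetical helper: prints each PV directory directly under the given
# base that still holds MinIO metadata (.minio.sys) from an earlier deployment.
find_stale_minio_dirs() {
  find "$1" -mindepth 2 -maxdepth 2 -type d -name .minio.sys -exec dirname {} \;
}

# Hypothetical helper: deletes the base directory and re-creates empty
# pv1..pv4 below it. DESTRUCTIVE: all data under the base is lost.
reset_minio_dirs() {
  base=$1
  [ -n "$base" ] || return 1   # refuse to run with an empty path
  rm -rf "$base"
  for i in 1 2 3 4; do
    mkdir -p "$base/pv$i"
  done
}

# Usage on every node, before redeploying the tenant:
#   find_stale_minio_dirs /data/minio
#   reset_minio_dirs /data/minio
```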

Reposted from: https://www.cnblogs.com/chuanzhang053/p/16190774.html

(END)
