COCO_03 制作COCO格式数据集 dataset 与 dataloader

Posted LiQiang33

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了COCO_03 制作COCO格式数据集 dataset 与 dataloader相关的知识,希望对你有一定的参考价值。

文章目录

1 引言

在之前的文章中,我们认识了COCO数据集的基本格式https://blog.csdn.net/qq_44776065/article/details/128695821和制作了分割数据集 制作COCO格式目标检测和分割数据集https://blog.csdn.net/qq_44776065/article/details/128697177,那么接下来如何读取数据集,并展示结果呢?接下来我们解决这个问题

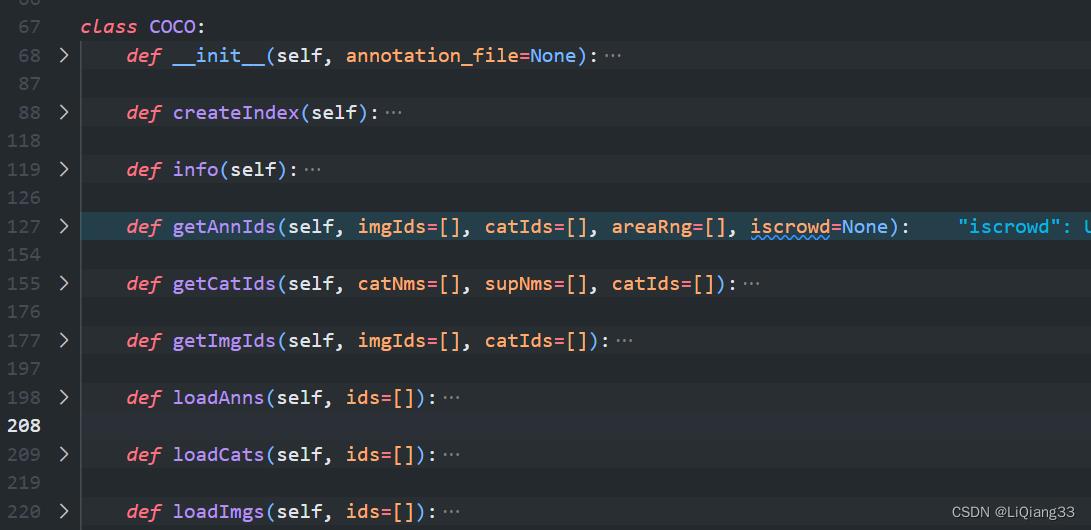

2 pycocotools介绍

pycocotools是官方给出的解析COCO格式数据集的API,帮助我们对COCO格式数据集进行操作,官方API:https://github.com/cocodataset/cocoapi,在PythonAPI中有Demo,可以下载后运行

安装pycocotools(本人安装时Linux和windows都可使用)

pip install pycocotools

重要属性

- 图片的字典信息:

coco.imgs - 标注的字典信息:

coco.anns - 类别的字典信息:

coco.cats

重要API,get与load:

基本思想:先获取ID,再加载信息

获取ID:

- 获取所有图片的ID:

getImgIds()指定ID回返回指定的ID - 根据

imgIds和catIds获取标注ID:getAnnIds(imgIds=[],catsIds=[]) - 获取类别ID:

getCatIds()

加载信息:

- 加载图片信息

loadImgs(img_id),获取的是字典信息,获取路径信息为:loadImgs(img_id)[0]["file_name"] - 加载标注信息

loadAnns(ann_ids),ann_ids来自筛选的的标注id - 加载类别信息

loadCats(cat_id)

例子:初始化COCO对象,并获取图片ID

from pycocotools.coco import COCO

import os

dataset_root = "D:MyDataset/my_coco"

anno_file = "my_annotations.json"

anno_path = os.path.join(dataset_root, anno_file)

anno = COCO(anno_path)

image_ids = anno.getImgIds()

3 Dataset 构建

基本流程:

- 初始化:初始化

COCO数据集,并获取所有图片的ID - 获取图片信息,根据

index获取图片ID,再根据图片ID(或者类别ID)获取标注ID - 获取标注信息,根据

标注ID,加载标注信息 - 对标注信息进行处理,转化为

tensor

初始化:

from PIL import Image

from torch.utils.data.dataset import Dataset

from torch.utils.data.dataloader import DataLoader

from pycocotools.coco import COCO

from utils import convert_coco_poly_mask, draw_gt

import utils.coco_transform as coco_transform

class SegDatasetCOCO(Dataset):

def __init__(self, dataset_root, p_anno_filename, category, transforms) -> None:

super(SegDatasetCOCO).__init__()

# 根据数据集和p_dir获取标注文件

assert os.path.exists(dataset_root), "0 does not exists".format(dataset_root)

anno_root = os.path.join(dataset_root, p_anno_filename)

self.patient_dir = p_anno_filename[0: -17]

self.transforms = transforms

self.category = category

# 加载COCO数据

self.anno = COCO(annotation_file=anno_root)

# 获取其中的数据

# self.ids = list(self.anno.imgs.keys())

self.ids = self.anno.getImgIds()

self.dataset_root = dataset_root

# 输出目录信息

print(f"Dataset Info Name: self.patient_dir")

print(f"Dataset Info dataset len: len(self.ids)")

获取单个batch:

def __getitem__(self, index):

# 获取图片和标注

img_id = self.ids[index]

# 读取图片

filename = self.anno.loadImgs(img_id)[0]["file_name"]

filepath = os.path.join(self.dataset_root, filename)

images = Image.open(filepath).convert("L")

# 获取标注

w, h = images.size

# 根据图片ID和类别ID获取标注ID

anno_ids = self.anno.getAnnIds(imgIds=img_id, catIds=self.category)

coco_targets = self.anno.loadAnns(anno_ids)

# # 选择标签

# coco_targets = [item for item in coco_targets if item["category_id"] == self.category]

target = self.parse_targets(img_id=img_id, coco_targets=coco_targets, w=w, h=h)

# 返回处理后的数据

if self.transforms is not None:

images, target = self.transforms(images, target)

return images, target

对标注信息处理:

def parse_targets(self,

img_id: int,

coco_targets: list,

w: int = None,

h: int = None):

assert w > 0, "w 不合法"

assert h > 0, "h 不合法"

# 只筛选出单个对象的情况

anno = [obj for obj in coco_targets if obj['iscrowd'] == 0]

boxes = [obj["bbox"] for obj in anno]

# 转化为tensor格式, box的格式: [xmin, ymin, w, h] -> [xmin, ymin, xmax, ymax]

boxes = torch.as_tensor(boxes, dtype=torch.float32).reshape(-1, 4)

boxes[:, 2:] += boxes[:, :2]

boxes[:, 0::2].clamp_(min=0, max=w)

boxes[:, 1::2].clamp_(min=0, max=h)

# 类别标签

classes = [obj["category_id"] for obj in anno]

classes = torch.tensor(classes, dtype=torch.int64)

# 面积

area = torch.tensor([obj["area"] for obj in anno])

iscrowd = torch.tensor([obj["iscrowd"] for obj in anno])

# 分割标签转化为图片

segmentations = [obj["segmentation"] for obj in anno]

masks = convert_coco_poly_mask(segmentations, h, w)

# 筛选出合法的目标,即 x_max>x_min 且 y_max>y_min

keep = (boxes[:, 3] > boxes[:, 1]) & (boxes[:, 2] > boxes[:, 0])

boxes = boxes[keep]

classes = classes[keep]

masks = masks[keep]

area = area[keep]

iscrowd = iscrowd[keep]

target =

target["boxes"] = boxes

target["labels"] = classes

target["masks"] = masks

target["image_id"] = torch.tensor([img_id])

target["area"] = area

target["iscrowd"] = iscrowd

return target

4 Dataloader 构建

创建Dataset与DataLoader

dataset_root = r"D:\\Learning\\OCT\\oct-dataset-master\\dataset\\dataset_stent_coco"

p_anno_filename = "P9_1_IMG002_annotations.json"

category = 2

transforms = coco_transform.Compose([coco_transform.ToTensor()])

dataset = SegDatasetCOCO(

dataset_root=dataset_root,

p_anno_filename=p_anno_filename,

category=category,

transforms=transforms

)

dataset_loader = DataLoader(

dataset=dataset,

batch_size=1,

shuffle=False,

collate_fn=dataset.collate_fn

)

4.1 解决batch中tensor维度不一致的打包问题

数据集读取需要特殊处理,原因是默认的batch组装无法将结果进行打包,原因是每一张图片的mask的维度不一致,根据目标的个数确定mask的个数

@staticmethod

def collate_fn(batch):

return tuple(zip(*batch))

4.2 collate_fn()函数分析

batch数据格式,数据均为tensor:

image, "bbox": [[1, 2, ,3 4], ...], "classes": [1, ...], "mask": [[[1,0, 0], [0, 0, 0], [1, 1, 1,1]], ...], "area": [100.0, ...]

原理分析:

if __name__ == "__main__":

a1 = ["a", [1, 2, 3]]

a2 = ["b", [3, 4]] # 第二个的元素维度不一致

b = [a1, a2]

c = zip(*(b))

for i in c:

print(i)

pass

# ('a', 'b')

# ([1, 2, 3], [3, 4])

使用*解开a迭代器, 将维度不一致的当作一个元素, 使用zip将两个迭代器对应位置的元素进行组合, 完成batch的合并

如果有不同类的元素

if __name__ == "__main__":

a1 = ["a", [1, 2, 3]]

a2 = ["b", [3, 4]]

a3 = ["c", "array": [5, 6]]

b = [a1, a2, a3]

c = tuple(zip(*(b)))

for i in c:

print(i)

pass

# ('a', 'b', 'c')

# ([1, 2, 3], [3, 4], 'array': [5, 6])

即使多个batch中有不同的元素,这样的情况一般不会出现,常常出现的问题是batch中某个数据维度不一致

5 绘制预测结果或GT

预测结果和GT文件的数据类型基本一致,不同的是模型会输出一定的置信度

在实际的项目中,预测结果会按照置信度进行筛选,之后在进行绘制,置信度在 0 - 1之间

完整代码:

需要将数据类型转换为ndarray之后进行绘制

if __name__ == "__main__":

dataset_root = r"D:\\Learning\\OCT\\oct-dataset-master\\dataset\\dataset_stent_coco"

p_anno_filename = "P9_1_IMG002_annotations.json"

category = 2

transforms = coco_transform.Compose([coco_transform.ToTensor()])

dataset = SegDatasetCOCO(

dataset_root=dataset_root,

p_anno_filename=p_anno_filename,

category=category,

transforms=transforms

)

dataset_loader = DataLoader(

dataset=dataset,

batch_size=1,

shuffle=False,

collate_fn=dataset.collate_fn

)

for i, (image, predictions) in enumerate(dataset_loader):

if i == 1:

break

# image = np.uint8(image[0].permute(1, 2, 0).cpu().numpy() * 255) # RGB图像

# 获取的都是batch中的第一个

# 获取图片,图片的类型是RGB图像

image = np.uint8(torch.squeeze(torch.squeeze(image[0], dim=0), dim=0).cpu().numpy() * 255) # 灰度图

image = Image.fromarray(image).convert("RGB") # 绘制灰度图必须保证图像的指定的颜色于其对应

# 将数据都转化为numpy

predict_boxes = predictions[0]["boxes"].to("cpu").numpy() # [[x_min, y_min, x_max, y_max], ...]

predict_classes = predictions[0]["labels"].to("cpu").numpy() # [1, ...] 类别

predict_scores = np.ones(len(predict_boxes)) # [0.9, ...]

predict_mask = predictions[0]["masks"].to("cpu").numpy() # [[[1, 1, 1], [1, 1, 1], [0, 0, 0]], ...]

# 定义总共的类别

category_index =

"1": "cerebral",

"2": "stent"

# 绘制gt

plot_img = draw_gt(image=image,

boxes=predict_boxes,

classes=predict_classes,

scores=predict_scores,

masks=predict_mask,

category_index=category_index,

line_thickness=2,

font='arial.ttf',

font_size=13)

# plt.imshow(plot_img)

# plt.show()

# 保存预测的图片结果

plot_img.save(f"./test/test_gt_catscategory_p_anno_filename[0: -17]_predictions[0]['image_id'].item().jpg")

Appendix

A. convert_coco_poly_mask

- 使用

coco_mask将polygon信息转化为rle格式,关于RLE格式,参考:<https: > - 对rle格式进行进行解码,转换为图片

mask - 保证

mask维度为3,为打包成batch准备,batch中图片格式:B, C, W, H

from pycocotools import mask as coco_mask

def convert_coco_poly_mask(segmentations, height, width):

masks = []

for polygons in segmentations:

rles = coco_mask.frPyObjects(polygons, height, width)

mask = coco_mask.decode(rles)

if len(mask.shape) < 3:

mask = mask[..., None]

mask = torch.as_tensor(mask, dtype=torch.uint8) # 维度w为[h, w, c]

mask = mask.any(dim=2) # >1 赋值为 True =0为False, 维度将为[h, w]

masks.append(mask)

if masks:

masks = torch.stack(masks, dim=0)

else:

# 如果mask为空,则说明没有目标,直接返回数值为0的mask

masks = torch.zeros((0, height, width), dtype=torch.uint8)

return masks

B. COCO_Transform

再次封装torchvision.transforms.ToTensor等函数,从而对image和target同时处理

import random

from torchvision.transforms import functional as F

class Compose(object):

"""组合多个transform函数"""

def __init__(self, transforms):

self.transforms = transforms

def __call__(self, image, target):

for t in self.transforms:

image, target = t(image, target)

return image, target

class ToTensor(object):

"""将PIL图像转为Tensor"""

def __call__(self, image, target):

image = F.to_tensor(image)

return image, target

class RandomHorizontalFlip(object):

"""随机水平翻转图像以及bboxes"""

def __init__(self, prob=0.5):

self.prob = prob

def __call__(self, image, target):

if random.random() < self.prob:

height, width = image.shape[-2:]

image = image.flip(-1) # 水平翻转图片

bbox = target["boxes"]

# bbox: xmin, ymin, xmax, ymax

bbox[:, [0, 2]] = width - bbox[:, [2, 0]] # 翻转对应bbox坐标信息

target["boxes"] = bbox

if "masks" in target:

target["masks"] = target["masks"].flip(-1)

return image, target

C. DrawGT

根据draw_boxes_on_image 或者 draw_masks_on_image 来选择绘制类型

目标检测框bbox采用line进行绘制

def draw_gt(image: Image,

boxes: np.ndarray = None,

classes: np.ndarray = None,

scores: np.ndarray = None,

masks: np.ndarray = None,

category_index: dict = None,

box_thresh: float = 0.1,

mask_thresh: float = 0.5,

line_thickness: int = 8,

font: str = 'arial.ttf',

font_size: int = 24,

draw_boxes_on_image: bool = True,

draw_masks_on_image: bool = True) -> Image:

"""

将目标边界框信息,类别信息,mask信息绘制在图片上

Args:

image: 需要绘制的图片

boxes: 目标边界框信息

classes: 目标类别信息

scores: 目标概率信息

masks: 目标mask信息

category_index: 类别与名称字典

box_thresh: 过滤的概率阈值

mask_thresh: 绘制框的置信度阈值, gt为1

line_thickness: 边界框宽度

font: 字体类型

font_size: 字体大小

draw_boxes_on_image:

draw_masks_on_image:

Returns:

"""

# 过滤掉低概率的目标

idxs = np.greater(scores, box_thresh)

boxes = boxes[idxs]

classes = classes[idxs]

scores = scores[idxs]

if masks is not以上是关于COCO_03 制作COCO格式数据集 dataset 与 dataloader的主要内容,如果未能解决你的问题,请参考以下文章