ElasticSearch——手写一个ElasticSearch分词器(附源码)

Posted 止步前行

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了ElasticSearch——手写一个ElasticSearch分词器(附源码)相关的知识,希望对你有一定的参考价值。

1. 分词器插件

ElasticSearch提供了对文本内容进行分词的插件系统,对于不同的语言的文字分词器,规则一般是不一样的,而ElasticSearch提供的插件机制可以很好的集成各语种的分词器。

Elasticsearch 本身并不支持中文分词,但好在它支持编写和安装额外的分词管理插件,而开源的中文分词器 ik 就非常强大,具有20万以上的常用词库,可以满足一般的常用分词功能。

1.1 分词器插件作用

分词器的主要作用是把文本拆分成一个个最小粒度的单词,然后给ElasticSearch作为索引系统的词条使用。不同语种拆分单词规则也是不一样的,最常见的就是中文分词和英文分词。

对于同一个文本,使用不同分词器,拆分的效果也是不同的。如:"中国人民共和国"使用ik_max_word分词器会被拆分成:中国人民共和国、中华人民、中华、华人、人民共和国、人民、共和国、共和、国,而使用standard分词器则会拆分成:中、国、人、民、共、和、国。被拆分后的词就可以作为ElasticSearch的索引词条来构建索引系统,这样就可以使用部分内容进行搜索了

2. 常用分词器

2.1 分词器介绍

-

standard

标准分词器。处理英文能力强, 会将词汇单元转换成小写形式,并去除停用词和标点符号,对于非英文按单字切分

-

whitespace

空格分词器。 针对英文,仅去除空格,没有其他任何处理, 不支持非英文

-

simple

针对英文,通过非字母字符分割文本信息,然后将词汇单元统一为小写形式,数字类型的字符会被去除

-

stop

stop的功能超越了simple,stop在simple的基础上增加了去除英文中的常用单词(如the,a等),也可以更加自己的需要设置常用单词,不支持中文 -

keyword

Keyword把整个输入作为一个单独词汇单元,不会对文本进行任何拆分,通常是用在邮政编码、电话号码等需要全匹配的字段上 -

pattern

查询文本会被自动当做正则表达式处理,生成一组

terms关键字,然后在对Elasticsearch进行查询 -

snowball

雪球分析器,在

standard的基础上添加了snowball filter,Lucene官方不推荐使用 -

language

一个用于解析特殊语言文本的

analyzer集合,但不包含中文 -

ik

IK分词器是一个开源的基于java语言开发的轻量级的中文分词工具包。 采用了特有的“正向迭代最细粒度切分算法”,支持细粒度和最大词长两种切分模式。支持:英文字母、数字、中文词汇等分词处理,兼容韩文、日文字符。 同时支持用户自定义词库。 它带有两个分词器:ik_max_word: 将文本做最细粒度的拆分,尽可能多的拆分出词语ik_smart:做最粗粒度的拆分,已被分出的词语将不会再次被其它词语占有

-

pinyin

通过用户输入的拼音匹配

Elasticsearch中的中文

2.2 分词器示例

对于同一个输入,使用不同分词器的结果。

输入:栖霞站长江线14w6号断路器

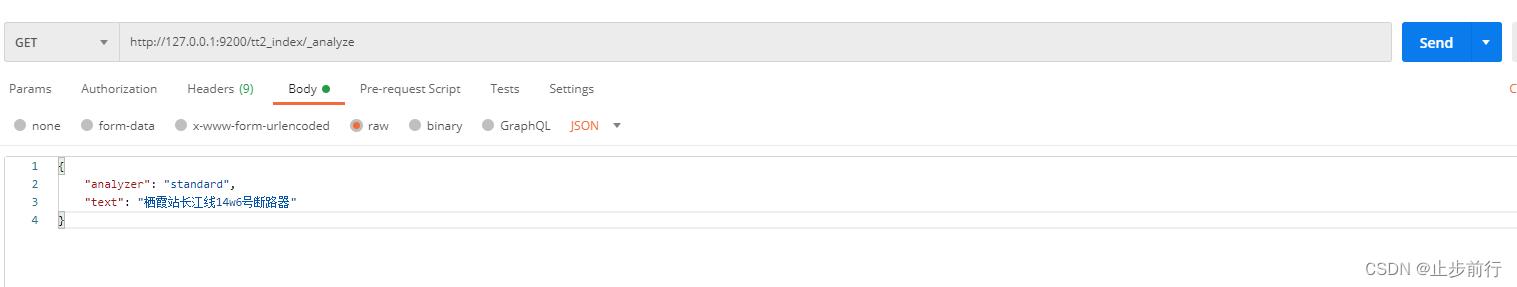

2.2.1 standard

"tokens": [

"token": "栖",

"start_offset": 0,

"end_offset": 1,

"type": "<IDEOGRAPHIC>",

"position": 0

,

"token": "霞",

"start_offset": 1,

"end_offset": 2,

"type": "<IDEOGRAPHIC>",

"position": 1

,

"token": "站",

"start_offset": 2,

"end_offset": 3,

"type": "<IDEOGRAPHIC>",

"position": 2

,

"token": "长",

"start_offset": 3,

"end_offset": 4,

"type": "<IDEOGRAPHIC>",

"position": 3

,

"token": "江",

"start_offset": 4,

"end_offset": 5,

"type": "<IDEOGRAPHIC>",

"position": 4

,

"token": "线",

"start_offset": 5,

"end_offset": 6,

"type": "<IDEOGRAPHIC>",

"position": 5

,

"token": "14w6",

"start_offset": 6,

"end_offset": 10,

"type": "<ALPHANUM>",

"position": 6

,

"token": "号",

"start_offset": 10,

"end_offset": 11,

"type": "<IDEOGRAPHIC>",

"position": 7

,

"token": "断",

"start_offset": 11,

"end_offset": 12,

"type": "<IDEOGRAPHIC>",

"position": 8

,

"token": "路",

"start_offset": 12,

"end_offset": 13,

"type": "<IDEOGRAPHIC>",

"position": 9

,

"token": "器",

"start_offset": 13,

"end_offset": 14,

"type": "<IDEOGRAPHIC>",

"position": 10

]

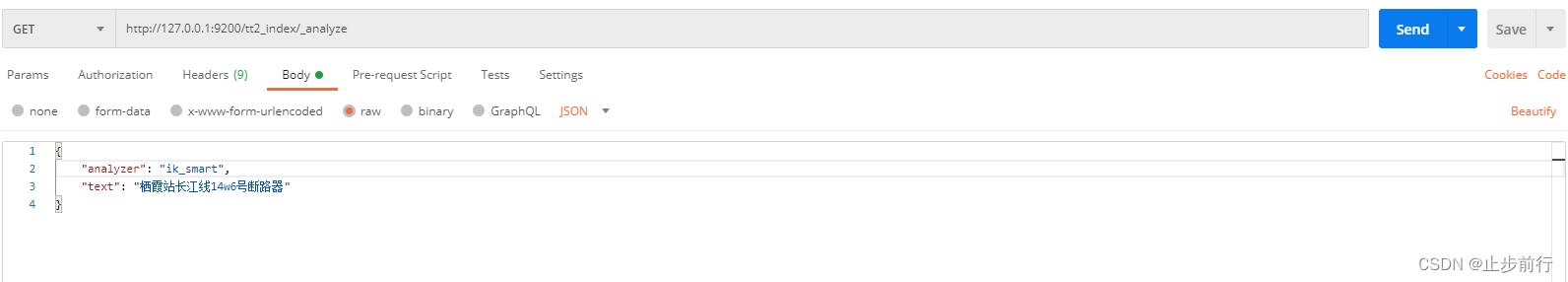

2.2.2 ik

ik_smart

"tokens": [

"token": "栖霞",

"start_offset": 0,

"end_offset": 2,

"type": "CN_WORD",

"position": 0

,

"token": "站",

"start_offset": 2,

"end_offset": 3,

"type": "CN_CHAR",

"position": 1

,

"token": "长江",

"start_offset": 3,

"end_offset": 5,

"type": "CN_WORD",

"position": 2

,

"token": "线",

"start_offset": 5,

"end_offset": 6,

"type": "CN_CHAR",

"position": 3

,

"token": "14w6",

"start_offset": 6,

"end_offset": 10,

"type": "LETTER",

"position": 4

,

"token": "号",

"start_offset": 10,

"end_offset": 11,

"type": "COUNT",

"position": 5

,

"token": "断路器",

"start_offset": 11,

"end_offset": 14,

"type": "CN_WORD",

"position": 6

]

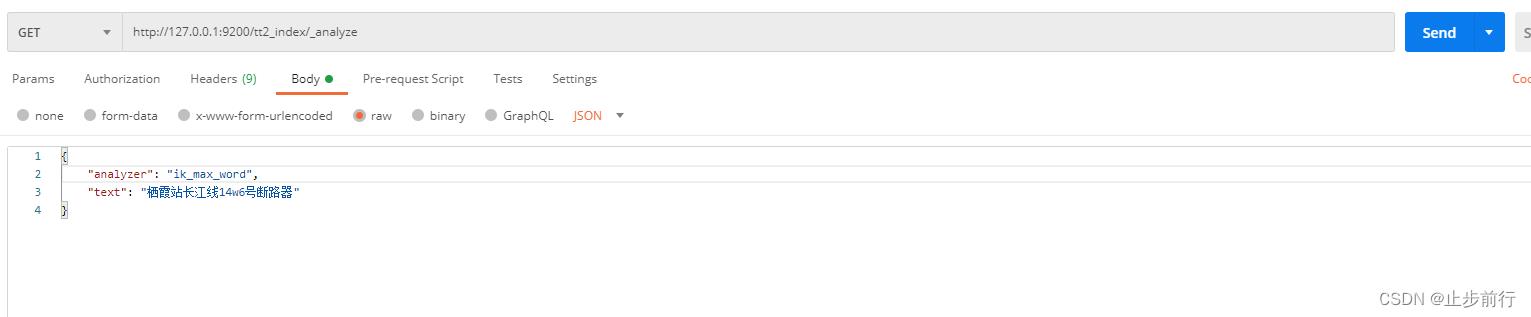

ik_max_word

"tokens": [

"token": "栖霞",

"start_offset": 0,

"end_offset": 2,

"type": "CN_WORD",

"position": 0

,

"token": "站长",

"start_offset": 2,

"end_offset": 4,

"type": "CN_WORD",

"position": 1

,

"token": "长江",

"start_offset": 3,

"end_offset": 5,

"type": "CN_WORD",

"position": 2

,

"token": "线",

"start_offset": 5,

"end_offset": 6,

"type": "CN_CHAR",

"position": 3

,

"token": "14w6",

"start_offset": 6,

"end_offset": 10,

"type": "LETTER",

"position": 4

,

"token": "14",

"start_offset": 6,

"end_offset": 8,

"type": "ARABIC",

"position": 5

,

"token": "w",

"start_offset": 8,

"end_offset": 9,

"type": "ENGLISH",

"position": 6

,

"token": "6",

"start_offset": 9,

"end_offset": 10,

"type": "ARABIC",

"position": 7

,

"token": "号",

"start_offset": 10,

"end_offset": 11,

"type": "COUNT",

"position": 8

,

"token": "断路器",

"start_offset": 11,

"end_offset": 14,

"type": "CN_WORD",

"position": 9

,

"token": "断路",

"start_offset": 11,

"end_offset": 13,

"type": "CN_WORD",

"position": 10

,

"token": "器",

"start_offset": 13,

"end_offset": 14,

"type": "CN_CHAR",

"position": 11

]

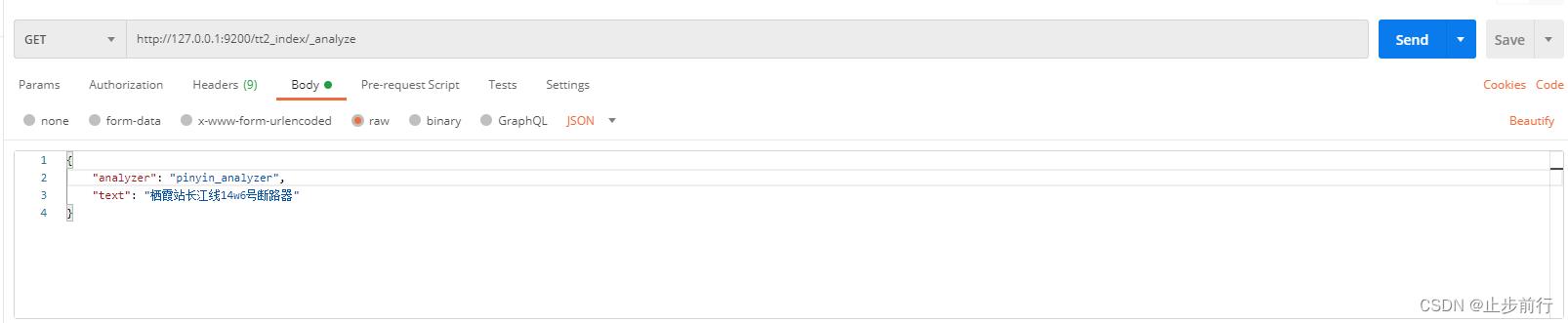

2.2.3 pinyin

"tokens": [

"token": "q",

"start_offset": 0,

"end_offset": 0,

"type": "word",

"position": 0

,

"token": "qi",

"start_offset": 0,

"end_offset": 0,

"type": "word",

"position": 0

,

"token": "栖霞站长江线14w6号断路器",

"start_offset": 0,

"end_offset": 0,

"type": "word",

"position": 0

,

"token": "qixiazhanzhangjiangxian14w6haoduanluqi",

"start_offset": 0,

"end_offset": 0,

"type": "word",

"position": 0

,

"token": "qxzzjx14w6hdlq",

"start_offset": 0,

"end_offset": 0,

"type": "word",

"position": 0

,

"token": "x",

"start_offset": 0,

"end_offset": 0,

"type": "word",

"position": 1

,

"token": "xia",

"start_offset": 0,

"end_offset": 0,

"type": "word",

"position": 1

,

"token": "z",

"start_offset": 0,

"end_offset": 0,

"type": "word",

"position": 2

,

"token": "zhan",

"start_offset": 0,

"end_offset": 0,

"type": "word",

"position": 2

,

"token": "zhang",

"start_offset": 0,

"end_offset": 0,

"type": "word",

"position": 3

,

"token": "j",

"start_offset": 0,

"end_offset": 0,

"type": "word",

"position": 4

,

"token": "jiang",

"start_offset": 0,

"end_offset": 0,

"type": "word",

"position": 4

,

"token": "xian",

"start_offset": 0,

"end_offset": 0,

"type": "word",

"position": 5

,

"token": "14",

"start_offset": 0,

"end_offset": 0,

"type": "word",

"position": 6

,

"token": "w",

"start_offset": 0,

"end_offset": 0,

"type": "word",

"position": 7

,

"token": "6",

"start_offset": 0,

"end_offset": 0,

"type": "word",

"position": 8

,

"token": "h",

"start_offset": 0,

"end_offset": 0,

"type": "word",

"position": 9

,

"token": "hao",

"start_offset": 0,

"end_offset": 0,

"type": "word",

"position": 9

,

"token": "d",

"start_offset": 0,

"end_offset": 0,

"type": "word",

"position": 10

,

"token": "duan",

"start_offset": 0,

"end_offset": 0,

"type": "word",

"position": 10

,

"token": "l",

"start_offset": 0,

"end_offset": 0,

"type": "word",

"position": 11

,

"token": "lu",

"start_offset": 0,

"end_offset": 0,

"type": "word",

"position": 11

]

3. 自定义分词器

对于上面三种分词器的效果,在某些场景下可能都符合要求,下面来看看为什么需要自定义分词器。

众所周知,在推荐系统中,对于拼音搜索是很有必要的,比如输入:"ls",希望返回与"ls"相关的索引词条,“零食(ls)”、“雷蛇(ls)”、“林书豪(lsh)”……,上面都是对的情况,但如果此处仅仅使用拼音分词器,可会"l"相关的索引词条也会被命中,比如"李宁(l)"、“兰蔻(l)”……,这种情况下的推荐就是不合理的。

如果使用拼音分词器,对于上面的输入"栖霞站长江线14w6号断路器",会产生出很多单个字母的索引词条,比如:"h","d","l"等。如果用户输入的查询条件"ql",根本不想看到这条”栖霞站长江线14w6号断路器“数据ÿ

以上是关于ElasticSearch——手写一个ElasticSearch分词器(附源码)的主要内容,如果未能解决你的问题,请参考以下文章