How to Notice the Other Person's Emotional Changes When You Get Carried Away

Posted by 扬志九洲


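This project recognizes facial expressions, which can then be used to read a person's emotions.

I. Project Background

After studying the psychology of micro-expressions, I came to appreciate how much reading facial expressions reveals about a person's emotional changes, which gave me the idea for this project.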

Load the required libraries

```python
import os

import cv2
import matplotlib.pyplot as plt
import numpy as np
import paddle
from paddle.vision.datasets import DatasetFolder
from paddle.vision.models import resnet50
```

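Define parameters

```python
train_file = 'train'  # training images, one sub-folder per expression
valid_file = 'valid'  # validation images, same layout
test_file = 'test'    # images for prediction, read directly from this folder
imagesize = 32        # every image is resized to imagesize x imagesize
batch_size = 32
lr = 1e-5             # learning rate for Adam
```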

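II. Dataset

This project uses the fer2013 dataset. The data has already been split into a training set and a validation set, and the images for each expression are stored in separate folders. The labels map to expressions as follows: 0 anger; 1 disgust; 2 fear; 3 happy; 4 sad; 5 surprised; 6 normal (neutral).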
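`DatasetFolder` does not read these numbers from anywhere: it collects the sub-folder names, sorts them, and numbers them in that order. The standard-library sketch below reproduces that rule so you can check whether the learned indices line up with the table above. The folder names are hypothetical (assumed to match the English labels); substitute the actual names in your `train` directory.

```python
def folder_label_map(folder_names):
    """Number class folders the way DatasetFolder does: sort the names, then enumerate."""
    return {name: idx for idx, name in enumerate(sorted(folder_names))}


# fer2013 index order from the table above.
FER2013_ORDER = {"anger": 0, "disgust": 1, "fear": 2, "happy": 3,
                 "sad": 4, "surprised": 5, "normal": 6}

# Hypothetical folder names; replace with the names from os.listdir(train_file).
folders = ["anger", "disgust", "fear", "happy", "sad", "surprised", "normal"]
mapping = folder_label_map(folders)

# Classes whose learned index (first) differs from the fer2013 index (second).
mismatched = {name: (mapping[name], FER2013_ORDER[name])
              for name in folders if mapping[name] != FER2013_ORDER[name]}
print(mismatched)
```

With names like these, `normal`, `sad` and `surprised` would be numbered 4, 5 and 6, so a lookup table written in the fer2013 order would mislabel them. Folders named `0` to `6` sort into the intended order.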

1. Unzip the dataset

```
!unzip -oq /home/aistudio/work/image/fer2013.zip
```
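The `!` shell escape only works inside a notebook such as AI Studio. A minimal standard-library equivalent is sketched below, assuming the same archive path and extraction into the current working directory; the helper name `extract_dataset` is hypothetical.

```python
import zipfile
from pathlib import Path


def extract_dataset(zip_path, dest="."):
    """Extract every member of zip_path into dest, overwriting existing files (like unzip -o)."""
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(dest)
        return archive.namelist()


# Path taken from the notebook command; extraction is skipped if the file is absent.
ZIP_PATH = Path("/home/aistudio/work/image/fer2013.zip")
if ZIP_PATH.exists():
    extract_dataset(ZIP_PATH)
```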

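2. Preprocess the data while loading it

```python
# Data preprocessing: read an image file and return a CHW float32 array in [0, 1]
def load_image(img_path):
    # OpenCV reads images as BGR; convert to RGB
    img = cv2.cvtColor(cv2.imread(img_path), cv2.COLOR_BGR2RGB)
    # Resize to imagesize x imagesize
    img = cv2.resize(img, (imagesize, imagesize))
    img = np.array(img).astype('float32')
    # HWC to CHW, the layout Paddle's convolution layers expect
    img = img.transpose((2, 0, 1))
    # Normalize to [0, 1]
    img = img / 255
    return img


# Build the Dataset: DatasetFolder assigns one label per sub-folder
class Face(DatasetFolder):
    def __init__(self, path):
        super().__init__(path)

    def __getitem__(self, index):
        img_path, label = self.samples[index]
        label = np.array(label).astype(np.int64)
        return load_image(img_path), label


train_dataset = Face(train_file)
eval_dataset = Face(valid_file)
```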
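The shape bookkeeping in `load_image`, and the inverse transpose used when displaying samples, can be checked with NumPy alone. This sketch skips the OpenCV read and resize and starts from a synthetic 32×32 RGB array; the helper names are illustrative, not part of the project.

```python
import numpy as np


def to_chw_float(img_hwc):
    """HWC uint8 image -> CHW float32 scaled to [0, 1], mirroring load_image."""
    img = img_hwc.astype("float32")
    img = img.transpose((2, 0, 1))  # (H, W, C) -> (C, H, W)
    return img / 255


def to_hwc_for_display(img_chw):
    """Move channels back last so matplotlib's imshow receives (H, W, C)."""
    return img_chw.transpose(1, 2, 0)


rng = np.random.default_rng(0)
fake = rng.integers(0, 256, size=(32, 32, 3), dtype=np.uint8)  # synthetic image
chw = to_chw_float(fake)
print(chw.shape, chw.dtype)
```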

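3. Inspect the dataset

```python
plt.figure(figsize=(15, 15))
# Samples are ordered by class folder, so the first few all share one label
for i in range(5):
    face_img, lab = train_dataset[i]
    plt.subplot(2, 5, i + 1)
    # CHW -> HWC for display
    plt.imshow(face_img.transpose(1, 2, 0))
    plt.axis("off")
    print(lab)
plt.show()
```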

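III. Model Selection and Development

The model uses a pretrained ResNet50 as the feature extractor, followed by two hidden fully connected layers and a fully connected output layer.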

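1. Build the network

```python
class Network(paddle.nn.Layer):
    def __init__(self):
        super().__init__()
        # ImageNet-pretrained ResNet50; num_classes=0 drops its classifier,
        # so it outputs pooled features of shape [N, 2048, 1, 1]
        self.resnet = resnet50(pretrained=True, num_classes=0)
        self.flatten = paddle.nn.Flatten()
        self.linear_1 = paddle.nn.Linear(2048, 512)
        self.linear_2 = paddle.nn.Linear(512, 256)
        # 8 outputs, although only the 7 class indices 0-6 occur as labels
        self.linear_3 = paddle.nn.Linear(256, 8)
        self.relu = paddle.nn.ReLU()
        self.dropout = paddle.nn.Dropout(0.2)

    def forward(self, inputs):
        y = self.resnet(inputs)  # [N, 2048, 1, 1]
        y = self.flatten(y)      # [N, 2048]
        # linear_1 and linear_2 run back to back with no activation in between
        y = self.linear_1(y)
        y = self.linear_2(y)
        y = self.relu(y)
        y = self.dropout(y)
        y = self.linear_3(y)
        # sigmoid squashes the logits into (0, 1). CrossEntropyLoss already applies
        # softmax, so returning the raw logits (dropping this line) usually trains better.
        y = paddle.nn.functional.sigmoid(y)
        return y
```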
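The final `sigmoid` bounds every output to (0, 1), while `paddle.nn.CrossEntropyLoss` applies softmax on top by default. The NumPy sketch below re-implements softmax cross-entropy (it does not call Paddle) to show the consequence: with 8 outputs, even a perfectly confident sigmoid output (1 for the true class, 0 elsewhere) cannot push the loss below log(1 + 7/e) ≈ 1.27, whereas unbounded logits can approach 0. This may help explain why the losses reported further down stay between roughly 1.78 and 1.95.

```python
import math

import numpy as np


def softmax_cross_entropy(logits, label):
    """Softmax cross-entropy of a single sample, computed in a numerically stable way."""
    shifted = logits - logits.max()
    log_probs = shifted - np.log(np.exp(shifted).sum())
    return float(-log_probs[label])


num_outputs = 8  # width of linear_3
label = 3        # any class index; 3 is "happy" in the fer2013 order

# Best case after sigmoid: 1.0 for the true class, 0.0 everywhere else.
squashed = np.zeros(num_outputs)
squashed[label] = 1.0
bounded_loss = softmax_cross_entropy(squashed, label)

# Raw logits are unbounded, so a wide margin drives the loss towards 0.
raw = np.zeros(num_outputs)
raw[label] = 10.0
raw_loss = softmax_cross_entropy(raw, label)

print(bounded_loss, math.log(1 + (num_outputs - 1) / math.e), raw_loss)
```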

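2. Instantiate and visualize the model

```python
# Input: a batch of 3 x imagesize x imagesize images; label: one int64 class index per image
inputs = paddle.static.InputSpec(shape=[None, 3, imagesize, imagesize], dtype='float32', name='inputs')
labels = paddle.static.InputSpec(shape=[None, 1], dtype='int64', name='labels')
model = paddle.Model(Network(), inputs, labels)
paddle.summary(Network(), (1, 3, imagesize, imagesize))
```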

```
-------------------------------------------------------------------------------
Layer (type)         Input Shape         Output Shape     Param #
===============================================================================
Conv2D-54            [[1, 3, 32, 32]]    [1, 64, 16, 16]  9,408
BatchNorm2D-54       [[1, 64, 16, 16]]   [1, 64, 16, 16]  256
ReLU-19              [[1, 64, 16, 16]]   [1, 64, 16, 16]  0
MaxPool2D-2          [[1, 64, 16, 16]]   [1, 64, 8, 8]    0
Conv2D-56            [[1, 64, 8, 8]]     [1, 64, 8, 8]    4,096
BatchNorm2D-56       [[1, 64, 8, 8]]     [1, 64, 8, 8]    256
ReLU-20              [[1, 256, 8, 8]]    [1, 256, 8, 8]   0
Conv2D-57            [[1, 64, 8, 8]]     [1, 64, 8, 8]    36,864
BatchNorm2D-57       [[1, 64, 8, 8]]     [1, 64, 8, 8]    256
Conv2D-58            [[1, 64, 8, 8]]     [1, 256, 8, 8]   16,384
BatchNorm2D-58       [[1, 256, 8, 8]]    [1, 256, 8, 8]   1,024
Conv2D-55            [[1, 64, 8, 8]]     [1, 256, 8, 8]   16,384
BatchNorm2D-55       [[1, 256, 8, 8]]    [1, 256, 8, 8]   1,024
BottleneckBlock-17   [[1, 64, 8, 8]]     [1, 256, 8, 8]   0
Conv2D-59            [[1, 256, 8, 8]]    [1, 64, 8, 8]    16,384
BatchNorm2D-59       [[1, 64, 8, 8]]     [1, 64, 8, 8]    256
ReLU-21              [[1, 256, 8, 8]]    [1, 256, 8, 8]   0
Conv2D-60            [[1, 64, 8, 8]]     [1, 64, 8, 8]    36,864
BatchNorm2D-60       [[1, 64, 8, 8]]     [1, 64, 8, 8]    256
Conv2D-61            [[1, 64, 8, 8]]     [1, 256, 8, 8]   16,384
BatchNorm2D-61       [[1, 256, 8, 8]]    [1, 256, 8, 8]   1,024
BottleneckBlock-18   [[1, 256, 8, 8]]    [1, 256, 8, 8]   0
Conv2D-62            [[1, 256, 8, 8]]    [1, 64, 8, 8]    16,384
BatchNorm2D-62       [[1, 64, 8, 8]]     [1, 64, 8, 8]    256
ReLU-22              [[1, 256, 8, 8]]    [1, 256, 8, 8]   0
Conv2D-63            [[1, 64, 8, 8]]     [1, 64, 8, 8]    36,864
BatchNorm2D-63       [[1, 64, 8, 8]]     [1, 64, 8, 8]    256
Conv2D-64            [[1, 64, 8, 8]]     [1, 256, 8, 8]   16,384
BatchNorm2D-64       [[1, 256, 8, 8]]    [1, 256, 8, 8]   1,024
BottleneckBlock-19   [[1, 256, 8, 8]]    [1, 256, 8, 8]   0
Conv2D-66            [[1, 256, 8, 8]]    [1, 128, 8, 8]   32,768
BatchNorm2D-66       [[1, 128, 8, 8]]    [1, 128, 8, 8]   512
ReLU-23              [[1, 512, 4, 4]]    [1, 512, 4, 4]   0
Conv2D-67            [[1, 128, 8, 8]]    [1, 128, 4, 4]   147,456
BatchNorm2D-67       [[1, 128, 4, 4]]    [1, 128, 4, 4]   512
Conv2D-68            [[1, 128, 4, 4]]    [1, 512, 4, 4]   65,536
BatchNorm2D-68       [[1, 512, 4, 4]]    [1, 512, 4, 4]   2,048
Conv2D-65            [[1, 256, 8, 8]]    [1, 512, 4, 4]   131,072
BatchNorm2D-65       [[1, 512, 4, 4]]    [1, 512, 4, 4]   2,048
BottleneckBlock-20   [[1, 256, 8, 8]]    [1, 512, 4, 4]   0
Conv2D-69            [[1, 512, 4, 4]]    [1, 128, 4, 4]   65,536
BatchNorm2D-69       [[1, 128, 4, 4]]    [1, 128, 4, 4]   512
ReLU-24              [[1, 512, 4, 4]]    [1, 512, 4, 4]   0
Conv2D-70            [[1, 128, 4, 4]]    [1, 128, 4, 4]   147,456
BatchNorm2D-70       [[1, 128, 4, 4]]    [1, 128, 4, 4]   512
Conv2D-71            [[1, 128, 4, 4]]    [1, 512, 4, 4]   65,536
BatchNorm2D-71       [[1, 512, 4, 4]]    [1, 512, 4, 4]   2,048
BottleneckBlock-21   [[1, 512, 4, 4]]    [1, 512, 4, 4]   0
Conv2D-72            [[1, 512, 4, 4]]    [1, 128, 4, 4]   65,536
BatchNorm2D-72       [[1, 128, 4, 4]]    [1, 128, 4, 4]   512
ReLU-25              [[1, 512, 4, 4]]    [1, 512, 4, 4]   0
Conv2D-73            [[1, 128, 4, 4]]    [1, 128, 4, 4]   147,456
BatchNorm2D-73       [[1, 128, 4, 4]]    [1, 128, 4, 4]   512
Conv2D-74            [[1, 128, 4, 4]]    [1, 512, 4, 4]   65,536
BatchNorm2D-74       [[1, 512, 4, 4]]    [1, 512, 4, 4]   2,048
BottleneckBlock-22   [[1, 512, 4, 4]]    [1, 512, 4, 4]   0
Conv2D-75            [[1, 512, 4, 4]]    [1, 128, 4, 4]   65,536
BatchNorm2D-75       [[1, 128, 4, 4]]    [1, 128, 4, 4]   512
ReLU-26              [[1, 512, 4, 4]]    [1, 512, 4, 4]   0
Conv2D-76            [[1, 128, 4, 4]]    [1, 128, 4, 4]   147,456
BatchNorm2D-76       [[1, 128, 4, 4]]    [1, 128, 4, 4]   512
Conv2D-77            [[1, 128, 4, 4]]    [1, 512, 4, 4]   65,536
BatchNorm2D-77       [[1, 512, 4, 4]]    [1, 512, 4, 4]   2,048
BottleneckBlock-23   [[1, 512, 4, 4]]    [1, 512, 4, 4]   0
Conv2D-79            [[1, 512, 4, 4]]    [1, 256, 4, 4]   131,072
BatchNorm2D-79       [[1, 256, 4, 4]]    [1, 256, 4, 4]   1,024
ReLU-27              [[1, 1024, 2, 2]]   [1, 1024, 2, 2]  0
Conv2D-80            [[1, 256, 4, 4]]    [1, 256, 2, 2]   589,824
BatchNorm2D-80       [[1, 256, 2, 2]]    [1, 256, 2, 2]   1,024
Conv2D-81            [[1, 256, 2, 2]]    [1, 1024, 2, 2]  262,144
BatchNorm2D-81       [[1, 1024, 2, 2]]   [1, 1024, 2, 2]  4,096
Conv2D-78            [[1, 512, 4, 4]]    [1, 1024, 2, 2]  524,288
BatchNorm2D-78       [[1, 1024, 2, 2]]   [1, 1024, 2, 2]  4,096
BottleneckBlock-24   [[1, 512, 4, 4]]    [1, 1024, 2, 2]  0
Conv2D-82            [[1, 1024, 2, 2]]   [1, 256, 2, 2]   262,144
BatchNorm2D-82       [[1, 256, 2, 2]]    [1, 256, 2, 2]   1,024
ReLU-28              [[1, 1024, 2, 2]]   [1, 1024, 2, 2]  0
Conv2D-83            [[1, 256, 2, 2]]    [1, 256, 2, 2]   589,824
BatchNorm2D-83       [[1, 256, 2, 2]]    [1, 256, 2, 2]   1,024
Conv2D-84            [[1, 256, 2, 2]]    [1, 1024, 2, 2]  262,144
BatchNorm2D-84       [[1, 1024, 2, 2]]   [1, 1024, 2, 2]  4,096
BottleneckBlock-25   [[1, 1024, 2, 2]]   [1, 1024, 2, 2]  0
Conv2D-85            [[1, 1024, 2, 2]]   [1, 256, 2, 2]   262,144
BatchNorm2D-85       [[1, 256, 2, 2]]    [1, 256, 2, 2]   1,024
ReLU-29              [[1, 1024, 2, 2]]   [1, 1024, 2, 2]  0
Conv2D-86            [[1, 256, 2, 2]]    [1, 256, 2, 2]   589,824
BatchNorm2D-86       [[1, 256, 2, 2]]    [1, 256, 2, 2]   1,024
Conv2D-87            [[1, 256, 2, 2]]    [1, 1024, 2, 2]  262,144
BatchNorm2D-87       [[1, 1024, 2, 2]]   [1, 1024, 2, 2]  4,096
BottleneckBlock-26   [[1, 1024, 2, 2]]   [1, 1024, 2, 2]  0
Conv2D-88            [[1, 1024, 2, 2]]   [1, 256, 2, 2]   262,144
BatchNorm2D-88       [[1, 256, 2, 2]]    [1, 256, 2, 2]   1,024
ReLU-30              [[1, 1024, 2, 2]]   [1, 1024, 2, 2]  0
Conv2D-89            [[1, 256, 2, 2]]    [1, 256, 2, 2]   589,824
BatchNorm2D-89       [[1, 256, 2, 2]]    [1, 256, 2, 2]   1,024
Conv2D-90            [[1, 256, 2, 2]]    [1, 1024, 2, 2]  262,144
BatchNorm2D-90       [[1, 1024, 2, 2]]   [1, 1024, 2, 2]  4,096
BottleneckBlock-27   [[1, 1024, 2, 2]]   [1, 1024, 2, 2]  0
Conv2D-91            [[1, 1024, 2, 2]]   [1, 256, 2, 2]   262,144
BatchNorm2D-91       [[1, 256, 2, 2]]    [1, 256, 2, 2]   1,024
ReLU-31              [[1, 1024, 2, 2]]   [1, 1024, 2, 2]  0
Conv2D-92            [[1, 256, 2, 2]]    [1, 256, 2, 2]   589,824
BatchNorm2D-92       [[1, 256, 2, 2]]    [1, 256, 2, 2]   1,024
Conv2D-93            [[1, 256, 2, 2]]    [1, 1024, 2, 2]  262,144
BatchNorm2D-93       [[1, 1024, 2, 2]]   [1, 1024, 2, 2]  4,096
BottleneckBlock-28   [[1, 1024, 2, 2]]   [1, 1024, 2, 2]  0
Conv2D-94            [[1, 1024, 2, 2]]   [1, 256, 2, 2]   262,144
BatchNorm2D-94       [[1, 256, 2, 2]]    [1, 256, 2, 2]   1,024
ReLU-32              [[1, 1024, 2, 2]]   [1, 1024, 2, 2]  0
Conv2D-95            [[1, 256, 2, 2]]    [1, 256, 2, 2]   589,824
BatchNorm2D-95       [[1, 256, 2, 2]]    [1, 256, 2, 2]   1,024
Conv2D-96            [[1, 256, 2, 2]]    [1, 1024, 2, 2]  262,144
BatchNorm2D-96       [[1, 1024, 2, 2]]   [1, 1024, 2, 2]  4,096
BottleneckBlock-29   [[1, 1024, 2, 2]]   [1, 1024, 2, 2]  0
Conv2D-98            [[1, 1024, 2, 2]]   [1, 512, 2, 2]   524,288
BatchNorm2D-98       [[1, 512, 2, 2]]    [1, 512, 2, 2]   2,048
ReLU-33              [[1, 2048, 1, 1]]   [1, 2048, 1, 1]  0
Conv2D-99            [[1, 512, 2, 2]]    [1, 512, 1, 1]   2,359,296
BatchNorm2D-99       [[1, 512, 1, 1]]    [1, 512, 1, 1]   2,048
Conv2D-100           [[1, 512, 1, 1]]    [1, 2048, 1, 1]  1,048,576
BatchNorm2D-100      [[1, 2048, 1, 1]]   [1, 2048, 1, 1]  8,192
Conv2D-97            [[1, 1024, 2, 2]]   [1, 2048, 1, 1]  2,097,152
BatchNorm2D-97       [[1, 2048, 1, 1]]   [1, 2048, 1, 1]  8,192
BottleneckBlock-30   [[1, 1024, 2, 2]]   [1, 2048, 1, 1]  0
Conv2D-101           [[1, 2048, 1, 1]]   [1, 512, 1, 1]   1,048,576
BatchNorm2D-101      [[1, 512, 1, 1]]    [1, 512, 1, 1]   2,048
ReLU-34              [[1, 2048, 1, 1]]   [1, 2048, 1, 1]  0
Conv2D-102           [[1, 512, 1, 1]]    [1, 512, 1, 1]   2,359,296
BatchNorm2D-102      [[1, 512, 1, 1]]    [1, 512, 1, 1]   2,048
Conv2D-103           [[1, 512, 1, 1]]    [1, 2048, 1, 1]  1,048,576
BatchNorm2D-103      [[1, 2048, 1, 1]]   [1, 2048, 1, 1]  8,192
BottleneckBlock-31   [[1, 2048, 1, 1]]   [1, 2048, 1, 1]  0
Conv2D-104           [[1, 2048, 1, 1]]   [1, 512, 1, 1]   1,048,576
BatchNorm2D-104      [[1, 512, 1, 1]]    [1, 512, 1, 1]   2,048
ReLU-35              [[1, 2048, 1, 1]]   [1, 2048, 1, 1]  0
Conv2D-105           [[1, 512, 1, 1]]    [1, 512, 1, 1]   2,359,296
BatchNorm2D-105      [[1, 512, 1, 1]]    [1, 512, 1, 1]   2,048
Conv2D-106           [[1, 512, 1, 1]]    [1, 2048, 1, 1]  1,048,576
BatchNorm2D-106      [[1, 2048, 1, 1]]   [1, 2048, 1, 1]  8,192
BottleneckBlock-32   [[1, 2048, 1, 1]]   [1, 2048, 1, 1]  0
AdaptiveAvgPool2D-2  [[1, 2048, 1, 1]]   [1, 2048, 1, 1]  0
ResNet-2             [[1, 3, 32, 32]]    [1, 2048, 1, 1]  0
Flatten-2            [[1, 2048, 1, 1]]   [1, 2048]        0
Linear-4             [[1, 2048]]         [1, 512]         1,049,088
Linear-5             [[1, 512]]          [1, 256]         131,328
ReLU-36              [[1, 256]]          [1, 256]         0
Dropout-2            [[1, 256]]          [1, 256]         0
Linear-6             [[1, 256]]          [1, 8]           2,056
===============================================================================
Total params: 24,743,624
Trainable params: 24,637,384
Non-trainable params: 106,240
-------------------------------------------------------------------------------
Input size (MB): 0.01
Forward/backward pass size (MB): 5.39
Params size (MB): 94.39
Estimated Total Size (MB): 99.79
-------------------------------------------------------------------------------
{'total_params': 24743624, 'trainable_params': 24637384}
```
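The `Param #` values of the three `Linear` rows follow from weights plus biases, `in_features * out_features + out_features`. The quick check below reproduces them and, by subtracting the head from the reported total, the share that belongs to the ResNet50 backbone (the Flatten, ReLU and Dropout layers have no parameters).

```python
def linear_params(in_features, out_features):
    """Parameters of a fully connected layer: weight matrix plus bias vector."""
    return in_features * out_features + out_features


# (in_features, out_features) of linear_1, linear_2 and linear_3 in Network
head = [(2048, 512), (512, 256), (256, 8)]
counts = [linear_params(i, o) for i, o in head]
head_total = sum(counts)
backbone_total = 24_743_624 - head_total  # "Total params" from the summary minus the head
print(counts, head_total, backbone_total)
```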

3. Train the model

```python
# Training configuration: optimizer, loss function and accuracy metric
model.prepare(paddle.optimizer.Adam(learning_rate=lr, parameters=model.parameters()),
              paddle.nn.CrossEntropyLoss(),
              paddle.metric.Accuracy())

# Train the model
model.fit(train_data=train_dataset,       # training set
          eval_data=eval_dataset,         # validation set, evaluated after each epoch
          batch_size=batch_size,          # samples per batch
          epochs=27,                      # number of training epochs
          save_dir="/home/aistudio/lup",  # folder for model and optimizer checkpoints
          save_freq=3,                    # save a checkpoint every 3 epochs
          verbose=1)
```

```
step 898/898 [==============================] - loss: 1.8075 - acc: 0.2449 - 53ms/step
save checkpoint at /home/aistudio/lup/0
Eval begin...
step 113/113 [==============================] - loss: 1.7850 - acc: 0.2530 - 23ms/step
Eval samples: 3589
```
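The step counts in the log follow from the dataset size and the batch size, with the final partial batch counted as a step (`drop_last` is off by default). The sketch assumes the standard fer2013 training split of 28,709 images, since the log does not print the training-set size; the 3,589 validation images come from the log itself.

```python
import math


def steps_per_epoch(num_samples, batch_size):
    """Number of batches when the final partial batch is kept."""
    return math.ceil(num_samples / batch_size)


train_steps = steps_per_epoch(28709, 32)  # assumed standard fer2013 training size
eval_steps = steps_per_epoch(3589, 32)    # "Eval samples: 3589" with batch_size=32
print(train_steps, eval_steps)
```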

4. Evaluate the model

```python
model.evaluate(eval_dataset, batch_size=5, verbose=1)
```

```
Eval begin...
step 718/718 [==============================] - loss: 1.9499 - acc: 0.4870 - 22ms/step
Eval samples: 3589
{'loss': [1.9498544], 'acc': 0.48704374477570356}
```
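With its default `topk=(1,)`, `paddle.metric.Accuracy` counts a sample as correct when the highest-scoring output equals the label. A NumPy re-implementation of that top-1 rule, using made-up scores rather than real model output:

```python
import numpy as np


def top1_accuracy(scores, labels):
    """Fraction of rows whose argmax equals the integer label (labels may be [N] or [N, 1])."""
    preds = np.argmax(scores, axis=1)
    return float(np.mean(preds == np.asarray(labels).reshape(-1)))


# Made-up scores for 4 samples over 8 outputs (not real model output).
scores = np.zeros((4, 8))
scores[0, 3] = scores[1, 6] = scores[2, 0] = scores[3, 4] = 1.0
labels = [3, 6, 1, 4]  # sample 2 is wrong: predicted 0, label 1
print(top1_accuracy(scores, labels))
```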

IV. Prediction

1. Preprocess the prediction data

```python
def load_test(img_path):
    img = cv2.cvtColor(cv2.imread(img_path), cv2.COLOR_BGR2RGB)
    # Resize
    img = cv2.resize(img, (imagesize, imagesize))
    img = np.array(img).astype('float32')
    # HWC to CHW
    img = img.transpose((2, 0, 1))
    # Add a batch axis, [3, H, W] -> [1, 3, H, W], because each list entry is fed as one batch
    img = np.expand_dims(img, axis=0)
    # Normalize
    img = img / 255
    return img


test_dataset = []
for i in os.listdir(test_file):
    test_dataset.append(load_test(os.path.join(test_file, i)))
```
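`os.listdir` returns entries in arbitrary order and includes everything in the folder, which makes it hard to trace a prediction back to its file. A minimal standard-library sketch that lists image files in a stable, sorted order; the extension set and the helper name `list_images` are assumptions.

```python
import os

IMAGE_EXTS = {".jpg", ".jpeg", ".png", ".bmp"}  # assumed image extensions


def list_images(folder):
    """Sorted full paths of the image files directly inside folder (sub-folders are skipped)."""
    paths = []
    for name in sorted(os.listdir(folder)):
        path = os.path.join(folder, name)
        if os.path.isfile(path) and os.path.splitext(name)[1].lower() in IMAGE_EXTS:
            paths.append(path)
    return paths
```

For example, `test_paths = list_images(test_file)` followed by `test_dataset = [load_test(p) for p in test_paths]` keeps `test_paths[idx]` aligned with `result[0][idx]`.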

2.对模型预测

# 进行预测操作

result = model.predict(test_dataset)

# 定义产出数字与表情的对应关系

face=0:'anger',1:'disgust',2:'fear',3:'happy',4:'sad',5:'surprised',6:'normal'

# 定义画图方法

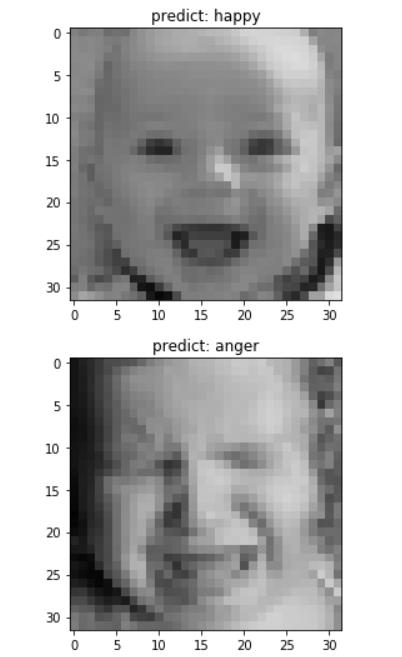

def show_img(img, predict):

plt.figure()

plt.title('predict: '.format(face[predict]))

plt.imshow(img.reshape([3, 32, 32]).transpose(1,2,0))

plt.show()

# 抽样展示

indexs = [4, 15, 45,]

for idx in indexs:

show_img(test_dataset[idx][0], np.argmax(result[0][idx]))

Predict begin...

step 3589/3589 [==============================] - 21ms/step

Predict samples: 3589
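As the indexing `result[0][idx]` implies, `model.predict` returns a list with one entry per model output, each holding one array per batch; because every element of `test_dataset` is fed as its own batch of one, each array has shape `[1, 8]`. The NumPy sketch below decodes such a list into expression names. The scores are fabricated one-hot rows and `decode_predictions` is a hypothetical helper; it falls back to the raw index for output 7, which has no entry in `face`.

```python
import numpy as np

face = {0: 'anger', 1: 'disgust', 2: 'fear', 3: 'happy', 4: 'sad', 5: 'surprised', 6: 'normal'}


def decode_predictions(batch_outputs, names):
    """Map each [1, num_outputs] score array to a class name, or its index if unnamed."""
    labels = []
    for scores in batch_outputs:
        idx = int(np.argmax(scores))
        labels.append(names.get(idx, str(idx)))
    return labels


# Fake outputs standing in for result[0]; not real model predictions.
fake_result0 = [np.eye(8)[[3]], np.eye(8)[[5]], np.eye(8)[[7]]]
print(decode_predictions(fake_result0, face))
```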

V. Results

VI. Summary and Takeaways

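1. In image classification, the number of model outputs must be at least the number of classes, because every label index has to address one output; usually the two are set equal (this project has 7 classes and uses 8 outputs).

2. If an input is missing the batch dimension, add it with `img = np.expand_dims(img, axis=0)`.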
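Both points can be checked without Paddle. In the sketch below, `np.expand_dims` turns one CHW image into a batch of one, and the hypothetical helper `label_fits` encodes the rule that a label is usable only when its index is smaller than the number of outputs; the class and output counts are this project's (7 and 8).

```python
import numpy as np

img = np.zeros((3, 32, 32), dtype="float32")  # one CHW image
batch = np.expand_dims(img, axis=0)            # [3, 32, 32] -> [1, 3, 32, 32]
print(img.shape, "->", batch.shape)


def label_fits(label, num_outputs):
    """A class index is usable only if it addresses one of the output logits."""
    return 0 <= label < num_outputs


num_classes = 7
print(all(label_fits(c, 8) for c in range(num_classes)))  # this project's 8 outputs
print(all(label_fits(c, 7) for c in range(num_classes)))  # exactly 7 outputs also suffice
print(label_fits(7, 7))                                   # an 8th class would need an 8th output
```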

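VII. About the Author

王志洲, undergraduate (class of 2020), Software Engineering, College of Software, Taiyuan University of Technology

AI Studio profile: https://aistudio.baidu.com/aistudio/personalcenter/thirdview/559770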
