PyTorch:卷积/padding/pooling api

Posted -柚子皮-

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了PyTorch:卷积/padding/pooling api相关的知识,希望对你有一定的参考价值。

填充padding

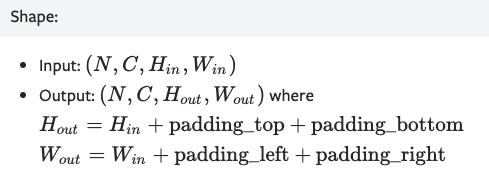

torch.nn.ConstantPad2d(padding: Union[T, Tuple[T, T, T, T]], value: float)

参数:padding (int, tuple) – the size of the padding. If is int, uses the same padding in all boundaries. If a 4-tuple, uses padding_left , padding_right , padding_top , padding_bottom )

示例:

>>> m = nn.ConstantPad2d(2, 3.5)

>>> input = torch.randn(1, 2, 2)

>>> input

tensor([[[ 1.6585, 0.4320],

[-0.8701, -0.4649]]])

>>> m(input)

tensor([[[ 3.5000, 3.5000, 3.5000, 3.5000, 3.5000, 3.5000],

[ 3.5000, 3.5000, 3.5000, 3.5000, 3.5000, 3.5000],

[ 3.5000, 3.5000, 1.6585, 0.4320, 3.5000, 3.5000],

[ 3.5000, 3.5000, -0.8701, -0.4649, 3.5000, 3.5000],

[ 3.5000, 3.5000, 3.5000, 3.5000, 3.5000, 3.5000],

[ 3.5000, 3.5000, 3.5000, 3.5000, 3.5000, 3.5000]]])

>>> # using different paddings for different sides

>>> m = nn.ConstantPad2d((3, 0, 2, 1), 3.5)

>>> m(input)

tensor([[[ 3.5000, 3.5000, 3.5000, 3.5000, 3.5000],

[ 3.5000, 3.5000, 3.5000, 3.5000, 3.5000],

[ 3.5000, 3.5000, 3.5000, 1.6585, 0.4320],

[ 3.5000, 3.5000, 3.5000, -0.8701, -0.4649],

[ 3.5000, 3.5000, 3.5000, 3.5000, 3.5000]]])

二维巻积CONV2D

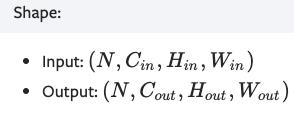

torch.nn.Conv2d(in_channels: int, out_channels: int, kernel_size: Union[T, Tuple[T, T]], stride: Union[T, Tuple[T, T]] = 1, padding: Union[T, Tuple[T, T]] = 0, dilation: Union[T, Tuple[T, T]] = 1, groups: int = 1, bias: bool = True, padding_mode: str = 'zeros')

参数:in_channels:输入通道数。一般第1层是1个通道,如果是多层cnn堆叠,从第二层起通道数为上层的输出feature maps数=即上一层的out_channels。

Note:每层参数量计算。第l层参数量:C_in*C_out*kernel_size[l][0]*kernel_size[l][1],其中C_in即in_channels[l]。

示例:

>>> # With square kernels and equal stride

>>> m = nn.Conv2d(16, 33, 3, stride=2)

Conv2d(1, 8, kernel_size=[3, 3], stride=(1, 1))

Conv2d(8, 16, kernel_size=[3, 3], stride=(1, 1))

自适应池化Adaptive Pooling

torch.nn.AdaptiveAvgPool2d(output_size: Union[T, Tuple[T, ...]])

普通Max/AvgPooling计算公式为:output_size = ceil ( (input_size+2∗padding−kernel_size)/stride)+1

当我们使用Adaptive Pooling时,这个问题就变成了由已知量input_size,output_size求解kernel_size与stride。你只需要告诉torch你需要什么样的输出结果。

为了简化问题,我们将padding设为0(后面我们可以发现源码里也是这样操作的c++源码部分)

stride = floor ( (input_size / (output_size) )

kernel_size = input_size − (output_size−1) * stride

padding = 0

示例:

>>> # target output size of 5x7

>>> m = nn.AdaptiveAvgPool2d((5,7))

>>> input = torch.randn(1, 64, 8, 9)

>>> output = m(input)

>>> # target output size of 7x7 (square)

>>> m = nn.AdaptiveAvgPool2d(7)

>>> input = torch.randn(1, 64, 10, 9)

>>> output = m(input)

[[开发技巧]·AdaptivePooling与Max/AvgPooling相互转换]

一维巻积CONV1D

torch.nn.Conv1d(in_channels, out_channels, kernel_size, stride=1, padding=0, dilation=1, groups=1, bias=True, padding_mode='zeros')

in_channels(int) – 输入信号的通道。在文本分类中,即为词向量的维度

out_channels(int) – 卷积产生的通道。有多少个out_channels,就需要多少个1维卷积

kernel_size(int or tuple) - 卷积核的尺寸,卷积核的大小为(k,),第二个维度是由in_channels来决定的,所以实际上卷积大小为kernel_size*in_channels

stride(int or tuple, optional) - 卷积步长

padding (int or tuple, optional)- 输入的每一条边补充0的层数

dilation(int or tuple, `optional``) – 卷积核元素之间的间距

groups(int, optional) – 从输入通道到输出通道的阻塞连接数

bias(bool, optional) - 如果bias=True,添加偏置

示例

常用于textcnn[深度学习:文本CNN-textcnn]

conv1 = nn.Conv1d(in_channels=256,out_channels=100,kernel_size=2)

input = torch.randn(32,35,256)

# batch_size x text_len x embedding_size -> batch_size x embedding_size x text_len

input = input.permute(0,2,1)

out = conv1(input)

print(out.size())

这里32为batch_size,35为句子最大长度,256为词向量。在输入一维卷积的时候,需要将32*35*256变换为32*256*35,因为一维卷积是在最后维度上扫的,最后out的大小即为:32*100*(35-2+1)=32*100*34。

from: -柚子皮-

ref:

以上是关于PyTorch:卷积/padding/pooling api的主要内容,如果未能解决你的问题,请参考以下文章