Pattern Recognition and Image Processing Course, Lab 2: A UNet-Based Object Detection Network

Posted by 编程爱好者-阿新


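Preface: This article was compiled by the editors of 小常识网 (cha138.com). It covers the Pattern Recognition and Image Processing course, Lab 2: a UNet-based object detection network, and we hope it is a useful reference.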


1. Principles and Objectives

-

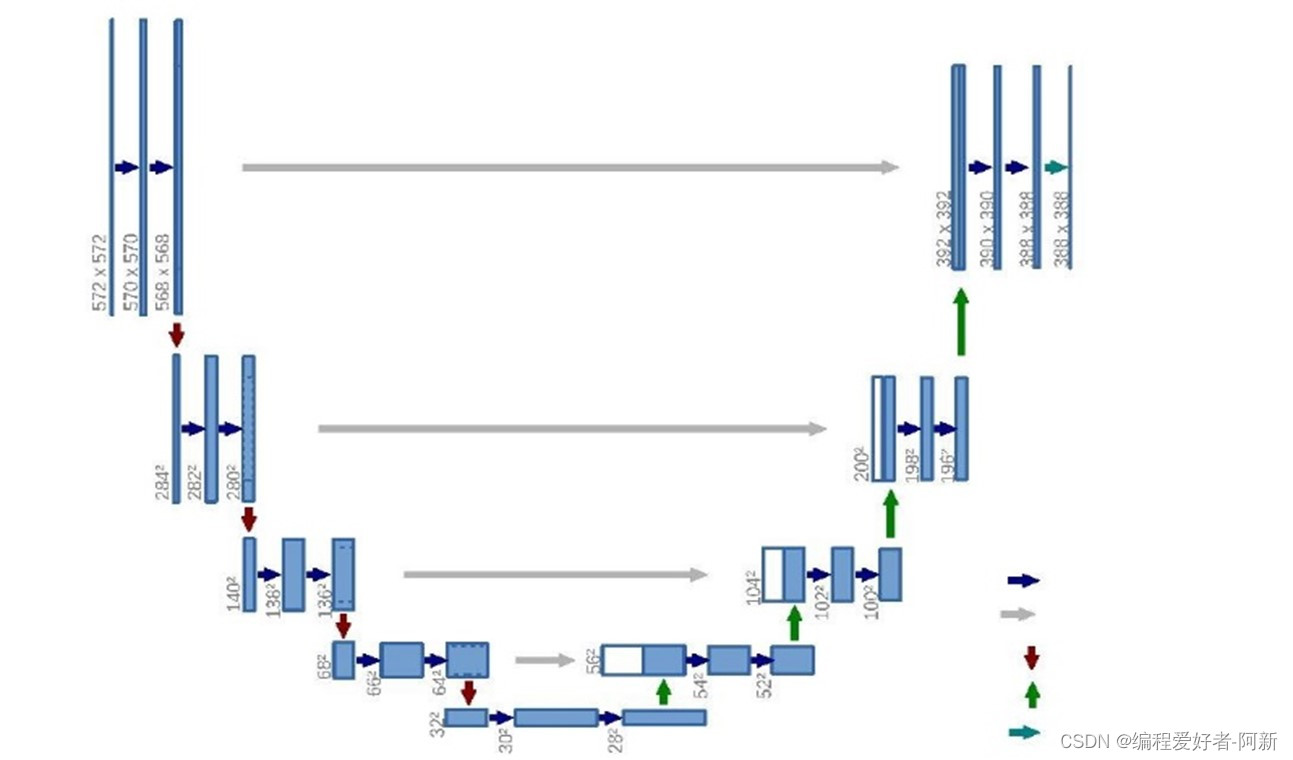

实验采用Unet目标检测网络实现对目标的检测。例如检测舰船、车辆、人脸、道路等。其中的Unet网络结构如下所示

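- U-Net is an encoder-decoder architecture. The encoder (left half) applies a series of convolutions and pooling operations that downsample the image. The decoder (right half) upsamples back to the original image shape and outputs a prediction for every pixel.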

- The encoder has four sub-blocks. Each contains two convolutional layers and is followed by a max-pooling downsampling layer.

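- The input image is 572x572. The input resolutions of blocks 1 to 5 are 572x572, 284x284, 140x140, 68x68 and 32x32, respectively. Each block's two unpadded 3x3 convolutions remove 4 pixels, and each 2x2 max-pooling halves the size, e.g. (572 - 4) / 2 = 284.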

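- The decoder also has four sub-blocks, and upsampling raises the resolution step by step back toward that of the input image. The network also uses skip connections. Each upsampled result is concatenated with the output of the encoder sub-block at the same level (cropped to the same size when unpadded convolutions are used). The concatenation is the input of the next decoder sub-block.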

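- An important modification in this architecture is that the upsampling part also has a large number of feature channels. These channels let the network propagate context information to higher-resolution layers. As a result, the expansive path is more or less symmetric to the contracting path, which gives the network its U shape.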

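- The network has no fully connected layers and uses only the valid part of each convolution. The segmentation map therefore contains only the pixels for which the full context is available in the input image, and it is smaller than the input. This allows seamless segmentation of arbitrarily large images with an overlap-tile strategy, as shown in the figure. To predict pixels in the border region of the image, the missing context is extrapolated by mirroring the input image. This tiling strategy is important for applying the network to large images, since otherwise the resolution would be limited by GPU memory.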
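The size arithmetic in the points above can be checked with a short script. This is a minimal NumPy sketch that assumes the original U-Net layout: two unpadded 3x3 convolutions per level, 2x2 max-pooling, and 2x2 up-convolutions. The lab code in Section 3 differs, because it uses padded (padding=1) convolutions. The helper names unet_sizes and overlap_tiles are hypothetical and are not part of the lab code. The script reproduces the 572, 284, 140, 68, 32 sequence and shows that a 572x572 input yields a 388x388 output. It then cuts an image into overlapping, mirror-padded input tiles.

```python
import numpy as np

def unet_sizes(input_size, depth=4):
    """Track the spatial size through a U-Net built from unpadded ("valid") 3x3 convs.

    Returns the input size of each of the depth + 1 levels and the final output size."""
    size = input_size
    level_inputs = []
    for _ in range(depth):
        level_inputs.append(size)
        size -= 4                      # two valid 3x3 convs trim 2 pixels each
        if size % 2:
            raise ValueError("odd size before 2x2 max-pooling")
        size //= 2                     # 2x2 max-pool, stride 2
    level_inputs.append(size)          # input of the bottom (5th) level
    size -= 4                          # two valid convs at the bottom
    for _ in range(depth):
        size = 2 * size - 4            # 2x2 up-conv doubles, two valid convs trim 4
    return level_inputs, size

def overlap_tiles(image, in_size=572, out_size=388):
    """Cut a 2-D image into in_size x in_size input tiles whose central
    out_size x out_size predictions cover the image; borders are mirrored."""
    margin = (in_size - out_size) // 2                   # context needed on each side
    h, w = image.shape
    extra_h, extra_w = (-h) % out_size, (-w) % out_size  # round up to whole tiles
    padded = np.pad(image, ((margin, margin + extra_h), (margin, margin + extra_w)),
                    mode="reflect")                       # mirror the image at its borders
    return [padded[y:y + in_size, x:x + in_size]
            for y in range(0, h + extra_h, out_size)
            for x in range(0, w + extra_w, out_size)]

levels, out = unet_sizes(572)
image = np.arange(500 * 700, dtype=np.float32).reshape(500, 700)
tiles = overlap_tiles(image)
```

With a 92-pixel margin, each 572x572 tile contributes only its central 388x388 prediction, so neighbouring predictions abut without seams. `np.pad(..., mode="reflect")` mirrors the image without repeating the edge pixel.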

2. Lab Task

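This lab uses a UNet network to detect roads. The data are stored in folders. By default, training images and labels are read from ./munich/train/img and ./munich/train/lab (the -data_path and -label_path arguments below). The trained UNet then produces the road detection results.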

3. Lab Code

3.1 Import libraries

# Import libraries
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
from torchvision import datasets, transforms, models, utils
from torch.utils.data import DataLoader, Dataset, random_split
from torch.utils.tensorboard import SummaryWriter
#from torchsummary import summary
import matplotlib.pyplot as plt
import numpy as np
import time
import os
import copy
import cv2
import argparse  # argparse: parses command-line arguments
from tqdm import tqdm  # progress bar

3.2 Create a parser object

# Create a parser object
parser = argparse.ArgumentParser(description="Choose mode")

3.3 Define the command-line arguments

# Define the command-line arguments
# Note: -mode and -load_model are required, so their defaults are never used
parser.add_argument('-mode', required=True, choices=['train', 'test'], default='train')
parser.add_argument('-dim', type=int, default=16)
parser.add_argument('-num_epochs', type=int, default=3)
parser.add_argument('-image_scale_h', type=int, default=256)
parser.add_argument('-image_scale_w', type=int, default=256)
parser.add_argument('-batch', type=int, default=4)
parser.add_argument('-img_cut', type=int, default=4)
parser.add_argument('-lr', type=float, default=5e-5)
parser.add_argument('-lr_1', type=float, default=5e-5)
parser.add_argument('-alpha', type=float, default=0.05)
# Channel reduction factor in Self_Attn; it must be an int because it sets a Conv2d channel count
parser.add_argument('-sa_scale', type=int, default=8)
parser.add_argument('-latent_size', type=int, default=100)
parser.add_argument('-data_path', type=str, default='./munich/train/img')
parser.add_argument('-label_path', type=str, default='./munich/train/lab')
parser.add_argument('-gpu', type=str, default='0')  # written to CUDA_VISIBLE_DEVICES
# Parsed as the string 'True' or 'False', not as a bool
parser.add_argument('-load_model', required=True, choices=['True', 'False'], help='choose True or False', default='False')
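A quick way to check how these options are parsed is to pass an explicit list to `parse_args()` instead of reading the real command line. The sketch below rebuilds only a few of the arguments above, and the helper name `build_parser` is hypothetical. It also shows a pitfall: `-load_model` is stored as the string `'True'` or `'False'`, and any non-empty string is truthy. The value should therefore be compared with `'True'` rather than passed to `bool()`.

```python
import argparse

def build_parser():
    """Hypothetical helper rebuilding a subset of the lab's arguments."""
    parser = argparse.ArgumentParser(description="Choose mode")
    parser.add_argument('-mode', required=True, choices=['train', 'test'])
    parser.add_argument('-num_epochs', type=int, default=3)
    parser.add_argument('-lr', type=float, default=5e-5)
    parser.add_argument('-load_model', required=True, choices=['True', 'False'])
    return parser

# Parse an explicit argument list instead of sys.argv
opt = build_parser().parse_args(['-mode', 'test', '-load_model', 'False', '-lr', '1e-4'])
# Convert the string flag explicitly: bool('False') would be True
load_model = opt.load_model == 'True'
```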

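3.4 Parse the arguments with parse_args()

# Parse the arguments with parse_args()
opt = parser.parse_args()
print(opt)
# Restrict PyTorch to the chosen GPU(s); this must be set before CUDA is first initialized
os.environ["CUDA_VISIBLE_DEVICES"] = opt.gpu
use_cuda = torch.cuda.is_available()
print("use_cuda:", use_cuda)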

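3.5 Select the device (the first visible GPU if available)

# Use the first visible GPU if available, otherwise fall back to the CPU
device = torch.device("cuda" if use_cuda else "cpu")
IMG_CUT = opt.img_cut
LATENT_SIZE = opt.latent_size
writer = SummaryWriter('./runs2/gx0102')  # TensorBoard log directory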

3.6 Create directories

# Create a directory (including missing parents) if it does not exist yet
def auto_create_path(FilePath):
    if os.path.exists(FilePath):
        print(FilePath + ' dir exists')
    else:
        print(FilePath + ' dir not exists')
        os.makedirs(FilePath)
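`auto_create_path` first checks whether the directory exists and then creates it. A shorter variant is sketched below under the hypothetical name `ensure_dir`. It relies on `os.makedirs(..., exist_ok=True)`, which creates any missing parent directories and does nothing if the directory is already there. This also avoids the small race between the `exists()` check and the `makedirs()` call.

```python
import os
import tempfile

def ensure_dir(path):
    """Create `path` and any missing parents; return True if it already existed."""
    existed = os.path.isdir(path)
    os.makedirs(path, exist_ok=True)  # no error if the directory is already there
    return existed

# Usage: create a nested directory inside a throwaway temporary folder.
with tempfile.TemporaryDirectory() as root:
    target = os.path.join(root, "model", "checkpoints")
    first = ensure_dir(target)       # False: the directory had to be created
    second = ensure_dir(target)      # True: it already existed
    created = os.path.isdir(target)
```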

3.7 Create directories for the training results

# Create directories for the outputs
auto_create_path('./test/lab_dete_AVD')
auto_create_path('./model')
auto_create_path('./results')

3.8 Residual block with down-/up-sampling, and a self-attention layer

# Residual block: resample='down' halves H and W, resample='up' doubles them, None keeps them
class ResidualBlockClass(nn.Module):
    def __init__(self, name, input_dim, output_dim, resample=None, activate='relu'):
        super(ResidualBlockClass, self).__init__()
        self.name = name
        self.input_dim = input_dim
        self.output_dim = output_dim
        self.resample = resample
        self.batchnormlize_1 = nn.BatchNorm2d(input_dim)
        self.activate = activate
        if resample == 'down':
            # shortcut: 3x3 conv to output_dim, then 3x3 average pooling with stride 2
            self.conv_0 = nn.Conv2d(in_channels=input_dim, out_channels=output_dim, kernel_size=3, stride=1, padding=1)
            self.conv_shortcut = nn.AvgPool2d(3, stride=2, padding=1)
            # main path: 3x3 conv, then a strided 3x3 conv that halves H and W
            self.conv_1 = nn.Conv2d(in_channels=input_dim, out_channels=input_dim, kernel_size=3, stride=1, padding=1)
            self.conv_2 = nn.Conv2d(in_channels=input_dim, out_channels=output_dim, kernel_size=3, stride=2, padding=1)
            self.batchnormlize_2 = nn.BatchNorm2d(input_dim)
        elif resample == 'up':
            # shortcut: 3x3 conv to output_dim, then nearest-neighbour 2x upsampling
            self.conv_0 = nn.Conv2d(in_channels=input_dim, out_channels=output_dim, kernel_size=3, stride=1, padding=1)
            self.conv_shortcut = nn.Upsample(scale_factor=2)
            # main path: 3x3 conv, then a dilated transposed conv that doubles H and W
            self.conv_1 = nn.Conv2d(in_channels=input_dim, out_channels=output_dim, kernel_size=3, stride=1, padding=1)
            self.conv_2 = nn.ConvTranspose2d(in_channels=output_dim, out_channels=output_dim, kernel_size=3, stride=2, padding=2,
                                             output_padding=1, dilation=2)
            self.batchnormlize_2 = nn.BatchNorm2d(output_dim)
        elif resample is None:
            # no resampling: 3x3 conv shortcut, only used when input_dim != output_dim
            self.conv_shortcut = nn.Conv2d(in_channels=input_dim, out_channels=output_dim, kernel_size=3, stride=1, padding=1)
            self.conv_1 = nn.Conv2d(in_channels=input_dim, out_channels=input_dim, kernel_size=3, stride=1, padding=1)
            self.conv_2 = nn.Conv2d(in_channels=input_dim, out_channels=output_dim, kernel_size=3, stride=1, padding=1)
            self.batchnormlize_2 = nn.BatchNorm2d(input_dim)
        else:
            raise ValueError('invalid resample value')

    def forward(self, inputs):
        # shortcut branch
        if self.output_dim == self.input_dim and self.resample is None:
            shortcut = inputs                                    # identity
        elif self.resample is None:
            shortcut = self.conv_shortcut(inputs)                # 3x3 conv to match channels
        else:
            shortcut = self.conv_shortcut(self.conv_0(inputs))   # conv, then pool ('down') or upsample ('up')
        # main branch: BN -> activation -> conv -> BN -> activation -> conv
        act = F.relu if self.activate == 'relu' else F.leaky_relu
        x = self.batchnormlize_1(inputs)
        x = act(x)
        x = self.conv_1(x)
        x = self.batchnormlize_2(x)
        x = act(x)
        x = self.conv_2(x)
        return shortcut + x

class Self_Attn(nn.Module):
    """Self-attention layer"""
    def __init__(self, in_dim, activation=None):
        super(Self_Attn, self).__init__()
        self.chanel_in = in_dim
        # self.activation = activation
        # query/key use in_dim // sa_scale channels; int() guards against a float sa_scale
        reduced = int(in_dim // opt.sa_scale)
        self.query_conv = nn.Conv2d(in_channels=in_dim, out_channels=reduced, kernel_size=1)
        self.key_conv = nn.Conv2d(in_channels=in_dim, out_channels=reduced, kernel_size=1)
        self.value_conv = nn.Conv2d(in_channels=in_dim, out_channels=in_dim, kernel_size=1)
        self.gamma = nn.Parameter(torch.zeros(1))  # learnable weight of the attention branch, starts at 0
        self.softmax = nn.Softmax(dim=-1)

    def forward(self, x):
        """
        inputs :
            x : input feature maps (B x C x H x W)
        returns :
            out : gamma * self-attention value + input feature (same shape as x)
        The attention map computed internally is B x N x N, with N = H*W.
        """
        m_batchsize, C, height, width = x.size()
        proj_query = self.query_conv(x).view(m_batchsize, -1, height * width).permute(0, 2, 1)  # B x N x C'
        proj_key = self.key_conv(x).view(m_batchsize, -1, height * width)  # B x C' x N
        energy = torch.bmm(proj_query, proj_key)  # B x N x N
        attention = self.softmax(energy)  # B x N x N, each row sums to 1
        proj_value = self.value_conv(x).view(m_batchsize, -1, height * width)  # B x C x N
        out = torch.bmm(proj_value, attention.permute(0, 2, 1))  # B x C x N
        out = out.view(m_batchsize, C, height, width)
        out = self.gamma * out + x
        return out
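The shortcut and main branches of `ResidualBlockClass` are added element-wise, so they must produce the same spatial size. The pure-Python sketch below checks this with the output-size formulas from the PyTorch documentation for `nn.Conv2d`, `nn.ConvTranspose2d` and `nn.AvgPool2d` (with `ceil_mode=False`). The helper names are hypothetical. The check confirms that `resample='down'` maps H to floor((H - 1) / 2) + 1 on both branches, and that `resample='up'` maps H to 2H.

```python
# Output-size formulas (one spatial dimension) as documented for PyTorch layers.
def conv_out(h, k, s=1, p=0, d=1):
    """nn.Conv2d: floor((h + 2p - d(k - 1) - 1) / s) + 1"""
    return (h + 2 * p - d * (k - 1) - 1) // s + 1

def conv_transpose_out(h, k, s=1, p=0, op=0, d=1):
    """nn.ConvTranspose2d: (h - 1)s - 2p + d(k - 1) + output_padding + 1"""
    return (h - 1) * s - 2 * p + d * (k - 1) + op + 1

def avg_pool_out(h, k, s, p=0):
    """nn.AvgPool2d with ceil_mode=False: floor((h + 2p - k) / s) + 1"""
    return (h + 2 * p - k) // s + 1

def down_shapes(h):
    # shortcut: conv_0 (3x3, stride 1, pad 1) keeps h, then AvgPool2d(3, stride=2, padding=1)
    shortcut = avg_pool_out(conv_out(h, 3, 1, 1), 3, 2, 1)
    # main path: conv_1 (3x3, stride 1, pad 1), then conv_2 (3x3, stride 2, pad 1)
    main = conv_out(conv_out(h, 3, 1, 1), 3, 2, 1)
    return shortcut, main

def up_shapes(h):
    # shortcut: conv_0 keeps h, then nn.Upsample(scale_factor=2) doubles it
    shortcut = 2 * conv_out(h, 3, 1, 1)
    # main path: conv_1 keeps h, then ConvTranspose2d(k=3, s=2, p=2, output_padding=1, dilation=2)
    main = conv_transpose_out(conv_out(h, 3, 1, 1), 3, 2, 2, 1, 2)
    return shortcut, main
```

The same kind of back-of-the-envelope check applies to `Self_Attn`. Its attention matrix has (H·W)² entries per image. A 256x256 feature map would therefore need 65,536² ≈ 4.3 × 10⁹ entries, about 17 GB in float32, so such a layer is only affordable on downsampled feature maps.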

3.9 Upsampling: restoring the resolution with convolutions (up-projection)
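The forward pass of `UpProject` below builds its 2x upsampled output by interleaving four convolution results with `torch.stack`, `permute` and `view`. Here the same index shuffling is sketched in NumPy, where `np.stack`, `transpose` and `reshape` play the roles of `torch.stack`, `permute` and `contiguous().view`. The function name `interleave_2x2` is hypothetical. The four maps fill the output as follows:

- the first map fills the even rows and even columns;
- the second fills the even rows and odd columns;
- the third fills the odd rows and even columns;
- the fourth fills the odd rows and odd columns.

```python
import numpy as np

def interleave_2x2(a, b, c, d):
    """Interleave four (B, C, H, W) arrays into one (B, C, 2H, 2W) array.

    a -> even rows, even cols    b -> even rows, odd cols
    c -> odd rows,  even cols    d -> odd rows,  odd cols
    """
    B, C, H, W = a.shape
    # (B, C, 2, H, W) -> (B, C, H, W, 2) -> (B, C, H, 2W): interleave columns
    ab = np.stack((a, b), axis=-3).transpose(0, 1, 3, 4, 2).reshape(B, C, H, 2 * W)
    cd = np.stack((c, d), axis=-3).transpose(0, 1, 3, 4, 2).reshape(B, C, H, 2 * W)
    # (B, C, 2, H, 2W) -> (B, C, H, 2, 2W) -> (B, C, 2H, 2W): interleave rows
    return np.stack((ab, cd), axis=-3).transpose(0, 1, 3, 2, 4).reshape(B, C, 2 * H, 2 * W)

# Usage: four 1x1x2x3 maps filled with 1, 2, 3 and 4.
maps = [np.full((1, 1, 2, 3), v) for v in (1, 2, 3, 4)]
up = interleave_2x2(*maps)
```

Because each of the four kernels sees a slightly different neighbourhood, interleaving their outputs gives a learned 2x upsampling without zero-inserted transposed convolutions.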

# Upsampling: an up-projection block that doubles H and W by interleaving the outputs of four convolutions
class UpProject(nn.Module):
    def __init__(self, in_channels, out_channels):
        super(UpProject, self).__init__()
        # self.batch_size = batch_size
        # two sets of four kernels (3x3, 2x3, 3x2, 2x2) whose outputs are interleaved
        self.conv1_1 = nn.Conv2d(in_channels, out_channels, 3)
        self.conv1_2 = nn.Conv2d(in_channels, out_channels, (2, 3))
        self.conv1_3 = nn.Conv2d(in_channels, out_channels, (3, 2))
        self.conv1_4 = nn.Conv2d(in_channels, out_channels, 2)
        self.conv2_1 = nn.Conv2d(in_channels, out_channels, 3)
        self.conv2_2 = nn.Conv2d(in_channels, out_channels, (2, 3))
        self.conv2_3 = nn.Conv2d(in_channels, out_channels, (3, 2))
        self.conv2_4 = nn.Conv2d(in_channels, out_channels, 2)
        self.bn1_1 = nn.BatchNorm2d(out_channels)
        self.bn1_2 = nn.BatchNorm2d(out_channels)
        self.relu = nn.ReLU(inplace=True)
        self.conv3 = nn.Conv2d(out_channels, out_channels, 3, padding=1)
        self.bn2 = nn.BatchNorm2d(out_channels)

    def forward(self, x):
        # b, 10, 8, 1024
        batch_size = x.shape[0]
        # F.pad order is (left, right, top, bottom); each padding keeps the conv output at H x W
        out1_1 = self.conv1_1(nn.functional.pad(x, (1, 1, 1, 1)))
        out1_2 = self.conv1_2(nn.functional.pad(x, (1, 1, 0, 1)))  # right interleaving padding
        #out1_2 = self.conv1_2(nn.functional.pad(x, (1, 1, 1, 0)))  # author's interleaving padding in github
        out1_3 = self.conv1_3(nn.functional.pad(x, (0, 1, 1, 1)))  # right interleaving padding
        #out1_3 = self.conv1_3(nn.functional.pad(x, (1, 0, 1, 1)))  # author's interleaving padding in github
        out1_4 = self.conv1_4(nn.functional.pad(x, (0, 1, 0, 1)))  # right interleaving padding
        #out1_4 = self.conv1_4(nn.functional.pad(x, (1, 0, 1, 0)))  # author's interleaving padding in github
        out2_1 = self.conv2_1(nn.functional.pad(x, (1, 1, 1, 1)))
        out2_2 = self.conv2_2(nn.functional.pad(x, (1, 1, 0, 1)))  # right interleaving padding
        #out2_2 = self.conv2_2(nn.functional.pad(x, (1, 1, 1, 0)))  # author's interleaving padding in github
        out2_3 = self.conv2_3(nn.functional.pad(x, (0, 1, 1, 1)))  # right interleaving padding
        #out2_3 = self.conv2_3(nn.functional.pad(x, (1, 0, 1, 1)))  # author's interleaving padding in github
        out2_4 = self.conv2_4(nn.functional.pad(x, (0, 1, 0, 1)))  # right interleaving padding
        #out2_4 = self.conv2_4(nn.functional.pad(x, (1, 0, 1, 0)))  # author's interleaving padding in github
        height = out1_1.size()[2]
        width = out1_1.size()[3]
        # interleave along the width, then along the height, to get a 2H x 2W map
        out1_1_2 = torch.stack((out1_1, out1_2), dim=-3).permute(0, 1, 3, 4, 2).contiguous().view(
            batch_size, -1, height, width * 2)
        out1_3_4 = torch.stack((out1_3, out1_4), dim=-3).permute(0, 1, 3, 4, 2).contiguous().view(
            batch_size, -1, height, width * 2)
        out1_1234 = torch.stack((out1_1_2, out1_3_4), dim=-3).permute(0, 1, 3, 2, 4).contiguous().view(
            batch_size, -1, height * 2, width * 2)
        out2_1_2 = torch.stack((out2_1, out2_2), dim=-3).permute(0, 1, 3, 4, 2).contiguous(