Installing K8S 1.24 and KubeSphere 3.3 with KubeKey All-in-One

Posted by 虎鲸不是鱼

Preface: This article was compiled by the editors of 小常识网 (cha138.com). It covers installing K8S 1.24 and KubeSphere 3.3 with KubeKey's all-in-one mode, and we hope it is a useful reference.

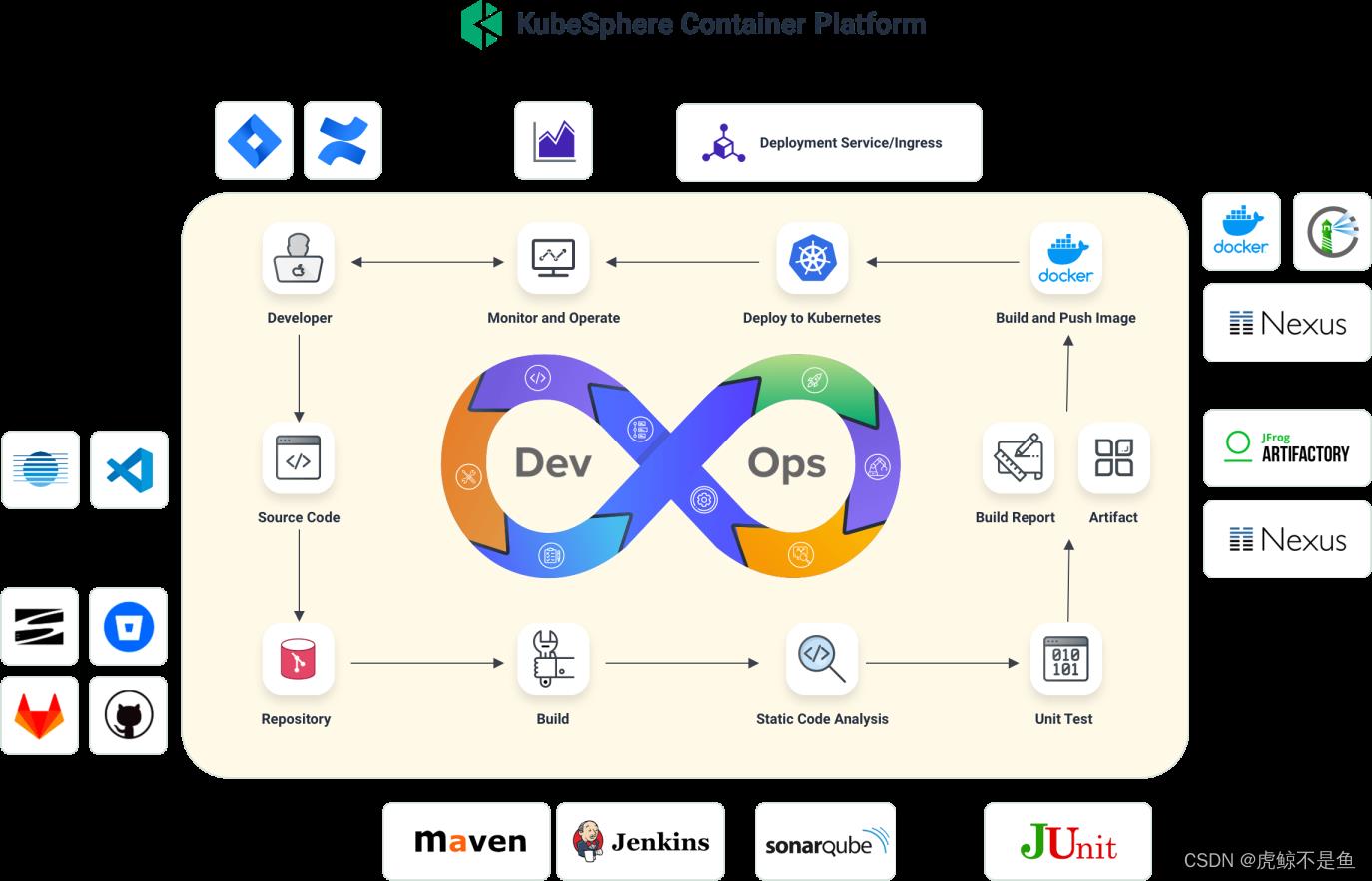

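KubeSphere Overview

KubeSphere has Chinese documentation and others have already introduced it in detail, so I won't repeat that here; this post focuses on the hands-on installation.

KubeSphere makes it easy to deploy a wide range of DevOps components.

All the commonly used components are included, which makes it very friendly to developers who are not full-time ops engineers, and building Jenkins-based DevOps pipelines is very convenient. Its UI is also much richer than the Dashboard that ships with K8S. I'll try building pipelines later; first, install the base environment.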

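KubeKey Overview

Official docs: https://kubesphere.com.cn/docs/v3.3/installing-on-linux/introduction/kubekey/

GitHub: https://github.com/kubesphere/kubekey

GitHub README in Chinese: https://github.com/kubesphere/kubekey/blob/master/README_zh-CN.md

KubeKey is written in Go, so unlike Ansible it does not depend on a runtime environment, and it can install Kubernetes and KubeSphere together. KubeKey can also upgrade a K8S cluster, scale it out or in, and install add-ons from YAML, which again makes it friendly to developers who are not full-time ops engineers.

Official documentation for a multi-node installation of K8S and KubeSphere: https://kubesphere.com.cn/docs/v3.3/installing-on-linux/introduction/multioverview/

Choosing All-in-One

Rationale

K8S was designed to run forever: it tries to keep every Pod running normally at all times. My host machine, however, cannot stay on permanently. I once had a big-data cluster where, after the VMs were suspended and resumed, NTP clock drift between nodes brought HBase down. Having learned from that, I chose All-in-One for my personal K8S: when I'm not using it I simply suspend the VM, instead of restarting several VMs and all their components every time. Kuboard is also convenient, but I have always preferred heavier, more full-featured tools. In addition, Kuboard can currently only build clusters up to K8S 1.23, which is a bit old, and after a restart you have to force-delete broken pods yourself: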

kubectl delete pods <pod-name> -n <namespace> --grace-period=0 --force
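Deleting stuck pods one at a time gets tedious after every restart. The sketch below only prints the force-delete commands for pods whose STATUS is Terminating, Unknown or Error, so you can review them before running anything; the helper name stuck_pod_delete_cmds and the set of "stuck" statuses are my own assumptions, not something Kuboard or kubectl provides.

```shell
# Print (do not run) force-delete commands for pods stuck after a reboot.
# Input: output of `kubectl get pods -A --no-headers`, whose columns are
# NAMESPACE NAME READY STATUS RESTARTS AGE.
# Which statuses count as "stuck" is an assumption; adjust as needed.
stuck_pod_delete_cmds() {
  awk '$4 == "Terminating" || $4 == "Unknown" || $4 == "Error" {
    printf "kubectl delete pods %s -n %s --grace-period=0 --force\n", $2, $1
  }'
}

# Usage:
#   kubectl get pods -A --no-headers | stuck_pod_delete_cmds        # review
#   kubectl get pods -A --no-headers | stuck_pod_delete_cmds | sh   # execute
```

Force deletion skips graceful shutdown, so review the printed list before piping it to sh.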

所以最终选择了All-in-one的KubeSphere。

Kubekey版本

根据Github的官方文档:https://github.com/kubesphere/kubekey/releases

当然是选用目前最新的2.2.2。

虚拟机配置

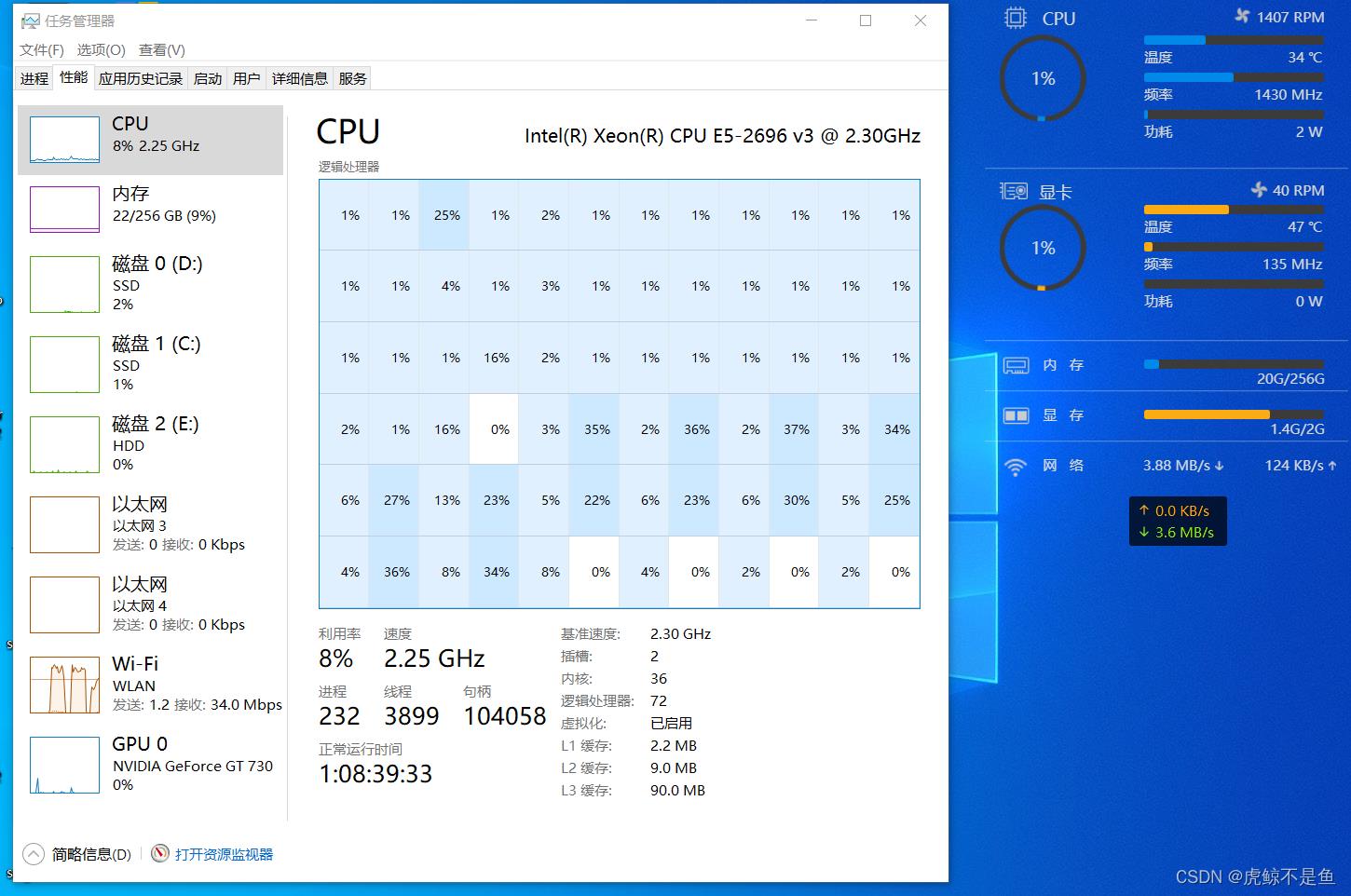

官网建议默认最小化安装最低2C+4G+40G,双路E5 2696V3 + 256G + 3块硬盘的宿主机可以奢侈一点,笔者分配12C + 40G + 300G,一步到位,方便后期加入更多组件。较官网建议的8C + 16G + 100G只多不少。

Linux Version

Supported Linux distributions:

- Ubuntu 16.04, 18.04, 20.04

- Debian Buster, Stretch

- CentOS/RHEL 7

- SUSE Linux Enterprise Server 15

Recommended Linux kernel version:

4.15 or later

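You can check the Linux kernel version with the command uname -srm.

I chose Ubuntu because CentOS has been discontinued and Ubuntu ships a newer kernel, which better supports the new features of K8S 1.24. CentOS 7.9 also works after a kernel upgrade; it's a matter of preference. For now I don't recommend Ubuntu 22.04 to developers who aren't full-time ops engineers: installing a version as new as K8S 1.24 together with a CNI plugin such as Calico runs into problems. If you use Flannel, never mind...

K8S Versions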
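The same check can be scripted instead of read by eye. A minimal sketch, assuming GNU sort with the -V (version sort) option, which both Ubuntu and CentOS ship; kernel_at_least is a hypothetical helper name.

```shell
# Return 0 if kernel release $1 (e.g. from `uname -r`) is at least version $2.
kernel_at_least() {
  local have="${1%%-*}"   # drop the build suffix: "5.4.0-122-generic" -> "5.4.0"
  local want="$2"
  # sort -V orders version strings; if $want comes first (or ties), $have >= $want.
  [ "$(printf '%s\n%s\n' "$want" "$have" | sort -V | head -n 1)" = "$want" ]
}

# Usage:
#   kernel_at_least "$(uname -r)" 4.15 && echo "kernel OK" || echo "kernel too old"
```

For reference, Ubuntu 20.04's stock kernel is 5.4, while CentOS 7 ships 3.10, which is why CentOS 7 needs a kernel upgrade first.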

- v1.17: v1.17.9

- v1.18: v1.18.8

- v1.19: v1.19.9

- v1.20: v1.20.10

- v1.21: v1.21.13

- v1.22: v1.22.12

- v1.23: v1.23.9 (default)

- v1.24: v1.24.3

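KubeKey currently supports these mainstream versions; the GitHub README is more up to date than the docs on the official website.

Naturally I chose 1.24: it removed dockershim, which makes it a milestone release.

Container Runtime

Common runtimes are docker, crio, containerd and isula. containerd is the natural choice today: since K8S 1.24 removed dockershim, using docker would require the separate cri-dockerd adapter, so docker is now the outdated option.

Dependencies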

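| Dependency | Kubernetes ≥ 1.18 | Kubernetes < 1.18 |
|---|---|---|
| socat | Required | Optional but recommended |
| conntrack | Required | Optional but recommended |
| ebtables | Optional but recommended | Optional but recommended |
| ipset | Optional but recommended | Optional but recommended |
| ipvsadm | Optional but recommended | Optional but recommended |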

从官方文档可以看出必须安装socat及conntrack。
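To see which of these dependencies are still missing on a node, you can test each binary with command -v. A minimal sketch; missing_cmds is a hypothetical helper, and the install line in the comments assumes that on Ubuntu each binary is provided by a package of the same name.

```shell
# Print each command name passed as an argument that is not found on PATH.
missing_cmds() {
  for cmd in "$@"; do
    command -v "$cmd" > /dev/null 2>&1 || echo "$cmd"
  done
}

# Usage on Ubuntu (assumes binary name == package name):
#   missing_cmds socat conntrack ebtables ipset ipvsadm
#   sudo apt-get install -y $(missing_cmds socat conntrack ebtables ipset ipvsadm)
```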

虚拟机准备

为了方便使用,笔者选用Ubuntu20.04LTS的Desktop image,自己玩玩有GUI还是很方便。

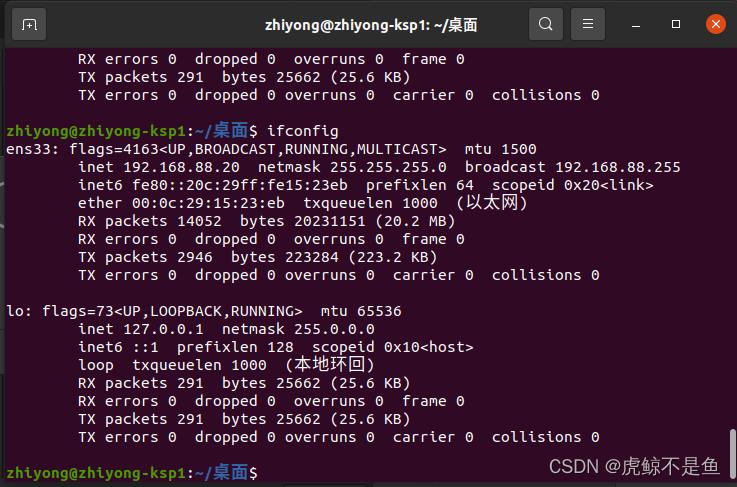

配置网卡

直接GUI中设置即可:

设置固定IP:192.168.88.20

设置子网掩码:255.255.255.0

设置网关:192.168.88.2

并且禁用IPV6。

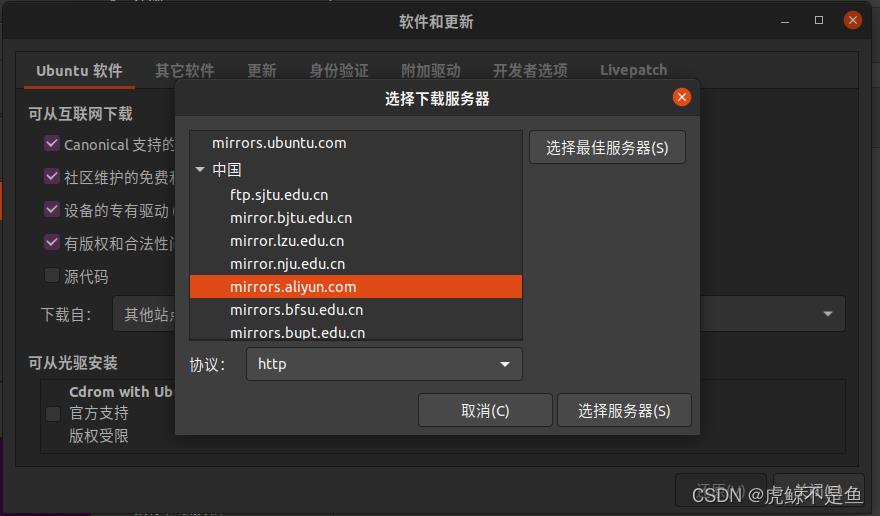
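Whichever tool sets the address, the gateway has to sit in the same subnet as the static IP, i.e. both addresses must give the same result when ANDed with the netmask. A minimal IPv4-only sketch of that check in plain shell arithmetic; ip_to_int and same_subnet are hypothetical helper names.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  set -- $1   # split "192.168.88.20" into 192 168 88 20
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Return 0 if addresses $1 and $2 are in the same subnet under netmask $3.
same_subnet() {
  local a b m
  a=$(ip_to_int "$1")
  b=$(ip_to_int "$2")
  m=$(ip_to_int "$3")
  [ $(( a & m )) -eq $(( b & m )) ]
}

# Usage with the values above:
#   same_subnet 192.168.88.20 192.168.88.2 255.255.255.0 && echo "gateway is on-link"
```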

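Switching to the Aliyun Mirror

From within mainland China, the Aliyun mirror is usually much faster than Ubuntu's default mirrors.

Installing Required Packages

Ubuntu Desktop lacks many command-line tools by default; you have to install them manually before you can use common commands such as SSH.

sudo apt install net-tools

After that, net-tools (ifconfig and friends) is available, and you can confirm that the static IP has taken effect.

SSH must be installed; otherwise remote connections fail with "Network error: Connection refused". Only after installing it can you connect and transfer files with MobaXterm. Then install KubeKey's required dependencies socat and conntrack, plus curl: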
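If you prefer the command line to the GUI, the switch can be done with a sed rewrite of /etc/apt/sources.list. A minimal sketch, assuming the stock Ubuntu 20.04 entries point at archive.ubuntu.com, a country mirror such as cn.archive.ubuntu.com, or security.ubuntu.com, and that the Aliyun mirror lives at http://mirrors.aliyun.com/ubuntu; to_aliyun_mirror is a hypothetical helper name.

```shell
# Rewrite Ubuntu's default archive/security URLs to the Aliyun mirror.
# Reads sources.list on stdin and writes the rewritten file to stdout.
to_aliyun_mirror() {
  sed -E 's#https?://([a-z]+\.)?(archive|security)\.ubuntu\.com/ubuntu#http://mirrors.aliyun.com/ubuntu#g'
}

# Usage (keeps a backup of the original file):
#   sudo cp /etc/apt/sources.list /etc/apt/sources.list.bak
#   to_aliyun_mirror < /etc/apt/sources.list.bak | sudo tee /etc/apt/sources.list > /dev/null
#   sudo apt-get update
```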

sudo apt-get install openssh-server

sudo apt-get install openssh-client

sudo apt-get install socat

sudo apt-get install conntrack

sudo apt-get install curl

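Installing KubeKey

Run the following as the normal user (creating the directory with sudo would make it owned by root, and the download would then fail):

mkdir -p /home/zhiyong/kubesphereinstall

cd /home/zhiyong/kubesphereinstall

export KKZONE=cn # download from the China (CN) mirror

curl -sfL https://get-kk.kubesphere.io | VERSION=v2.2.2 sh - # download and extract kk

chmod +x kk # optional: the extracted kk is already executable

Wait for the download to finish: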

zhiyong@zhiyong-ksp1:~/kubesphereinstall$ curl -sfL https://get-kk.kubesphere.io | VERSION=v2.2.2 sh -

Downloading kubekey v2.2.2 from https://kubernetes.pek3b.qingstor.com/kubekey/releases/download/v2.2.2/kubekey-v2.2.2-linux-amd64.tar.gz ...

Kubekey v2.2.2 Download Complete!

zhiyong@zhiyong-ksp1:~/kubesphereinstall$ ll

total 70336

drwxrwxr-x 2 zhiyong zhiyong 4096 Aug 8 01:03 ./

drwxr-xr-x 16 zhiyong zhiyong 4096 Aug 8 00:52 ../

-rwxr-xr-x 1 zhiyong zhiyong 54910976 Jul 26 14:17 kk*

-rw-rw-r-- 1 zhiyong zhiyong 17102249 Aug 8 01:03 kubekey-v2.2.2-linux-amd64.tar.gz
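If the get-kk script fails, the same tarball can be downloaded by hand. The URL pattern below is copied from the download log above; assuming it holds for other versions and architectures is my own extrapolation, and kk_tarball_url is a hypothetical helper.

```shell
# Hypothetical helper: build the KubeKey tarball URL that get-kk used with KKZONE=cn.
# Pattern taken from the v2.2.2 download log; other versions/arches are assumed to match.
kk_tarball_url() {
  version="$1"
  arch="${2:-amd64}"
  echo "https://kubernetes.pek3b.qingstor.com/kubekey/releases/download/${version}/kubekey-${version}-linux-${arch}.tar.gz"
}

# Manual download and extraction:
#   curl -fLO "$(kk_tarball_url v2.2.2)"
#   tar -zxf kubekey-v2.2.2-linux-amd64.tar.gz
```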

Launching KubeKey

Install specific versions of K8S and KubeSphere:

export KKZONE=cn

./kk create cluster --with-kubernetes v1.24.3 --with-kubesphere v3.3.0 --container-manager containerd
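The two gotchas that follow (kk must run as root, and KKZONE=cn must be visible to that root process) can be folded into one wrapper. On Ubuntu sudo resets the environment by default, so a KKZONE exported as the normal user is probably lost after sudo su root; consistent with that, the failed run below downloads kubeadm from storage.googleapis.com instead of the CN mirror. A minimal sketch; kk_create_cluster and its DRY_RUN switch are hypothetical, and it assumes kk sits in the current directory.

```shell
# Hypothetical wrapper around the kk command used in this article.
# Usage: kk_create_cluster <k8s-version> <kubesphere-version>
# With DRY_RUN=1 it only prints the command line it would run.
kk_create_cluster() {
  k8s_version="$1"
  ks_version="$2"
  set -- ./kk create cluster --with-kubernetes "$k8s_version" \
    --with-kubesphere "$ks_version" --container-manager containerd
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
    return 0
  fi
  # kk refuses to run as a normal user ("Please use root!").
  if [ "$(id -u)" -ne 0 ]; then
    echo "kk must be run as root (e.g. after sudo -i)" >&2
    return 1
  fi
  # Set KKZONE explicitly so it survives sudo's environment reset.
  KKZONE=cn "$@"
}
```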

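KubeKey must be run as root. On Ubuntu the root account has no password by default, so su fails with an authentication error; switching with sudo works: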

zhiyong@zhiyong-ksp1:~/kubesphereinstall$ ./kk create cluster --with-kubernetes v1.24.3 --with-kubesphere v3.3.0 --container-manager containerd

error: Current user is zhiyong. Please use root!

zhiyong@zhiyong-ksp1:~/kubesphereinstall$ su - root

Password:

su: Authentication failure

zhiyong@zhiyong-ksp1:~/kubesphereinstall$ su root

Password:

su: Authentication failure

zhiyong@zhiyong-ksp1:~/kubesphereinstall$ sudo su root

root@zhiyong-ksp1:/home/zhiyong/kubesphereinstall# ./kk create cluster --with-kubernetes v1.24.3 --with-kubesphere v3.3.0 --container-manager containerd

[KubeKey ASCII-art banner]

01:11:22 CST [GreetingsModule] Greetings

01:11:23 CST message: [zhiyong-ksp1]

Greetings, KubeKey!

01:11:23 CST success: [zhiyong-ksp1]

01:11:23 CST [NodePreCheckModule] A pre-check on nodes

01:11:23 CST success: [zhiyong-ksp1]

01:11:23 CST [ConfirmModule] Display confirmation form

+--------------+------+------+---------+----------+-------+-------+---------+-----------+--------+--------+------------+------------+-------------+------------------+--------------+

| name | sudo | curl | openssl | ebtables | socat | ipset | ipvsadm | conntrack | chrony | docker | containerd | nfs client | ceph client | glusterfs client | time |

+--------------+------+------+---------+----------+-------+-------+---------+-----------+--------+--------+------------+------------+-------------+------------------+--------------+

| zhiyong-ksp1 | y | y | y | y | y | | | y | | | y | | | | CST 01:11:23 |

+--------------+------+------+---------+----------+-------+-------+---------+-----------+--------+--------+------------+------------+-------------+------------------+--------------+

This is a simple check of your environment.

Before installation, ensure that your machines meet all requirements specified at

https://github.com/kubesphere/kubekey#requirements-and-recommendations

Continue this installation? [yes/no]: yes

01:12:55 CST success: [LocalHost]

01:12:55 CST [NodeBinariesModule] Download installation binaries

01:12:55 CST message: [localhost]

downloading amd64 kubeadm v1.24.3 ...

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 42.3M 100 42.3M 0 0 1115k 0 0:00:38 0:00:38 --:--:-- 1034k

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 42.3M 100 42.3M 0 0 813k 0 0:00:53 0:00:53 --:--:-- 674k

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 42.3M 100 42.3M 0 0 607k 0 0:01:11 0:01:11 --:--:-- 616k

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 42.3M 100 42.3M 0 0 696k 0 0:01:02 0:01:02 --:--:-- 778k

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 42.3M 100 42.3M 0 0 1686k 0 0:00:25 0:00:25 --:--:-- 4429k

01:17:09 CST message: [LocalHost]

Failed to download kubeadm binary: curl -L -o /home/zhiyong/kubesphereinstall/kubekey/kube/v1.24.3/amd64/kubeadm https://storage.googleapis.com/kubernetes-release/release/v1.24.3/bin/linux/amd64/kubeadm error: No SHA256 found for kubeadm. v1.24.3 is not supported.

01:17:09 CST failed: [LocalHost]

error: Pipeline[CreateClusterPipeline] execute failed: Module[NodeBinariesModule] exec failed:

failed: [LocalHost] [DownloadBinaries] exec failed after 1 retires: Failed to download kubeadm binary: curl -L -o /home/zhiyong/kubesphereinstall/kubekey/kube/v1.24.3/amd64/kubeadm https://storage.googleapis.com/kubernetes-release/release/v1.24.3/bin/linux/amd64/kubeadm error: No SHA256 found for kubeadm. v1.24.3 is not supported.

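The error means KubeKey found no SHA256 checksum for kubeadm v1.24.3 and therefore treats that version as unsupported, so it refuses to install K8S 1.24.3 from binaries even though the downloads themselves completed. Most likely the checksum list bundled with this kk v2.2.2 build simply does not include v1.24.3, despite the version appearing in the GitHub README.

After several attempts, I found that K8S 1.24.1 can currently be installed: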
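Instead of trying patch versions by hand, you can pick the newest patch of a minor version from a version list. A minimal sketch, assuming one version per line (anything after the version, such as "(default)", is ignored). Feed it the list reported by the kk binary itself rather than the README, since the two disagreed here; recent KubeKey READMEs document ./kk version --show-supported-k8s for this, but whether v2.2.2 already has that flag is an assumption. latest_patch is a hypothetical helper name.

```shell
# Print the newest patch release of minor version $1 (e.g. v1.24) from a
# list of versions on stdin, one per line; text after the version is ignored.
latest_patch() {
  minor_re=$(printf '%s' "$1" | sed 's/\./\\./g')   # escape dots for grep -E
  awk '{print $1}' | grep -E "^${minor_re}\.[0-9]+$" | sort -V | tail -n 1
}

# Usage (flag availability in v2.2.2 is an assumption):
#   ./kk version --show-supported-k8s | latest_patch v1.24
```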

root@zhiyong-ksp1:/home/zhiyong/kubesphereinstall# ./kk create cluster --with-kubernetes v1.24.1 --with-kubesphere v3.3.0 --container-manager containerd

[KubeKey ASCII-art banner]

07:55:51 CST [GreetingsModule] Greetings

07:55:51 CST message: [zhiyong-ksp1]

Greetings, KubeKey!

07:55:51 CST success: [zhiyong-ksp1]

07:55:51 CST [NodePreCheckModule] A pre-check on nodes

07:55:51 CST success: [zhiyong-ksp1]

07:55:51 CST [ConfirmModule] Display confirmation form

+--------------+------+------+---------+----------+-------+-------+---------+-----------+--------+--------+------------+------------+-------------+------------------+--------------+

| name | sudo | curl | openssl | ebtables | socat | ipset | ipvsadm | conntrack | chrony | docker | containerd | nfs client | ceph client | glusterfs client | time |

+--------------+------+------+---------+----------+-------+-------+---------+-----------+--------+--------+------------+------------+-------------+------------------+--------------+

| zhiyong-ksp1 | y | y | y | y | y | | | y | | | y | | | | CST 07:55:51 |

+--------------+------+------+---------+----------+-------+-------+---------+-----------+--------+--------+------------+------------+-------------+------------------+--------------+

This is a simple check of your environment.

Before installation, ensure that your machines meet all requirements specified at

https://github.com/kubesphere/kubekey#requirements-and-recommendations

Continue this installation? [yes/no]: yes

07:55:53 CST success: [LocalHost]

07:55:53 CST [NodeBinariesModule] Download installation binaries

07:55:53 CST message: [localhost]

downloading amd64 kubeadm v1.24.1 ...

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 42.3M 100 42.3M 0 0 1019k 0 0:00:42 0:00:42 --:--:-- 1061k

07:56:36 CST message: [localhost]

downloading amd64 kubelet v1.24.1 ...

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 110M 100 110M 0 0 1022k 0 0:01:51 0:01:51 --:--:-- 1141k

07:58:29 CST message: [localhost]

downloading amd64 kubectl v1.24.1 ...

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 43.5M 100 43.5M 0 0 994k 0 0:00:44 0:00:44 --:--:-- 997k

07:59:14 CST message: [localhost]

downloading amd64 helm v3.6.3 ...

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 43.0M 100 43.0M 0 0 1014k 0 0:00:43 0:00:43 --:--:-- 1026k

07:59:58 CST message: [localhost]

downloading amd64 kubecni v0.9.1 ...

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 37.9M 100 37.9M 0 0 1011k 0 0:00:38 0:00:38 --:--:-- 1027k

08:00:37 CST message: [localhost]

downloading amd64 crictl v1.24.0 ...

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 13.8M 100 13.8M 0 0 1012k 0 0:00:14 0:00:14 --:--:-- 1051k

08:00:51 CST message: [localhost]

downloading amd64 etcd v3.4.13 ...

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 16.5M 100 16.5M 0 0 1022k 0 0:00:16 0:00:16 --:--:-- 1070k

08:01:08 CST message: [localhost]

downloading amd64 containerd 1.6.4 ...

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 42.3M 100 42.3M 0 0 1011k 0 0:00:42 0:00:42 --:--:-- 1087k

08:01:51 CST message: [localhost]

downloading amd64 runc v1.1.1 ...

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 9194k 100 9194k 0 0 999k 0 0:00:09 0:00:09 --:--:-- 1089k

08:02:01 CST success: [LocalHost]

08:02:01 CST [ConfigureOSModule] Prepare to init OS

08:02:01 CST success: [zhiyong-ksp1]

08:02:01 CST [ConfigureOSModule] Generate init os script

08:02:01 CST success: [zhiyong-ksp1]

08:02:01 CST [ConfigureOSModule] Exec init os script

08:02:03 CST stdout: [zhiyong-ksp1]

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-arptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_local_reserved_ports = 30000-32767

vm.max_map_count = 262144

vm.swappiness = 1

fs.inotify.max_user_instances = 524288

kernel.pid_max = 65535

08:02:03 CST success: [zhiyong-ksp1]

08:02:03 CST [ConfigureOSModule] configure the ntp server for each node

08:02:03 CST skipped: [zhiyong-ksp1]

08:02:03 CST [KubernetesStatusModule] Get kubernetes cluster status

08:02:03 CST success: [zhiyong-ksp1]

08:02:03 CST [InstallContainerModule] Sync containerd binaries

08:02:06 CST success: [zhiyong-ksp1]

08:02:06 CST [InstallContainerModule] Sync crictl binaries

08:02:07 CST success: [zhiyong-ksp1]

08:02:07 CST [InstallContainerModule] Generate containerd service

08:02:07 CST success: [zhiyong-ksp1]

08:02:07 CST [InstallContainerModule] Generate containerd config

08:02:07 CST success: [zhiyong-ksp1]

08:02:07 CST [InstallContainerModule] Generate crictl config

08:02:07 CST success: [zhiyong-ksp1]

08:02:07 CST [InstallContainerModule] Enable containerd

08:02:08 CST success: [zhiyong-ksp1]

08:02:08 CST [PullModule] Start to pull images on all nodes

08:02:08 CST message: [zhiyong-ksp1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/pause:3.7

08:02:11 CST message: [zhiyong-ksp1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-apiserver:v1.24.1

08:02:22 CST message: [zhiyong-ksp1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controller-manager:v1.24.1

08:02:31 CST message: [zhiyong-ksp1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-scheduler:v1.24.1

08:02:38 CST message: [zhiyong-ksp1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-proxy:v1.24.1

08:02:49 CST message: [zhiyong-ksp1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/coredns:1.8.6

08:02:54 CST message: [zhiyong-ksp1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/k8s-dns-node-cache:1.15.12

08:03:06 CST message: [zhiyong-ksp1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controllers:v3.23.2

08:03:21 CST message: [zhiyong-ksp1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/cni:v3.23.2

08:03:49 CST message: [zhiyong-ksp1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/node:v3.23.2

08:04:10 CST message: [zhiyong-ksp1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/pod2daemon-flexvol:v3.23.2

08:04:13 CST success: [zhiyong-ksp1]

08:04:13 CST [ETCDPreCheckModule] Get etcd status

08:04:13 CST success: [zhiyong-ksp1]

08:04:13 CST [CertsModule] Fetch etcd certs

08:04:13 CST success: [zhiyong-ksp1]

08:04:13 CST [CertsModule] Generate etcd Certs

[certs] Generating "ca" certificate and key

[certs] admin-zhiyong-ksp1 serving cert is signed for DNS names [etcd etcd.kube-system etcd.kube-system.svc etcd.kube-system.svc.cluster.local lb.kubesphere.local localhost zhiyong-ksp1] and IPs [127.0.0.1 ::1 192.168.88.20]

[certs] member-zhiyong-ksp1 serving cert is signed for DNS names [etcd etcd.kube-system etcd.kube-system.svc etcd.kube-system.svc.cluster.local lb.kubesphere.local localhost zhiyong-ksp1] and IPs [127.0.0.1 ::1 192.168.88.20]

[certs] node-zhiyong-ksp1 serving cert is signed for DNS names [etcd etcd.kube-system etcd.kube-system.svc etcd.kube-system.svc.cluster.local lb.kubesphere.local localhost zhiyong-ksp1] and IPs [127.0.0.1 ::1 192.168.88.20]

08:04:15 CST success: [LocalHost]

08:04:15 CST [CertsModule] Synchronize certs file

08:04:16 CST success: [zhiyong-ksp1]

08:04:16 CST [CertsModule] Synchronize certs file to master

08:04:16 CST skipped: [zhiyong-ksp1]

08:04:16 CST [InstallETCDBinaryModule] Install etcd using binary

08:04:17 CST success: [zhiyong-ksp1]

08:04:17 CST [InstallETCDBinaryModule] Generate etcd service

08:04:17 CST success: [zhiyong-ksp1]

08:04:17 CST [InstallETCDBinaryModule] Generate access address

08:04:17 CST success: [zhiyong-ksp1]

08:04:17 CST [ETCDConfigureModule] Health check on exist etcd

08:04:17 CST skipped: [zhiyong-ksp1]

08:04:17 CST [ETCDConfigureModule] Generate etcd.env config on new etcd

08:04:17 CST success: [zhiyong-ksp1]

08:04:17 CST [ETCDConfigureModule] Refresh etcd.env config on all etcd

08:04:17 CST success: [zhiyong-ksp1]

08:04:17 CST [ETCDConfigureModule] Restart etcd

08:04:22 CST stdout: [zhiyong-ksp1]

Created symlink /etc/systemd/system/multi-user.target.wants/etcd.service → /etc/systemd/system/etcd.service.

08:04:22 CST success: [zhiyong-ksp1]

08:04:22 CST [ETCDConfigureModule] Health check on all etcd

08:04:22 CST success: [zhiyong-ksp1]

08:04:22 CST [ETCDConfigureModule] Refresh etcd.env config to exist mode on all etcd

08:04:22 CST success: [zhiyong-ksp1]

08:04:22 CST [ETCDConfigureModule] Health check on all etcd

08:04:22 CST success: [zhiyong-ksp1]

08:04:22 CST [ETCDBackupModule] Backup etcd data regularly

08:04:22 CST success: [zhiyong-ksp1]

08:04:22 CST [ETCDBackupModule] Generate backup ETCD service

08:04:23 CST success: [zhiyong-ksp1]

08:04:23 CST [ETCDBackupModule] Generate backup ETCD timer

08:04:23 CST success: [zhiyong-ksp1]

08:04:23 CST [ETCDBackupModule] Enable backup etcd service

08:04:23 CST success: [zhiyong-ksp1]

08:04:23 CST [InstallKubeBinariesModule] Synchronize kubernetes binaries

08:04:33 CST success: [zhiyong-ksp1]

08:04:33 CST [InstallKubeBinariesModule] Synchronize kubelet

08:04:33 CST success: [zhiyong-ksp1]

08:04:33 CST [InstallKubeBinariesModule] Generate kubelet service

08:04:34 CST success: [zhiyong-ksp1]

08:04:34 CST [InstallKubeBinariesModule] Enable kubelet service

08:04:34 CST success: [zhiyong-ksp1]

08:04:34 CST [InstallKubeBinariesModule] Generate kubelet env

08:04:34 CST success: [zhiyong-ksp1]

08:04:34 CST [InitKubernetesModule] Generate kubeadm config

08:04:35 CST success: [zhiyong-ksp1]

08:04:35 CST [InitKubernetesModule] Init cluster using kubeadm

08:04:51 CST stdout: [zhiyong-ksp1]

W0808 08:04:35.263265 5320 common.go:83] your configuration file uses a deprecated API spec: "kubeadm.k8s.io/v1beta2". Please use 'kubeadm config migrate --old-config old.yaml --new-config new.yaml', which will write the new, similar spec using a newer API version.

W0808 08:04:35.264800 5320 common.go:83] your configuration file uses a deprecated API spec: "kubeadm.k8s.io/v1beta2". Please use 'kubeadm config migrate --old-config old.yaml --new-config new.yaml', which will write the new, similar spec using a newer API version.

W0808 08:04:35.267646 5320 utils.go:69] The recommended value for "clusterDNS" in "KubeletConfiguration" is: [10.233.0.10]; the provided value is: [169.254.25.10]

[init] Using Kubernetes version: v1.24.1

[preflight] Running pre-flight checks

[WARNING FileExisting-ethtool]: ethtool not found in system path

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local lb.kubesphere.local localhost zhiyong-ksp1 zhiyong-ksp1.cluster.local] and IPs [10.233.0.1 192.168.88.20 127.0.0.1]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] External etcd mode: Skipping etcd/ca certificate authority generation

[certs] External etcd mode: Skipping etcd/server certificate generation

[certs] External etcd mode: Skipping etcd/peer certificate generation

[certs] External etcd mode: Skipping etcd/healthcheck-client certificate generation

[certs] External etcd mode: Skipping apiserver-etcd-client certificate generation

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 11.004889 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node zhiyong-ksp1 as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node zhiyong-ksp1 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule node-role.kubernetes.io/control-plane:NoSchedule]

[bootstrap-token] Using token: 1g0q46.cdguvbypxok882ne

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join lb.kubesphere.local:6443 --token 1g0q46.cdguvbypxok882ne \\

--discovery-token-ca-cert-hash sha256:b44eb7d34699d4efc2b51013d5e217d97e977ebbed7c77ac27934a0883501c02 \\

--control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join lb.kubesphere.local:6443 --token 1g0q46.cdguvbypxok882ne \\

--discovery-token-ca-cert-hash sha256:b44eb7d34699d4efc2b51013d5e217d97e977ebbed7c77ac27934a0883501c02

08:04:51 CST success: [zhiyong-ksp1]

08:04:51 CST [InitKubernetesModule] Copy admin.conf to ~/.kube/config

08:04:51 CST success: [zhiyong-ksp1]

08:04:51 CST [InitKubernetesModule] Remove master taint

08:04:51 CST stdout: [zhiyong-ksp1]

node/zhiyong-ksp1 untainted

08:04:51 CST stdout: [zhiyong-ksp1]

node/zhiyong-ksp1 untainted

08:04:51 CST success: [zhiyong-ksp1]

08:04:51 CST [InitKubernetesModule] Add worker label

08:04:51 CST stdout: [zhiyong-ksp1]

node/zhiyong-ksp1 labeled

08:04:51 CST success: [zhiyong-ksp1]

08:04:51 CST [ClusterDNSModule] Generate coredns service

08:04:52 CST success: [zhiyong-ksp1]

08:04:52 CST [ClusterDNSModule] Override coredns service

08:04:52 CST stdout: [zhiyong-ksp1]

service "kube-dns" deleted

08:04:53 CST stdout: [zhiyong-ksp1]

service/coredns created

Warning: resource clusterroles/system:coredns is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

clusterrole.rbac.authorization.k8s.io/system:coredns configured

08:04:53 CST success: [zhiyong-ksp1]

08:04:53 CST [ClusterDNSModule] Generate nodelocaldns

08:04:54 CST success: [zhiyong-ksp1]

08:04:54 CST [ClusterDNSModule] Deploy nodelocaldns

08:04:54 CST stdout: [zhiyong-ksp1]

serviceaccount/nodelocaldns created

daemonset.apps/nodelocaldns created

08:04:54 CST success: [zhiyong-ksp1]

08:04:54 CST [ClusterDNSModule] Generate nodelocaldns configmap

08:04:54 CST success: [zhiyong-ksp1]

08:04:54 CST [ClusterDNSModule] Apply nodelocaldns configmap

08:04:55 CST stdout: [zhiyong-ksp1]

configmap/nodelocaldns created

08:04:55 CST success: [zhiyong-ksp1]

08:04:55 CST [KubernetesStatusModule] Get kubernetes cluster status

08:04:55 CST stdout: [zhiyong-ksp1]

v1.24.1

08:04:55 CST stdout: [zhiyong-ksp1]

zhiyong-ksp1 v1.24.1 [map[address:192.168.88.20 type:InternalIP] map[address:zhiyong-ksp1 type:Hostname]]

08:04:56 CST stdout: [zhiyong-ksp1]

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

a8f69e35232215a9e8129098a4a7eede8cacaf554073374a05d970e52b156403

08:04:56 CST stdout: [zhiyong-ksp1]

secret/kubeadm-certs patched

08:04:57 CST stdout: [zhiyong-ksp1]

secret/kubeadm-certs patched

08:04:57 CST stdout: [zhiyong-ksp1]

secret/kubeadm-certs patched

08:04:57 CST stdout: [zhiyong-ksp1]

zosd83.v0iafx5s1n5d3ax7

08:04:57 CST success: [zhiyong-ksp1]

08:04:57 CST [JoinNodesModule] Generate kubeadm config

08:04:57 CST skipped: [zhiyong-ksp1]

08:04:57 CST [JoinNodesModule] Join control-plane node

08:04:57 CST skipped: [zhiyong-ksp1]

08:04:57 CST [JoinNodesModule] Join worker node

08:04:57 CST skipped: [zhiyong-ksp1]

08:04:57 CST [JoinNodesModule] Copy admin.conf to ~/.kube/config

08:04:57 CST skipped: [zhiyong-ksp1]

08:04:57 CST [JoinNodesModule] Remove master taint

08:04:57 CST skipped: [zhiyong-ksp1]

08:04:57 CST [JoinNodesModule] Add worker label to master

08:04:57 CST skipped: [zhiyong-ksp1]

08:04:57 CST [JoinNodesModule] Synchronize kube config to worker

08:04:57 CST skipped: [zhiyong-ksp1]

08:04:57 CST [JoinNodesModule] Add worker label to worker

08:04:57 CST skipped: [zhiyong-ksp1]

08:04:57 CST [DeployNetworkPluginModule] Generate calico

08:04:57 CST success: [zhiyong-ksp1]

08:04:57 CST [DeployNetworkPluginModule] Deploy calico

08:04:58 CST stdout: [zhiyong-ksp1]

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

serviceaccount/calico-node created

deployment.apps/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

poddisruptionbudget.policy/calico-kube-controllers created

08:04:58 CST success: [zhiyong-ksp1]

08:04:58 CST [ConfigureKubernetesModule] Configure kubernetes

08:04:58 CST success: [zhiyong-ksp1]

08:04:58 CST [ChownModule] Chown user $HOME/.kube dir

08:04:58 CST success: [zhiyong-ksp1]

08:04:58 CST [AutoRenewCertsModule] Generate k8s certs renew script

08:04:58 CST success: [zhiyong-ksp1]

08:04:58 CST [AutoRenewCertsModule] Generate k8s certs renew service

08:04:58 CST success: [zhiyong-ksp1]

08:04:58 CST [AutoRenewCertsModule] Generate k8s certs renew timer

08:04:58 CST success: [zhiyong-ksp1]

08:04:58 CST [AutoRenewCertsModule] Enable k8s certs renew service

08:04:58 CST success: [zhiyong-ksp1]

08:04:58 CST [SaveKubeConfigModule] Save kube config as a configmap

08:04:58 CST success: [LocalHost]

08:04:58 CST [AddonsModule] Install addons

08:04:58 CST success: [LocalHost]

08:04:58 CST [DeployStorageClassModule] Generate OpenEBS manifest

08:04:59 CST success: [zhiyong-ksp1]

08:04:59 CST [DeployStorageClassModule] Deploy OpenEBS as cluster default StorageClass

08:05:00 CST success: [zhiyong-ksp1]

08:05:00 CST [DeployKubeSphereModule] Generate KubeSphere ks-installer crd manifests

08:05:00 CST success: [zhiyong-ksp1]

08:05:00 CST [DeployKubeSphereModule] Apply ks-installer

08:05:01 CST stdout: [zhiyong-ksp1]

namespace/kubesphere-system created

serviceaccount/ks-installer created

customresourcedefinition.apiextensions.k8s.io/clusterconfigurations.installer.kubesphere.io created

clusterrole.rbac.authorization.k8s.io/ks-installer created

clusterrolebinding.rbac.authorization.k8s.io/ks-installer created

deployment.apps/ks-installer created

08:05:01 CST success: [zhiyong-ksp1]

08:05:01 CST [DeployKubeSphereModule] Add config to ks-installer manifests

08:05:01 CST success: [zhiyong-ksp1]

08:05:01 CST [DeployKubeSphereModule] Create the kubesphere namespace

08:05:01 CST success: [zhiyong-ksp1]

08:05:01 CST [DeployKubeSphereModule] Setup ks-installer config

08:05:01 CST stdout: [zhiyong-ksp1]

secret/kube-etcd-client-certs created

08:05:01 CST success: [zhiyong-ksp1]

08:05:01 CST [DeployKubeSphereModule] Apply ks-installer

08:05:04 CST stdout: [zhiyong-ksp1]

namespace/kubesphere-system unchanged

serviceaccount/ks-installer unchanged

customresourcedefinition.apiextensions.k8s.io/clusterconfigurations.installer.kubesphere.io unchanged

clusterrole.rbac.authorization.k8s.io/ks-installer unchanged

clusterrolebinding.rbac.authorization.k8s.io/ks-installer unchanged

deployment.apps/ks-installer unchanged

clusterconfiguration.installer.kubesphere.io/ks-installer created

At this point the dual E5 CPUs have plenty of cores, but they are far from fully loaded.

Wait a bit longer:

08:05:04 CST success: [zhiyong-ksp1]

#####################################################

### Welcome to KubeSphere! ###

#####################################################

Console: http://192.168.88.20:30880

Account: admin

Password: P@88w0rd

NOTES:

1. After you log into the console, please check the

monitoring status of service components in

"Cluster Management". If any service is not

ready, please wait patiently until all components

are up and running.

2. Please change the default password after login.

#####################################################
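The NOTES ask you to wait until every component is running. Rather than refreshing the browser, you can poll the console address until it answers. A minimal sketch; retry_until is a hypothetical helper, and the timeout and interval in the usage comment are arbitrary values.

```shell
# Run "$@" every <interval> seconds until it succeeds.
# Gives up after roughly <timeout> seconds of waiting (command runtime not counted).
# Usage: retry_until <timeout-seconds> <interval-seconds> command [args...]
retry_until() {
  timeout_s="$1"
  interval_s="$2"
  shift 2
  waited=0
  until "$@"; do
    waited=$((waited + interval_s))
    if [ "$waited" -gt "$timeout_s" ]; then
      return 1
    fi
    sleep "$interval_s"
  done
}

# Example: wait up to 30 minutes for the KubeSphere console.
#   retry_until 1800 10 curl -sf -o /dev/null http://192.168.88.20:30880 && echo "console is up"
# KubeSphere's installation docs also suggest following the installer log:
#   kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l 'app in (ks-install, ks-installer)' -o jsonpath='{.items[0].metadata.name}') -f
```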