Deep Learning: Processing the MNIST Dataset with NN, CNN, and RNN Networks

Posted by 小飞龙程序员

1. Processing MNIST with a fully connected neural network (NN)

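# Process the MNIST dataset with a fully connected neural network (NN)
# Note: this script uses the TensorFlow 1.x API (tf.placeholder, tf.Session)
# 1. Imports
import tensorflow as tf
import numpy as np
import matplotlib.pyplot as plt
from tensorflow.examples.tutorials.mnist import input_data

# 2. Load the MNIST dataset with one-hot labels
mnist=input_data.read_data_sets('mnist_data',one_hot=True)
x_data=mnist.train.images
y_data=mnist.train.labels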

# 3. Placeholders for flattened 28x28 images and one-hot labels
x=tf.placeholder(tf.float32,shape=[None,28*28])
y=tf.placeholder(tf.float32,shape=[None,10])

# 4. Weights and biases for the three layers (784 -> 200 -> 100 -> 10)
w1=tf.Variable(tf.random_normal([28*28,200]))
b1=tf.Variable(tf.random_normal([200]))
w2=tf.Variable(tf.random_normal([200,100]))
b2=tf.Variable(tf.random_normal([100]))
w3=tf.Variable(tf.random_normal([100,10]))
b3=tf.Variable(tf.random_normal([10]))

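# 5. Forward pass: two tanh hidden layers, then a linear output layer (logits)
a1=tf.tanh(tf.matmul(x,w1)+b1)
a2=tf.tanh(tf.matmul(a1,w2)+b2)
a3=tf.matmul(a2,w3)+b3

# 6. Cost function: mean softmax cross-entropy over the batch
cost=tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits=a3,labels=y))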

# 7. Mini-batch gradient descent with hand-written backpropagation
# (a built-in optimizer alternative is left commented out)
# optimiter=tf.train.AdamOptimizer(learning_rate=0.001).minimize(cost)
# a3 holds logits, so the gradient of softmax cross-entropy w.r.t. a3 is softmax(a3)-y
dz3=tf.nn.softmax(a3)-y
dw3=tf.matmul(tf.transpose(a2),dz3)/tf.cast(tf.shape(a2)[0],dtype=tf.float32)
db3=tf.reduce_mean(dz3,axis=0)
da2=tf.matmul(dz3,tf.transpose(w3))
# The hidden layers use tanh, whose derivative is 1-a^2 (a*(1-a) is the sigmoid derivative)
dz2=da2*(1-tf.square(a2))
dw2=tf.matmul(tf.transpose(a1),dz2)/tf.cast(tf.shape(a1)[0],dtype=tf.float32)
db2=tf.reduce_mean(dz2,axis=0)
da1=tf.matmul(dz2,tf.transpose(w2))
dz1=da1*(1-tf.square(a1))
dw1=tf.matmul(tf.transpose(x),dz1)/tf.cast(tf.shape(x)[0],dtype=tf.float32)
db1=tf.reduce_mean(dz1,axis=0)
learning=0.01
# One gradient-descent update per parameter
optimiter=[
    tf.assign(w3,w3-learning*dw3),
    tf.assign(w2,w2-learning*dw2),
    tf.assign(w1,w1-learning*dw1),
    tf.assign(b3,b3-learning*db3),
    tf.assign(b2,b2-learning*db2),
    tf.assign(b1,b1-learning*db1),
]

# Accuracy: fraction of samples whose arg-max prediction matches the label
# correct=tf.nn.in_top_k(a3,y,1)
# accuracy=tf.reduce_mean(tf.cast(correct,tf.float32))
y_true=tf.argmax(y,1)
y_predict=tf.argmax(a3,1)
accuracy=tf.reduce_mean(tf.cast(tf.equal(y_true,y_predict),tf.float32))

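# 8. Create the session and initialize all variables
sess=tf.Session()
sess.run(tf.global_variables_initializer())

# 9. Training loop: print the average cost per epoch, then the test accuracy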

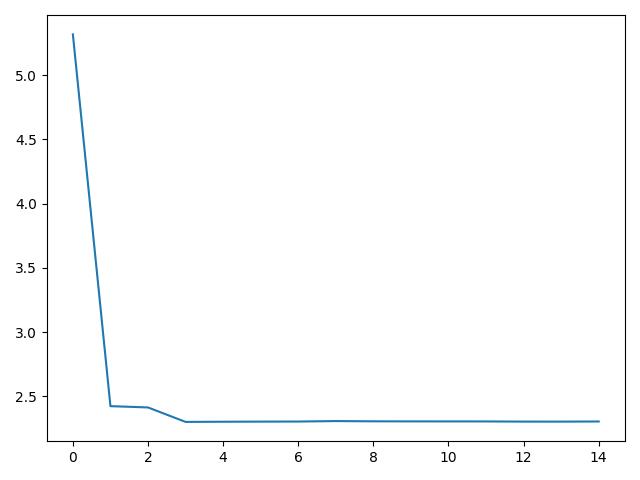

batch_size=100
train_count=20
ls=[]  # average cost of each epoch, used for the plot below
for epo in range(train_count):
    avg_cost=0
    total_batch=mnist.train.num_examples//batch_size
    for i in range(total_batch):
        batch_x,batch_y=mnist.train.next_batch(batch_size)
        cost_val,_,acc=sess.run([cost,optimiter,accuracy],feed_dict={x:batch_x,y:batch_y})
        avg_cost+=cost_val/total_batch
    ls.append(avg_cost)
    print('epo:',epo,'cost:',avg_cost)
# Accuracy on the test set
acc_v=sess.run(accuracy,feed_dict={x:mnist.test.images,y:mnist.test.labels})
print(acc_v)

# 10. Plot the cost curve
plt.plot(ls)
plt.show()
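
The hand-written backpropagation in step 7 depends on two derivatives: for a softmax cross-entropy cost the gradient with respect to the logits is softmax(logits) minus the one-hot label, and the derivative of tanh is 1 - a^2 where a = tanh(z). The sketch below is a small finite-difference check of both facts in plain Python. It is not part of the original script; the helper names (`softmax`, `cross_entropy`, `analytic_grad`, `numeric_grad`) and the sample values are made up for illustration.

```python
import math

def softmax(logits):
    # Subtract the max for numerical stability
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(logits, one_hot):
    # Softmax cross-entropy for a single sample
    probs = softmax(logits)
    return -sum(t * math.log(p) for t, p in zip(one_hot, probs))

def analytic_grad(logits, one_hot):
    # Claimed gradient w.r.t. the logits: softmax(logits) - labels
    return [p - t for p, t in zip(softmax(logits), one_hot)]

def numeric_grad(logits, one_hot, eps=1e-6):
    # Central finite differences, one logit at a time
    grads = []
    for i in range(len(logits)):
        up = list(logits)
        down = list(logits)
        up[i] += eps
        down[i] -= eps
        diff = cross_entropy(up, one_hot) - cross_entropy(down, one_hot)
        grads.append(diff / (2 * eps))
    return grads

# Hypothetical sample: three classes, true class is index 1
logits = [2.0, -1.0, 0.5]
label = [0.0, 1.0, 0.0]
pairs = zip(analytic_grad(logits, label), numeric_grad(logits, label))
max_gap = max(abs(a - n) for a, n in pairs)

# tanh check: compare 1 - a^2 against a finite-difference slope
z = 0.7
a = math.tanh(z)
tanh_numeric = (math.tanh(z + 1e-6) - math.tanh(z - 1e-6)) / 2e-6
tanh_gap = abs((1 - a * a) - tanh_numeric)
sigmoid_style_gap = abs(a * (1 - a) - tanh_numeric)

print(max_gap, tanh_gap, sigmoid_style_gap)
```

Both `max_gap` and `tanh_gap` should come out close to zero, while `sigmoid_style_gap` stays large, which is why a*(1-a) is not a valid local gradient for a tanh layer.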

2. Processing MNIST with a convolutional neural network (CNN)

Method 1:

# 1. Process the MNIST dataset with a convolutional neural network
# 1. Imports
import tensorflow as tf
import numpy as np
import matplotlib.pyplot as plt
from tensorflow.examples.tutorials.mnist import input_data

# 2. Load the data with one-hot labels
mnist=input_data.read_data_sets('mnist_data',one_hot=True)
x_data=mnist.train.images
y_data=mnist.train.labels

# 3. Hyperparameters: image size
width=28
height=28

# 4. Placeholders, plus a reshape of the flat input into NHWC image tensors
x=tf.placeholder(tf.float32,shape=[None,height*width])
y=tf.placeholder(tf.float32,shape=[None,10])
x_img=tf.reshape(x,[-1,height,width,1])

# 5. First layer: 3x3 convolution (1 -> 16 channels), ReLU, 2x2 max pooling
w1=tf.Variable(tf.random_normal([3,3,1,16]))
l1=tf.nn.conv2d(x_img,w1,strides=[1,1,1,1],padding='SAME')
l1=tf.nn.relu(l1)
l1=tf.nn.max_pool(l1,ksize=[1,2,2,1],strides=[1,2,2,1],padding='VALID')  # 28x28 -> 14x14

# 6. Second layer: 3x3 convolution (16 -> 32 channels), ReLU, 2x2 max pooling
w2=tf.Variable(tf.random_normal([3,3,16,32]))
l2=tf.nn.conv2d(l1,w2,strides=[1,1,1,1],padding='SAME')
l2=tf.nn.relu(l2)
l2=tf.nn.max_pool(l2,ksize=[1,2,2,1],strides=[1,2,2,1],padding='VALID')  # 14x14 -> 7x7
# Flatten the feature maps: dim = 7*7*32
dim=l2.get_shape()[1].value*l2.get_shape()[2].value*l2.get_shape()[3].value
l2_flat=tf.reshape(l2,[-1,dim])

# 7. Fully connected layers (dim -> 100 -> 10); no activation is applied between them
w3=tf.Variable(tf.random_normal([dim,100],stddev=0.01))
b3=tf.Variable(tf.random_normal([100]))
logit1=tf.matmul(l2_flat,w3)+b3
w4=tf.Variable(tf.random_normal([100,10],stddev=0.01))
b4=tf.Variable(tf.random_normal([10]))
logit2=tf.matmul(logit1,w4)+b4

# 8. Cost function, optimizer, and accuracy
cost=tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits=logit2,labels=y))
optimiter=tf.train.AdamOptimizer(learning_rate=0.001).minimize(cost)
y_true=tf.argmax(y,1)
y_predict=tf.argmax(logit2,1)
accuracy=tf.reduce_mean(tf.cast(tf.equal(y_true,y_predict),tf.float32))

# 9. Train the model with mini-batches
sess=tf.Session()
sess.run(tf.global_variables_initializer())

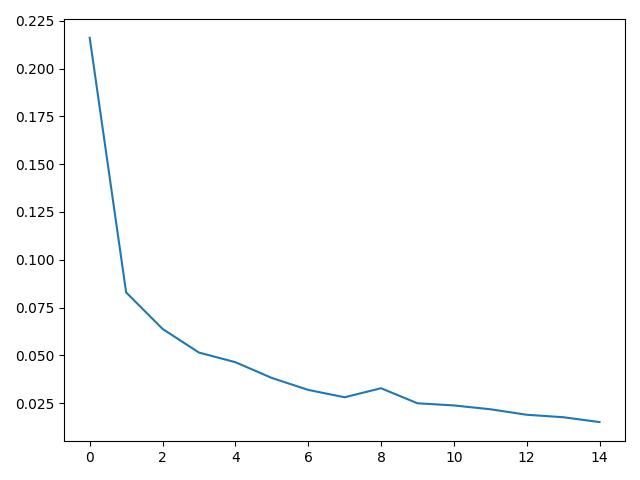

batch_size=100
train_count=15
ls=[]  # average cost of each epoch
for epo in range(train_count):
    avg_cost=0
    total_batch=mnist.train.num_examples//batch_size
    for i in range(total_batch):
        batch_x,batch_y=mnist.train.next_batch(batch_size)
        cost_val,_,acc=sess.run([cost,optimiter,accuracy],feed_dict={x:batch_x,y:batch_y})
        avg_cost+=cost_val/total_batch
    ls.append(avg_cost)
    print('epo:',epo,'cost:',avg_cost)

# 10. Print the test accuracy and plot the cost curve
acc_v=sess.run(accuracy,feed_dict={x:mnist.test.images,y:mnist.test.labels})
print(acc_v)
plt.plot(ls)
plt.show()
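
The value of `dim` in step 6 follows from the padding modes: a stride-1 convolution with 'SAME' padding keeps the spatial size, and a 2x2 max pool with stride 2 and 'VALID' padding halves it. A minimal sketch of that arithmetic in plain Python is shown here; the helper names `conv_same` and `pool_valid` are made up for illustration and are not TensorFlow functions.

```python
def conv_same(size, stride=1):
    # 'SAME' padding: output size is ceil(input / stride)
    return -(-size // stride)

def pool_valid(size, window=2, stride=2):
    # 'VALID' padding: output size is floor((input - window) / stride) + 1
    return (size - window) // stride + 1

size = 28
size = pool_valid(conv_same(size))  # layer 1: 28 -> 28 -> 14
size = pool_valid(conv_same(size))  # layer 2: 14 -> 14 -> 7
channels = 32                       # output channels of the second convolution
dim = size * size * channels
print(size, dim)  # prints: 7 1568
```

So the first fully connected weight matrix `w3` has shape [1568, 100] for this network.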

Method 2:

# 1. Process the MNIST dataset with a convolutional neural network
# 1. Imports
import tensorflow as tf
import numpy as np
import matplotlib.pyplot as plt
from tensorflow.examples.tutorials.mnist import input_data

# 2. Load the data (one_hot is not set, so labels are integer class ids)
mnist=input_data.read_data_sets('mnist_data')
x_data=mnist.train.images
y_data=mnist.train.labels

# 3. Hyperparameters: image size
width=28
height=28

# 4. Placeholders (integer labels), plus a reshape of the input into NHWC image tensors
x=tf.placeholder(tf.float32,shape=[None,height*width])
y=tf.placeholder(tf.int32,shape=[None])
x_img=tf.reshape(x,[-1,height,width,1])

# 5. First layer: 3x3 convolution (1 -> 16 channels), ReLU, 2x2 max pooling
w1=tf.Variable(tf.random_normal([3,3,1,16]))
l1=tf.nn.conv2d(x_img,w1,strides=[1,1,1,1],padding='SAME')
l1=tf.nn.relu(l1)
l1=tf.nn.max_pool(l1,ksize=[1,2,2,1],strides=[1,2,2,1],padding='VALID')  # 28x28 -> 14x14

# 6. Second layer: 3x3 convolution (16 -> 32 channels), ReLU, 2x2 max pooling
w2=tf.Variable(tf.random_normal([3,3,16,32]))
l2=tf.nn.conv2d(l1,w2,strides=[1,1,1,1],padding='SAME')
l2=tf.nn.relu(l2)
l2=tf.nn.max_pool(l2,ksize=[1,2,2,1],strides=[1,2,2,1],padding='VALID')  # 14x14 -> 7x7
# Flatten the feature maps: dim = 7*7*32
dim=l2.get_shape()[1].value*l2.get_shape()[2].value*l2.get_shape()[3].value
l2_flat=tf.reshape(l2,[-1,dim])

# 7. Fully connected layers (dim -> 100 -> 10); no activation is applied between them
w3=tf.Variable(tf.random_normal([dim,100],stddev=0.01))
b3=tf.Variable(tf.random_normal([100]))
logit1=tf.matmul(l2_flat,w3)+b3
w4=tf.Variable(tf.random_normal([100,10],stddev=0.01))
b4=tf.Variable(tf.random_normal([10]))
logit2=tf.matmul(logit1,w4)+b4

# 8. Cost function, optimizer, and accuracy (sparse version for integer labels)
cost=tf.reduce_mean(tf.nn.sparse_softmax_cross_entropy_with_logits(logits=logit2,labels=y))
optimiter=tf.train.AdamOptimizer(learning_rate=0.001).minimize(cost)
# True where the label is among the k largest predictions for that sample (k=1 here)
correct=tf.nn.in_top_k(logit2,y,1)
accuracy=tf.reduce_mean(tf.cast(correct,tf.float32))
# The arg-max version below only applies to one-hot labels
# y_true=tf.argmax(y,1)
# y_predict=tf.argmax(logit2,1)
# accuracy=tf.reduce_mean(tf.cast(tf.equal(y_true,y_predict),tf.float32))

# 9. Train the model with mini-batches
sess=tf.Session()
sess.run(tf.global_variables_initializer())
batch_size=100
train_count=15
ls=[]  # average cost of each epoch
for epo in range(train_count):
    avg_cost=0
    total_batch=mnist.train.num_examples//batch_size
    for i in range(total_batch):
        batch_x,batch_y=mnist.train.next_batch(batch_size)
        cost_val,_,acc=sess.run([cost,optimiter,accuracy],feed_dict={x:batch_x,y:batch_y})
        avg_cost+=cost_val/total_batch
    ls.append(avg_cost)
    print('epo:',epo,'cost:',avg_cost)

# 10. Print the test accuracy and plot the cost curve
acc_v=sess.run(accuracy,feed_dict={x:mnist.test.images,y:mnist.test.labels})
print(acc_v)
plt.plot(ls)
plt.show()
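
Method 2 differs from Method 1 only in how labels are represented: integer class ids with the sparse cross-entropy and `in_top_k`, instead of one-hot vectors with the dense cross-entropy and `argmax`. The sketch below illustrates, for a single sample in plain Python, why the two give the same loss and the same notion of a correct prediction. The helper names and the sample logits are hypothetical, and the functions only mimic the idea behind the TensorFlow ops rather than reproduce their implementation.

```python
import math

def log_softmax(logits):
    # Numerically stable log-softmax for one sample
    m = max(logits)
    log_total = m + math.log(sum(math.exp(v - m) for v in logits))
    return [v - log_total for v in logits]

def one_hot_loss(logits, one_hot):
    # Dense form: minus the sum of label * log-probability
    return -sum(t * lp for t, lp in zip(one_hot, log_softmax(logits)))

def sparse_loss(logits, class_id):
    # Sparse form: pick the log-probability of the true class directly
    return -log_softmax(logits)[class_id]

def in_top_1(logits, class_id):
    # k=1: the true class must have the largest score (ties count as hits)
    return logits[class_id] == max(logits)

# Hypothetical sample with three classes, true class id 1
logits = [0.2, 3.1, -1.0]
class_id = 1
one_hot = [1.0 if i == class_id else 0.0 for i in range(len(logits))]

loss_gap = abs(one_hot_loss(logits, one_hot) - sparse_loss(logits, class_id))
hits = [in_top_1(logits, 1), in_top_1(logits, 0)]
print(loss_gap, hits)
```

The sparse form avoids building one-hot vectors, which is why Method 2 loads the data without `one_hot=True` and declares `y` as an int32 placeholder of shape [None].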

3. Processing MNIST with a recurrent neural network (RNN)

Method 1:

# 2. Process the MNIST dataset with a recurrent neural network
# 1. Imports
import tensorflow as tf
from tensorflow.contrib.layers import fully_connected
from tensorflow.contrib.seq2seq import sequence_loss
from tensorflow.examples.tutorials.mnist import input_data
import matplotlib.pyplot as plt

# 2. Load the data (labels are integer class ids)
mnist=input_data.read_data_sets('mnist_data')
x_data=mnist.train.images
y_data=mnist.train.labels

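# 3. Hyperparameters
n_input=28  # features per time step (one image row)
steps=28  # number of time steps (rows per image)
hidden_size=10  # units in each recurrent cell
output_layer=10  # number of output classes

# 4. Placeholders: each image is fed as a sequence of 28 rows
x=tf.placeholder(tf.float32,shape=[None,steps,n_input])
y=tf.placeholder(tf.int32,shape=[None])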

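# 5. Define the recurrent cells (single-cell alternatives are left commented out)
# cell=tf.nn.rnn_cell.BasicRNNCell(num_units=hidden_size)
# cell=tf.nn.rnn_cell.BasicLSTMCell(num_units=hidden_size)
n_layers=2  # assumed value: the original code uses n_layers without defining it
lstm_cell=[tf.nn.rnn_cell.LSTMCell(num_units=hidden_size) for layer in range(n_layers)]  # hidden_size=10 units per layer
multi_cell=tf.nn.rnn_cell.MultiRNNCell(lstm_cell)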

# 6. Build the dynamic recurrent network
outputs,states=tf.nn.dynamic_rnn(multi_cell,x,dtype=tf.float32)
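
Because the placeholder `x` has shape [None, steps, n_input], each flat 784-value MNIST image has to be regrouped into 28 rows of 28 pixels before it is fed to the recurrent network. The sketch below shows that regrouping for one image in plain Python; `to_sequence` is a hypothetical helper, not part of the original script. With NumPy batches the same effect is usually obtained with `batch_x.reshape(-1, steps, n_input)`.

```python
def to_sequence(flat_image, steps=28, n_input=28):
    # Split a flat image into `steps` rows of `n_input` values each
    if len(flat_image) != steps * n_input:
        raise ValueError('expected %d values, got %d' % (steps * n_input, len(flat_image)))
    return [flat_image[t * n_input:(t + 1) * n_input] for t in range(steps)]

# Stand-in for one flattened image: the pixel values are just their indices
flat = list(range(784))
seq = to_sequence(flat)
print(len(seq), len(seq[0]), seq[1][0], seq[27][27])  # prints: 28 28 28 783
```

Each row then plays the role of one time step, so the network reads an image from top to bottom.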