Flink 系例 之 Connectors 读写 csv 文件

Posted 不会飞的小龙人

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了Flink 系例 之 Connectors 读写 csv 文件相关的知识,希望对你有一定的参考价值。

通过使用 Flink DataSet Connectors 数据流连接器打开 csv 文件,并提供数据流输入与输出操作;

示例环境

java.version: 1.8.x

flink.version: 1.11.1示例数据源 (项目码云下载)

示例模块 (pom.xml)

Flink 系例 之 DataStream Connectors 与 示例模块

数据流输入

CsvSource.java

package com.flink.examples.file;

import org.apache.flink.api.java.DataSet;

import org.apache.flink.api.java.ExecutionEnvironment;

import org.apache.flink.api.java.tuple.Tuple7;

import java.util.HashMap;

import java.util.Map;

/**

* @Description 从csv文件中读取内容输出到DataSet中

*/publicclassCsvSource

public static void main(String[] args) throws Exception

ExecutionEnvironment env = ExecutionEnvironment.getExecutionEnvironment();

String filePath = "D:\\\\Workspaces\\\\idea_2\\\\flink-examples\\\\connectors\\\\src\\\\main\\\\resources\\\\user.csv";

DataSet<Tuple7<Integer, String, Integer, Integer, String, String, Long>> dataSet = env

.readCsvFile(filePath)

.fieldDelimiter(",")

.types(Integer.class, String.class, Integer.class, Integer.class, String.class, String.class, Long.class);//打印

dataSet.print();

数据流输出

CsvSink.java

package com.flink.examples.file;

import org.apache.flink.api.java.DataSet;

import org.apache.flink.api.java.ExecutionEnvironment;

import org.apache.flink.api.java.tuple.Tuple7;

import org.apache.flink.core.fs.FileSystem;

/**

* @Description 将DataSet数据写入到csv文件中

*/publicclass CsvSink

publicstaticvoid main(String[] args) throws Exception

ExecutionEnvironment env = ExecutionEnvironment.getExecutionEnvironment();

//需先建立文件String filePath = "D:\\\\Workspaces\\\\idea_2\\\\flink-examples\\\\connectors\\\\src\\\\main\\\\resources\\\\user.csv";

//添加数据

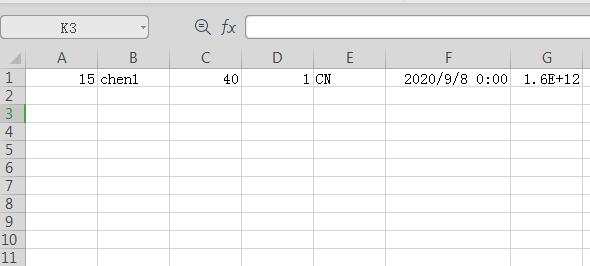

Tuple7<Integer, String, Integer, Integer, String, String, Long> row = new Tuple7<>(15, "chen1", 40, 1, "CN", "2020-09-08 00:00:00", 1599494400000L);

//转换为dataSet

DataSet<Tuple7<Integer, String, Integer, Integer, String, String, Long>> dataSet = env.fromElements(row);

//将内容写入到File中,如果文件已存在,将会被复盖

dataSet.writeAsCsv(filePath, FileSystem.WriteMode.OVERWRITE).setParallelism(1);

env.execute("fline file sink");

数据展示

以上是关于Flink 系例 之 Connectors 读写 csv 文件的主要内容,如果未能解决你的问题,请参考以下文章