Spark 重分区函数:coalesce和repartition区别与实现,可以优化Spark程序性能

Posted javartisan

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了Spark 重分区函数:coalesce和repartition区别与实现,可以优化Spark程序性能相关的知识,希望对你有一定的参考价值。

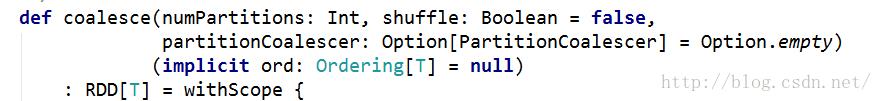

源码包路径: org.apache.spark.rdd.RDD

coalesce函数:

方法注释:

Return a new RDDthat is reduced into numPartitions partitions. This results in a narrowdependency, e.g. if you go from 1000 partitions to 100 partitions, there willnot be a shuffle, instead each of the 100 new partitions will claim 10 of thecurrent partitions. However, if you're doing a drastic(激烈的,猛烈的)coalesce, e.g. to numPartitions = 1, this may result in your computation takingplace on fewer nodes than you like (e.g. one node in the case of numPartitions= 1). To avoid this, you can pass shuffle = true. This will add a shuffle step,but means the current upstream partitions will be executed in parallel (perwhatever the current partitioning is). Note: With shuffle = true, you canactually coalesce to a larger number of partitions. This is useful if you have asmall number of partitions, say 100, potentially with a few partitions beingabnormally large. Calling coalesce(1000, shuffle = true) will result in 1000partitions with the data distributed using a hash partitioner.

译文:

返回一个经过简化到numPartitions个分区的新RDD。这会导致一个窄依赖,例如:你将1000个分区转换成100个分区,这个过程不会发生shuffle,相反如果10个分区转换成100个分区将会发生shuffle。然而如果你想大幅度合并分区,例如合并成一个分区,这会导致你的计算在少数几个集群节点上计算(言外之意:并行度不够)。为了避免这种情况,你可以将第二个shuffle参数传递一个true,这样会在重新分区过程中多一步shuffle,这意味着上游的分区可以并行运行。

注意:第二个参数shuffle=true,将会产生多于之前的分区数目,例如你有一个个数较少的分区,假如是100,调用coalesce(1000, shuffle = true)将会使用一个 HashPartitioner产生1000个分区分布在集群节点上。这个(对于提高并行度)是非常有用的。

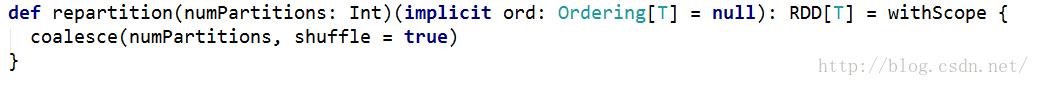

repartition函数:

Return a new RDDthat has exactly numPartitions partitions. Can increase or decrease the levelof parallelism in this RDD. Internally, this uses a shuffle to redistributedata. If you are decreasing the number of partitions in this RDD, considerusing coalesce, which can avoid performing a shuffle.

译文:

返回一个恰好有numPartitions个分区的RDD,可以增加或者减少此RDD的并行度。内部,这将使用shuffle重新分布数据,如果你减少分区数,考虑使用coalesce,这样可以避免执行shuffle

以上是关于Spark 重分区函数:coalesce和repartition区别与实现,可以优化Spark程序性能的主要内容,如果未能解决你的问题,请参考以下文章