iOS: A Wrapper for Baidu Long Speech Recognition: Recognition, Playback, and Progress Updates

Posted by 程序猿


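Preface: This article was compiled by the editors of 小常识网 (cha138.com). It covers a wrapper for Baidu long speech recognition on iOS, including recognition, playback, and progress updates, and we hope it is useful to you.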

1. Introduction

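I have used iFlytek speech recognition before. It is simple and its accuracy is very good, but a recognition session is limited to at most 60 seconds. Some features, such as reciting classical poems, need longer sessions. iFlytek can be made to work through a roundabout approach: each time the 60-second limit is reached, stop recognition, immediately start a new session, and stitch the recordings together after each session ends. That is clearly cumbersome. Baidu Speech solves the problem with its recently launched long speech recognition, which has no time limit and works very well. This time I wrapped everything into a single utility class covering speech recognition, recording stitching, recording playback, and progress updates. For how to integrate the SDK, see the official documentation; I won't repeat it here and will go straight to how to use the utility class.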
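The stitching step of that 60-second workaround is simple if each session is saved as raw PCM, because raw PCM has no header: appending the files byte by byte yields one continuous recording. Below is a minimal sketch in plain C (which also compiles inside an Objective-C file), assuming every segment shares the same sample rate, channel count and sample format; `concat_pcm_segments` is a hypothetical helper, not part of either SDK.

```c
#include <stdio.h>

/* Appends each raw PCM segment file, in order, to out_path.
   Raw PCM has no header, so plain byte concatenation produces one
   continuous recording (assuming all segments share one format).
   Returns the total number of bytes written, or -1 on any error. */
long concat_pcm_segments(const char *out_path, const char *const *segments, size_t count) {
    FILE *out = fopen(out_path, "wb");
    if (!out) return -1;

    unsigned char buf[4096];
    long total = 0;
    for (size_t i = 0; i < count; i++) {
        FILE *in = fopen(segments[i], "rb");
        if (!in) { fclose(out); return -1; }
        size_t n;
        while ((n = fread(buf, 1, sizeof buf, in)) > 0) {
            if (fwrite(buf, 1, n, out) != n) { fclose(in); fclose(out); return -1; }
            total += (long)n;
        }
        fclose(in);
    }
    fclose(out);
    return total;
}
```

With Baidu's long speech mode a single session covers the whole recitation, so this step is only needed for the segmented workaround.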

2. Dependencies

The recording is saved in PCM format, so I use the LAME static library to convert it to MP3.
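When converting, the sample rate given to the encoder has to match the rate the PCM was actually recorded at, because raw PCM carries no header to say what it is. A small C sketch of the size/duration arithmetic, assuming headerless 16-bit samples; `pcm_duration_seconds` is a hypothetical name.

```c
#include <stdint.h>

/* Playback duration, in seconds, of headerless 16-bit PCM.
   Each second occupies sample_rate * channels * 2 bytes. */
double pcm_duration_seconds(uint64_t byte_count, unsigned sample_rate, unsigned channels) {
    if (sample_rate == 0 || channels == 0) return 0.0;
    const double bytes_per_second = (double)sample_rate * channels * 2.0;
    return (double)byte_count / bytes_per_second;
}
```

For example, 32000 bytes of mono audio last 1 s at 16000 Hz but 2 s at 8000 Hz. If the file actually holds 16 kHz audio while the encoder is told 8000 Hz (the value used in SJVoiceTransform.m), the MP3 would play at half speed, so it is worth checking the SDK's recording rate before relying on that setting.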

Where to download it is covered in detail on my blog: http://www.cnblogs.com/XYQ-208910/p/7650759.html

3. Code

SJVoiceTransform.h

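    #import <Foundation/Foundation.h>

    @interface SJVoiceTransform : NSObject

    /**
     *  Converts the PCM file at the given path to MP3.
     *
     *  @param docPath  path of the PCM file (in the Documents directory)
     *  @return path of the generated MP3 (docPath + ".mp3"), or nil on failure
     */
    +(NSString *)stransformToMp3ByUrlWithUrl:(NSString *)docPath;

    @end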

SJVoiceTransform.m

    #import "SJVoiceTransform.h"
    #import "lame.h"

    @implementation SJVoiceTransform

    +(NSString *)stransformToMp3ByUrlWithUrl:(NSString *)docPath
    {
        if (!docPath) {
            return nil;
        }
        NSString *pcmFilePath = docPath;                                   // recorded PCM audio
        NSString *mp3FilePath = [docPath stringByAppendingString:@".mp3"]; // where the MP3 is written

        FILE *pcm = fopen([pcmFilePath fileSystemRepresentation], "rb");   // source: file to convert
        if (!pcm) {
            return nil;                                                    // the audio must exist
        }
        FILE *mp3 = fopen([mp3FilePath fileSystemRepresentation], "wb");   // output: generated MP3
        if (!mp3) {
            fclose(pcm);
            return nil;
        }
        fseek(pcm, 4*1024, SEEK_CUR);   // skip the first 4 KB, treated as a file header

        const int PCM_SIZE = 8192;
        const int MP3_SIZE = 8192;
        short int pcm_buffer[PCM_SIZE*2];
        unsigned char mp3_buffer[MP3_SIZE];

        lame_t lame = lame_init();
        lame_set_num_channels(lame, 1);
        lame_set_in_samplerate(lame, 8000);  // must match the recording's rate (11025 is noted as an alternative)
        //lame_set_VBR(lame, vbr_default);
        lame_set_brate(lame, 8);             // bitrate in kbps
        lame_set_mode(lame, MONO);           // MONO == 3 in lame.h's MPEG_mode enum
        lame_set_quality(lame, 2);           // 0 = best/slowest ... 9 = worst/fastest
        lame_init_params(lame);

        size_t read;
        int write;
        do {
            read = fread(pcm_buffer, 2*sizeof(short int), PCM_SIZE, pcm);
            if (read == 0)
                write = lame_encode_flush(lame, mp3_buffer, MP3_SIZE);
            else
                write = lame_encode_buffer_interleaved(lame, pcm_buffer, (int)read, mp3_buffer, MP3_SIZE);
            if (write > 0)
                fwrite(mp3_buffer, write, 1, mp3);   // negative values are LAME error codes
        } while (read != 0);

        lame_close(lame);
        fclose(mp3);
        fclose(pcm);
        NSLog(@"MP3 generated: %@", mp3FilePath);
        return mp3FilePath;
    }

    @end
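The encoder loop reads the PCM in fixed-size chunks after skipping the first 4 KB. The C sketch below walks a file the same way, assuming headerless 16-bit mono samples and using one sample as the `fread` element size; `count_pcm_samples` is a hypothetical helper. It makes the cost of the skip visible: 4096 bytes are 2048 samples, or 256 ms at 8000 Hz. Since BDHelper.m writes only the SDK's record data to the file, the skip may be discarding real audio rather than a header, which is worth checking against your own recordings.

```c
#include <stdio.h>

/* Counts the 16-bit mono samples in a raw PCM file after skipping
   skip_bytes from the start, reading in fixed-size chunks like the
   encoder loop. Returns -1 if the file can't be opened or seeked. */
long count_pcm_samples(const char *path, long skip_bytes) {
    FILE *pcm = fopen(path, "rb");
    if (!pcm) return -1;
    if (skip_bytes > 0 && fseek(pcm, skip_bytes, SEEK_SET) != 0) {
        fclose(pcm);
        return -1;
    }
    short buffer[8192];          /* one element = one mono sample */
    long total = 0;
    size_t n;
    while ((n = fread(buffer, sizeof(short), 8192, pcm)) > 0) {
        total += (long)n;        /* the last chunk may be shorter */
    }
    fclose(pcm);
    return total;
}
```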

BDHelper.h

    //
    //  BDHelper.h
    //  BDRecognizer
    //
    //  Created by 夏远全 on 2017/11/14.
    //  Copyright © 2017 夏远全. All rights reserved.
    //

    #import <Foundation/Foundation.h>
    #import <UIKit/UIKit.h>
    #import <AudioToolbox/AudioToolbox.h>
    #import <AVFoundation/AVFoundation.h>

    @protocol BDHelperDelegate <NSObject>
    @optional
    -(void)recognitionPartialResult:(NSString *)recognitionResult;   // partial result
    -(void)recognitionFinalResult:(NSString *)recognitionResult;     // final result
    -(void)recognitionError:(NSError *)error;                        // recognition error
    -(void)updateProgress:(CGFloat)progress duration:(int)duration;  // playback progress update
    -(void)updateReadingTime:(int)readingTime;                       // reading-time update
    -(void)recognitionRecordFinishedPlay;                            // playback of the recognized recording finished
    @end

    @interface BDHelper : NSObject

    /** Delegate */
    @property (nonatomic, weak) id<BDHelperDelegate> delegate;

    /** Player */
    @property (nonatomic, strong) AVAudioPlayer *audioPlayer;

    /** File path */
    @property (nonatomic, copy) NSString *audioFilePath;

    /**
     Creates an instance.
     @param voiceFileName name of the recording file
     @return the instance
     */
    +(BDHelper *)sharedBDHelperWithVoiceFileName:(NSString *)voiceFileName;

    /** Starts speech recognition */
    - (void)startLongSpeechRecognition;

    /** Stops speech recognition */
    - (void)endLongSpeechRecognition;

    /** Plays the recognized recording */
    -(void)playListenningRecognition;

    /** Pauses playback */
    -(void)pauseListenningRecognition;

    /**
     Destroys the player.
     @param isNeedDeleteFilePath whether to also delete the cached audio file
     */
    -(void)didRemoveAudioPlayer:(BOOL)isNeedDeleteFilePath;

    /** Starts the timer that accumulates reading time */
    - (void)beginStatisticsReadingTime;

    /** Destroys the timer */
    - (void)endStatisticsReadingTime;

    @end
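The `updateProgress:duration:` callback receives the played fraction (`currentTime/duration`) and the duration rounded to whole seconds, as computed in `updateProgressValue` in BDHelper.m. The same arithmetic as a small C sketch, with a guard the original does not have: if `duration` were ever 0, the division would give NaN or infinity. `playback_progress` and `rounded_duration` are hypothetical names, and the `+ 0.5` rounding assumes a non-negative duration.

```c
/* Fraction of the recording played so far, clamped to [0, 1].
   A non-positive duration yields 0 instead of NaN or infinity. */
double playback_progress(double current_time, double duration) {
    if (duration <= 0.0) return 0.0;
    double p = current_time / duration;
    if (p < 0.0) return 0.0;
    if (p > 1.0) return 1.0;
    return p;
}

/* Duration in whole seconds, rounded half up (assumes duration >= 0). */
int rounded_duration(double duration) {
    return (int)(duration + 0.5);
}
```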

BDHelper.m

    //
    //  BDHelper.m
    //  BDRecognizer
    //
    //  Created by 夏远全 on 2017/11/14.
    //  Copyright © 2017 夏远全. All rights reserved.
    //

    #import "BDHelper.h"
    #import "SJVoiceTransform.h"

    #if !TARGET_IPHONE_SIMULATOR
    #import <fcntl.h>   // open() and the O_* flags
    #import "BDSEventManager.h"
    #import "BDSASRDefines.h"
    #import "BDSASRParameters.h"

    /// Create an app on the Baidu console, configure its bundle ID, and fill in the app's API key, secret key and App ID (app code) here.
    static NSString* const API_KEY    = @"BxLweqmGUxxxxxxxxxxxxxx";
    static NSString* const SECRET_KEY = @"rhUIXG4gXmxxxxxxxxxxxxxx";
    static NSString* const APP_ID     = @"81xxxxx";

    @interface BDHelper()<BDSClientASRDelegate, AVAudioPlayerDelegate>
    @property (nonatomic, strong) BDSEventManager *asrEventManager;
    @property (nonatomic, strong) CADisplayLink *progressLink;
    @property (nonatomic, strong) NSTimer *readingTimer;
    @property (nonatomic, strong) NSMutableData *mutabelData;
    @property (nonatomic, strong) NSFileHandle *fileHandler;
    @property (nonatomic, copy) NSString *voiceFileName;
    @end
    #endif

    @implementation BDHelper

    #if !TARGET_IPHONE_SIMULATOR

    +(BDHelper *)sharedBDHelperWithVoiceFileName:(NSString *)voiceFileName{
        BDHelper *helper = [[self alloc] init];
        helper.voiceFileName = voiceFileName;
        [helper setupDefalutValue];
        return helper;
    }

    -(void)setupDefalutValue{
        self.asrEventManager = [BDSEventManager createEventManagerWithName:BDS_ASR_NAME];
        [self configVoiceRecognitionClient];
        NSLog(@"current sdk version: %@", [self.asrEventManager libver]);
    }

    #pragma mark - public: Method

    - (void)startLongSpeechRecognition{
        // Remove the player
        [self didRemoveAudioPlayer:NO];
        // Set up the recording path
        if (!_audioFilePath) {
            [self pcmFilePathConfig];
        }
        // Start the recognition service
        [self beginStatisticsReadingTime];
        [self.asrEventManager sendCommand:BDS_ASR_CMD_START];
    }

    - (void)endLongSpeechRecognition{
        // Stop the recognition service and flush the buffered PCM to the file
        [self endStatisticsReadingTime];
        [self.asrEventManager sendCommand:BDS_ASR_CMD_STOP];
        [self.fileHandler writeData:self.mutabelData];
        self.mutabelData = nil;
    }

    -(void)playListenningRecognition{
        // Ignore repeated taps
        if (_audioPlayer && _audioPlayer.isPlaying) {
            return;
        }
        // Resume a paused player
        if (_audioPlayer && !_audioPlayer.isPlaying) {
            [_audioPlayer play];
            _progressLink.paused = NO;
            return;
        }
        // Play the recognized audio (convert PCM to MP3 first)
        NSString *mp3Path = [SJVoiceTransform stransformToMp3ByUrlWithUrl:_audioFilePath];
        if (!mp3Path) {
            return;
        }
        // Create the player
        _audioPlayer = [[AVAudioPlayer alloc] initWithContentsOfURL:[NSURL fileURLWithPath:mp3Path] error:NULL];
        if (!_audioPlayer) {
            return;
        }
        _audioPlayer.volume = 1;
        _audioPlayer.delegate = self;
        [[AVAudioSession sharedInstance] setCategory:AVAudioSessionCategoryPlayback error:nil];
        [[AVAudioSession sharedInstance] setActive:YES error:nil];
        [_audioPlayer prepareToPlay];
        [_audioPlayer play];
        _progressLink = [CADisplayLink displayLinkWithTarget:self selector:@selector(updateProgressValue)];
        [_progressLink addToRunLoop:[NSRunLoop currentRunLoop] forMode:NSRunLoopCommonModes];
    }

    -(void)pauseListenningRecognition{
        // Pause playback
        if (_audioPlayer && _audioPlayer.isPlaying) {
            [_audioPlayer pause];
            _progressLink.paused = YES;
        }
    }

    #pragma mark - event

    -(void)updateProgressValue{
        // Report playback progress
        int duration = round(_audioPlayer.duration);
        if (self.delegate && [self.delegate respondsToSelector:@selector(updateProgress:duration:)]) {
            [self.delegate updateProgress:_audioPlayer.currentTime/_audioPlayer.duration duration:duration];
        }
    }

    -(void)startReadingTimer{
        // Accumulate reading time: one tick per second
        if (self.delegate && [self.delegate respondsToSelector:@selector(updateReadingTime:)]) {
            [self.delegate updateReadingTime:1];
        }
    }

    #pragma mark - Private: Configuration

    - (void)configVoiceRecognitionClient {
        // ---- Debug log level
        [self.asrEventManager setParameter:@(EVRDebugLogLevelTrace) forKey:BDS_ASR_DEBUG_LOG_LEVEL];
        // ---- API_KEY, SECRET_KEY and APP_ID
        [self.asrEventManager setParameter:@[API_KEY, SECRET_KEY] forKey:BDS_ASR_API_SECRET_KEYS];
        [self.asrEventManager setParameter:APP_ID forKey:BDS_ASR_OFFLINE_APP_CODE];
        // ---- Endpoint detection (choose one of the two)
        [self configModelVAD];
        //[self configDNNMFE];
        // ---- Semantics and punctuation -----
        [self enableNLU];
        [self enablePunctuation];
        // ---- Long speech: local VAD must be enabled -----
        [self.asrEventManager setParameter:@(YES) forKey:BDS_ASR_ENABLE_LONG_SPEECH];
        [self.asrEventManager setParameter:@(YES) forKey:BDS_ASR_ENABLE_LOCAL_VAD];
        // ---- Recording file path -----
        [self pcmFilePathConfig];
        // ---- Delegate; no audio file path or input stream is set -----
        [self.asrEventManager setDelegate:self];
        [self.asrEventManager setParameter:nil forKey:BDS_ASR_AUDIO_FILE_PATH];
        [self.asrEventManager setParameter:nil forKey:BDS_ASR_AUDIO_INPUT_STREAM];
    }

    - (void)pcmFilePathConfig{
        [self configFileHandler:self.voiceFileName];
        _audioFilePath = [self getFilePath:self.voiceFileName];
    }

    - (void)enableNLU {
        // ---- Enable semantic understanding (NLU) -----
        [self.asrEventManager setParameter:@(YES) forKey:BDS_ASR_ENABLE_NLU];
        [self.asrEventManager setParameter:@"15361" forKey:BDS_ASR_PRODUCT_ID];
    }

    - (void)enablePunctuation {
        // ---- Enable punctuation output -----
        [self.asrEventManager setParameter:@(NO) forKey:BDS_ASR_DISABLE_PUNCTUATION];
        // ---- Mandarin punctuation; runs after enableNLU, so this value replaces the PRODUCT_ID set there -----
        [self.asrEventManager setParameter:@"1537" forKey:BDS_ASR_PRODUCT_ID];
    }

    - (void)configModelVAD {
        NSString *modelVAD_filepath = [[NSBundle mainBundle] pathForResource:@"bds_easr_basic_model" ofType:@"dat"];
        [self.asrEventManager setParameter:modelVAD_filepath forKey:BDS_ASR_MODEL_VAD_DAT_FILE];
        [self.asrEventManager setParameter:@(YES) forKey:BDS_ASR_ENABLE_MODEL_VAD];
    }

    - (void)configDNNMFE {
        NSString *mfe_dnn_filepath = [[NSBundle mainBundle] pathForResource:@"bds_easr_mfe_dnn" ofType:@"dat"];
        NSString *cmvn_dnn_filepath = [[NSBundle mainBundle] pathForResource:@"bds_easr_mfe_cmvn" ofType:@"dat"];
        [self.asrEventManager setParameter:mfe_dnn_filepath forKey:BDS_ASR_MFE_DNN_DAT_FILE];
        [self.asrEventManager setParameter:cmvn_dnn_filepath forKey:BDS_ASR_MFE_CMVN_DAT_FILE];
        // Custom silence duration (unit: 10 ms frames)
        //[self.asrEventManager setParameter:@(500) forKey:BDS_ASR_MFE_MAX_SPEECH_PAUSE];
        //[self.asrEventManager setParameter:@(501) forKey:BDS_ASR_MFE_MAX_WAIT_DURATION];
    }

    #pragma mark - BDSClientASRDelegate

    - (void)VoiceRecognitionClientWorkStatus:(int)workStatus obj:(id)aObj {
        switch (workStatus) {
            case EVoiceRecognitionClientWorkStatusNewRecordData: {
                /// Recorded audio, NSData: raw audio data; buffered here so the recording can be saved
                NSData *originData = (NSData *)aObj;
                [self.mutabelData appendData:originData];
                break;
            }
            case EVoiceRecognitionClientWorkStatusStartWorkIng: {
                /// Recognition started: capturing and processing data
                NSDictionary *logDic = [self parseLogToDic:aObj];
                NSLog(@"CALLBACK: start vr, log: %@\n", logDic);
                break;
            }
            case EVoiceRecognitionClientWorkStatusStart: {
                /// The user started speaking
                NSLog(@"CALLBACK: detect voice start point.\n");
                break;
            }
            case EVoiceRecognitionClientWorkStatusEnd: {
                /// Local audio capture ended
                NSLog(@"CALLBACK: detect voice end point.\n");
                break;
            }
            case EVoiceRecognitionClientWorkStatusFlushData: {
                /// Streaming display, NSDictionary: partial result
                NSString *result = [self getDescriptionForDic:aObj];
                NSLog(@"CALLBACK: partial result -%@.\n\n", result);
                NSString *recognitionResult = [aObj[@"results_recognition"] firstObject];
                if (self.delegate && [self.delegate respondsToSelector:@selector(recognitionPartialResult:)]) {
                    [self.delegate recognitionPartialResult:recognitionResult];
                }
                break;
            }
            case EVoiceRecognitionClientWorkStatusFinish: {
                /// Recognition finished and the server returned a result, NSDictionary: final result
                NSString *result = [self getDescriptionForDic:aObj];
                NSLog(@"CALLBACK: final result - %@.\n\n", result);
                NSString *recognitionResult = [aObj[@"results_recognition"] firstObject];
                if (self.delegate && [self.delegate respondsToSelector:@selector(recognitionFinalResult:)]) {
                    [self.delegate recognitionFinalResult:recognitionResult];
                }
                break;
            }
            case EVoiceRecognitionClientWorkStatusMeterLevel: {
                /// Current volume, NSNumber (int)
                NSLog(@"-------voice volume:%d-------", [aObj intValue]);
                break;
            }
            case EVoiceRecognitionClientWorkStatusCancel: {
                /// Cancelled by the user
                NSLog(@"CALLBACK: user press cancel.\n");
                break;
            }
            case EVoiceRecognitionClientWorkStatusError: {
                /// An error occurred, NSError: error details
                NSLog(@"CALLBACK: encountered error - %@.\n", (NSError *)aObj);
                if (self.delegate && [self.delegate respondsToSelector:@selector(recognitionError:)]) {
                    [self.delegate recognitionError:(NSError *)aObj];
                }
                break;
            }
            case EVoiceRecognitionClientWorkStatusLoaded: {
                /// Offline engine loaded
                NSLog(@"CALLBACK: offline engine loaded.\n");
                break;
            }
            case EVoiceRecognitionClientWorkStatusUnLoaded: {
                /// Offline engine unloaded
                NSLog(@"CALLBACK: offline engine unLoaded.\n");
                break;
            }
            case EVoiceRecognitionClientWorkStatusChunkThirdData: {
                /// CHUNK: third-party data in the result, NSData
                NSLog(@"CALLBACK: Chunk 3-party data length: %lu\n", (unsigned long)[(NSData *)aObj length]);
                break;
            }
            case EVoiceRecognitionClientWorkStatusChunkNlu: {
                /// CHUNK: semantic (NLU) result, NSData
                NSString *nlu = [[NSString alloc] initWithData:(NSData *)aObj encoding:NSUTF8StringEncoding];
                NSLog(@"CALLBACK: Chunk NLU data: %@\n", nlu);
                break;
            }
            case EVoiceRecognitionClientWorkStatusChunkEnd: {
                /// CHUNK: recognition finished, NSString
                NSLog(@"CALLBACK: Chunk end, sn: %@.\n", aObj);
                break;
            }
            case EVoiceRecognitionClientWorkStatusFeedback: {
                /// Feedback: tracking data reported during recognition, NSString
                NSDictionary *logDic = [self parseLogToDic:aObj];
                NSLog(@"CALLBACK Feedback: %@\n", logDic);
                break;
            }
            case EVoiceRecognitionClientWorkStatusRecorderEnd: {
                /// Recorder closed; page transitions should wait for this event to avoid the status-bar issue (iOS)
                NSLog(@"CALLBACK: recorder closed.\n");
                break;
            }
            case EVoiceRecognitionClientWorkStatusLongSpeechEnd: {
                /// Long speech ended
                NSLog(@"CALLBACK: Long Speech end.\n");
                [self endLongSpeechRecognition];
                break;
            }
            default:
                break;
        }
    }

    #pragma mark - AVAudioPlayerDelegate

    -(void)audioPlayerDidFinishPlaying:(AVAudioPlayer *)player successfully:(BOOL)flag{
        if (flag) {
            if (self.delegate && [self.delegate respondsToSelector:@selector(recognitionRecordFinishedPlay)]) {
                [self.delegate recognitionRecordFinishedPlay];
            }
        }
    }

    #pragma mark - public: Method

    -(void)didRemoveAudioPlayer:(BOOL)isNeedDeleteFilePath{
        [_audioPlayer stop];
        [_progressLink invalidate];
        _audioPlayer = nil;
        _progressLink = nil;
        if (isNeedDeleteFilePath) {
            [[NSFileManager defaultManager] removeItemAtPath:_audioFilePath error:nil];
            _audioFilePath = nil;
        }
    }

    - (void)beginStatisticsReadingTime{
        [self.readingTimer fire];
    }

    - (void)endStatisticsReadingTime{
        // Use the ivar so the lazy getter does not create a new timer just to invalidate it
        if (_readingTimer.isValid) {
            [_readingTimer invalidate];
        }
        _readingTimer = nil;
    }

    #pragma mark - private: Method

    - (NSDictionary *)parseLogToDic:(NSString *)logString {
        NSMutableDictionary *logDic = [[NSMutableDictionary alloc] initWithCapacity:3];
        NSArray *items = [logString componentsSeparatedByString:@"&"];
        for (NSString *item in items) {
            NSArray *tmp = [item componentsSeparatedByString:@"="];
            if (tmp.count == 2) {
                [logDic setObject:tmp.lastObject forKey:tmp.firstObject];
            }
        }
        return logDic;
    }

    - (NSString *)getDescriptionForDic:(NSDictionary *)dic {
        if (dic) {
            return [[NSString alloc] initWithData:[NSJSONSerialization dataWithJSONObject:dic options:NSJSONWritingPrettyPrinted error:nil]
                                         encoding:NSUTF8StringEncoding];
        }
        return nil;
    }

    #pragma mark - Private: File

    - (NSString *)getFilePath:(NSString *)fileName {
        NSArray *paths = NSSearchPathForDirectoriesInDomains(NSDocumentDirectory, NSUserDomainMask, YES);
        if (paths && [paths count]) {
            return [[paths objectAtIndex:0] stringByAppendingPathComponent:fileName];
        } else {
            return nil;
        }
    }

    - (void)configFileHandler:(NSString *)fileName {
        self.fileHandler = [self createFileHandleWithName:fileName isAppend:NO];
    }

    - (NSFileHandle *)createFileHandleWithName:(NSString *)aFileName isAppend:(BOOL)isAppend {
        NSFileHandle *fileHandle = nil;
        NSString *fileName = [self getFilePath:aFileName];
        int fd = -1;
        if (fileName) {
            if ([[NSFileManager defaultManager] fileExistsAtPath:fileName] && !isAppend) {
                [[NSFileManager defaultManager] removeItemAtPath:fileName error:nil];
            }
            int flags = O_WRONLY | O_APPEND | O_CREAT;
            fd = open([fileName fileSystemRepresentation], flags, 0644);
        }
        if (fd != -1) {
            fileHandle = [[NSFileHandle alloc] initWithFileDescriptor:fd closeOnDealloc:YES];
        }
        return fileHandle;
    }

    #pragma mark - lazy load

    -(NSMutableData *)mutabelData{
        if (!_mutabelData) {
            _mutabelData = [NSMutableData data];
        }
        return _mutabelData;
    }

    -(NSTimer *)readingTimer{
        if (!_readingTimer) {
            _readingTimer = [NSTimer scheduledTimerWithTimeInterval:1.0 target:self selector:@selector(startReadingTimer) userInfo:nil repeats:YES];
            [[NSRunLoop currentRunLoop] addTimer:_readingTimer forMode:UITrackingRunLoopMode];
        }
        return _readingTimer;
    }

    #endif

    @end
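`parseLogToDic:` turns the `key=value&key=value` log strings delivered with the StartWorkIng and Feedback callbacks into a dictionary, keeping only items that split on `=` into exactly two parts. The same rule as a C sketch, assuming fixed 64-byte key/value buffers (longer text is truncated); `parse_log_pairs` and `struct kv_pair` are hypothetical. Unlike `NSMutableDictionary`, where the last duplicate key wins, this keeps duplicates as separate entries.

```c
#include <string.h>

#define KV_MAX_LEN 64

struct kv_pair {
    char key[KV_MAX_LEN];
    char value[KV_MAX_LEN];
};

/* Splits "k1=v1&k2=v2" on '&' and stores items containing exactly one '='
   (i.e. two components, as in parseLogToDic). Over-long keys or values are
   truncated. Returns the number of pairs stored, at most max_pairs. */
size_t parse_log_pairs(const char *log, struct kv_pair *out, size_t max_pairs) {
    size_t count = 0;
    const char *item = log;
    while (item && count < max_pairs) {
        const char *end = strchr(item, '&');
        size_t len = end ? (size_t)(end - item) : strlen(item);
        const char *eq = memchr(item, '=', len);
        /* accept only if there is a first '=' and no second one */
        if (eq && memchr(eq + 1, '=', len - (size_t)(eq - item) - 1) == NULL) {
            size_t klen = (size_t)(eq - item);
            size_t vlen = len - klen - 1;
            if (klen >= KV_MAX_LEN) klen = KV_MAX_LEN - 1;
            if (vlen >= KV_MAX_LEN) vlen = KV_MAX_LEN - 1;
            memcpy(out[count].key, item, klen);
            out[count].key[klen] = '\0';
            memcpy(out[count].value, eq + 1, vlen);
            out[count].value[vlen] = '\0';
            count++;
        }
        item = end ? end + 1 : NULL;
    }
    return count;
}
```

For example, `"idx=1&sn=abc&broken&x=1=2&v="` yields three pairs: `idx`/`1`, `sn`/`abc` and `v`/empty, matching what `componentsSeparatedByString:` would accept.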

4. Notes

The Baidu speech SDK supports only the armv6 and armv7 device architectures; it does not support the x86_64 simulator architecture.

5. Developing in the Simulator

Approach:

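1. First, wrap all code that touches the Baidu speech SDK in a conditional-compilation macro so it is excluded from simulator builds, for example: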

    #if !TARGET_IPHONE_SIMULATOR
    // Baidu speech SDK calls (device builds only)
    self.asrEventManager = [BDSEventManager createEventManagerWithName:BDS_ASR_NAME];
    // ...other speech-related calls
    #endif

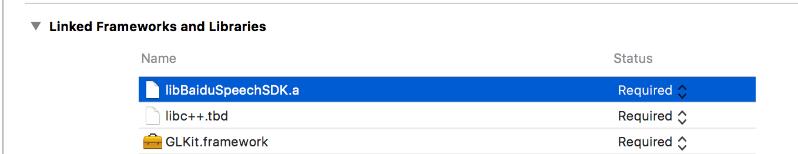

2. (The key step) When switching to the simulator, remove the libBaiduSpeechSDK.a static library from Linked Frameworks and Libraries. When switching back to a device, just add libBaiduSpeechSDK.a back.
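Because the whole `@implementation` body of BDHelper.m sits inside `#if !TARGET_IPHONE_SIMULATOR`, methods such as `sharedBDHelperWithVoiceFileName:` are declared in BDHelper.h but have no implementation in simulator builds, so calling them there would fail at runtime with an unrecognized selector. One option is an `#else` branch with no-op stubs. The pattern is sketched below in plain C, where `SPEECH_SDK_AVAILABLE` is a hypothetical stand-in for `!TARGET_IPHONE_SIMULATOR` and `start_recognition` is a hypothetical entry point, not a Baidu API.

```c
#include <stdio.h>

/* Hypothetical build switch: 1 on device builds, 0 on simulator builds.
   In the Xcode project, !TARGET_IPHONE_SIMULATOR plays this role. */
#ifndef SPEECH_SDK_AVAILABLE
#define SPEECH_SDK_AVAILABLE 0
#endif

/* Returns 0 when recognition was started, -1 when the SDK is compiled out. */
int start_recognition(void) {
#if SPEECH_SDK_AVAILABLE
    /* Device build: the real SDK call would go here. */
    return 0;
#else
    /* Simulator build: a no-op stub, so callers still compile and run. */
    fprintf(stderr, "speech SDK not available in this build\n");
    return -1;
#endif
}
```

Callers can then check the return value instead of crashing, and the simulator build contains no references to SDK symbols.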
