Deep learning II - III Batch Normalization - Normalizing activations in a network

Posted dqhl1990


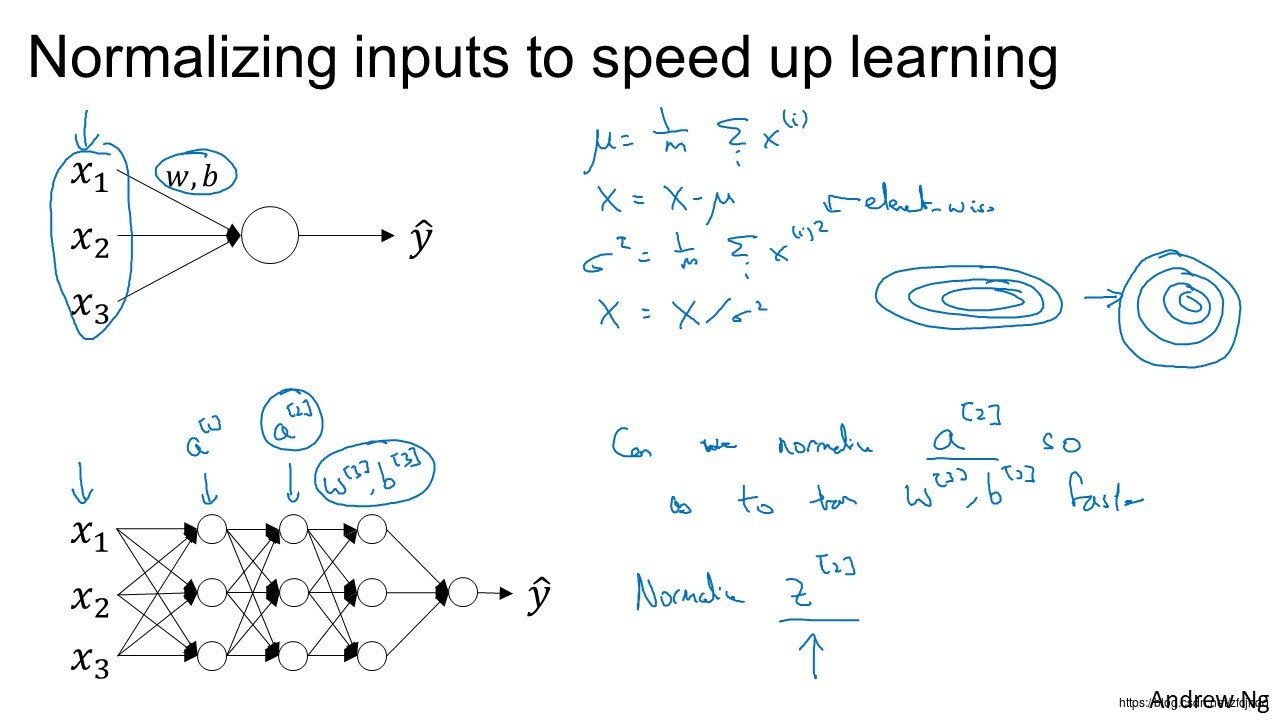

Normalizing activations in a network

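Earlier we noted that normalizing the inputs speeds up learning. A deep neural network has many layers, and the output of each layer becomes the input of the next, so normalizing each layer's $z^{[l]}$ (the common choice, and the one to use as the default) or $a^{[l]}$ speeds up learning in the same way.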

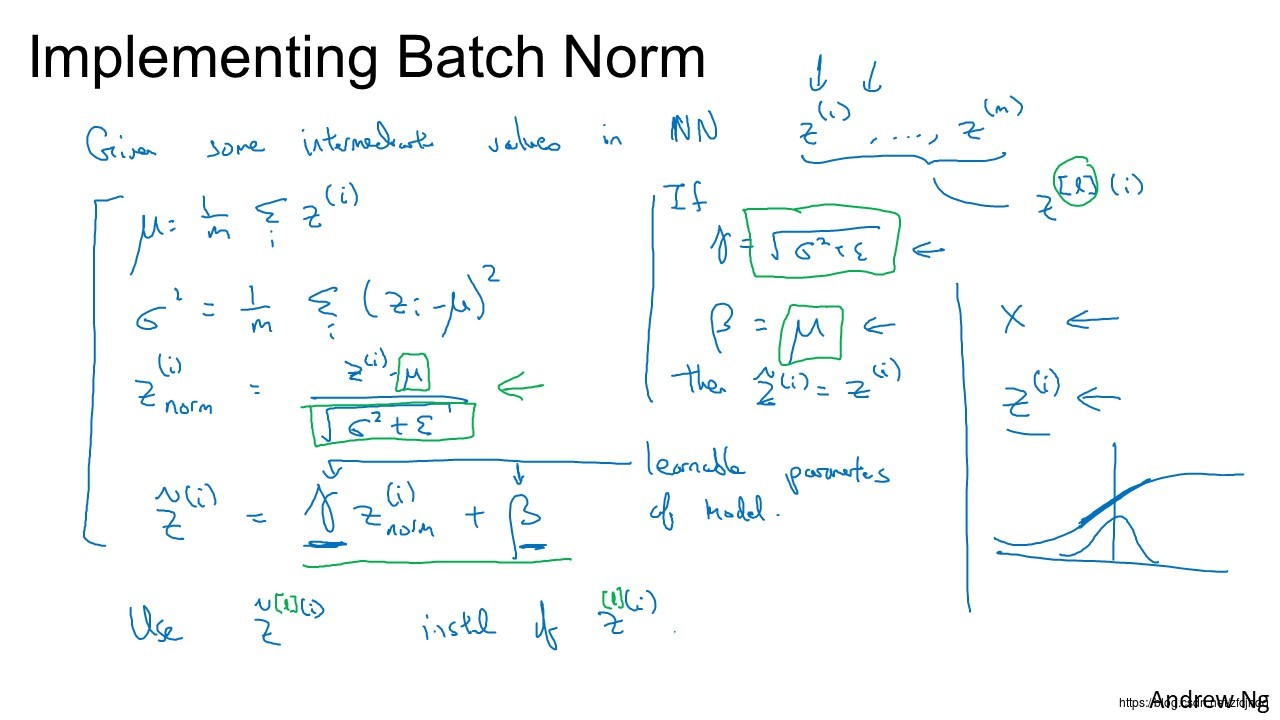

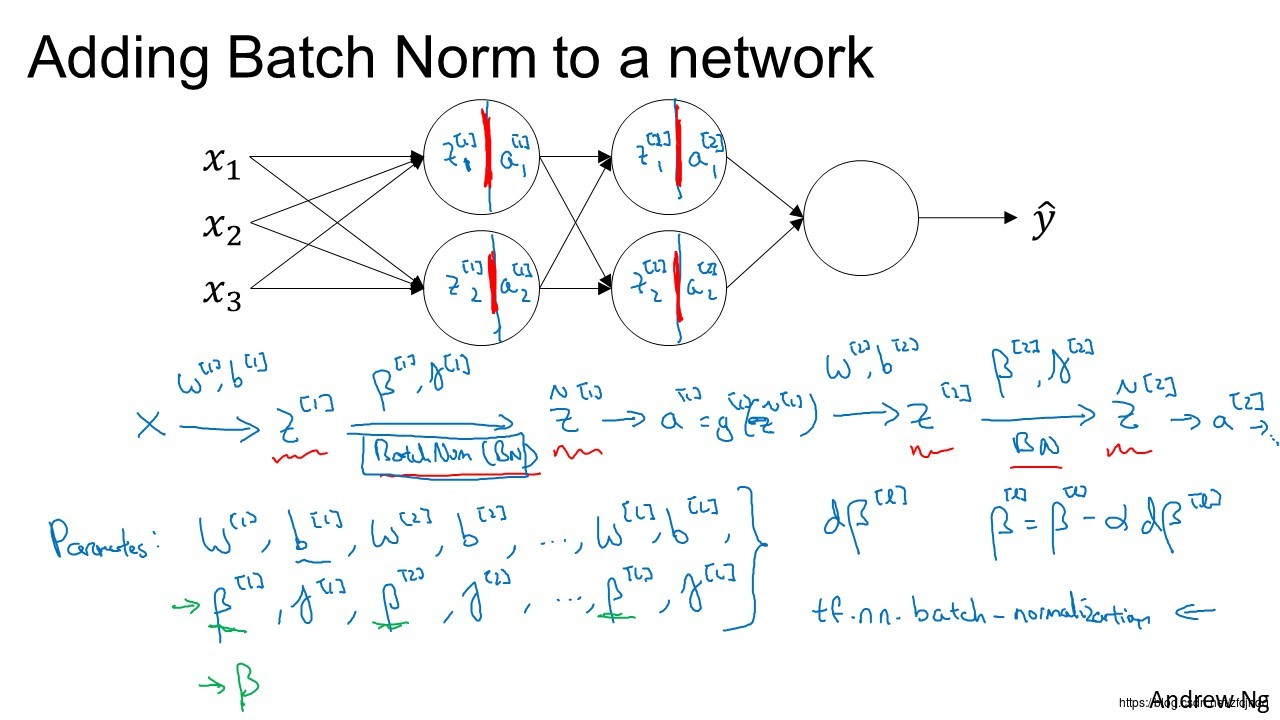

How to compute Batch Norm

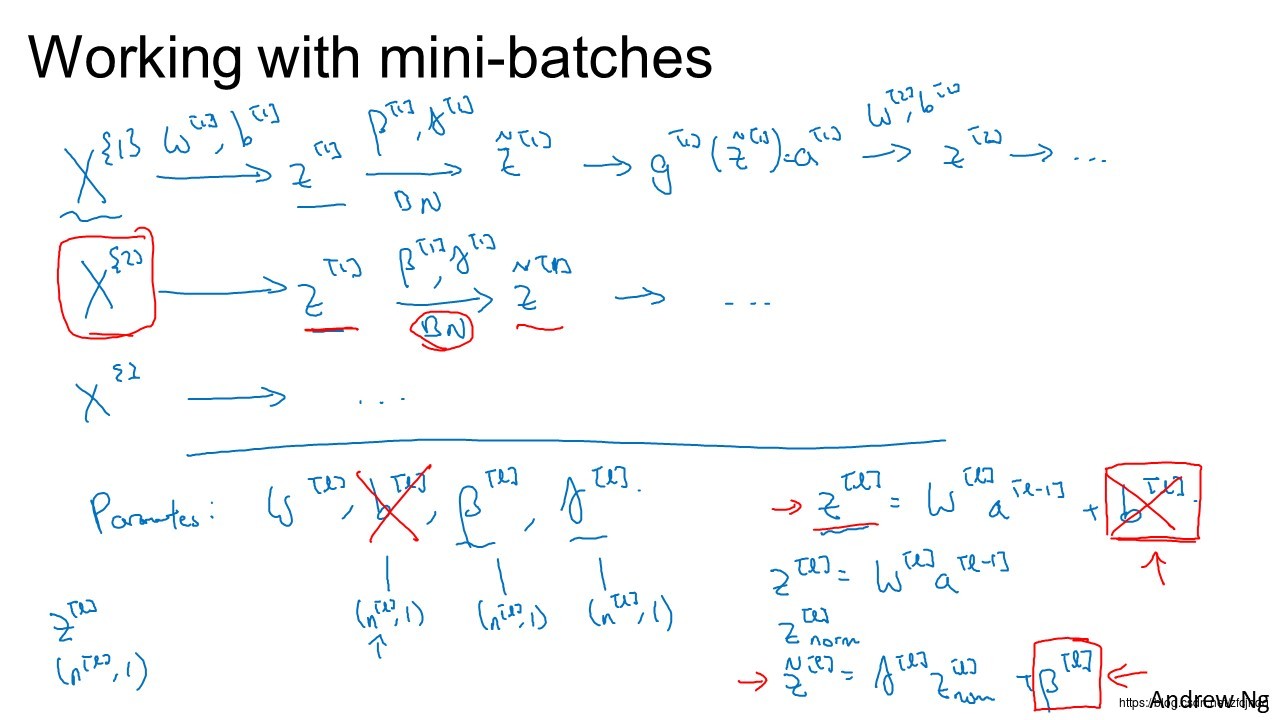

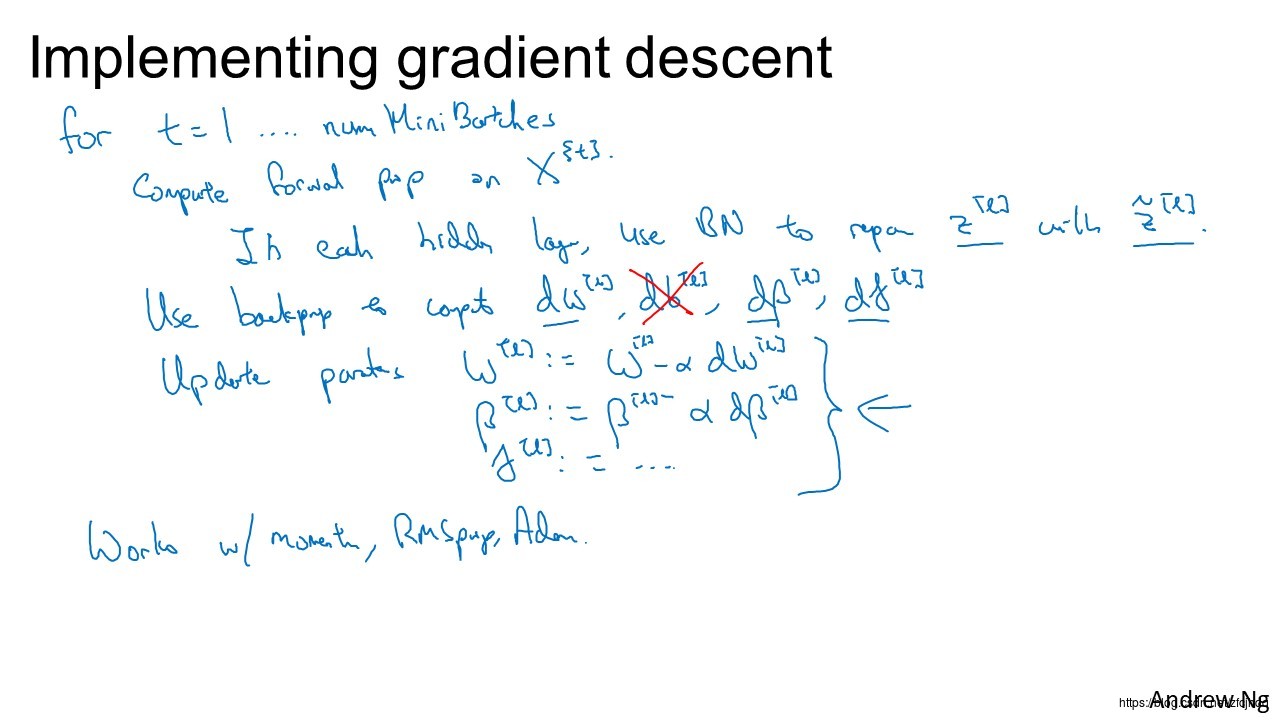
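
The figures above are not available as text. As a reading aid, here is the standard Batch Norm computation for the $m$ values $z^{(1)}, \dots, z^{(m)}$ of one layer (layer index $[l]$ omitted). The symbols $\mu$, $\sigma^2$, $\varepsilon$, $\gamma$, $\beta$ and $\tilde{z}$ are assumptions and may differ from the notation used in the figures.

$$
\mu = \frac{1}{m}\sum_{i=1}^{m} z^{(i)}, \qquad
\sigma^2 = \frac{1}{m}\sum_{i=1}^{m}\left(z^{(i)} - \mu\right)^2
$$

$$
z_{\text{norm}}^{(i)} = \frac{z^{(i)} - \mu}{\sqrt{\sigma^2 + \varepsilon}}, \qquad
\tilde{z}^{(i)} = \gamma\, z_{\text{norm}}^{(i)} + \beta
$$

Here $\varepsilon$ is a small constant that keeps the division stable, and $\gamma$ and $\beta$ are learnable parameters. With $\gamma = \sqrt{\sigma^2 + \varepsilon}$ and $\beta = \mu$ the transformation reduces to the identity, $\tilde{z}^{(i)} = z^{(i)}$, so the network can learn whatever mean and variance suit each layer.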

How to implement Batch Norm with mini-batches
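
The following is a minimal NumPy sketch of the forward pass only, not the original post's implementation. It assumes examples are stored as columns, so the mean and variance of $z$ are taken over the mini-batch axis, and the result is scaled and shifted by learnable parameters. The function name `batch_norm_forward`, the toy shapes, the batch size and the value of `eps` are all hypothetical choices made for illustration.

```python
import numpy as np

def batch_norm_forward(z, gamma, beta, eps=1e-8):
    """Normalize z over the mini-batch axis, then scale and shift.

    z:     array of shape (n_units, m), one column per example
    gamma: array of shape (n_units, 1), learnable scale
    beta:  array of shape (n_units, 1), learnable shift
    """
    mu = z.mean(axis=1, keepdims=True)       # per-unit mean over the mini-batch
    var = z.var(axis=1, keepdims=True)       # per-unit variance over the mini-batch
    z_norm = (z - mu) / np.sqrt(var + eps)   # zero mean, unit variance
    return gamma * z_norm + beta

# Toy data and parameters (assumed values, for illustration only).
rng = np.random.default_rng(0)
X = rng.normal(loc=3.0, scale=2.0, size=(5, 256))  # 5 features, 256 examples
W = rng.normal(size=(3, 5))                        # one layer with 3 units
gamma = np.ones((3, 1))
beta = np.zeros((3, 1))

batch_size = 64
outputs = []
for start in range(0, X.shape[1], batch_size):
    x_batch = X[:, start:start + batch_size]
    # No bias term: subtracting the mini-batch mean would cancel any
    # constant added to z, and beta takes over the role of the shift.
    z = W @ x_batch
    z_tilde = batch_norm_forward(z, gamma, beta)
    outputs.append(z_tilde)
```

Each mini-batch is normalized with its own statistics, so `mu` and `var` change from batch to batch. Backpropagation through the normalization, and the statistics to use at test time, are outside the scope of this sketch.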
