darknet YoloV4 Manual (Translation)

Posted henreash


Preface: this article was compiled by the editors of 小常识网 (cha138.com) and presents a translation of the darknet YoloV4 manual.

https://github.com/AlexeyAB/darknet

(Neural networks for object detection) – Tensor Cores can be used on Linux and Windows

Yolo v4 paper: https://arxiv.org/abs/2004.10934

More details: http://pjreddie.com/darknet/yolo/

- Requirements (and how to install dependencies)

- Pre-trained models

- Explanations in issues

- Yolo v3 in other frameworks (TensorRT, TensorFlow, PyTorch, OpenVINO, OpenCV-dnn, TVM,...)

- Datasets

- Improvements in this repository

- How to use

- How to compile on Linux

- Using cmake

- Using make

- How to compile on Windows

- Using CMake-GUI

- Using vcpkg

- Legacy way

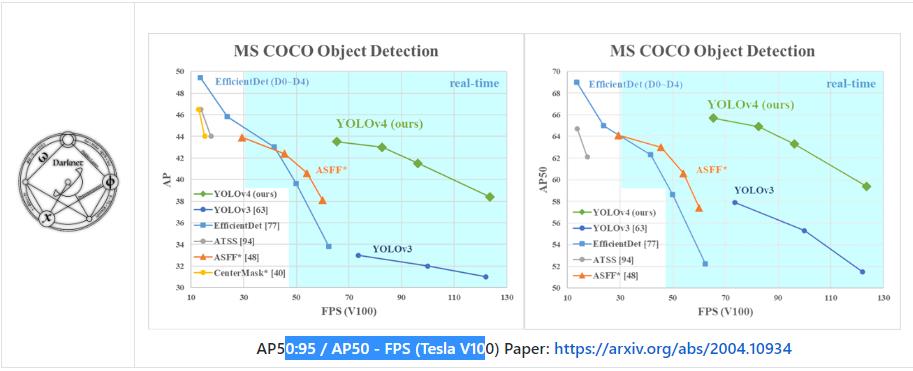

- Training and Evaluation of speed and accuracy on MS COCO

- How to train with multi-GPU

- How to train (to detect your custom objects)

- How to train tiny-yolo (to detect your custom objects)

- When should I stop training

- How to improve object detection

- How to mark bounding boxes of objects and create annotation files

- How to use Yolo as DLL and SO libraries

- Yolo v4 full comparison: map_fps

- CSPNet: paper and map_fps comparison: https://github.com/WongKinYiu/CrossStagePartialNetworks

- MS COCO YoloV3: Speed / Accuracy (mAP@0.5) chart

- MS COCO YoloV3 (YoloV3 vs RetinaNet) - Figure 3: https://arxiv.org/pdf/1804.02767v1.pdf

- Pascal VOC 2007 YoloV2: https://hsto.org/files/a24/21e/068/a2421e0689fb43f08584de9d44c2215f.jpg

- Pascal VOC 2012 YoloV2(comp4): https://hsto.org/files/3a6/fdf/b53/3a6fdfb533f34cee9b52bdd9bb0b19d9.jpg

How to evaluate AP of YoloV4 on the MS COCO evaluation server

- Download and unzip the test-dev2017 dataset from the MS COCO server: http://images.cocodataset.org/zips/test2017.zip

- Download the list of images for the detection task and replace the paths with your actual paths: https://raw.githubusercontent.com/AlexeyAB/darknet/master/scripts/testdev2017.txt

- Download the yolov4.weights file: https://drive.google.com/open?id=1cewMfusmPjYWbrnuJRuKhPMwRe_b9PaT

- Change the cfg/coco.data file to:

classes= 80

train = <replace with your path>/trainvalno5k.txt

valid = <replace with your path>/testdev2017.txt

names = data/coco.names

backup = backup

eval=coco

- Create the /results/ folder next to the ./darknet executable file

- Run validation: ./darknet detector valid cfg/coco.data cfg/yolov4.cfg yolov4.weights

- Rename the file /results/coco_results.json to detections_test-dev2017_yolov4_results.json and compress it into detections_test-dev2017_yolov4_results.zip

- Submit detections_test-dev2017_yolov4_results.zip to the MS COCO evaluation server for test-dev2019 (bbox)
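You can script the rename-and-compress step. Below is a minimal Python sketch that uses only the standard library. It assumes `./darknet detector valid` has already written `coco_results.json` into the results folder. The function name `package_coco_results` and its `model_tag` parameter are hypothetical and not part of darknet.

```python
import shutil
import zipfile
from pathlib import Path


def package_coco_results(results_dir: str, model_tag: str = "yolov4") -> Path:
    """Rename coco_results.json and zip it for the COCO test-dev upload.

    Assumes <results_dir>/coco_results.json was produced by
    `./darknet detector valid ...` (hypothetical helper, not a darknet tool).
    """
    results = Path(results_dir)
    stem = f"detections_test-dev2017_{model_tag}_results"

    # Step 1: rename coco_results.json to the name the evaluation server expects.
    renamed = results / f"{stem}.json"
    shutil.move(str(results / "coco_results.json"), str(renamed))

    # Step 2: compress it into <stem>.zip, storing the JSON at the archive root.
    archive = results / f"{stem}.zip"
    with zipfile.ZipFile(archive, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        zf.write(renamed, arcname=renamed.name)
    return archive
```

For example, running `package_coco_results("results")` from the darknet directory would produce `results/detections_test-dev2017_yolov4_results.zip`. This sketch puts the JSON at the root of the archive via `arcname`. Check the COCO upload instructions for the exact layout they expect.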

How to evaluate FPS of YoloV4 on GPU

- Compile Darknet with GPU=1 CUDNN=1 CUDNN_HALF=1 OPENCV=1 in the Makefile (or use the same settings with CMake)

- Download yolov4.weights: https://drive.google.com/open?id=1cewMfusmPjYWbrnuJRuKhPMwRe_b9PaT

- Get any .avi/.mp4 video file (preferably no larger than 1920x1080, to avoid a CPU performance bottleneck)

- Run one of the two commands below and look at the average FPS:

- including video_capturing + NMS + drawing_bboxes: ./darknet detector demo cfg/coco.data cfg/yolov4.cfg yolov4.weights test.mp4 -dont_show -ext_output

- excluding video_capturing + NMS + drawing_bboxes: ./darknet detector demo cfg/coco.data cfg/yolov4.cfg yolov4.weights test.mp4 -benchmark

Pre-trained models

There are weights-files for different cfg-files (trained on the MS COCO dataset):

FPS on RTX 2070 (R) and Tesla V100 (V):

- yolov4.cfg - 245 MB: yolov4.weights paper Yolo v4

- width=608 height=608 in cfg: 65.7% mAP@0.5 (43.5% AP@0.5:0.95) - 34(R) FPS / 62(V) FPS - 128.5 BFlops

- width=512 height=512 in cfg: 64.9% mAP@0.5 (43.0% AP@0.5:0.95) - 45(R) FPS / 83(V) FPS - 91.1 BFlops

- width=416 height=416 in cfg: 62.8% mAP@0.5 (41.2% AP@0.5:0.95) - 55(R) FPS / 96(V) FPS - 60.1 BFlops

- width=320 height=320 in cfg: 60% mAP@0.5 ( 38% AP@0.5:0.95) - 63(R) FPS / 123(V) FPS - 35.5 BFlops

- yolov3-tiny-prn.cfg - 33.1% mAP@0.5 - 370(R) FPS - 3.5 BFlops - 18.8 MB: yolov3-tiny-prn.weights

- enet-coco.cfg (EfficientNetB0-Yolov3) - 45.5% mAP@0.5 - 55(R) FPS - 3.7 BFlops - 18.3 MB: enetb0-coco_final.weights

- yolov3-openimages.cfg - 247 MB - 18(R) FPS - OpenImages dataset: yolov3-openimages.weights
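The BFlops listed for yolov4.cfg grow with the number of input pixels, because every convolution runs at every spatial position. The Python sketch below scales the 608x608 figure by (width × height) / 608². It is an approximation for illustration, and the function name is hypothetical. The multiple-of-32 check assumes the usual darknet requirement for width/height in the cfg.

```python
def estimate_bflops(width: int, height: int,
                    ref_bflops: float = 128.5, ref_size: int = 608) -> float:
    """Approximate yolov4.cfg BFlops at a new input size.

    Convolution cost is proportional to the number of spatial positions, so
    BFlops scale with (width * height) / ref_size**2 for a fixed architecture.
    """
    if width % 32 or height % 32:
        raise ValueError("width and height should be multiples of 32")
    return ref_bflops * (width * height) / (ref_size * ref_size)


for size in (608, 512, 416, 320):
    # Compare with the listed values: 128.5, 91.1, 60.1, 35.5 BFlops.
    print(f"{size}x{size}: {estimate_bflops(size, size):.1f} BFlops")
```

The estimates (128.5, 91.1, 60.2, 35.6) match the listed figures to within about 0.1 BFlops. FPS does not scale the same way. For example, going from 608 to 512 cuts compute by about 29%, but RTX 2070 FPS rises only from 34 to 45.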

Yolo v3 models

- csresnext50-panet-spp-original-optimal.cfg - 65.4% mAP@0.5 (43.2% AP@0.5:0.95) - 32(R) FPS - 100.5 BFlops - 217 MB: csresnext50-panet-spp-original-optimal_final.weights

- yolov3-spp.cfg - 60.6% mAP@0.5 - 38(R) FPS - 141.5 BFlops - 240 MB: yolov3-spp.weights

- csresnext50-panet-spp.cfg - 60.0% mAP@0.5 - 44 FPS - 71.3 BFlops - 217 MB: csresnext50-panet-spp_final.weights

- yolov3.cfg - 55.3% mAP@0.5 - 66(R) FPS - 65.9 BFlops - 236 MB: yolov3.weights

- yolov3-tiny.cfg - 33.1% mAP@0.5 - 345(R) FPS - 5.6 BFlops - 33.7 MB: yolov3-tiny.weights

Yolo v2 models

- yolov2.cfg (194 MB COCO Yolo v2) - requires 4 GB GPU-RAM: https://pjreddie.com/media/files/yolov2.weights

- yolo-voc.cfg (194 MB VOC Yolo v2) - requires 4 GB GPU-RAM: http://pjreddie.com/media/files/yolo-voc.weights

- yolov2-tiny.cfg (43 MB COCO Yolo v2) - requires 1 GB GPU-RAM: https://pjreddie.com/media/files/yolov2-tiny.weights

- yolov2-tiny-voc.cfg (60 MB VOC Yolo v2) - requires 1 GB GPU-RAM: http://pjreddie.com/media/files/yolov2-tiny-voc.weights

- yolo9000.cfg (186 MB Yolo9000-model) - requires 4 GB GPU-RAM: http://pjreddie.com/media/files/yolo9000.weights

Put the downloaded weights files in the same directory as darknet.exe.

You can get the cfg-files from the darknet/cfg/ directory.

Requirements

- Windows or Linux

- CMake >= 3.8 for modern CUDA support: https://cmake.org/download/

- CUDA 10.0: https://developer.nvidia.com/cuda-toolkit-archive (on Linux, also do the Post-installation Actions)

- OpenCV >= 2.4: install it with your preferred package manager (brew, apt), build it from source with vcpkg, or download it from the OpenCV official site (on Windows, set the system variable OpenCV_DIR = C:\opencv\build, the folder that contains the include and x64 folders)

- cuDNN >= 7.0 for CUDA 10.0: https://developer.nvidia.com/rdp/cudnn-archive (on Linux, copy cudnn.h, libcudnn. as described here: https://docs.nvidia.com/deeplearning/sdk/cudnn-install/index.html#installlinux-tar ; on Windows, copy cudnn.h, cudnn64_7.dll and cudnn64_7.lib as described here: https://docs.nvidia.com/deeplearning/sdk/cudnn-install/index.html#installwindows )

- GPU with CC >= 3.0: https://en.wikipedia.org/wiki/CUDA#GPUs_supported

- GCC or Clang on Linux; MSVC 2015/2017/2019 on Windows: https://visualstudio.microsoft.com/thank-you-downloading-visual-studio/?sku=Community

Yolo v3 in other frameworks

- TensorFlow: convert yolov3.weights/cfg files to yolov3.ckpt/pb/meta by using the mystic123 or jinyu121 projects, and TensorFlow-lite

- Intel OpenVINO 2019 R1 (Myriad X / USB Neural Compute Stick / Arria FPGA): read this manual

- OpenCV-dnn: the fastest implementation for CPU (x86/ARM-Android). OpenCV compiled with the OpenVINO backend can also run on Myriad X / USB Neural Compute Stick / Arria FPGA. Use yolov3.weights/cfg with: C++ example or Python example

- PyTorch > ONNX > CoreML > iOS: how to convert cfg/weights-files to a pt-file: ultralytics/yolov3 and iOS App

- TensorRT for YOLOv3 (-70% faster inference): Yolo is natively supported in DeepStream 4.0, read the PDF

- TVM: compiles deep learning models (Keras, MXNet, PyTorch, Tensorflow, CoreML, DarkNet) into minimal deployable modules for various hardware backends (CPUs, GPUs, FPGA, and specialized accelerators): https://tvm.ai/about

- OpenDataCam: detects, tracks and counts moving objects by using Yolo: https://github.com/opendatacam/opendatacam#-hardware-pre-requisite

- Netron: a visualizer for neural networks: https://github.com/lutzroeder/netron
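Here is a rough illustration of the OpenCV-dnn route as a Python sketch. It uses OpenCV's `cv2.dnn.readNetFromDarknet`, `blobFromImage` and `NMSBoxes`. It assumes each output row has the layout [center_x, center_y, width, height, objectness, class scores...], with coordinates normalized to [0, 1], as in common OpenCV YOLO samples.

Some choices are illustrative and not taken from this manual: the helper names, the 416 input size, and the 0.45 NMS threshold. The 0.25 confidence threshold mirrors `-thresh 0.25` in the commands. `cv2` is imported inside `detect`, so the pure-Python decoding step also works on its own.

```python
def decode_detections(rows, img_w, img_h, conf_threshold=0.25):
    """Convert YOLO output rows into pixel boxes [left, top, width, height]."""
    boxes, confidences, class_ids = [], [], []
    for row in rows:
        scores = [float(s) for s in row[5:]]
        class_id = max(range(len(scores)), key=scores.__getitem__)
        confidence = scores[class_id]
        if confidence < conf_threshold:
            continue
        cx, cy, w, h = (float(v) for v in row[:4])
        # Normalized center/size -> top-left corner and size in pixels.
        left = int((cx - w / 2) * img_w)
        top = int((cy - h / 2) * img_h)
        boxes.append([left, top, int(w * img_w), int(h * img_h)])
        confidences.append(confidence)
        class_ids.append(class_id)
    return boxes, confidences, class_ids


def detect(image_path, cfg="cfg/yolov3.cfg", weights="yolov3.weights",
           size=416, conf_threshold=0.25, nms_threshold=0.45):
    """Run a Darknet model through OpenCV-dnn; return (class_id, confidence, box) tuples."""
    import cv2  # lazy import: decode_detections does not need OpenCV

    net = cv2.dnn.readNetFromDarknet(cfg, weights)
    image = cv2.imread(image_path)
    if image is None:
        raise FileNotFoundError(image_path)
    img_h, img_w = image.shape[:2]
    # Scale pixels to [0, 1], resize to the network input, convert BGR -> RGB.
    blob = cv2.dnn.blobFromImage(image, 1 / 255.0, (size, size), swapRB=True, crop=False)
    net.setInput(blob)
    outputs = net.forward(net.getUnconnectedOutLayersNames())
    rows = [row for out in outputs for row in out]
    boxes, confs, ids = decode_detections(rows, img_w, img_h, conf_threshold)
    if not boxes:
        return []
    keep = cv2.dnn.NMSBoxes(boxes, confs, conf_threshold, nms_threshold)
    # Depending on the OpenCV version, NMSBoxes returns an Nx1 array, a flat array, or ().
    indices = [int(i) for i in (keep.flatten() if hasattr(keep, "flatten") else keep)]
    return [(ids[i], confs[i], boxes[i]) for i in indices]
```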

Datasets

- MS COCO: use ./scripts/get_coco_dataset.sh to get the labeled MS COCO detection dataset

- OpenImages: use python ./scripts/get_openimages_dataset.py to get the labeled train detection dataset

- Pascal VOC: use python ./scripts/voc_label.py to get the labeled Train/Test/Val detection datasets

- ILSVRC2012 (ImageNet classification): use ./scripts/get_imagenet_train.sh (and imagenet_label.sh to get the labeled set)

- German/Belgium/Russian/LISA/MASTIF traffic sign detection datasets, see:

https://github.com/angeligareta/Datasets2Darknet#detection-task
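Darknet label files store one object per line as `<object-class> <x_center> <y_center> <width> <height>`, with coordinates relative to the image width and height. The Python sketch below shows the core conversion from a pixel-space Pascal VOC box (xmin, ymin, xmax, ymax) to that format.

This is a simplified illustration, not the voc_label.py script itself. The real script may apply small adjustments, such as offsets for VOC's 1-based pixel coordinates. The function names and the sample box are hypothetical.

```python
def voc_box_to_yolo(img_w, img_h, xmin, ymin, xmax, ymax):
    """Convert a pixel-space VOC box to normalized (x_center, y_center, width, height)."""
    x_center = (xmin + xmax) / 2.0 / img_w
    y_center = (ymin + ymax) / 2.0 / img_h
    width = (xmax - xmin) / img_w
    height = (ymax - ymin) / img_h
    return x_center, y_center, width, height


def yolo_label_line(class_id, img_w, img_h, box):
    """Format one line of a darknet label file: '<class> <x_center> <y_center> <width> <height>'."""
    x, y, w, h = voc_box_to_yolo(img_w, img_h, *box)
    return f"{class_id} {x:.6f} {y:.6f} {w:.6f} {h:.6f}"


# Hypothetical example: class 11 in a 500x375 image, box (xmin, ymin, xmax, ymax).
print(yolo_label_line(11, 500, 375, (48, 240, 195, 371)))
```

The example prints `11 0.243000 0.814667 0.294000 0.349333`. Each image gets a `.txt` file containing one such line per object.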

Example results

https://www.youtube.com/watch?v=MPU2HistivI

Others: https://www.youtube.com/user/pjreddie/videos

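Improvements in this repository

- added support for Windows

- added state-of-the-art models: CSP, PRN (Partial Residual Networks), EfficientNet

- added layers: [conv_lstm], [scale_channels] SE/ASFF/BiFPN, [local_avgpool], [sam], [Gaussian_yolo], [reorg3d] (fixed [reorg]), fixed [batchnorm]

- added the ability to train recurrent models (with conv-lstm [conv_lstm] / conv-rnn [crnn] layers) for accurate detection on video

- added data augmentation parameters: [net] mixup=1 cutmix=1 mosaic=1 blur=1. Added activations: SWISH, MISH, NORM_CHAN, NORM_CHAN_SOFTMAX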

- added the ability to train with GPU-processing using CPU-RAM to increase the mini_batch_size and increase accuracy (instead of batch-norm sync)

- improved binary neural network performance 2x-4x for detection on CPU and GPU if you trained your own weights by using this XNOR-net model (bit-1 inference): https://github.com/AlexeyAB/darknet/blob/master/cfg/yolov3-tiny_xnor.cfg

- improved neural network performance by ~7% by fusing 2 layers into 1: Convolutional + Batch-norm

- improved performance: detection is 2x faster on Volta/Turing GPUs (Tesla V100, GeForce RTX, ...) using Tensor Cores if CUDNN_HALF is defined in the Makefile or darknet.sln

- improved performance ~1.2x on FullHD and ~2x on 4K for detection on video (file/stream) using darknet detector demo...

- improved data augmentation performance 3.5x for training (using OpenCV SSE/AVX functions instead of hand-written functions), which removes the bottleneck for training on multi-GPU or GPU Volta

- improved performance of detection and training on Intel CPUs with AVX (Yolo v3 ~85%)

- optimized memory allocation during network resizing when random=1

- optimized GPU initialization for detection: batch=1 is used initially instead of re-initializing with batch=1

- added correct calculation of mAP, F1, IoU, Precision-Recall using the command darknet detector map…

- added drawing of the average-Loss and accuracy-mAP chart (-map flag) during training

- run ./darknet detector demo ... -json_port 8070 -mjpeg_port 8090 as a JSON and MJPEG server to get results online over the network by using your software or a Web browser

- added calculation of anchors for training

- added an example of detecting and tracking objects: https://github.com/AlexeyAB/darknet/blob/master/src/yolo_console_dll.cpp

- run-time tips and warnings if you use an incorrect cfg-file or dataset

- many other fixes in the code...
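The Convolutional + Batch-norm fusion listed above works because, at inference time, batch-norm is a fixed per-channel affine transform. It can therefore be folded into the preceding convolution. With s = gamma / sqrt(var + eps), the fused weights are w·s and the fused bias is beta + (b - mean)·s.

The Python sketch below applies this algebra to flattened kernels and checks the result against conv-then-BN. It illustrates the technique and is not darknet's C implementation. The value eps = 1e-5 and the function names are assumptions.

```python
import math


def fuse_conv_bn(weights, conv_bias, gamma, beta, mean, var, eps=1e-5):
    """Fold inference-time batch-norm into the preceding convolution.

    weights[c] is the flattened kernel of output channel c; the other
    arguments are per-channel lists. Returns (fused_weights, fused_biases).
    """
    fused_w, fused_b = [], []
    for c, kernel in enumerate(weights):
        scale = gamma[c] / math.sqrt(var[c] + eps)  # s = gamma / sqrt(var + eps)
        fused_w.append([k * scale for k in kernel])  # w' = w * s
        fused_b.append(beta[c] + (conv_bias[c] - mean[c]) * scale)  # b' = beta + (b - mean) * s
    return fused_w, fused_b


def conv_then_bn(x, kernel, b, gamma, beta, mean, var, eps=1e-5):
    """Reference path for one output value: dot-product 'convolution', then batch-norm."""
    z = sum(k * xi for k, xi in zip(kernel, x)) + b
    return gamma * (z - mean) / math.sqrt(var + eps) + beta


# Toy 2-channel check: the fused layer should reproduce conv followed by BN.
x = [0.5, -1.0, 2.0]
W = [[0.2, 0.1, -0.3], [1.0, 0.0, 0.5]]
b, g, be, m, v = [0.05, -0.2], [1.5, 0.8], [0.1, -0.3], [0.2, 0.4], [0.9, 2.0]
fw, fb = fuse_conv_bn(W, b, g, be, m, v)
for c in range(2):
    fused = sum(k * xi for k, xi in zip(fw[c], x)) + fb[c]
    print(c, fused, conv_then_bn(x, W[c], b[c], g[c], be[c], m[c], v[c]))
```

The speedup comes from removing one pass over every feature map. The outputs stay the same up to floating-point rounding.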

Added manual: How to train Yolo v4-v2 (to detect your custom objects)

Also, you might be interested in a simplified repository that implements INT8-quantization (about 30% faster, with about 1% lower mAP): https://github.com/AlexeyAB/yolo2_light

How to use on the command line

On Linux use ./darknet instead of darknet.exe, like this: ./darknet detector test ./cfg/coco.data ./cfg/yolov4.cfg ./yolov4.weights

On Linux, the ./darknet executable is in the root directory; on Windows, find it in the \build\darknet\x64 directory

- Yolo v4 COCO - image: darknet.exe detector test cfg/coco.data cfg/yolov4.cfg yolov4.weights -thresh 0.25

- Output coordinates of objects: darknet.exe detector test cfg/coco.data yolov4.cfg yolov4.weights -ext_output dog.jpg

- Yolo v4 COCO - video: darknet.exe detector demo cfg/coco.data cfg/yolov4.cfg yolov4.weights -ext_output test.mp4

- Yolo v4 COCO - WebCam 0: darknet.exe detector demo cfg/coco.data cfg/yolov4.cfg yolov4.weights -c 0

- Yolo v4 COCO net-videocam - Smart WebCam: darknet.exe detector demo cfg/coco.data cfg/yolov4.cfg yolov4.weights http://192.168.0.80:8080/video?dummy=param.mjpg

- Yolo v4 - save result videofile res.avi: darknet.exe detector demo cfg/coco.data cfg/yolov4.cfg yolov4.weights test.mp4 -out_filename res.avi

- Yolo v3 Tiny COCO - video: darknet.exe detector demo cfg/coco.data cfg/yolov3-tiny.cfg yolov3-tiny.weights test.mp4

- JSON and MJPEG server that allows multiple connections from your software or Web browser to ip-address:8070 and 8090: ./darknet detector demo ./cfg/coco.data ./cfg/yolov3.cfg ./yolov3.weights test50.mp4 -json_port 8070 -mjpeg_port 8090 -ext_output

- Yolo v3 Tiny on GPU #1: darknet.exe detector demo cfg/coco.data cfg/yolov3-tiny.cfg yolov3-tiny.weights -i 1 test.mp4

- Alternative method Yolo v3 COCO - image: darknet.exe detect cfg/yolov4.cfg yolov4.weights -i 0 -thresh 0.25

- Train on Amazon EC2. To see the mAP & Loss-chart, open a URL like http://ec2-35-160-228-91.us-west-2.compute.amazonaws.com:8090 in Chrome or Firefox (Darknet must be compiled with OpenCV): ./darknet detector train cfg/coco.data yolov4.cfg yolov4.conv.137 -dont_show -mjpeg_port 8090 -map

- 186 MB Yolo9000 - image: darknet.exe detector test cfg/combine9k.data cfg/yolo9000.cfg yolo9000.weights

- If you build an application with the cpp api, put data/9k.tree and data/coco9k.map in the same folder as your application

- To process a list of images data/train.txt and save the detection results to result.json: darknet.exe detector test cfg/coco.data cfg/yolov4.cfg yolov4.weights -ext_output -dont_show -out result.json < data/train.txt

- To process a list of images data/train.txt and save the detection results to result.txt: darknet.exe detector test cfg/coco.data cfg/yolov4.cfg yolov4.weights -dont_show -ext_output < data/train.txt > result.txt

- Pseudo-labelling: to process a list of images data/new_train.txt and save the detection results for each image in Yolo training annotation format as <image_name>.txt (this can be used to increase the amount of training data): darknet.exe detector test cfg/coco.data cfg/yolov4.cfg yolov4.weights -thresh 0.25 -dont_show -save_labels < data/new_train.txt

- To calculate anchors: darknet.exe detector calc_anchors data/obj.data -num_of_clusters 9 -width 416 -height 416

- To check accuracy mAP@IoU=50: darknet.exe detector map data/obj.data yolo-obj.cfg backup\yolo-obj_7000.weights

- To check accuracy mAP@IoU=75: darknet.exe detector map data/obj.data yolo-obj.cfg backup\yolo-obj_7000.weights -iou_thresh 0.75
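The -iou_thresh option changes how much a detection must overlap a ground-truth box to count as a true positive. The Python sketch below is a rough, self-contained illustration of what mAP@IoU=50 versus mAP@IoU=75 measures. It computes IoU, precision/recall/F1, and per-class average precision with all-point interpolation (the Pascal VOC 2010+ style).

Darknet's own `detector map` may differ in details such as the interpolation scheme. Treat this as an explanation of the metric, not a reimplementation. All function names are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall_f1(tp, fp, fn):
    """Precision, recall and F1 from true-positive/false-positive/false-negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def average_precision(detections, ground_truth, iou_thresh=0.5):
    """All-point interpolated AP for a single class.

    detections:   list of (image_id, confidence, box)
    ground_truth: dict image_id -> list of boxes
    A detection is a true positive if its best-overlapping ground-truth box has
    IoU >= iou_thresh and was not already matched by a higher-confidence detection.
    """
    n_gt = sum(len(boxes) for boxes in ground_truth.values())
    matched = {k: [False] * len(v) for k, v in ground_truth.items()}
    tp = fp = 0
    precisions, recalls = [], []
    for image_id, _conf, box in sorted(detections, key=lambda d: -d[1]):
        gts = ground_truth.get(image_id, [])
        overlaps = [iou(box, gt) for gt in gts]
        best_j = max(range(len(gts)), key=overlaps.__getitem__) if gts else -1
        if best_j >= 0 and overlaps[best_j] >= iou_thresh and not matched[image_id][best_j]:
            matched[image_id][best_j] = True
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / n_gt if n_gt else 0.0)
    # Interpolate: each precision becomes the max precision at any higher recall.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p  # area of each recall step
        prev_recall = r
    return ap
```

mAP is the mean of `average_precision` over all classes. For instance, a predicted box (0, 0, 10, 7) against a ground-truth box (0, 0, 10, 10) has IoU 0.7. It gives AP 1.0 with iou_thresh=0.5 but 0.0 with iou_thresh=0.75.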

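How to use a network video-camera mjpeg-stream on an Android smartphone

- Download an mjpeg-stream app for Android: IP Webcam / Smart WebCam

- Connect your Android phone to the computer by WiFi (through a WiFi-router) or USB

- Start Smart WebCam on your phone

- Replace the address below with the one shown in the phone's Smart WebCam app, and launch: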

- Yolo v4 COCO-model: darknet.exe detector demo data/coco.data yolov4.cfg yolov4.weights http://192.168.0.80:8080/video?dummy=param.mjpg -i 0

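How to compile on Linux (using cmake)

The CMakeLists.txt will attempt to find installed optional dependencies like CUDA, cuDNN and ZED, and build against them. It will also create a darknet shared object library file for use in your own code.

Inside the cloned repository: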

mkdir build-release

cd build-release

cmake ..

make

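make install

How to compile on Linux (using make)

Just run make in the darknet directory. Before running make, you can set the following options in the Makefile: link

- GPU=1 to build with CUDA to accelerate by using the GPU (CUDA should be in /usr/local/cuda)

- CUDNN=1 to build with cuDNN v5-v7 to accelerate training by using the GPU (cuDNN should be in /usr/local/cudnn)

- CUDNN_HALF=1 to build for Tensor Cores (on Titan V / Tesla V100 / DGX-2 and later): speeds up detection 3x and training 2x

- OPENCV=1 to build with OpenCV 4.x/3.x/2.4.x, which allows detection on video files and video streams from network cameras or web-cams

- DEBUG=1 to build the debug version of Yolo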

- OPENMP=1 to build with OpenMP support to accelerate Yolo by using multi-core CPU

- LIBSO=1 to build the library darknet.so and the runnable binary uselib that uses this library. You can try running: LD_LIBRARY_PATH=./:$LD_LIBRARY_PATH ./uselib test.mp4. For how to use this SO-library from your own code, see the C++ example: https://github.com/AlexeyAB/darknet/blob/master/src/yolo_console_dll.cpp or run: LD_LIBRARY_PATH=./:$LD_LIBRARY_PATH ./uselib data/coco.names cfg/yolov4.cfg yolov4.weights test.mp4

- ZED_CAMERA=1 to build with ZED-3D-camera support (the ZED SDK must be installed), then run: LD_LIBRARY_PATH=./:$LD_LIBRARY_PATH ./uselib data/coco.names cfg/yolov4.cfg yolov4.weights zed_camera
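If you switch these options often, you can script the edit. One example is the GPU=1 CUDNN=1 CUDNN_HALF=1 OPENCV=1 build used for the FPS measurement. The Python sketch below rewrites lines of the form NAME=value that start at the beginning of a line.

It assumes the options appear in the Makefile exactly in that form (for example GPU=0); verify this in your copy. The function names are hypothetical.

```python
import re
from pathlib import Path


def set_makefile_flags(makefile_text, flags):
    """Return the Makefile text with each NAME=value line set to the new value.

    Only lines that start with exactly 'NAME=' are changed, so 'CUDNN' does
    not touch 'CUDNN_HALF', and conditionals like 'ifeq ($(GPU), 1)' are kept.
    """
    for name, value in flags.items():
        pattern = re.compile(rf"^{re.escape(name)}=.*$", re.MULTILINE)
        replacement = f"{name}={value}"
        # A function replacement stops re from interpreting backslashes in values.
        makefile_text, count = pattern.subn(lambda _m: replacement, makefile_text)
        if count == 0:
            raise KeyError(f"{name}= not found in the Makefile")
    return makefile_text


def update_makefile(path, flags):
    """Apply set_makefile_flags to the Makefile at `path`, in place."""
    makefile = Path(path)
    makefile.write_text(set_makefile_flags(makefile.read_text(), flags))
```

For example, `update_makefile("Makefile", {"GPU": 1, "CUDNN": 1, "CUDNN_HALF": 1, "OPENCV": 1})` followed by `make` would reproduce the GPU build described in the FPS section.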

To run the darknet examples in this article on Linux, use ./darknet instead of darknet.exe, i.e. use this command: ./darknet detector test ./cfg/coco.data ./cfg/yolov4.cfg ./yolov4.weights

How to compile on Windows (using CMake-GUI)

This is the recommended approach if you have Visual Studio 2015/2017/2019, CUDA > 10.0, cuDNN > 7.0 and OpenCV > 2.4 installed.

Note: make sure CUDA and OpenCV are installed.

Use CMake-GUI with the following steps:

- Configure

- Optional platform for generator (Set: x64)

- Finish

- Generate

- Open Project

- Set: x64 & Release

- Build

- Build solution

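How to compile on Windows (using vcpkg)

vcpkg is a tool that automatically downloads and builds dependency packages.

If you have already installed Visual Studio 2015/2017/2019, CUDA > 10.0, cuDNN > 7.0 and OpenCV > 2.4, compiling with CMake-GUI is recommended.

Otherwise, follow these steps:

- Install or update Visual Studio 2017 to the latest version, making sure it is fully patched (run the installer again if unsure). If you need to install from scratch, download it from Visual Studio Community.

- Install CUDA and cuDNN

- Install git and cmake, and make sure they are on the Path, at least for the current account

- Install vcpkg and try to install a test library to make sure everything is working, for example vcpkg install opengl

- Define an environment variable VCPKG_ROOT pointing to the install path of vcpkg

- Define another environment variable VCPKG_DEFAULT_TRIPLET with the value x64-windows

- Open Powershell and type these commands:

PS \> cd $env:VCPKG_ROOT

PS Code\vcpkg> .\vcpkg install pthreads opencv[ffmpeg] #replace with opencv[cuda,ffmpeg] in case you want to use cuda-accelerated openCV

- Open Powershell, go to the darknet folder and build with the command .\build.ps1. If you want to use Visual Studio, after the build you will find two solutions created by CMake, one in build_win_debug and the other in build_win_release, containing all the appropriate config flags for your system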

How to compile on Windows (legacy way)

- If you have CUDA 10.0, cuDNN 7.4 and OpenCV 3.x (with paths: C:\opencv_3.0\opencv\build\include & C:\opencv_3.0\opencv\build\x64\vc14\lib), then open build\darknet\darknet.sln, set x64 and Release https://hsto.org/webt/uh/fk/-e/uhfk-eb0q-hwd9hsxhrikbokd6u.jpeg and do: Build -> Build darknet. Also add the Windows system variable CUDNN with the path to CUDNN: https://user-images.githubusercontent.com/4096485/53249764-019ef880-36ca-11e9-8ffe-d9cf47e7e462.jpg

1.1. Find the files opencv_world320.dll and opencv_ffmpeg320_64.dll (or opencv_world340.dll and opencv_ffmpeg340_64.dll) in C:\opencv_3.0\opencv\build\x64\vc14\bin and put them next to darknet.exe

1.2. Check that there are bin and include folders in C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v10.0. If there aren't, copy them to this folder from the path where CUDA is installed

1.3. To install CUDNN (speedup neural network), do the following:


- download and install cuDNN v7.4.1 for CUDA 10.0: https://developer.nvidia.com/rdp/cudnn-archive

- add Windows system variable CUDNN with path to CUDNN: https://user-images.githubusercontent.com/4096485/53249764-019ef880-36ca-11e9-8ffe-d9cf47e7e462.jpg

- copy the file cudnn64_7.dll to the folder \build\darknet\x64 next to darknet.exe

1.4. If you want to build without CUDNN: open \darknet.sln -> (right click on project) -> properties -> C/C++ -> Preprocessor -> Preprocessor Definitions, and remove this: CUDNN;

- If you have another version of CUDA (not 10.0), open build\darknet\darknet.vcxproj in Notepad, find the 2 places with "CUDA 10.0" and change them to your CUDA version. Then open \darknet.sln -> (right click on project) -> properties -> CUDA C/C++ -> Device and remove ;compute_75,sm_75 there. Then do step 1

- If you don't have a GPU but have OpenCV 3.0 (with paths: C:\opencv_3.0\opencv\build\include & C:\opencv_3.0\opencv\build\x64\vc14\lib), open build\darknet\darknet_no_gpu.sln, set x64 and Release, and do: Build -> Build darknet_no_gpu

- If you have OpenCV 2.4.13 instead of 3.0, change the paths after \darknet.sln is opened:

4.1 (right click on project) -> properties -> C/C++ -> General -> Additional Include Directories: C:\opencv_2.4.13\opencv\build\include

4.2 (right click on project) -> properties -> Linker -> General -> Additional Library Directories: C:\opencv_2.4.13\opencv\build\x64\vc14\lib

- If you have a GPU with Tensor Cores (nVidia Titan V / Tesla V100 / DGX-2 and later), you can speed up detection 3x and training 2x: \darknet.sln -> (right click on project) -> properties -> C/C++ -> Preprocessor -> Preprocessor Definitions, and add here: CUDNN_HALF;

Note: CUDA must be installed only after Visual Studio has been installed.

How to compile (custom):

Also, you can create your own darknet.sln & darknet.vcxproj. This example is for CUDA 9.1 and OpenCV 3.0

Then add to your created project:

- (right click on project) -> properties -> C/C++ -> General -> Additional Include Directories, put here:

C:\opencv_3.0\opencv\build\include;..\..\3rdparty\include;%(AdditionalIncludeDirectories);$(CudaToolkitIncludeDir);$(CUDNN)\include

- (right click on project) -> Build dependencies -> Build Customizations -> check CUDA 9.1 or whatever version you have, for example as here: http://devblogs.nvidia.com/parallelforall/wp-content/uploads/2015/01/VS2013-R-5.jpg

- add to project:

- all .c files

- all .cu files

- file http_stream.cpp from the \src directory

- file darknet.h from the \include directory

- (right click on project) -> properties -> Linker -> General -> Additional Library Directories, put here:

C:\opencv_3.0\opencv\build\x64\vc14\lib;$(CUDA_PATH)\lib\$(PlatformName);$(CUDNN)\lib\x64;%(AdditionalLibraryDirectories)

- (right click on project) -> properties -> Linker -> Input -> Additional dependencies, put here:

..\..\3rdparty\lib\x64\pthreadVC2.lib;cublas.lib;curand.lib;cudart.lib;cudnn.lib;%(AdditionalDependencies)

- (right click on project) -> properties -> C/C++ -> Preprocessor -> Preprocessor Definitions

OPENCV;_TIMESPEC_DEFINED;_CRT_SECURE_NO_WARNINGS;_CRT_RAND_S;WIN32;NDEBUG;_CONSOLE;_LIB;%(PreprocessorDefinitions)

- compile to .exe (X64 & Release) and put the .dll files next to the .exe: https://hsto.org/webt/uh/fk/-e/uhfk-eb0q-hwd9hsxhrikbokd6u.jpeg

- pthreadVC2.dll, pthreadGC2.dll from \3rdparty\dll\x64

- cusolver64_91.dll, curand64_91.dll, cudart64_91.dll, cublas64_91.dll (91 for CUDA 9.1, or your version) from C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v9.1\bin

- For OpenCV 3.2: opencv_world320.dll and opencv_ffmpeg320_64.dll from C:\opencv_3.0\opencv\build\x64\vc14\bin

- For OpenCV 2.4.13: opencv_core2413.dll, opencv_highgui2413.dll and opencv_ffmpeg2413_64.dll from C:\opencv_2.4.13\opencv\build\x64\vc14\bin

How to train with multi-GPU:

- First train with 1 GPU for about 1000 iterations: darknet.exe detector train cfg/coco.data cfg/yolov4.cfg yolov4.conv.137

- Then stop, and continue training with multiple GPUs (for example 4 GPUs) from the partially-trained model /backup/yolov4_1000.weights: darknet.exe detector train cfg/coco.data cfg/yolov4.cfg /backup/yolov4_1000.weights -gpus 0,1,2,3

For small datasets only, it is sometimes better to decrease the learning rate: for 4 GPUs set learning_rate = 0.00025 (i.e. learning_rate = 0.001 / GPUs). In this case, also increase burn_in and max_batches in your cfg-file 4x, e.g. use burn_in = 4000 instead of 1000. If policy=steps is set, change the steps values in the same way.
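The scaling rule for multi-GPU training is simple arithmetic. The Python sketch below applies it for N GPUs and prints cfg-style lines. The max_batches and steps values in the example are hypothetical placeholders, and the function names are not part of darknet.

```python
def scale_for_gpus(n_gpus, base_lr=0.001, burn_in=1000, max_batches=None, steps=None):
    """Adjust [net] hyper-parameters for multi-GPU training on a small dataset.

    learning_rate = base_lr / GPUs; burn_in, max_batches and steps are
    multiplied by the number of GPUs (steps only matters when policy=steps).
    """
    if n_gpus < 1:
        raise ValueError("n_gpus must be >= 1")
    params = {"learning_rate": base_lr / n_gpus, "burn_in": burn_in * n_gpus}
    if max_batches is not None:
        params["max_batches"] = max_batches * n_gpus
    if steps is not None:
        params["steps"] = ",".join(str(s * n_gpus) for s in steps)
    return params


def to_cfg_lines(params):
    """Render the parameters as key=value lines for the [net] section."""
    return "\n".join(f"{key}={value}" for key, value in params.items())


# Hypothetical 4-GPU example; 6000 and 4800,5400 are placeholder values.
print(to_cfg_lines(scale_for_gpus(4, burn_in=1000, max_batches=6000, steps=[4800, 5400])))
```

With these inputs the sketch prints learning_rate=0.00025, burn_in=4000, max_batches=24000 and steps=19200,21600, one per line. Copy them into the [net] section of your cfg-file.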