Computing the Output Size of CNN Convolutional Layers

Posted 三つ叶


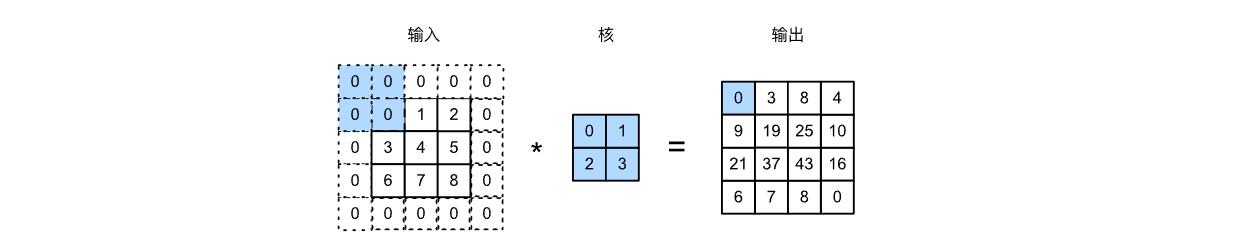

$$\text{output\_size} = \left\lfloor \frac{\text{input\_size} + 2 \times \text{padding} - \text{kernel\_size}}{\text{stride}} \right\rfloor + 1$$

The fraction is rounded down (floor). This is what nn.Conv2d always does and what nn.MaxPool2d does by default; MaxPool2d rounds up only when ceil_mode=True. The formula assumes dilation=1.
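As a quick check of the formula, here is a minimal sketch in plain Python. The helper name `conv_output_size` is hypothetical (it is not part of PyTorch). It assumes dilation 1 and floors the division, which matches the default behavior of `nn.Conv2d` and `nn.MaxPool2d`.

```python
def conv_output_size(input_size, kernel_size, stride=1, padding=0):
    """Output size along one spatial dimension of a conv/pool layer.

    Hypothetical helper: assumes dilation = 1 and floor rounding.
    """
    return (input_size + 2 * padding - kernel_size) // stride + 1


# 3x3 convolution, stride 1, padding 1: the size is unchanged.
print(conv_output_size(128, kernel_size=3, stride=1, padding=1))  # 128
# 2x2 max pooling, stride 2: the size is halved.
print(conv_output_size(128, kernel_size=2, stride=2))  # 64
# Odd input: (7 - 2) / 2 = 2.5 is floored to 2, so the result is 3.
print(conv_output_size(7, kernel_size=2, stride=2))  # 3
```

The last call is the case where the rounding direction matters: rounding up instead would give 4.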

    import torch.nn as nn


    class Classifier(nn.Module):
        def __init__(self):
            super(Classifier, self).__init__()
            # The arguments for commonly used modules:
            # torch.nn.Conv2d(in_channels, out_channels, kernel_size, stride=1, padding=0)
            # torch.nn.MaxPool2d(kernel_size, stride=None, padding=0)  # stride defaults to kernel_size

            # input: [batch_size, 3, 128, 128]
            # Conv2d/MaxPool2d output size:
            #   floor((input_size + 2*padding - kernel_size) / stride) + 1
            self.cnn_layers = nn.Sequential(
                nn.Conv2d(3, 64, kernel_size=3, stride=1, padding=1),     # (128+2-3)/1+1=128
                nn.BatchNorm2d(64),
                nn.ReLU(),
                nn.MaxPool2d(kernel_size=2, stride=2, padding=0),         # (128+0-2)/2+1=64

                nn.Conv2d(64, 128, kernel_size=3, stride=1, padding=1),   # (64+2-3)/1+1=64
                nn.BatchNorm2d(128),
                nn.ReLU(),
                nn.MaxPool2d(kernel_size=2, stride=2, padding=0),         # (64+0-2)/2+1=32

                nn.Conv2d(128, 256, kernel_size=3, stride=1, padding=1),  # (32+2-3)/1+1=32
                nn.BatchNorm2d(256),
                nn.ReLU(),
                nn.MaxPool2d(kernel_size=4, stride=4, padding=0),         # (32+0-4)/4+1=8
            )
            self.fc_layers = nn.Sequential(
                nn.Linear(256 * 8 * 8, 256),
                nn.ReLU(),
                nn.Linear(256, 256),
                nn.ReLU(),
                nn.Linear(256, 11)
            )

        def forward(self, x):
            # input (x): [batch_size, 3, 128, 128]
            # output: [batch_size, 11]

            # Extract features with the convolutional layers.
            x = self.cnn_layers(x)

            # The extracted feature map must be flattened before going to the fully-connected layers.
            x = x.flatten(1)

            # The features are transformed by the fully-connected layers to obtain the final logits.
            x = self.fc_layers(x)
            return x
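The `256 * 8 * 8` passed to the first `nn.Linear` can be derived by hand from the layer settings. The sketch below does that arithmetic in plain Python, without PyTorch. The `layers` list is a hand-copied summary of `cnn_layers` (an assumption of this example, not something read from the model), and BatchNorm2d and ReLU are left out because they do not change the shape.

```python
# (name, out_channels or None for pooling, kernel_size, stride, padding)
layers = [
    ("Conv2d", 64, 3, 1, 1),
    ("MaxPool2d", None, 2, 2, 0),
    ("Conv2d", 128, 3, 1, 1),
    ("MaxPool2d", None, 2, 2, 0),
    ("Conv2d", 256, 3, 1, 1),
    ("MaxPool2d", None, 4, 4, 0),
]

channels, size = 3, 128  # input: [batch_size, 3, 128, 128]
for name, out_channels, kernel_size, stride, padding in layers:
    # Floor division matches PyTorch's default rounding.
    size = (size + 2 * padding - kernel_size) // stride + 1
    if out_channels is not None:  # only convolutions change the channel count
        channels = out_channels
    print(f"{name}: [batch_size, {channels}, {size}, {size}]")

in_features = channels * size * size
print(in_features)  # 16384, i.e. 256 * 8 * 8
```

If the input resolution or any kernel, stride, or padding value changes, `in_features` changes with it, and the first `nn.Linear` has to be updated to match.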

Print the output shape of each layer:

    import torch

    net = Classifier().cnn_layers
    X = torch.rand(size=(1, 3, 128, 128), dtype=torch.float32)
    for layer in net:
        X = layer(X)
        print(layer.__class__.__name__, 'output shape: \t', X.shape)

Output:

    Conv2d output shape:      torch.Size([1, 64, 128, 128])
    BatchNorm2d output shape: torch.Size([1, 64, 128, 128])
    ReLU output shape:        torch.Size([1, 64, 128, 128])
    MaxPool2d output shape:   torch.Size([1, 64, 64, 64])
    Conv2d output shape:      torch.Size([1, 128, 64, 64])
    BatchNorm2d output shape: torch.Size([1, 128, 64, 64])
    ReLU output shape:        torch.Size([1, 128, 64, 64])
    MaxPool2d output shape:   torch.Size([1, 128, 32, 32])
    Conv2d output shape:      torch.Size([1, 256, 32, 32])
    BatchNorm2d output shape: torch.Size([1, 256, 32, 32])
    ReLU output shape:        torch.Size([1, 256, 32, 32])
    MaxPool2d output shape:   torch.Size([1, 256, 8, 8])

Conclusion

- A $3 \times 3$ convolution kernel with stride=1 and padding=1 does not change the spatial size of the image, because $(n + 2 \times 1 - 3)/1 + 1 = n$.

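- What flatten() does: x.flatten(1) collapses every dimension after the batch dimension into one, turning the [batch_size, 256, 8, 8] feature map into [batch_size, 256 * 8 * 8] so that it matches the input size of the first nn.Linear layer.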
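To make the effect of flatten on the shape concrete, the sketch below reproduces only the shape arithmetic of `Tensor.flatten(start_dim)` in plain Python. The helper `flatten_shape` is hypothetical (it is not a PyTorch function) and assumes a non-negative `start_dim`.

```python
from math import prod


def flatten_shape(shape, start_dim=1):
    """Shape after merging dimensions start_dim..end into a single dimension.

    Hypothetical helper: mirrors the shape effect of Tensor.flatten(start_dim)
    for non-negative start_dim only.
    """
    return tuple(shape[:start_dim]) + (prod(shape[start_dim:]),)


# Output of the last MaxPool2d in cnn_layers for a batch of one image.
print(flatten_shape((1, 256, 8, 8)))  # (1, 16384)
```

The batch dimension is kept, and 256 * 8 * 8 = 16384 is exactly the `in_features` of the first `nn.Linear`.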
