Enabling Jenkins-based DevOps in KubeSphere

Posted by 虎鲸不是鱼



DevOps Overview

Reference (KubeSphere official docs): https://kubesphere.com.cn/docs/v3.3/devops-user-guide/devops-overview/overview/

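DevOps is a set of practices and tools that automate the processes between IT and software development teams. As agile software development has grown in popularity, continuous integration (CI) and continuous delivery (CD) have become an ideal solution in this field. In a CI/CD workflow, every integration is verified by an automated build covering coding, release, and testing. This helps developers catch integration errors early and lets teams deliver internal software to production quickly, safely, and reliably.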

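However, the traditional Jenkins Controller-Agent architecture (multiple Agents working for one Controller) has the following drawbacks:

- If the Controller goes down, the entire CI/CD pipeline breaks down.

- Resources are allocated unevenly: pipeline jobs queue up on some Agents while other Agents sit idle.

- Different Agents may be configured with different environments and require different programming languages. These differences make management and maintenance inconvenient.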

Understanding KubeSphere DevOps

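KubeSphere DevOps projects support source code management tools such as GitHub, Git, and SVN. Users can build CI/CD pipelines in the graphical editing panel (Jenkinsfile out of SCM), or create Jenkinsfile-based pipelines from a code repository (Jenkinsfile in SCM).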

Features

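The KubeSphere DevOps system provides the following features:

- Independent DevOps projects with access-controlled CI/CD pipelines.

- Out-of-the-box DevOps functionality with no complex Jenkins configuration required.

- Support for Source-to-Image (S2I) and Binary-to-Image (B2I) for fast image delivery.

- Jenkinsfile-based pipelines that provide a consistent user experience and support multiple code repositories.

- A graphical editing panel for creating pipelines, with a low learning curve.

- A powerful tool integration mechanism, for example SonarQube for code quality checks.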

KubeSphere CI/CD Pipeline Workflow

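KubeSphere CI/CD pipelines run on underlying Kubernetes Jenkins Agents. These Jenkins Agents scale dynamically: they are provisioned or released according to job status. The Jenkins Controller and Agents run as Pods on KubeSphere nodes. The Controller runs on one of the nodes, and its configuration data is stored in a persistent volume claim. Agents run on various nodes, but they are not always running; instead, they are created on demand and deleted automatically.

When the Jenkins Controller receives a build request, it dynamically creates a Jenkins Agent in a Pod based on the requested label and registers it with the Controller. When the Agent finishes its job, it is released and its Pod is deleted.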
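The provision, run, release cycle described above can be illustrated with a small Python toy model. It is a sketch under stated assumptions, not how Jenkins or KubeSphere actually implement it: `Controller`, `AgentPod`, the `templates` mapping, and the label and image names are all hypothetical.

```python
"""Toy model of on-demand, label-based Jenkins agent provisioning.

Hypothetical throughout: Controller, AgentPod, the templates mapping and the
label/image names are invented for illustration. This is not the Jenkins
Kubernetes plugin API.
"""
from dataclasses import dataclass, field
from itertools import count


@dataclass
class AgentPod:
    name: str
    label: str


@dataclass
class Controller:
    templates: dict                       # label -> agent image (hypothetical)
    live_agents: dict = field(default_factory=dict)
    _ids: count = field(default_factory=count)

    def handle_build(self, job: str, label: str) -> str:
        if label not in self.templates:
            raise ValueError(f"no agent template for label {label!r}")
        # 1. Create an agent pod for the requested label and register it.
        pod = AgentPod(name=f"{label}-agent-{next(self._ids)}", label=label)
        self.live_agents[pod.name] = pod
        try:
            # 2. Run the job on that agent (simulated by building a string).
            return f"{job} ran on {pod.name} ({self.templates[label]})"
        finally:
            # 3. Release the agent: its pod is deleted once the job is done.
            del self.live_agents[pod.name]


ctl = Controller(templates={"maven": "example/maven-agent", "go": "example/go-agent"})
print(ctl.handle_build("build-api", "maven"))  # build-api ran on maven-agent-0 (example/maven-agent)
print(len(ctl.live_agents))                    # 0
```

The `try`/`finally` mirrors the point of the text: the agent is released even if the job fails, so no idle agent is left behind.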

Dynamically Provisioning Jenkins Agents

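Dynamically provisioning Jenkins Agents has the following advantages:

Reasonable resource allocation: KubeSphere dynamically assigns newly created Agents to idle nodes, so jobs do not queue up just because a single node is heavily utilized.

High scalability: when jobs wait in the queue for a long time because the KubeSphere cluster is short of resources, you can add nodes to the cluster.

High availability: when the Jenkins Controller fails, KubeSphere automatically creates a new Jenkins Controller container and mounts the persistent volume to it, so no data is lost and the cluster stays highly available.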
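To make "assign Agents to idle nodes" concrete, here is a hypothetical least-loaded placement sketch in Python. It is not the Kubernetes scheduler's algorithm, which also weighs resource requests, affinity, taints, and more; the node names and loads are made up.

```python
"""Hypothetical sketch: put each new agent pod on the least-loaded node.

Node names and loads are invented; this only illustrates why spreading
on-demand agents keeps jobs from queuing behind one busy node.
"""
import heapq


def place_agents(node_loads: dict[str, int], n_agents: int) -> dict[str, int]:
    """Return agents per node after placing n_agents new ones, one at a time."""
    heap = [(load, name) for name, load in node_loads.items()]
    heapq.heapify(heap)
    for _ in range(n_agents):
        load, name = heapq.heappop(heap)       # least-loaded node (ties: by name)
        heapq.heappush(heap, (load + 1, name))
    return {name: load for load, name in sorted(heap, key=lambda x: x[1])}


print(place_agents({"node1": 3, "node2": 0, "node3": 1}, 4))
# {'node1': 3, 'node2': 3, 'node3': 2}
```

With a single static Agent on node1, all four new jobs would have waited behind its three running ones; here the busy node receives none of them.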

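In short, in the traditional Jenkins Controller-Agent architecture the long-running Controller is a single point of failure: if it goes down, pipelines cannot start. Kubernetes Pods, by contrast, are restarted automatically after a crash, and redundant Pods can provide high availability. Because Jenkins is a stateful application, it persists its state to a mounted persistent volume, which keeps the state safe and consistent after recovering from a failure.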

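Agents, in turn, suffer from uneven resource allocation: some queue while others sit idle, so overall resource utilization stays low. Having some Agents swamped while others do nothing is clearly a poor use of the hardware. Kubernetes, built on technologies such as namespaces and cgroups, makes resource isolation and allocation straightforward. By dynamically creating and destroying Agent Pods it provisions Agents on demand, and these stateless Agents make much fuller use of the hardware.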

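Because container runtimes such as containerd and Docker start applications as containers, and each container can carry its own runtime environment, Kubernetes can accommodate many different configurations, environments, and programming languages, and is only loosely coupled to the language in particular: once running in a Pod, a compiled Go application and a Spring Boot service look much the same from the outside. With Jenkins on Kubernetes, you can deploy multi-language, multi-environment services without worrying much about language or environment differences.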

Enabling DevOps

Official docs: https://kubesphere.com.cn/docs/v3.3/pluggable-components/devops/

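As with enabling the Istio service mesh earlier, all it takes is a configuration change, which makes it very friendly to developers who are not professional ops engineers.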

See the previous post, [Enabling Istio]: https://lizhiyong.blog.csdn.net/article/details/126380224

Enabling DevOps Before Installing KubeSphere

Installing KubeSphere on Linux

Edit the default configuration file generated by kk:

vi config-sample.yaml

Change the following entry:

devops:
  enabled: true # Change "false" to "true".

With this setting, kk automatically creates and starts the DevOps-related pods while installing the K8S cluster and KubeSphere on Linux:

./kk create cluster -f config-sample.yaml

For an introduction to KubeKey and how to use it, including links to its official site and GitHub repository, see the previous post.

Installing KubeSphere on an Existing K8S Cluster

Edit the same cluster-configuration.yaml file as in the previous post:

vi cluster-configuration.yaml

Again, change this entry:

devops:
  enabled: true # Change "false" to "true".

Then install with the following commands, which apply this YAML file:

kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.3.0/kubesphere-installer.yaml

kubectl apply -f cluster-configuration.yaml

ks-installer then starts the various pods according to this configuration file.

Enabling DevOps After Installing KubeSphere

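The most common approach is to install a basic K8S cluster and KubeSphere first, and then modify the configuration in the web UI. After my All-in-one deployment with KubeKey, DevOps was not enabled by default, and I was curious which pitfalls enabling it would bring this time.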

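By now, finding and editing the ClusterConfiguration YAML is routine (in the console: Cluster Management → CRDs → ClusterConfiguration → ks-installer → Edit YAML).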

The current ks-installer YAML:

apiVersion: installer.kubesphere.io/v1alpha1
kind: ClusterConfiguration
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: >
      {"apiVersion":"installer.kubesphere.io/v1alpha1","kind":"ClusterConfiguration","metadata":{"annotations":{},"labels":{"version":"v3.3.0"},"name":"ks-installer","namespace":"kubesphere-system"},"spec":{"alerting":{"enabled":false},"auditing":{"enabled":false},"authentication":{"jwtSecret":""},"common":{"core":{"console":{"enableMultiLogin":true,"port":30880,"type":"NodePort"}},"es":{"basicAuth":{"enabled":false,"password":"","username":""},"elkPrefix":"logstash","externalElasticsearchHost":"","externalElasticsearchPort":"","logMaxAge":7},"gpu":{"kinds":[{"default":true,"resourceName":"nvidia.com/gpu","resourceType":"GPU"}]},"minio":{"volumeSize":"20Gi"},"monitoring":{"GPUMonitoring":{"enabled":false},"endpoint":"http://prometheus-operated.kubesphere-monitoring-system.svc:9090"},"openldap":{"enabled":false,"volumeSize":"2Gi"},"redis":{"enabled":false,"volumeSize":"2Gi"}},"devops":{"enabled":false,"jenkinsJavaOpts_MaxRAM":"2g","jenkinsJavaOpts_Xms":"1200m","jenkinsJavaOpts_Xmx":"1600m","jenkinsMemoryLim":"2Gi","jenkinsMemoryReq":"1500Mi","jenkinsVolumeSize":"8Gi"},"edgeruntime":{"enabled":false,"kubeedge":{"cloudCore":{"cloudHub":{"advertiseAddress":[""]},"service":{"cloudhubHttpsNodePort":"30002","cloudhubNodePort":"30000","cloudhubQuicNodePort":"30001","cloudstreamNodePort":"30003","tunnelNodePort":"30004"}},"enabled":false,"iptables-manager":{"enabled":true,"mode":"external"}}},"etcd":{"endpointIps":"192.168.88.20","monitoring":false,"port":2379,"tlsEnable":true},"events":{"enabled":false},"logging":{"enabled":false,"logsidecar":{"enabled":true,"replicas":2}},"metrics_server":{"enabled":false},"monitoring":{"gpu":{"nvidia_dcgm_exporter":{"enabled":false}},"node_exporter":{"port":9100},"storageClass":""},"multicluster":{"clusterRole":"none"},"network":{"ippool":{"type":"none"},"networkpolicy":{"enabled":false},"topology":{"type":"none"}},"openpitrix":{"store":{"enabled":false}},"persistence":{"storageClass":""},"servicemesh":{"enabled":false,"istio":{"components":{"cni":{"enabled":false},"ingressGateways":[{"enabled":false,"name":"istio-ingressgateway"}]}}},"terminal":{"timeout":600},"zone":"cn"}}
  labels:
    version: v3.3.0
  name: ks-installer
  namespace: kubesphere-system
spec:
  alerting:
    enabled: false
  auditing:
    enabled: false
  authentication:
    jwtSecret: ''
  common:
    core:
      console:
        enableMultiLogin: true
        port: 30880
        type: NodePort
    es:
      basicAuth:
        enabled: false
        password: ''
        username: ''
      elkPrefix: logstash
      externalElasticsearchHost: ''
      externalElasticsearchPort: ''
      logMaxAge: 7
    gpu:
      kinds:
        - default: true
          resourceName: nvidia.com/gpu
          resourceType: GPU
    minio:
      volumeSize: 20Gi
    monitoring:
      GPUMonitoring:
        enabled: false
      endpoint: 'http://prometheus-operated.kubesphere-monitoring-system.svc:9090'
    openldap:
      enabled: false
      volumeSize: 2Gi
    redis:
      enabled: false
      volumeSize: 2Gi
  devops:
    enabled: false
    jenkinsJavaOpts_MaxRAM: 2g
    jenkinsJavaOpts_Xms: 1200m
    jenkinsJavaOpts_Xmx: 1600m
    jenkinsMemoryLim: 2Gi
    jenkinsMemoryReq: 1500Mi
    jenkinsVolumeSize: 8Gi
  edgeruntime:
    enabled: false
    kubeedge:
      cloudCore:
        cloudHub:
          advertiseAddress:
            - ''
        service:
          cloudhubHttpsNodePort: '30002'
          cloudhubNodePort: '30000'
          cloudhubQuicNodePort: '30001'
          cloudstreamNodePort: '30003'
          tunnelNodePort: '30004'
      enabled: false
      iptables-manager:
        enabled: true
        mode: external
  etcd:
    endpointIps: 192.168.88.20
    monitoring: false
    port: 2379
    tlsEnable: true
  events:
    enabled: false
  logging:
    enabled: false
    logsidecar:
      enabled: true
      replicas: 2
  metrics_server:
    enabled: false
  monitoring:
    gpu:
      nvidia_dcgm_exporter:
        enabled: false
    node_exporter:
      port: 9100
    storageClass: ''
  multicluster:
    clusterRole: none
  network:
    ippool:
      type: none
    networkpolicy:
      enabled: false
    topology:
      type: none
  openpitrix:
    store:
      enabled: false
  persistence:
    storageClass: ''
  servicemesh:
    enabled: true
    istio:
      components:
        cni:
          enabled: false
        ingressGateways:
          - enabled: false
            name: istio-ingressgateway
  terminal:
    timeout: 600
  zone: cn

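The default Jenkins settings cap memory at a 2Gi limit with a 1500Mi request, plus an 8Gi volume. These values are reasonable, so I left them unchanged.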
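How these numbers relate can be checked with a little arithmetic. The sketch below copies the values from the devops section above and converts everything to MiB; it assumes the JVM's `k`/`m`/`g` suffixes are binary units (as they are for `-Xms`/`-Xmx`), and the helper names are made up for illustration.

```python
"""Sanity-check the default Jenkins memory settings (all converted to MiB).

JVM flags use k/m/g suffixes (powers of 1024); the Kubernetes quantities
here use Ki/Mi/Gi. Values are copied from the ks-installer devops section.
"""
JVM_UNITS = {"k": 1 / 1024, "m": 1, "g": 1024}      # suffix -> MiB factor
K8S_UNITS = {"Ki": 1 / 1024, "Mi": 1, "Gi": 1024}   # suffix -> MiB factor


def jvm_mib(value: str) -> float:
    return float(value[:-1]) * JVM_UNITS[value[-1].lower()]


def k8s_mib(value: str) -> float:
    return float(value[:-2]) * K8S_UNITS[value[-2:]]


devops = {
    "jenkinsJavaOpts_Xms": "1200m",
    "jenkinsJavaOpts_Xmx": "1600m",
    "jenkinsJavaOpts_MaxRAM": "2g",
    "jenkinsMemoryReq": "1500Mi",
    "jenkinsMemoryLim": "2Gi",
}

xms = jvm_mib(devops["jenkinsJavaOpts_Xms"])
xmx = jvm_mib(devops["jenkinsJavaOpts_Xmx"])
max_ram = jvm_mib(devops["jenkinsJavaOpts_MaxRAM"])
req = k8s_mib(devops["jenkinsMemoryReq"])
lim = k8s_mib(devops["jenkinsMemoryLim"])

assert xms <= xmx <= lim, "the heap must fit inside the container limit"
assert req <= lim and max_ram <= lim
# Whatever the heap does not use is left for metaspace, thread stacks, GC, etc.
print(f"heap {xmx:.0f} MiB of {lim:.0f} MiB limit, non-heap headroom {lim - xmx:.0f} MiB")
# heap 1600 MiB of 2048 MiB limit, non-heap headroom 448 MiB
```

If jenkinsJavaOpts_Xmx were raised without also raising jenkinsMemoryLim, the JVM's total footprint could exceed the container limit and the pod would risk being OOM-killed.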

Change:

devops:
  enabled: true # Change "false" to "true".
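As a scriptable alternative to editing the YAML in the console, the same change can be expressed as a JSON merge patch on the ks-installer ClusterConfiguration. The Python sketch below only builds and prints the `kubectl patch` command (the function name is hypothetical); actually running it assumes `kubectl` is configured with cluster-admin access.

```python
import json
import shlex


def devops_patch_command(enabled: bool = True) -> list[str]:
    """Build a kubectl command that sets spec.devops.enabled on ks-installer."""
    # JSON merge patch: only the keys present here are changed.
    patch = {"spec": {"devops": {"enabled": enabled}}}
    return [
        "kubectl", "patch", "clusterconfiguration", "ks-installer",
        "-n", "kubesphere-system",
        "--type", "merge",  # custom resources accept merge patches
        "-p", json.dumps(patch, separators=(",", ":")),
    ]


cmd = devops_patch_command()
print(shlex.join(cmd))
# kubectl patch clusterconfiguration ks-installer -n kubesphere-system --type merge -p '{"spec":{"devops":{"enabled":true}}}'
# To apply it for real (needs kubectl and cluster access):
# import subprocess; subprocess.run(cmd, check=True)
```

Building the argument list instead of a shell string avoids quoting mistakes in the JSON payload.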

After clicking OK to save, check the installation progress as root:

kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l 'app in (ks-install, ks-installer)' -o jsonpath='{.items[0].metadata.name}') -f

The output shows:

Start installing monitoring
Start installing multicluster
Start installing openpitrix
Start installing network
Start installing devops
Start installing servicemesh
**************************************************
Waiting for all tasks to be completed ...
task network status is successful (1/6)
task openpitrix status is successful (2/6)
task multicluster status is successful (3/6)
task servicemesh status is successful (4/6)
task monitoring status is successful (5/6)
task devops status is successful (6/6)

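Evidently ks-installer re-ran the installation tasks for every component according to the YAML... After all, there is no software problem that a restart or a reinstall can't fix...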
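To check this progress automatically instead of by eye, the task lines can be parsed. A minimal Python sketch, assuming the log format shown above (`task <name> status is <status> (<n>/<total>)`); the function name is hypothetical, and other ks-installer versions may format these lines differently.

```python
import re

# Tolerates one or more spaces before the "(n/total)" counter.
TASK_RE = re.compile(r"task (\S+) status is (\w+)\s+\((\d+)/(\d+)\)")


def summarize_tasks(log: str) -> tuple[dict[str, str], bool]:
    """Return {task: status} and whether every expected task succeeded."""
    statuses: dict[str, str] = {}
    total = None
    for line in log.splitlines():
        m = TASK_RE.search(line)
        if m:
            name, status, _, total_str = m.groups()
            statuses[name] = status
            total = int(total_str)
    done = (
        total is not None
        and len(statuses) == total
        and all(s == "successful" for s in statuses.values())
    )
    return statuses, done


sample = """\
task network status is successful (1/6)
task openpitrix status is successful (2/6)
task multicluster status is successful (3/6)
task servicemesh status is successful (4/6)
task monitoring status is successful (5/6)
task devops status is successful (6/6)
"""
statuses, done = summarize_tasks(sample)
print(statuses["devops"], done)  # successful True
```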

Verifying the Installed Components

Run:

root@zhiyong-ksp1:/home/zhiyong# kubectl get pod --all-namespaces

NAMESPACE                      NAME                                                              READY   STATUS      RESTARTS      AGE
argocd                         devops-argocd-application-controller-0                            1/1     Running     0             9m25s
argocd                         devops-argocd-applicationset-controller-5864597bfc-pf8ht          1/1     Running     0             9m25s
argocd                         devops-argocd-dex-server-f885fb4b4-fkpls                          1/1     Running     0             9m25s
argocd                         devops-argocd-notifications-controller-54b744556f-f4g24           1/1     Running     0             9m25s
argocd                         devops-argocd-redis-556fdd5876-xftmq                              1/1     Running     0             9m25s
argocd                         devops-argocd-repo-server-5dbf9b87db-9tw2c                        1/1     Running     0             9m25s
argocd                         devops-argocd-server-6f9898cc75-s7jkm                             1/1     Running     0             9m25s
istio-system                   istiod-1-11-2-54dd699c87-99krn                                    1/1     Running     0             20h
istio-system                   jaeger-collector-67cfc55477-7757f                                 1/1     Running     5 (19h ago)   19h
istio-system                   jaeger-operator-fccc48b86-vtcr8                                   1/1     Running     0             20h
istio-system                   jaeger-query-8497bdbfd7-csbts                                     2/2     Running     0             19h
istio-system                   kiali-75c777bdf6-xhbq7                                            1/1     Running     0             19h
istio-system                   kiali-operator-c459985f7-sttfs                                    1/1     Running     0             20h
kube-system                    calico-kube-controllers-f9f9bbcc9-2v7lm                           1/1     Running     2 (19h ago)   9d
kube-system                    calico-node-4mgc7                                                 1/1     Running     2 (19h ago)   9d
kube-system                    coredns-f657fccfd-2gw7h                                           1/1     Running     2 (19h ago)   9d
kube-system                    coredns-f657fccfd-pflwf                                           1/1     Running     2 (19h ago)   9d
kube-system                    kube-apiserver-zhiyong-ksp1                                       1/1     Running     2 (19h ago)   9d
kube-system                    kube-controller-manager-zhiyong-ksp1                              1/1     Running     2 (19h ago)   9d
kube-system                    kube-proxy-cn68l                                                  1/1     Running     2 (19h ago)   9d
kube-system                    kube-scheduler-zhiyong-ksp1                                       1/1     Running     2 (19h ago)   9d
kube-system                    nodelocaldns-96gtw                                                1/1     Running     2 (19h ago)   9d
kube-system                    openebs-localpv-provisioner-68db4d895d-p9527                      1/1     Running     1 (19h ago)   9d
kube-system                    snapshot-controller-0                                             1/1     Running     2 (19h ago)   9d
kubesphere-controls-system     default-http-backend-587748d6b4-ccg59                             1/1     Running     2 (19h ago)   9d
kubesphere-controls-system     kubectl-admin-5d588c455b-82cnk                                    1/1     Running     2 (19h ago)   9d
kubesphere-devops-system       devops-apiserver-6b468c95cb-9s7lz                                 1/1     Running     0             9m18s
kubesphere-devops-system       devops-controller-667f8449d7-gjgj8                                1/1     Running     0             9m18s
kubesphere-devops-system       devops-jenkins-bf85c664c-c6qnq                                    1/1     Running     0             9m18s
kubesphere-devops-system       s2ioperator-0                                                     1/1     Running     0             9m18s
kubesphere-logging-system      elasticsearch-logging-curator-elasticsearch-curator-2767784rhhk   0/1     Completed   0             20h
kubesphere-logging-system      elasticsearch-logging-data-0                                      1/1     Running     0             20h
kubesphere-logging-system      elasticsearch-logging-discovery-0                                 1/1     Running     0             20h
kubesphere-monitoring-system   alertmanager-main-0                                               2/2     Running     4 (19h ago)   9d
kubesphere-monitoring-system   kube-state-metrics-6d6786b44-bbb4f                                3/3     Running     6 (19h ago)   9d
kubesphere-monitoring-system   node-exporter-8sz74                                               2/2     Running     4 (19h ago)   9d
kubesphere-monitoring-system   notification-manager-deployment-6f8c66ff88-pt4l8                  2/2     Running     4 (19h ago)   9d
kubesphere-monitoring-system   notification-manager-operator-6455b45546-nkmx8                    2/2     Running     4 (19h ago)   9d
kubesphere-monitoring-system   prometheus-k8s-0                                                  2/2     Running     0             8m4s
kubesphere-monitoring-system   prometheus-operator-66d997dccf-c968c                              2/2     Running     4 (19h ago)   9d
kubesphere-system              ks-apiserver-6b9bcb86f4-hsdzs                                     1/1     Running     2 (19h ago)   9d
kubesphere-system              ks-console-599c49d8f6-ngb6b                                       1/1     Running     2 (19h ago)   9d
kubesphere-system              ks-controller-manager-66747fcddc-r7cpt                            1/1     Running     2 (19h ago)   9d
kubesphere-system              ks-installer-5fd8bd46b8-dzhbb                                     1/1     Running     2 (19h ago)   9d
kubesphere-system              minio-746f646bfb-hcf5c                                            1/1     Running     0             14m
kubesphere-system              openldap-0                                                        1/1     Running     1 (13m ago)   14m
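On larger clusters, scanning this list by eye gets tedious. Below is a hypothetical Python helper that flags pods which are not Completed and are either not Running or not fully ready, assuming the default column layout of `kubectl get pod --all-namespaces` (namespace, name, ready, status first). The last sample row is invented to show a failing pod.

```python
def unhealthy_pods(output: str) -> list[str]:
    """Return namespace/name for pods that are not Completed and either not
    Running or not fully ready.

    Expects plain `kubectl get pod --all-namespaces` output, header first.
    """
    bad = []
    for line in output.strip().splitlines()[1:]:   # skip the header row
        namespace, name, ready, status = line.split()[:4]
        up, total = (int(n) for n in ready.split("/"))
        if status == "Completed":
            continue
        if status != "Running" or up != total:
            bad.append(f"{namespace}/{name}")
    return bad


# Two rows copied from the output above plus one hypothetical failing pod.
sample = """\
NAMESPACE                   NAME                                                              READY   STATUS             RESTARTS   AGE
kubesphere-devops-system    devops-jenkins-bf85c664c-c6qnq                                    1/1     Running            0          9m18s
kubesphere-logging-system   elasticsearch-logging-curator-elasticsearch-curator-2767784rhhk   0/1     Completed          0          20h
demo                        hypothetical-app-0                                                0/1     CrashLoopBackOff   3          5m
"""
print(unhealthy_pods(sample))  # ['demo/hypothetical-app-0']
```

Note that devops-jenkins passes this check: a pod can be Running and 1/1 ready now while its probe failures only show up in the events of kubectl describe.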

Surprisingly, everything started successfully on the first try this time...

Clicking into the innocent-looking DevOps entry:

All system components are enabled, and on the surface nothing seems wrong... However:

root@zhiyong-ksp1:/home/zhiyong# kubectl describe pod devops-jenkins-bf85c664c-c6qnq -n kubesphere-devops-system

Name:         devops-jenkins-bf85c664c-c6qnq
Namespace:    kubesphere-devops-system
Priority:     0
Node:         zhiyong-ksp1/192.168.88.20
Start Time:   Wed, 17 Aug 2022 21:08:28 +0800
Labels:       app=devops-jenkins
              chart=jenkins-0.19.3
              component=devops-jenkins-master
              heritage=Helm
              pod-template-hash=bf85c664c
              release=devops
Annotations:  checksum/config: 094a3bf5a3dba2968646e2bacc5ab516c0562f2a0843997179c79f2d9be21438
              cni.projectcalico.org/containerID: 61a3daa005a3f678176776fffdcf7fc9419d429051841c805738c61422e436ed
              cni.projectcalico.org/podIP: 10.233.107.84/32
              cni.projectcalico.org/podIPs: 10.233.107.84/32
Status:       Running
IP:           10.233.107.84
IPs:
  IP:           10.233.107.84
Controlled By:  ReplicaSet/devops-jenkins-bf85c664c
Init Containers:
  copy-default-config:
    Container ID:  containerd://205637c600ec1a4deab965f0a51c976e2215db945f0835e955c3409c04a68384
    Image:         registry.cn-beijing.aliyuncs.com/kubesphereio/ks-jenkins:v3.3.0-2.319.1
    Image ID:      registry.cn-beijing.aliyuncs.com/kubesphereio/ks-jenkins@sha256:c34e3f56f317197235f7d6e6582d531b09caa5c5f81bad21f4f8b4e432e44b7b
    Port:          <none>
    Host Port:     <none>
    Command:
      sh
      /var/jenkins_config/apply_config.sh
    State:          Terminated
      Reason:       Completed
      Exit Code:    0
      Started:      Wed, 17 Aug 2022 21:14:41 +0800
      Finished:     Wed, 17 Aug 2022 21:14:41 +0800
    Ready:          True
    Restart Count:  0
    Limits:
      cpu:     1
      memory:  2Gi
    Requests:
      cpu:     100m
      memory:  1500Mi
    Environment:
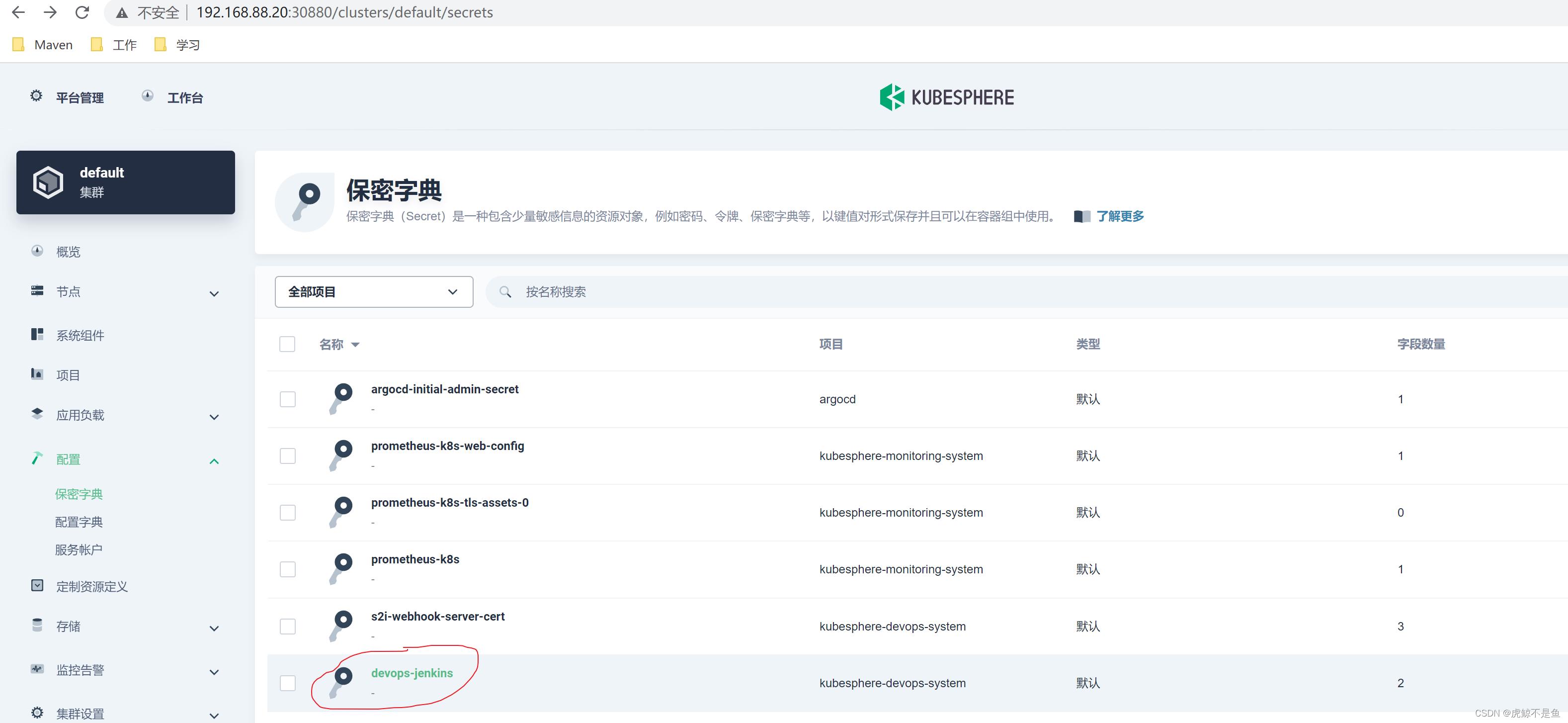

      ADMIN_PASSWORD:  <set to the key 'jenkins-admin-password' in secret 'devops-jenkins'>  Optional: false
      ADMIN_USER:      <set to the key 'jenkins-admin-user' in secret 'devops-jenkins'>      Optional: false
    Mounts:
      /usr/share/jenkins/ref/secrets/ from secrets-dir (rw)
      /var/jenkins_config from jenkins-config (rw)
      /var/jenkins_home from jenkins-home (rw)
      /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-md26x (ro)
Containers:
  devops-jenkins:
    Container ID:  containerd://5b6d9d912cbe6b6c290619c753cb023ed2f135e0f079b174c4c2d45115d996c0
    Image:         registry.cn-beijing.aliyuncs.com/kubesphereio/ks-jenkins:v3.3.0-2.319.1
    Image ID:      registry.cn-beijing.aliyuncs.com/kubesphereio/ks-jenkins@sha256:c34e3f56f317197235f7d6e6582d531b09caa5c5f81bad21f4f8b4e432e44b7b
    Ports:         8080/TCP, 50000/TCP
    Host Ports:    0/TCP, 0/TCP
    Args:
      --argumentsRealm.passwd.$(ADMIN_USER)=$(ADMIN_PASSWORD)
      --argumentsRealm.roles.$(ADMIN_USER)=admin
    State:          Running
      Started:      Wed, 17 Aug 2022 21:15:02 +0800
    Ready:          True
    Restart Count:  0
    Limits:
      cpu:     1
      memory:  2Gi
    Requests:
      cpu:      100m
      memory:   1500Mi
    Liveness:   http-get http://:http/login delay=90s timeout=5s period=10s #success=1 #failure=12
    Readiness:  http-get http://:http/login delay=60s timeout=1s period=10s #success=1 #failure=3
    Environment:
      JAVA_TOOL_OPTIONS:  -Xms1200m -Xmx1600m -XX:MaxRAM=2g -Dhudson.slaves.NodeProvisioner.initialDelay=20 -Dhudson.slaves.NodeProvisioner.MARGIN=50 -Dhudson.slaves.NodeProvisioner.MARGIN0=0.85 -Dhudson.model.LoadStatistics.clock=5000 -Dhudson.model.LoadStatistics.decay=0.2 -Dhudson.slaves.NodeProvisioner.recurrencePeriod=5000 -Dhudson.security.csrf.DefaultCrumbIssuer.EXCLUDE_SESSION_ID=true -Dio.jenkins.plugins.casc.ConfigurationAsCode.initialDelay=10000 -Djenkins.install.runSetupWizard=false -XX:+UseG1GC -XX:+UseStringDeduplication -XX:+ParallelRefProcEnabled -XX:+DisableExplicitGC -XX:+UnlockDiagnosticVMOptions -XX:+UnlockExperimentalVMOptions
      JENKINS_OPTS:
      ADMIN_PASSWORD:  <set to the key 'jenkins-admin-password' in secret 'devops-jenkins'>  Optional: false
      ADMIN_USER:  <set to the key 'jenkins-admin-user' in secret 'devops-jenkins'>  Optional: false
      CASC_JENKINS_CONFIG:  /var/jenkins_home/casc_configs/
      CASC_MERGE_STRATEGY:  override
      com.sun.jndi.ldap.connect.timeout:  15000
      com.sun.jndi.ldap.read.timeout:  60000
      kubernetes.connection.timeout:  60000
      kubernetes.request.timeout:  60000
      EMAIL_SMTP_HOST:  mail.example.com
      EMAIL_SMTP_PORT:  465
      EMAIL_USE_SSL:  false
      EMAIL_FROM_NAME:  KubeSphere
      EMAIL_FROM_ADDR:  admin@example.com
      EMAIL_FROM_PASS:  P@ssw0rd
    Mounts:
      /usr/share/jenkins/ref/secrets/ from secrets-dir (rw)
      /var/jenkins_config from jenkins-config (ro)
      /var/jenkins_home from jenkins-home (rw)
      /var/jenkins_home/casc_configs from casc-config (ro)
      /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-md26x (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             True
  ContainersReady   True
  PodScheduled      True
Volumes:
  casc-config:
    Type:      ConfigMap (a volume populated by a ConfigMap)
    Name:      jenkins-casc-config
    Optional:  false
  jenkins-config:
    Type:      ConfigMap (a volume populated by a ConfigMap)
    Name:      devops-jenkins
    Optional:  false
  secrets-dir:
    Type:       EmptyDir (a temporary directory that shares a pod's lifetime)
    Medium:
    SizeLimit:  <unset>
  jenkins-home:
    Type:       PersistentVolumeClaim (a reference to a PersistentVolumeClaim in the same namespace)
    ClaimName:  devops-jenkins
    ReadOnly:   false
  kube-api-access-md26x:
    Type:                    Projected (a volume that contains injected data from multiple sources)
    TokenExpirationSeconds:  3607
    ConfigMapName:           kube-root-ca.crt
    ConfigMapOptional:       <nil>
    DownwardAPI:             true
QoS Class:                   Burstable
Node-Selectors:              <none>
Tolerations:                 node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                             node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
Events:
  Type     Reason     Age                From               Message
  ----     ------     ----               ----               -------
  Normal   Scheduled  32m                default-scheduler  Successfully assigned kubesphere-devops-system/devops-jenkins-bf85c664c-c6qnq to zhiyong-ksp1
  Normal   Pulling    32m                kubelet            Pulling image "registry.cn-beijing.aliyuncs.com/kubesphereio/ks-jenkins:v3.3.0-2.319.1"
  Normal   Pulled     26m                kubelet            Successfully pulled image "registry.cn-beijing.aliyuncs.com/kubesphereio/ks-jenkins:v3.3.0-2.319.1" in 6m11.516956987s
  Normal   Created    26m                kubelet            Created container copy-default-config
  Normal   Started    26m                kubelet            Started container copy-default-config
  Normal   Pulling    26m                kubelet            Pulling image "registry.cn-beijing.aliyuncs.com/kubesphereio/ks-jenkins:v3.3.0-2.319.1"
  Normal   Pulled     26m                kubelet            Successfully pulled image "registry.cn-beijing.aliyuncs.com/kubesphereio/ks-jenkins:v3.3.0-2.319.1" in 19.346314156s
  Normal   Created    26m                kubelet            Created container devops-jenkins
  Normal   Started    26m                kubelet            Started container devops-jenkins
  Warning  Unhealthy  24m (x5 over 25m)  kubelet            Readiness probe failed: HTTP probe failed with statuscode: 503
  Warning  Unhealthy  24m (x2 over 24m)  kubelet            Liveness probe failed: HTTP probe failed with statuscode: 503
  Warning  Unhealthy  24m (x2 over 24m)  kubelet            Readiness probe failed: Get "http://10.233.107.84:8080/login": context deadline exceeded (Client.Timeout exceeded while awaiting headers)

root@zhiyong-ksp1:/home/zhiyong#

Note the three warnings at the end.
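The Liveness and Readiness lines in the describe output bound how long failures are tolerated. A rough Python calculation, assuming every probe fails from its very first attempt and ignoring the per-attempt timeout: kubelet marks the pod NotReady after the readiness failure threshold is reached, and restarts the container after the liveness threshold is reached. The `Probe` class and function name are illustrative.

```python
from dataclasses import dataclass


@dataclass
class Probe:
    initial_delay: int      # "delay=" in kubectl describe (seconds)
    timeout: int            # "timeout=" (seconds); ignored in this estimate
    period: int             # "period=" (seconds)
    failure_threshold: int  # "#failure="


def seconds_until_action(p: Probe) -> int:
    """Earliest time after container start at which kubelet acts.

    The first probe runs at initial_delay, then one probe every period;
    the failure_threshold-th consecutive failure triggers the action.
    """
    return p.initial_delay + p.period * (p.failure_threshold - 1)


liveness = Probe(initial_delay=90, timeout=5, period=10, failure_threshold=12)
readiness = Probe(initial_delay=60, timeout=1, period=10, failure_threshold=3)

print(seconds_until_action(liveness))   # 200
print(seconds_until_action(readiness))  # 80
```

Only two liveness failures were recorded, well short of 12 consecutive ones, which is consistent with Restart Count: 0. One plausible reading, not proven by this output, is that Jenkins was still starting up: it commonly answers 503 while loading, and the readiness probe's 1 s timeout is short for a JVM under startup load.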

Troubleshooting the Warnings

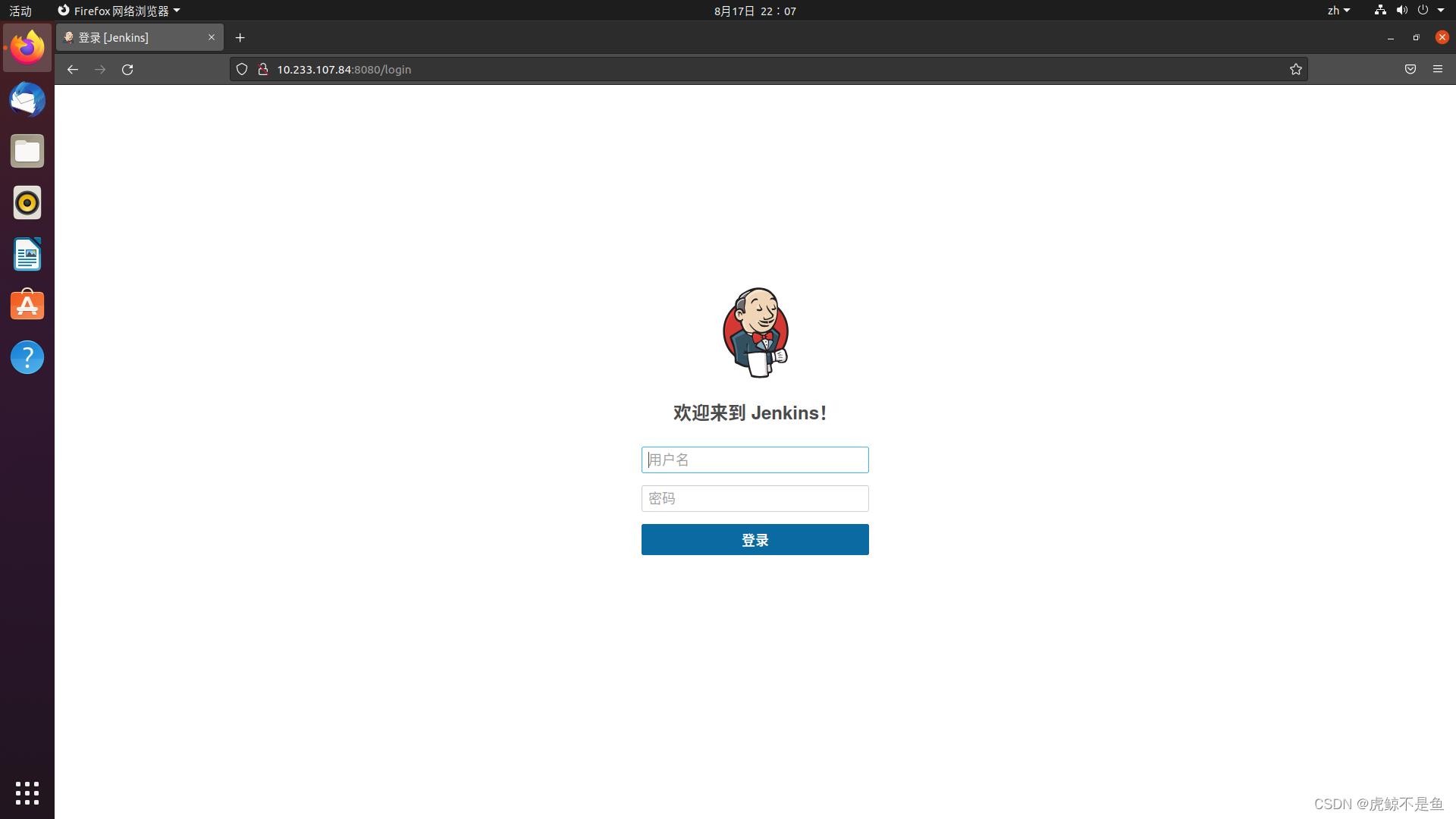

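No matter how normal things look on the surface, warnings must not be ignored. According to the warning messages, the probes timed out when requesting http://10.233.107.84:8080/login. Accessing that URL from an external browser: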

It does indeed time out.

But accessing the same URL on the Ubuntu host itself:

It succeeds.

Using CMD on Windows:

Microsoft Windows [Version 10.0.19044.1889]
(c) Microsoft Corporation. All rights reserved.

C:\Users\zhiyong>ping 10.233.107.84

Pinging 10.233.107.84 with 32 bytes of data:
Request timed out.
Request timed out.
Request timed out.
Request timed out.

Ping statistics for 10.233.107.84:
    Packets: Sent = 4, Received = 0, Lost = 4 (100% loss),

C:\Users\zhiyong>

So this IP cannot be pinged from the external network.

But in the SSH terminal on the node:

root@zhiyong-ksp1:/home/zhiyong# ping 10.233.107.84
PING 10.233.107.84 (10.233.107.84) 56(84) bytes of data.
64 bytes from 10.233.107.84: icmp_seq=1 ttl=64 time=0.133 ms
64 bytes from 10.233.107.84: icmp_seq=2 ttl=64 time=0.074 ms
64 bytes from 10.233.107.84: icmp_seq=3 ttl=64 time=0.070 ms
64 bytes from 10.233.107.84: icmp_seq=4 ttl=64 time=0.087 ms
64 bytes from 10.233.107.84: icmp_seq=5 ttl=64 time=0.083 ms
64 bytes from 10.233.107.84: icmp_seq=6 ttl=64 time=0.050 ms
64 bytes from 10.233.107.84: icmp_seq=7 ttl=64 time=0.091 ms
64 bytes from 10.233.107.84: icmp_seq=8 ttl=64 time=0.057 ms
64 bytes from 10.233.107.84: icmp_seq=9 ttl=64 time=0.066 ms
64 bytes from 10.233.107.84: icmp_seq=10 ttl=64 time=0.072 ms
64 bytes from 10.233.107.84: icmp_seq=11 ttl=64 time=0.050 ms
64 bytes from 10.233.107.84: icmp_seq=12 ttl=64 time=0.051 ms
^C
--- 10.233.107.84 ping statistics ---
12 packets transmitted, 12 received, 0% packet loss, time 11244ms
rtt min/avg/max/mdev = 0.050/0.073/0.133/0.022 ms

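the IP responds to ping. This is expected: 10.233.107.84 is a Calico pod IP (see the cni.projectcalico.org/podIP annotation in the describe output), which is reachable from cluster nodes but, by default, not from machines outside the cluster. Since kubelet runs the probes from the node itself, this network boundary does not by itself explain the probe failures.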
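Whether an address belongs to the pod network can be checked with Python's standard `ipaddress` module. This sketch assumes KubeKey's default `kubePodsCIDR` (10.233.64.0/18) and `kubeServiceCIDR` (10.233.0.0/18); check config-sample.yaml for the values actually used in your cluster.

```python
import ipaddress

# Assumption: KubeKey's default CIDRs from config-sample.yaml; yours may differ.
POD_CIDR = ipaddress.ip_network("10.233.64.0/18")      # 10.233.64.0 - 10.233.127.255
SERVICE_CIDR = ipaddress.ip_network("10.233.0.0/18")   # 10.233.0.0 - 10.233.63.255


def classify(ip: str) -> str:
    addr = ipaddress.ip_address(ip)
    if addr in POD_CIDR:
        return "pod network (reachable from cluster nodes, not routed externally by default)"
    if addr in SERVICE_CIDR:
        return "service network (virtual, cluster-internal)"
    return "outside the cluster networks"


for ip in ["10.233.107.84", "192.168.88.20"]:
    print(ip, "->", classify(ip))
```

The Jenkins pod IP falls inside the pod range, while the node address 192.168.88.20 does not, which matches the ping results from the node and from the Windows machine.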

The describe output shows that the admin username and password come from a secret named devops-jenkins.
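Secret values are only base64-encoded, not encrypted, so "decrypting" them is really just decoding. A Python sketch of that step; the encoded values below are hypothetical placeholders, not the real contents of devops-jenkins, while the key names `jenkins-admin-user` and `jenkins-admin-password` come from the describe output.

```python
import base64

# Hypothetical placeholder values standing in for the Secret's `data` field,
# which Kubernetes stores base64-encoded.
secret_data = {
    "jenkins-admin-user": base64.b64encode(b"admin").decode(),
    "jenkins-admin-password": base64.b64encode(b"example-password").decode(),
}


def decode_secret(data: dict[str, str]) -> dict[str, str]:
    """Decode every base64 value of a Secret's data map as UTF-8 text."""
    return {key: base64.b64decode(value).decode("utf-8") for key, value in data.items()}


print(decode_secret(secret_data)["jenkins-admin-user"])  # admin
```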

Logging in from Ubuntu with the decoded username and password fails.

Following the official docs: https://kubesphere.com.cn/docs/v3.3/faq/devops/install-jenkins-plugins/

Run:

root@zhiyong-ksp1:/home/zhiyong# export NODE_PORT=$(kubectl get --namespace kubesphere-devops-system -o jsonpath="{.spec.ports[0].nodePort}" services devops-jenkins)

root@zhiyong-ksp1:/home/zhiyong# export NODE_IP=$(kubectl get nodes --namespace kubesphere-devops-system -o jsonpath="{.items[0].status.addresses[0].address}")

root@zhiyong-ksp1:/home/zhiyong# echo http://$NODE_IP:$NODE_PORT

http://192.168.88.20:30180

So Jenkins has to be accessed through this NodePort URL.
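The two kubectl queries above each read one field from JSON; kubectl evaluates JSONPath only inside `{...}`, so the braces in the template are required. The same lookup in Python, on trimmed hypothetical samples of the service and node JSON, looks like this. Unlike `addresses[0]`, it prefers the InternalIP entry explicitly, since the order of node addresses is not something to rely on; the function name is illustrative.

```python
import json

# Trimmed, hypothetical samples of
#   kubectl get services devops-jenkins -n kubesphere-devops-system -o json
#   kubectl get nodes -o json
# keeping only the fields the two JSONPath queries read.
service = json.loads('{"spec": {"ports": [{"nodePort": 30180}]}}')
nodes = json.loads(
    '{"items": [{"status": {"addresses": ['
    '{"type": "InternalIP", "address": "192.168.88.20"}, '
    '{"type": "Hostname", "address": "zhiyong-ksp1"}]}}]}'
)


def jenkins_url(service: dict, nodes: dict) -> str:
    # Same field as {.spec.ports[0].nodePort}
    node_port = service["spec"]["ports"][0]["nodePort"]
    # {.items[0].status.addresses[0].address} takes the first address blindly;
    # prefer the InternalIP entry and fall back to the first one.
    addresses = nodes["items"][0]["status"]["addresses"]
    node_ip = next(
        (a["address"] for a in addresses if a["type"] == "InternalIP"),
        addresses[0]["address"],
    )
    return f"http://{node_ip}:{node_port}"


print(jenkins_url(service, nodes))  # http://192.168.88.20:30180
```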

And the most frustrating part:

the credentials are hard-coded as admin/P@88w0rd... With these, logging in from outside succeeds: