Deploying Prometheus + Grafana on Kubernetes

Posted by 张志翔


One-click deployment link: click to jump

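Deploy Prometheus + Grafana on k8s to monitor pod and node information.

Prerequisites:

A k8s cluster

Create a namespace to keep things manageable

kubectl create namespace monitoring

Three components

1. Data source pod (choose one; the kube-state-metrics approach is recommended because it is more detailed)

Basic node data: CPU, I/O, memory, disk, network, and so on

For this, node-exporter deployed as a DaemonSet is the usual choice

- node-exporter.yaml

The one-click deployment uses this pod by default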

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-exporter
  namespace: monitoring
  labels:
    k8s-app: node-exporter
spec:
  selector:
    matchLabels:
      k8s-app: node-exporter
  template:
    metadata:
      labels:
        k8s-app: node-exporter
    spec:
      containers:
      - image: prom/node-exporter:v1.0.1
        name: node-exporter
        ports:
        - containerPort: 9100
          protocol: TCP
          name: http
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: node-exporter
  name: node-exporter
  namespace: monitoring
spec:
  ports:
  - name: http
    port: 9100
    nodePort: 31672
    protocol: TCP
  type: NodePort
  selector:
    k8s-app: node-exporter
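Once the DaemonSet is running, node-exporter serves plain-text metrics on port 9100 (NodePort 31672 in the Service above). As a rough illustration of what that output looks like and how a client might read it, the Python sketch below parses a small hand-written sample in the Prometheus text exposition format. The sample values and the `parse_metrics` helper are assumptions made for illustration; they are not output captured from the author's cluster, and the parser deliberately ignores optional timestamps and escaped label values.

```python
import re

# Hand-written sample in the Prometheus text exposition format
# (illustrative values, not captured from a real node).
SAMPLE = """\
# HELP node_load1 1m load average.
# TYPE node_load1 gauge
node_load1 0.42
# HELP node_cpu_seconds_total Seconds the CPUs spent in each mode.
# TYPE node_cpu_seconds_total counter
node_cpu_seconds_total{cpu="0",mode="idle"} 1234.5
node_cpu_seconds_total{cpu="0",mode="user"} 67.8
"""

# metric_name, optional {labels}, then a single value.
LINE_RE = re.compile(r'^([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(.*)\})?\s+(\S+)$')
LABEL_RE = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="([^"]*)"')


def parse_metrics(text):
    """Return a list of (name, labels_dict, value) tuples, skipping comments."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        match = LINE_RE.match(line)
        if match is None:
            continue  # lines this simplified parser does not understand
        name, raw_labels, value = match.groups()
        labels = dict(LABEL_RE.findall(raw_labels or ""))
        samples.append((name, labels, float(value)))
    return samples


if __name__ == "__main__":
    for name, labels, value in parse_metrics(SAMPLE):
        print(name, labels, value)
```

Against a live cluster, the same function could be fed the body of `http://<node-IP>:31672/metrics`, assuming the NodePort is reachable from where the script runs.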

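More detailed information about k8s internals: pods, services, namespaces, and other cluster-level data

For this, kube-state-metrics deployed as a Deployment is recommended

Project page: click to jump

This walkthrough uses this mode

- kube-state-metrics.yaml

The file creates a ClusterRoleBinding, ClusterRole, ServiceAccount, Service, and Deployment. Because their job is to collect cluster-wide data, they do not need to live in the same namespace as the other monitoring components.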

kubectl create ns ops-monit

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    app.kubernetes.io/name: kube-state-metrics
    app.kubernetes.io/version: v1.9.7
  name: kube-state-metrics
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kube-state-metrics
subjects:
- kind: ServiceAccount
  name: kube-state-metrics
  namespace: ops-monit
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app.kubernetes.io/name: kube-state-metrics
    app.kubernetes.io/version: v1.9.7
  name: kube-state-metrics
rules:
- apiGroups:
  - ""
  resources:
  - configmaps
  - secrets
  - nodes
  - pods
  - services
  - resourcequotas
  - replicationcontrollers
  - limitranges
  - persistentvolumeclaims
  - persistentvolumes
  - namespaces
  - endpoints
  verbs:
  - list
  - watch
- apiGroups:
  - extensions
  resources:
  - daemonsets
  - deployments
  - replicasets
  - ingresses
  verbs:
  - list
  - watch
- apiGroups:
  - apps
  resources:
  - statefulsets
  - daemonsets
  - deployments
  - replicasets
  verbs:
  - list
  - watch
- apiGroups:
  - batch
  resources:
  - cronjobs
  - jobs
  verbs:
  - list
  - watch
- apiGroups:
  - autoscaling
  resources:
  - horizontalpodautoscalers
  verbs:
  - list
  - watch
- apiGroups:
  - authentication.k8s.io
  resources:
  - tokenreviews
  verbs:
  - create
- apiGroups:
  - authorization.k8s.io
  resources:
  - subjectaccessreviews
  verbs:
  - create
- apiGroups:
  - policy
  resources:
  - poddisruptionbudgets
  verbs:
  - list
  - watch
- apiGroups:
  - certificates.k8s.io
  resources:
  - certificatesigningrequests
  verbs:
  - list
  - watch
- apiGroups:
  - storage.k8s.io
  resources:
  - storageclasses
  - volumeattachments
  verbs:
  - list
  - watch
- apiGroups:
  - admissionregistration.k8s.io
  resources:
  - mutatingwebhookconfigurations
  - validatingwebhookconfigurations
  verbs:
  - list
  - watch
- apiGroups:
  - networking.k8s.io
  resources:
  - networkpolicies
  verbs:
  - list
  - watch
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    app.kubernetes.io/name: kube-state-metrics
    app.kubernetes.io/version: v1.9.7
  name: kube-state-metrics
  namespace: ops-monit
---
apiVersion: v1
kind: Service
metadata:
  # annotations:
  #   prometheus.io/scrape: 'true'
  labels:
    app.kubernetes.io/name: kube-state-metrics
    app.kubernetes.io/version: v1.9.7
  name: kube-state-metrics
  namespace: ops-monit
spec:
  clusterIP: None
  ports:
  - name: http-metrics
    port: 8080
    targetPort: http-metrics
  - name: telemetry
    port: 8081
    targetPort: telemetry
  selector:
    app.kubernetes.io/name: kube-state-metrics
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app.kubernetes.io/name: kube-state-metrics
    app.kubernetes.io/version: v1.9.7
  name: kube-state-metrics
  namespace: ops-monit
spec:
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/name: kube-state-metrics
  template:
    metadata:
      labels:
        app.kubernetes.io/name: kube-state-metrics
        app.kubernetes.io/version: v1.9.7
    spec:
      containers:
      - image: quay.mirrors.ustc.edu.cn/coreos/kube-state-metrics:v1.9.7
        livenessProbe:
          httpGet:
            path: /healthz
            port: 8080
          initialDelaySeconds: 5
          timeoutSeconds: 5
        name: kube-state-metrics
        ports:
        - containerPort: 8080
          name: http-metrics
        - containerPort: 8081
          name: telemetry
        readinessProbe:
          httpGet:
            path: /
            port: 8081
          initialDelaySeconds: 5
          timeoutSeconds: 5
      nodeSelector:
        beta.kubernetes.io/os: linux
      serviceAccountName: kube-state-metrics

2. Prometheus components

- rbac-setup.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: prometheus
rules:
- apiGroups: [""]
  resources:
  - nodes
  - nodes/proxy
  - services
  - endpoints
  - pods
  verbs: ["get", "list", "watch"]
- apiGroups:
  - extensions
  resources:
  - ingresses
  verbs: ["get", "list", "watch"]
- nonResourceURLs: ["/metrics"]
  verbs: ["get"]
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: prometheus
  namespace: monitoring
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: prometheus
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus
subjects:
- kind: ServiceAccount
  name: prometheus
  namespace: monitoring

- configmap.yaml

Used to define how the collected data sources are scraped and relabeled so that Grafana can display them

apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config
  namespace: monitoring
data:
  prometheus.yml: |
    global:
      scrape_interval: 15s
      evaluation_interval: 15s
    scrape_configs:
    - job_name: 'k8s-cadvisor'
      metrics_path: /metrics/cadvisor
      kubernetes_sd_configs:
      - role: node
      relabel_configs:
      - source_labels: [__address__]
        regex: '(.*):10250'
        replacement: '$1:10255'
        target_label: __address__
        action: replace
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      metric_relabel_configs:
      - source_labels: [instance]
        separator: ;
        regex: (.+)
        target_label: node
        replacement: $1
        action: replace
      - source_labels: [pod_name]
        separator: ;
        regex: (.+)
        target_label: pod
        replacement: $1
        action: replace
      - source_labels: [container_name]
        separator: ;
        regex: (.+)
        target_label: container
        replacement: $1
        action: replace
    - job_name: kube-state-metrics
      kubernetes_sd_configs:
      - role: endpoints
        namespaces:
          names:
          - ops-monit
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_label_app_kubernetes_io_name]
        regex: kube-state-metrics
        replacement: $1
        action: keep
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: k8s_namespace
      - source_labels: [__meta_kubernetes_service_name]
        action: replace
        target_label: k8s_sname
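The relabeling rules in this ConfigMap are the least obvious part of the setup, so here is a minimal Python sketch of what the three actions used above (`replace`, `labelmap`, `keep`) do to a target's labels. It is a simulation written for this explanation, not Prometheus code: the function names and the sample address `10.0.0.5:10250` are hypothetical, Python's `re` module stands in for the RE2 engine Prometheus uses, and only `$1`-style references are handled.

```python
import re


def _template(replacement):
    # Turn Prometheus-style "$1" references into the "\g<1>" form
    # that Python's Match.expand understands.
    return re.sub(r"\$(\d+)", r"\\g<\1>", replacement)


def relabel_replace(labels, source_labels, target_label,
                    regex="(.*)", replacement="$1", separator=";"):
    """Simulate `action: replace` on a dict of labels.

    The regex is anchored at both ends (fullmatch), as in Prometheus.
    If it does not match, the labels come back unchanged.
    """
    joined = separator.join(labels.get(name, "") for name in source_labels)
    match = re.fullmatch(regex, joined)
    result = dict(labels)
    if match is None:
        return result
    value = match.expand(_template(replacement))
    if value:
        result[target_label] = value
    else:
        result.pop(target_label, None)  # an empty result removes the label
    return result


def relabel_labelmap(labels, regex, replacement="$1"):
    """Simulate `action: labelmap`: copy labels whose name matches the regex."""
    result = dict(labels)
    for name, value in labels.items():
        match = re.fullmatch(regex, name)
        if match is not None:
            result[match.expand(_template(replacement))] = value
    return result


def relabel_keep(labels, source_labels, regex, separator=";"):
    """Simulate `action: keep`: True if the target survives the rule."""
    joined = separator.join(labels.get(name, "") for name in source_labels)
    return re.fullmatch(regex, joined) is not None


if __name__ == "__main__":
    target = {
        "__address__": "10.0.0.5:10250",  # hypothetical kubelet address
        "__meta_kubernetes_node_label_kubernetes_io_hostname": "node-1",
    }
    target = relabel_replace(target, ["__address__"], "__address__",
                             regex=r"(.*):10250", replacement="$1:10255")
    target = relabel_labelmap(target, r"__meta_kubernetes_node_label_(.+)")
    print(target["__address__"])             # 10.0.0.5:10255
    print(target["kubernetes_io_hostname"])  # node-1
```

Read this way, the `k8s-cadvisor` job rewrites each kubelet address from port 10250 to 10255 and copies node labels onto the target, while the `kube-state-metrics` job keeps only endpoints whose Service carries the label `app.kubernetes.io/name: kube-state-metrics`.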

- prometheus-deploy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    name: prometheus-deployment
  name: prometheus
  namespace: monitoring
spec:
  replicas: 1
  selector:
    matchLabels:
      app: prometheus
  template:
    metadata:
      labels:
        app: prometheus
    spec:
      containers:
      - image: prom/prometheus:v2.7.1
        name: prometheus
        command:
        - "/bin/prometheus"
        args:
        - "--config.file=/etc/prometheus/prometheus.yml"
        - "--storage.tsdb.path=/prometheus"
        - "--storage.tsdb.retention=24h"
        ports:
        - containerPort: 9090
          protocol: TCP
        volumeMounts:
        - mountPath: "/prometheus"
          name: data
        - mountPath: "/etc/prometheus"
          name: config-volume
        resources:
          requests:
            cpu: 100m
            memory: 100Mi
          limits:
            cpu: 500m
            memory: 2500Mi
      serviceAccountName: prometheus
      volumes:
      - name: data
        emptyDir: {}
      - name: config-volume
        configMap:
          name: prometheus-config
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: prometheus
  name: prometheus
  namespace: monitoring
spec:
  type: NodePort
  ports:
  - port: 9090
    targetPort: 9090
    nodePort: 30003
  selector:
    app: prometheus

3. Grafana deployment (two options, choose one: a k8s Deployment or docker-compose)

docker-compose deployment

version: '3'
services:
  grafana:
    image: grafana/grafana        # upstream image `grafana/grafana`
    container_name: grafana       # the container is named 'grafana'
    restart: always               # always restart the container after it exits
    volumes:                      # volume mounts: map host directories to container directories
      - "./data:/var/lib/grafana"
      - "./log:/var/log/grafana"
    ports:                        # port mapping
      - "3000:3000"

k8s Deployment option

- grafana.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: grafana-dep
  namespace: monitoring
spec:
  replicas: 1
  selector:
    matchLabels:
      app: grafana-dep
  template:
    metadata:
      labels:
        app: grafana-dep
    spec:
      containers:
      - image: grafana/grafana
        imagePullPolicy: Always
        #command:
        #  - "tail"
        #  - "-f"
        #  - "/dev/null"
        securityContext:
          allowPrivilegeEscalation: false
          runAsUser: 0
        name: grafana
        ports:
        - containerPort: 3000
          protocol: TCP
        volumeMounts:
        - mountPath: "/var/lib/grafana"
          name: data
        resources:
          requests:
            cpu: 100m
            memory: 100Mi
          limits:
            cpu: 500m
            memory: 2500Mi
      volumes:
      - name: data
        emptyDir: {}
---
apiVersion: v1
kind: Service
metadata:
  name: grafana
  namespace: monitoring
spec:
  type: NodePort
  ports:
  - port: 80
    targetPort: 3000
    nodePort: 30006
  selector:
    app: grafana-dep

Apply the YAML files

kubectl create -f xxx.yaml
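Here `xxx.yaml` stands for each manifest in turn. As a small sketch of one reasonable order, the Python below builds the `kubectl create -f` commands for the files named in this article, with RBAC objects and the ConfigMap created before the Prometheus Deployment that depends on them. The ordering and the helper names `build_commands` and `run_all` are assumptions for illustration; the list uses the kube-state-metrics data source and the k8s Grafana option, and both namespaces (`monitoring`, `ops-monit`) are assumed to exist already.

```python
import subprocess

# Manifest file names as used in this article. The order is an assumption:
# RBAC and the ConfigMap come before the Deployment that needs them.
MANIFESTS = [
    "kube-state-metrics.yaml",
    "rbac-setup.yaml",
    "configmap.yaml",
    "prometheus-deploy.yaml",
    "grafana.yaml",
]


def build_commands(manifests=MANIFESTS):
    """Return one `kubectl create -f <file>` argument list per manifest."""
    return [["kubectl", "create", "-f", name] for name in manifests]


def run_all(commands, dry_run=True):
    """Print the commands, or execute them when dry_run is False."""
    for cmd in commands:
        if dry_run:
            print(" ".join(cmd))
        else:
            # Requires kubectl on PATH and access to the cluster;
            # check=True stops at the first failing command.
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run_all(build_commands())  # dry run: only prints the commands
```

Calling `run_all(build_commands(), dry_run=False)` would execute the commands for real.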

Check the status of the related resources

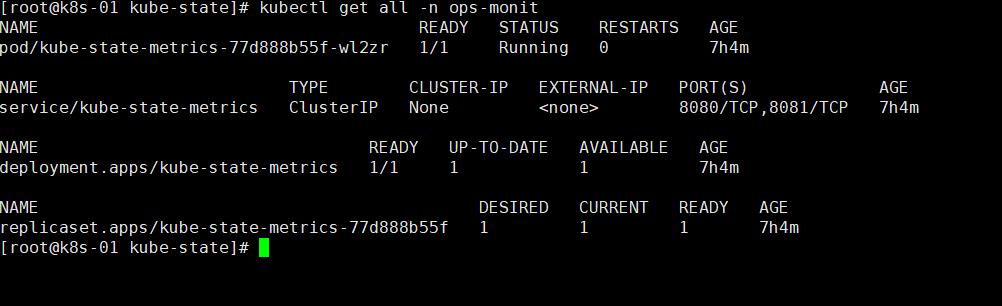

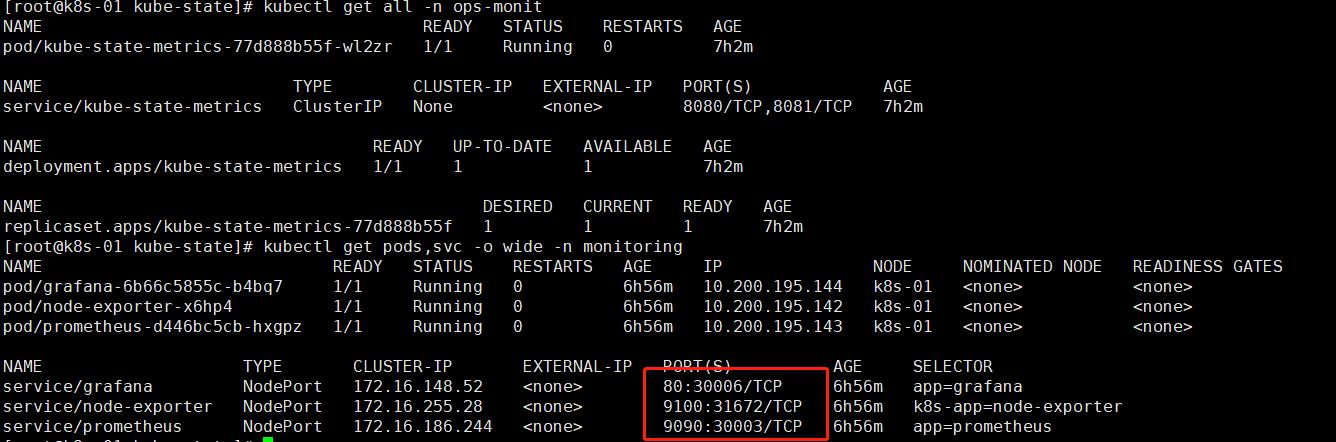

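kubectl get all -n ops-monit ## check the kube-state-metrics service

kubectl get all -n monitoring ## check the monitoring components

Related addresses: http://<node-IP>:30003 ## Prometheus address; as a first step, check that the data sources are present

http://192.168.17.130:30006/ ## Grafana address; the default credentials are admin/admin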
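Besides looking at the Prometheus web UI, the same first check can be made through the Prometheus HTTP API by running the query `up`, which returns 1 for every target that was scraped successfully. The sketch below builds the query URL and summarizes a response per job. The host `node.example` and the sample payload are hypothetical placeholders, and `targets_by_job` is a helper invented for this example.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen


def build_query_url(base_url, promql):
    """Build an instant-query URL for the Prometheus HTTP API."""
    return base_url.rstrip("/") + "/api/v1/query?" + urlencode({"query": promql})


def fetch(base_url, promql, timeout=5):
    """Run the query against a live Prometheus and return the decoded JSON."""
    with urlopen(build_query_url(base_url, promql), timeout=timeout) as resp:
        return json.load(resp)


def targets_by_job(payload):
    """Count (up, down) targets per job from the result of the query `up`."""
    counts = {}
    for series in payload["data"]["result"]:
        job = series["metric"].get("job", "")
        is_up = series["value"][1] == "1"  # sample values arrive as strings
        up, down = counts.get(job, (0, 0))
        counts[job] = (up + 1, down) if is_up else (up, down + 1)
    return counts


# Hand-written payload in the shape returned by /api/v1/query
# (illustrative, not taken from the author's cluster).
SAMPLE = {
    "status": "success",
    "data": {
        "resultType": "vector",
        "result": [
            {"metric": {"__name__": "up", "job": "k8s-cadvisor", "instance": "node-1"},
             "value": [1700000000, "1"]},
            {"metric": {"__name__": "up", "job": "k8s-cadvisor", "instance": "node-2"},
             "value": [1700000000, "0"]},
            {"metric": {"__name__": "up", "job": "kube-state-metrics", "instance": "10.244.0.7:8080"},
             "value": [1700000000, "1"]},
        ],
    },
}

if __name__ == "__main__":
    print(build_query_url("http://node.example:30003", "up"))
    print(targets_by_job(SAMPLE))
```

With a reachable cluster, `targets_by_job(fetch("http://<node-IP>:30003", "up"))` would give the live counts; a job with a non-zero second number has targets that Prometheus cannot scrape.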

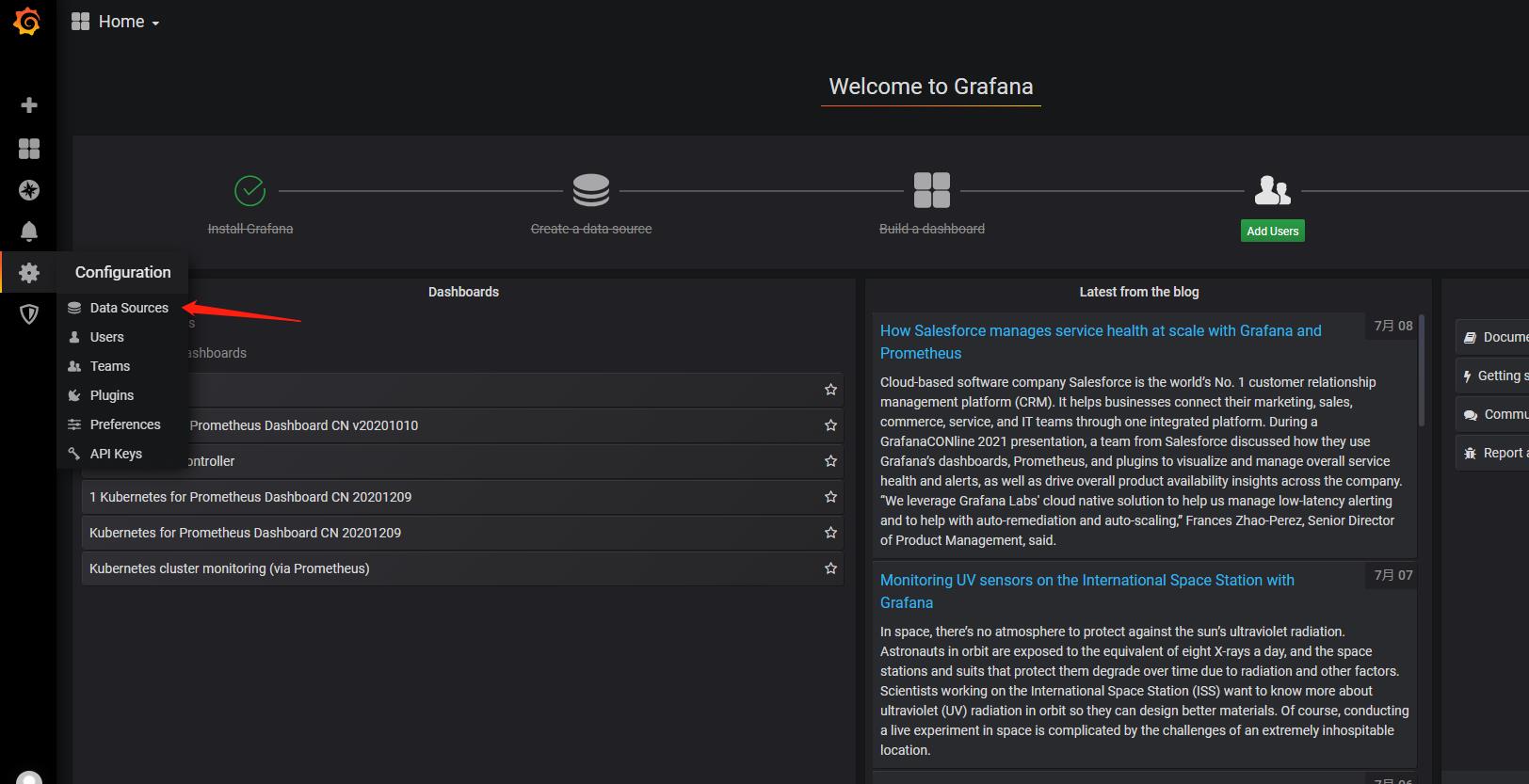

Start configuring Grafana

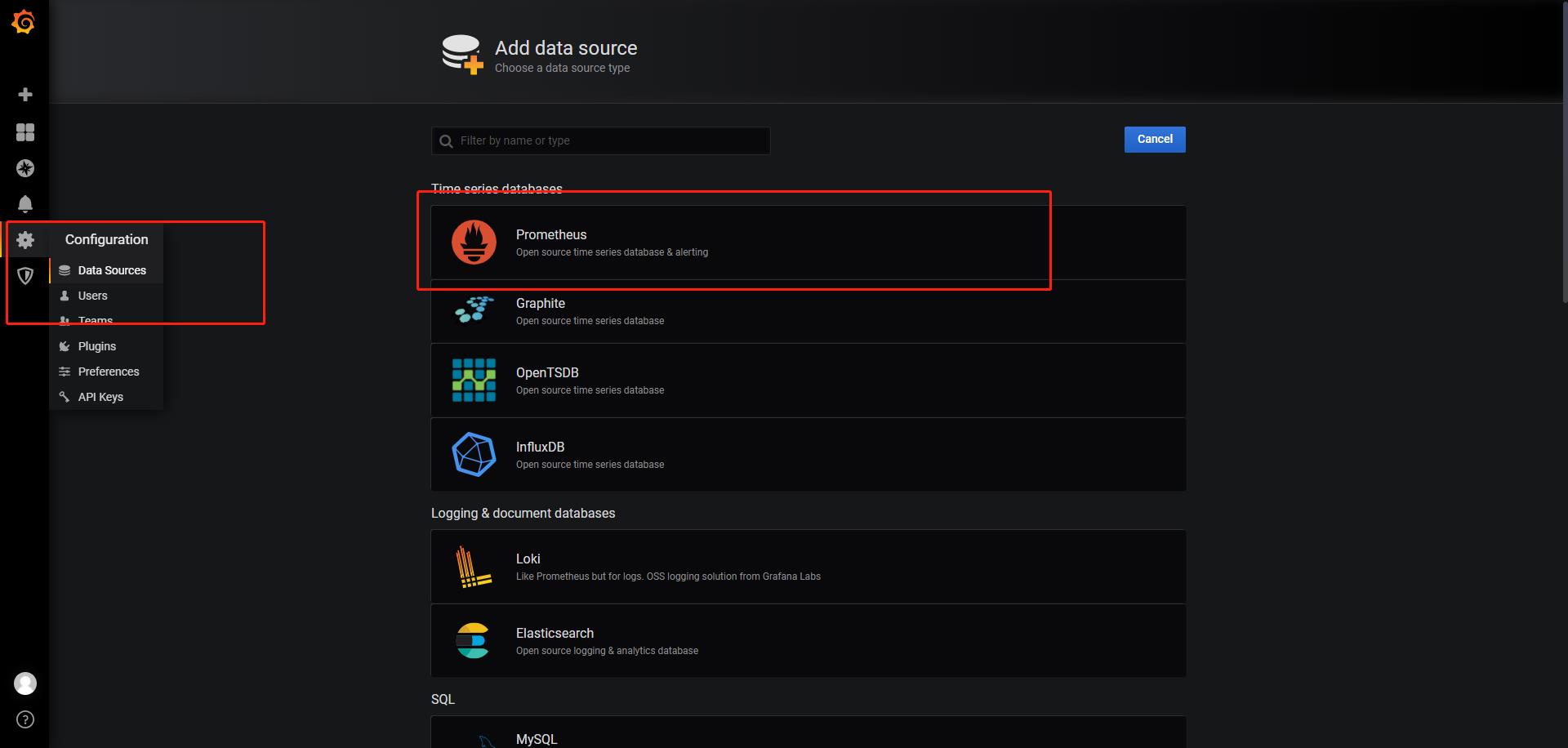

Add a data source

Enter the IP above with port 30003

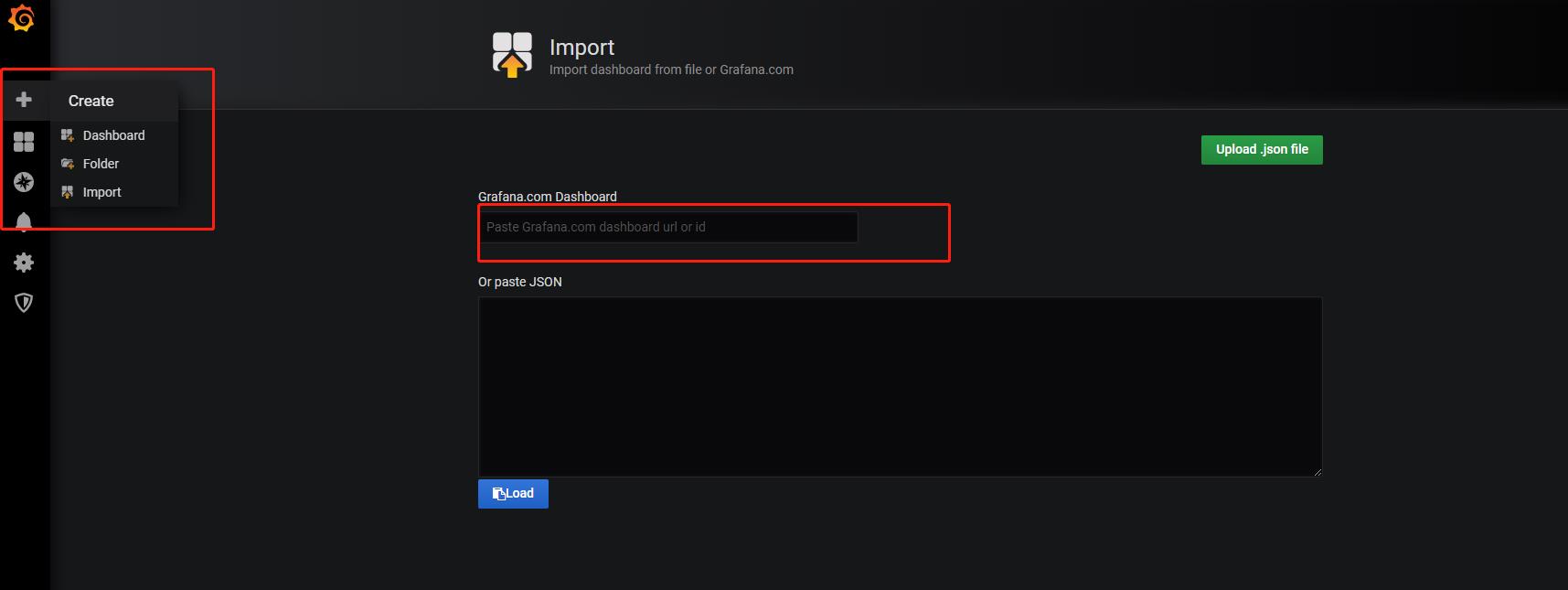

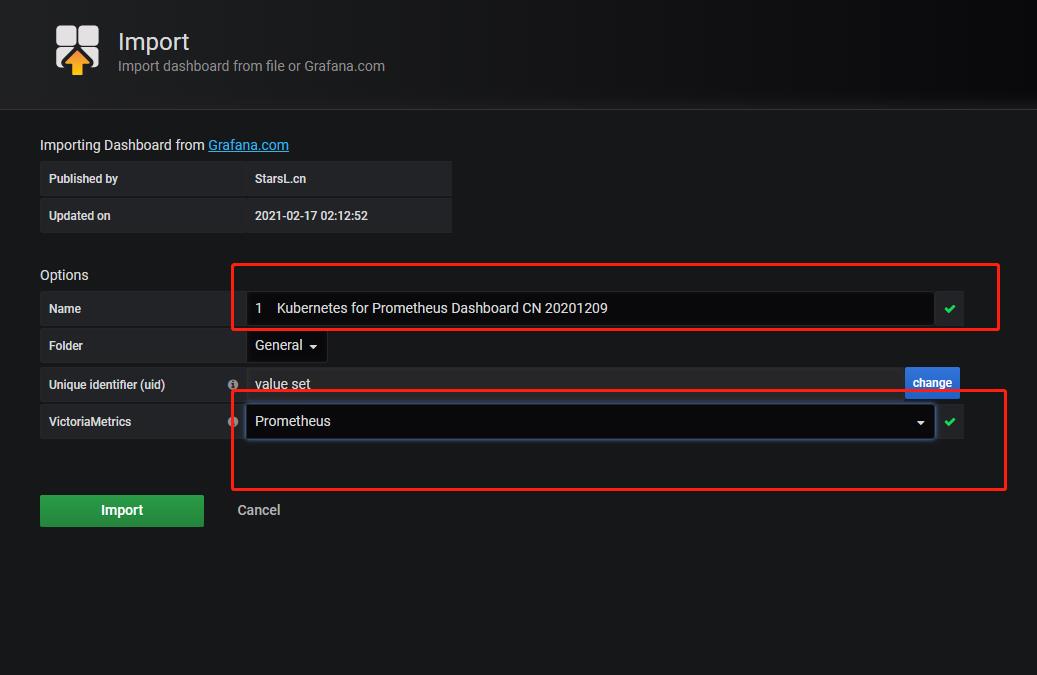
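The data source can also be registered without the web UI, through Grafana's HTTP API (`POST /api/datasources`). The following is a minimal sketch under several assumptions: the Grafana address is the one given earlier in this article, Prometheus is assumed to be reachable on the same IP at port 30003, the default `admin`/`admin` credentials are still in place, and the data source name `Prometheus` is an arbitrary choice. The helper `datasource_request` only builds the request; nothing is sent until `send` is called.

```python
import base64
import json
from urllib.request import Request, urlopen


def datasource_request(grafana_url, prometheus_url,
                       user="admin", password="admin"):
    """Build a POST request that registers Prometheus as a Grafana data source."""
    payload = {
        "name": "Prometheus",   # arbitrary display name (assumption)
        "type": "prometheus",
        "url": prometheus_url,
        "access": "proxy",      # Grafana's backend proxies the queries
        "isDefault": True,
    }
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return Request(
        grafana_url.rstrip("/") + "/api/datasources",
        data=json.dumps(payload).encode(),
        headers={
            "Content-Type": "application/json",
            "Authorization": "Basic " + token,
        },
        method="POST",
    )


def send(request, timeout=5):
    """Send the request to a live Grafana and return the decoded JSON reply."""
    with urlopen(request, timeout=timeout) as resp:
        return json.load(resp)


if __name__ == "__main__":
    req = datasource_request("http://192.168.17.130:30006/",
                             "http://192.168.17.130:30003")
    print(req.get_method(), req.full_url)
    print(req.data.decode())
```

Because Grafana here runs with an `emptyDir` volume, a data source created either way is lost when the pod is rescheduled, so a scripted request like this can be convenient for re-creating it.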

Import a Grafana dashboard template

Address: click to jump

13105 is the dashboard ID on the official site

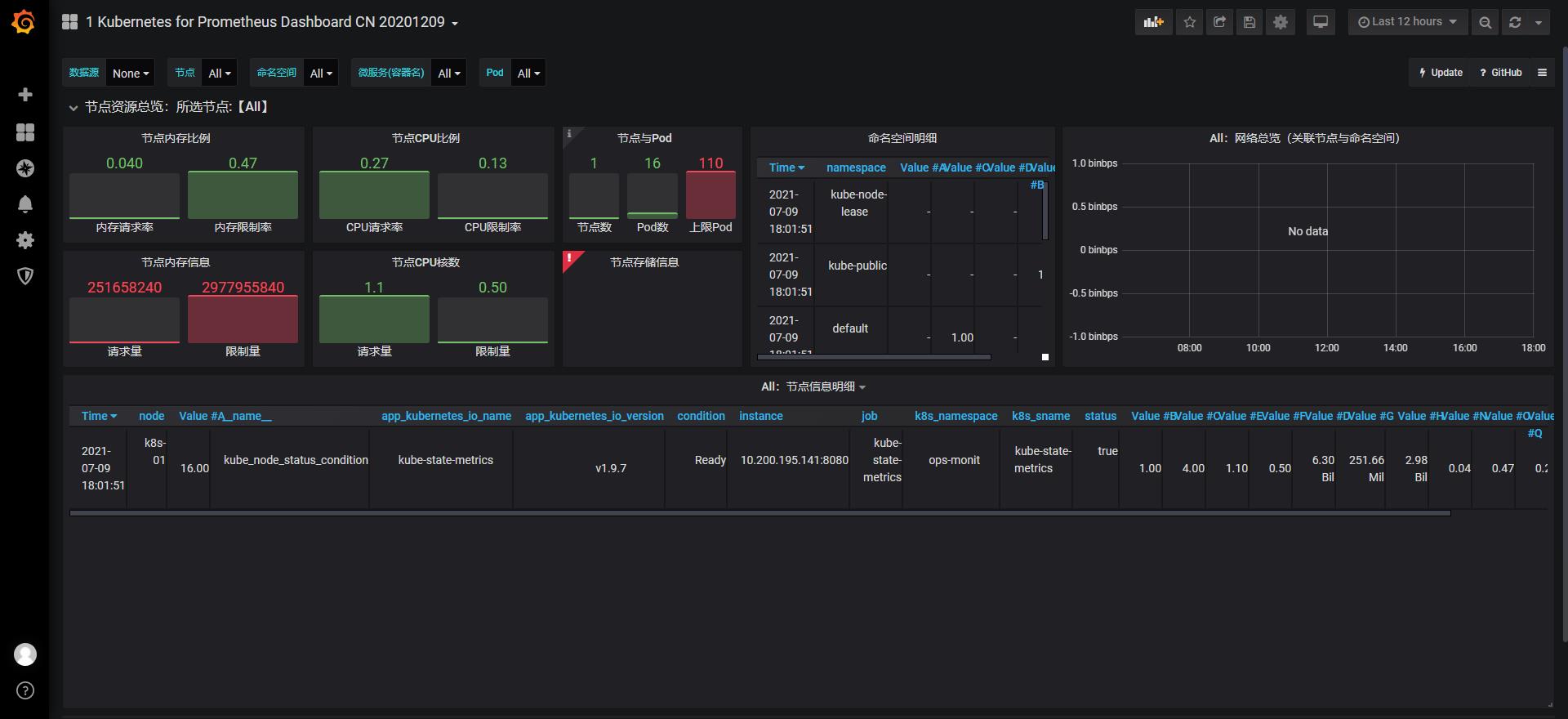

Final result
