Memory reclaim mechanism: LRU

Posted by bubbleben


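Foreword: this article was compiled by the editors of 小常识网 (cha138.com). It mainly covers the LRU memory reclaim mechanism, and we hope you find it a useful reference.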

This analysis is based on the linux-5.0 kernel source code, mainly the following files:

include/linux/mmzone.h

include/linux/pagevec.h

include/linux/mm_inline.h

include/linux/pagemap.h

include/linux/vmstat.h

mm/swap.c

mm/vmscan.c

mm/util.c

mm/rmap.c

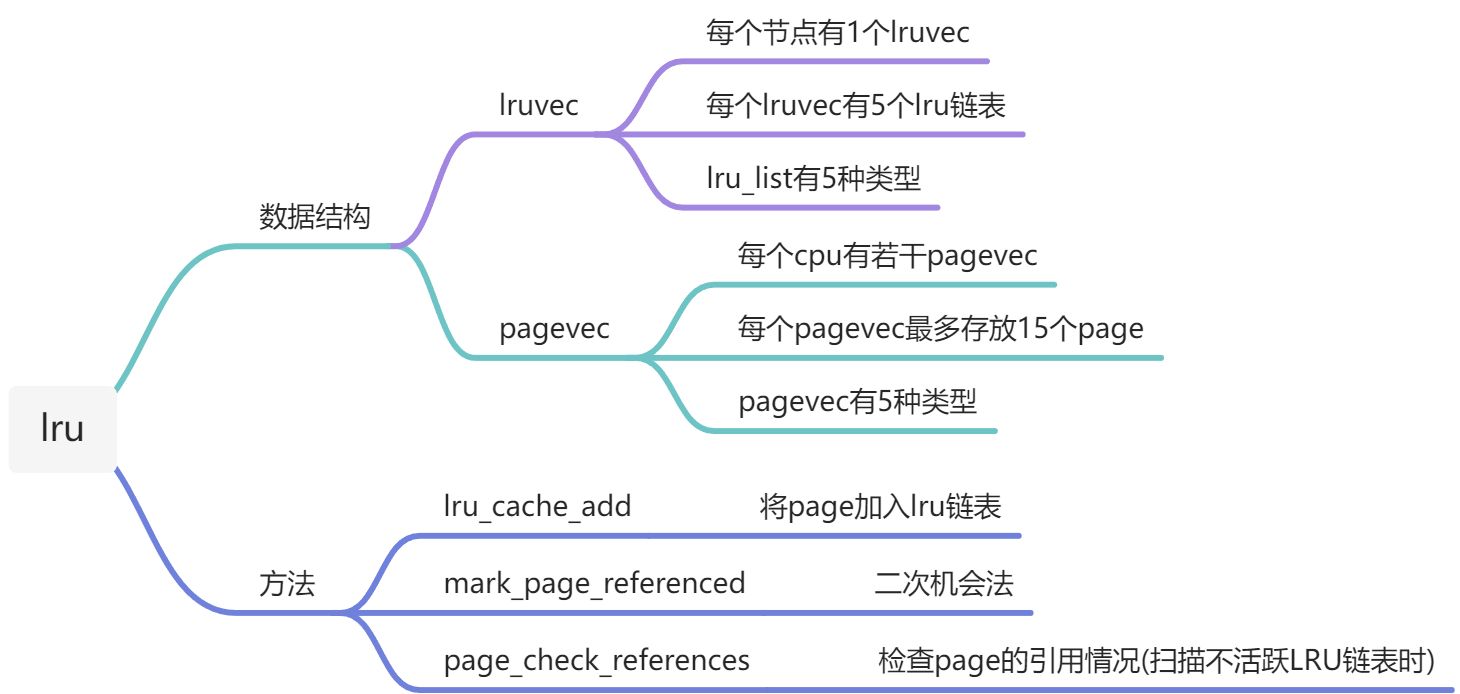

1. lru_list

#define LRU_BASE 0
#define LRU_ACTIVE 1
#define LRU_FILE 2

// The LRU lists are doubly linked lists. The kernel classifies pages by type
// (anonymous vs. file-backed) and by activity (active vs. inactive), and adds a
// separate list for unevictable pages, giving 5 LRU lists in total.
enum lru_list {
	// 0: inactive anonymous page lru list
	LRU_INACTIVE_ANON = LRU_BASE,
	// 1: active anonymous page lru list
	LRU_ACTIVE_ANON = LRU_BASE + LRU_ACTIVE,
	// 2: inactive page cache lru list
	LRU_INACTIVE_FILE = LRU_BASE + LRU_FILE,
	// 3: active page cache lru list
	LRU_ACTIVE_FILE = LRU_BASE + LRU_FILE + LRU_ACTIVE,
	// 4: unevictable page lru list
	LRU_UNEVICTABLE,
	NR_LRU_LISTS
};
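The enum encodes the list type arithmetically: adding LRU_FILE selects the file half and adding LRU_ACTIVE selects the active half. The user-space sketch below copies the enum and two helpers modeled on the kernel's is_file_lru() and is_active_lru() (redefined here, not included from kernel headers). lru_from_flags() is a hypothetical helper that shows how the four evictable indices decompose.

```c
#include <assert.h>
#include <stdbool.h>

/* User-space copy of the 5.0 definitions, for illustration only. */
#define LRU_BASE 0
#define LRU_ACTIVE 1
#define LRU_FILE 2

enum lru_list {
	LRU_INACTIVE_ANON = LRU_BASE,
	LRU_ACTIVE_ANON = LRU_BASE + LRU_ACTIVE,
	LRU_INACTIVE_FILE = LRU_BASE + LRU_FILE,
	LRU_ACTIVE_FILE = LRU_BASE + LRU_FILE + LRU_ACTIVE,
	LRU_UNEVICTABLE,
	NR_LRU_LISTS
};

/* Same logic as the kernel's is_file_lru(): true for both file lists. */
static inline bool is_file_lru(enum lru_list lru)
{
	return lru == LRU_INACTIVE_FILE || lru == LRU_ACTIVE_FILE;
}

/* Same logic as the kernel's is_active_lru(): true for both active lists. */
static inline bool is_active_lru(enum lru_list lru)
{
	return lru == LRU_ACTIVE_ANON || lru == LRU_ACTIVE_FILE;
}

/* Hypothetical helper: build an evictable list index from the two flags. */
static inline enum lru_list lru_from_flags(bool file, bool active)
{
	return (enum lru_list)(LRU_BASE + (file ? LRU_FILE : 0) +
			       (active ? LRU_ACTIVE : 0));
}
```

LRU_UNEVICTABLE sits outside this file/active grid, which is why both helpers return false for it.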

2. lruvec

struct lruvec {
	// every lruvec contains the 5 LRU lists
	struct list_head lists[NR_LRU_LISTS];
	struct zone_reclaim_stat reclaim_stat;
	/* Evictions & activations on the inactive file list */
	atomic_long_t inactive_age;
	/* Refaults at the time of last reclaim cycle */
	unsigned long refaults;
#ifdef CONFIG_MEMCG
	// every node embeds one lruvec (pglist_data->lruvec); with memory cgroups,
	// each memcg also has one lruvec per node, and pgdat records the node
	// this lruvec belongs to
	struct pglist_data *pgdat;
#endif
};
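Each entry of lruvec->lists is the head of an intrusive, circular doubly linked list. The minimal user-space model below assumes a simplified lruvec that keeps only the lists array; struct lruvec_model and lruvec_model_init() are hypothetical names, the latter doing roughly what the kernel's lruvec_init() does for the list heads. It shows that a freshly initialized lruvec has five empty lists, each head pointing to itself.

```c
#include <assert.h>
#include <stdbool.h>

#define NR_LRU_LISTS 5

/* Minimal copy of the kernel's intrusive list node. */
struct list_head {
	struct list_head *next, *prev;
};

/* An empty list is a head that points to itself in both directions. */
static void INIT_LIST_HEAD(struct list_head *head)
{
	head->next = head;
	head->prev = head;
}

static bool list_empty(const struct list_head *head)
{
	return head->next == head;
}

/* Simplified lruvec: only the five LRU list heads (hypothetical). */
struct lruvec_model {
	struct list_head lists[NR_LRU_LISTS];
};

/* Initialize every list head, as lruvec_init() does for the real struct. */
static void lruvec_model_init(struct lruvec_model *lruvec)
{
	for (int lru = 0; lru < NR_LRU_LISTS; lru++)
		INIT_LIST_HEAD(&lruvec->lists[lru]);
}
```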

3. pagevec

/* 15 pointers + header align the pagevec structure to a power of two */
// For comparison, PAGEVEC_SIZE is 14 in the 4.14.186 kernel
#define PAGEVEC_SIZE 15

// a pagevec is used to batch operations on pages
struct pagevec {
	unsigned long nr;
	bool percpu_pvec_drained;
	// each pagevec holds an array of up to 15 page pointers
	struct page *pages[PAGEVEC_SIZE];
};

4. lru_cache_add

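// Add a page to the LRU; the page is first queued in a per-CPU pagevec
void lru_cache_add(struct page *page)
{
	// a page must not be both active and unevictable
	VM_BUG_ON_PAGE(PageActive(page) && PageUnevictable(page), page);
	// a page already on an LRU list must not be added again
	VM_BUG_ON_PAGE(PageLRU(page), page);
	__lru_cache_add(page);
}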

/*
 * Per-CPU pagevecs: every CPU has its own pagevec for each kind of batched LRU operation
 */
// lru_add_pvec holds pages waiting to be added to an LRU list
static DEFINE_PER_CPU(struct pagevec, lru_add_pvec);
static DEFINE_PER_CPU(struct pagevec, lru_rotate_pvecs);
static DEFINE_PER_CPU(struct pagevec, lru_deactivate_file_pvecs);
static DEFINE_PER_CPU(struct pagevec, lru_lazyfree_pvecs);
#ifdef CONFIG_SMP
static DEFINE_PER_CPU(struct pagevec, activate_page_pvecs);
#endif

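static void __lru_cache_add(struct page *page)
{
	// get this CPU's lru_add_pvec (get_cpu_var also disables preemption)
	struct pagevec *pvec = &get_cpu_var(lru_add_pvec);
	// take a reference while the page sits in the pagevec
	get_page(page);
	// 1. pagevec_add() stores the page in the pagevec's pages array
	// 2. if it returns 0, the pagevec is now full: submit all 15 pages to the LRU lists in one batch
	// 3. a compound page is also submitted immediately
	if (!pagevec_add(pvec, page) || PageCompound(page))
		__pagevec_lru_add(pvec);
	// pairs with get_cpu_var(): re-enable preemption
	put_cpu_var(lru_add_pvec);
}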
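The batching in __lru_cache_add() can be modeled in user space. In this sketch, struct fake_page, the single global pagevec and the counters are hypothetical stand-ins for struct page, one CPU's lru_add_pvec and __pagevec_lru_add(); per-CPU access and locking are left out. The point is that the flush path runs once per 15 pages, or immediately for a compound page.

```c
#include <assert.h>
#include <stdbool.h>

#define PAGEVEC_SIZE 15

/* Hypothetical page: only the "compound" property matters here. */
struct fake_page {
	bool compound;
};

/* Simplified pagevec holding fake pages. */
struct pagevec {
	unsigned long nr;
	struct fake_page *pages[PAGEVEC_SIZE];
};

static struct pagevec lru_add_pvec;   /* stands in for one CPU's pagevec */
static int nr_flushes;                /* how many batches were submitted */
static int nr_on_lru;                 /* pages moved to the "LRU" so far */

/* Store the page, then return the free slots left (0: now full). */
static unsigned pagevec_add(struct pagevec *pvec, struct fake_page *page)
{
	pvec->pages[pvec->nr++] = page;
	return PAGEVEC_SIZE - pvec->nr;
}

/* Stand-in for __pagevec_lru_add(): move every queued page, then reset. */
static void pagevec_lru_add_model(struct pagevec *pvec)
{
	nr_on_lru += (int)pvec->nr;
	nr_flushes++;
	pvec->nr = 0;
}

/* Same decision as __lru_cache_add(): flush when full or compound. */
static void lru_cache_add_model(struct fake_page *page)
{
	if (!pagevec_add(&lru_add_pvec, page) || page->compound)
		pagevec_lru_add_model(&lru_add_pvec);
}
```

Pages sitting in a partially filled pagevec are not on any LRU list yet; the kernel drains them explicitly, for example through lru_add_drain().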

4.1 pagevec_add

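// Store the page in the pagevec and return the number of free slots left
static inline unsigned pagevec_add(struct pagevec *pvec, struct page *page)
{
	// save the page in the pages array and increment the page count
	pvec->pages[pvec->nr++] = page;
	// remaining space: 0 means the pagevec is now full (the page itself was still added)
	return pagevec_space(pvec);
}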

4.2 pagevec_space

// A pagevec holds at most PAGEVEC_SIZE (15) pages and nr is the number currently stored,
// so their difference is the space still available
static inline unsigned pagevec_space(struct pagevec *pvec)
{
	return PAGEVEC_SIZE - pvec->nr;
}
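The return value of pagevec_add() is the space left after the insertion, so 0 means "just became full", not "insertion failed". The user-space copy of the helpers below (with void * standing in for struct page *, and an added assert that is not in the kernel version) checks this on a 15-slot pagevec.

```c
#include <assert.h>

#define PAGEVEC_SIZE 15

/* Simplified pagevec holding opaque pointers instead of struct page *. */
struct pagevec {
	unsigned long nr;
	void *pages[PAGEVEC_SIZE];
};

static inline unsigned pagevec_count(struct pagevec *pvec)
{
	return pvec->nr;
}

static inline unsigned pagevec_space(struct pagevec *pvec)
{
	return PAGEVEC_SIZE - pvec->nr;
}

/* Stores the pointer, then reports how many slots remain (0 = now full). */
static inline unsigned pagevec_add(struct pagevec *pvec, void *page)
{
	assert(pvec->nr < PAGEVEC_SIZE);   /* a full pagevec must be drained first */
	pvec->pages[pvec->nr++] = page;
	return pagevec_space(pvec);
}
```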

4.3 __pagevec_lru_add

void __pagevec_lru_add(struct pagevec *pvec)
{
	// process every page in the pagevec in one batch, calling __pagevec_lru_add_fn on each
	pagevec_lru_move_fn(pvec, __pagevec_lru_add_fn, NULL);
}

4.4 pagevec_lru_move_fn

static void pagevec_lru_move_fn(struct pagevec *pvec,
	void (*move_fn)(struct page *page, struct lruvec *lruvec, void *arg),
	void *arg)
{
	int i;
	struct pglist_data *pgdat = NULL;
	struct lruvec *lruvec;
	unsigned long flags = 0;
	// walk every page in the pagevec
	for (i = 0; i < pagevec_count(pvec); i++) {
		struct page *page = pvec->pages[i];
		// the node the page belongs to
		struct pglist_data *pagepgdat = page_pgdat(page);
		// switch lru_lock only when this page is on a different node from the previous one
		if (pagepgdat != pgdat) {
			if (pgdat)
				spin_unlock_irqrestore(&pgdat->lru_lock, flags);
			pgdat = pagepgdat;
			spin_lock_irqsave(&pgdat->lru_lock, flags);
		}
		// 1. if memory cgroups are disabled, return the lruvec embedded in pglist_data
		// 2. otherwise return the lruvec of the page's memcg on this node (mem_cgroup_per_node)
		lruvec = mem_cgroup_page_lruvec(page, pgdat);
		// call the move_fn supplied by the caller; for __pagevec_lru_add it is __pagevec_lru_add_fn
		(*move_fn)(page, lruvec, arg);
	}
	if (pgdat)
		spin_unlock_irqrestore(&pgdat->lru_lock, flags);
	// drop the references taken when the pages were queued (get_page), then reset the pagevec
	// (since 4.16 release_pages() no longer takes the "cold" argument)
	release_pages(pvec->pages, pvec->nr);
	pagevec_reinit(pvec);
}
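pagevec_lru_move_fn() drops and retakes lru_lock only when the next page belongs to a different node than the previous one. The sketch below counts lock acquisitions for a batch; representing each page by an int node id and counting acquisitions are assumptions of the model, not kernel code.

```c
#include <assert.h>
#include <stddef.h>

/* Count how many times a node's lru_lock would be taken for a batch whose
 * pages live on the given nodes (node ids stand in for page_pgdat()). */
static int count_lock_acquisitions(const int *page_nodes, size_t n)
{
	int locked_node = -1;   /* -1: no lock held yet (pgdat == NULL) */
	int acquisitions = 0;

	for (size_t i = 0; i < n; i++) {
		if (page_nodes[i] != locked_node) {
			/* release the previous node's lock (if any), take the new one */
			locked_node = page_nodes[i];
			acquisitions++;
		}
		/* move_fn(page, lruvec, arg) would run here under the lock */
	}
	/* the last lock held is released after the loop */
	return acquisitions;
}
```

On a single-node machine a whole batch therefore costs one lock round trip, while interleaved nodes reduce the benefit of batching.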

4.5 __pagevec_lru_add_fn

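static inline int page_is_file_cache(struct page *page)
{
	// anonymous pages are reclaimed by swapping them out, either to a swap partition on disk
	// or to a swap device carved out of RAM (zram)
	// page cache pages are reclaimed by dropping them or writing them back
	// PG_swapbacked == 0 means page cache (shmem/tmpfs pages are swap-backed, so they count as anonymous)
	return !PageSwapBacked(page);
}

// base (inactive) list for the page's type: inactive page cache or inactive anonymous
static inline enum lru_list page_lru_base_type(struct page *page)
{
	if (page_is_file_cache(page))
		return LRU_INACTIVE_FILE;
	return LRU_INACTIVE_ANON;
}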
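page_lru_base_type() picks the inactive list for the page's type, and page_lru() in include/linux/mm_inline.h adds LRU_ACTIVE when PG_active is set, or returns LRU_UNEVICTABLE for an unevictable page. The model below replaces struct page with a hypothetical struct fake_page whose three booleans stand in for PG_swapbacked, PG_active and PG_unevictable.

```c
#include <assert.h>
#include <stdbool.h>

enum lru_list {
	LRU_INACTIVE_ANON, LRU_ACTIVE_ANON, LRU_INACTIVE_FILE,
	LRU_ACTIVE_FILE, LRU_UNEVICTABLE, NR_LRU_LISTS
};
#define LRU_ACTIVE 1

/* Hypothetical page flags relevant to list selection. */
struct fake_page {
	bool swapbacked;   /* PG_swapbacked */
	bool active;       /* PG_active */
	bool unevictable;  /* PG_unevictable */
};

static bool page_is_file_cache(const struct fake_page *page)
{
	return !page->swapbacked;
}

static enum lru_list page_lru_base_type(const struct fake_page *page)
{
	return page_is_file_cache(page) ? LRU_INACTIVE_FILE : LRU_INACTIVE_ANON;
}

/* Mirrors the logic of page_lru(): unevictable first, then base + active. */
static enum lru_list page_lru(const struct fake_page *page)
{
	if (page->unevictable)
		return LRU_UNEVICTABLE;
	enum lru_list lru = page_lru_base_type(page);
	if (page->active)
		lru += LRU_ACTIVE;
	return lru;
}
```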

static void __pagevec_lru_add_fn(struct page *page, struct lruvec *lruvec,
				 void *arg)
{
	/*
	 * 4.14.186 implementation, for comparison:
	 *
	 *	// page cache: pages that do not need swap space as backing store
	 *	int file = page_is_file_cache(page);
	 *	// is the page active?
	 *	int active = PageActive(page);
	 *	// compute the page's lru list type
	 *	enum lru_list lru = page_lru(page);
	 *	// add the page to the lruvec list of type lru, then update node and zone statistics
	 *	add_page_to_lru_list(page, lruvec, lru);
	 *	// update the lruvec's zone_reclaim_stat
	 *	update_page_reclaim_stat(lruvec, file, active);
	 *	trace_mm_lru_insertion(page, lru);
	 */
	enum lru_list lru;
	// record whether the page was unevictable, and clear PG_unevictable
	int was_unevictable = TestClearPageUnevictable(page);
	// a page must not be added to an LRU list twice
	VM_BUG_ON_PAGE(PageLRU(page), page);
	// set PG_lru
	SetPageLRU(page);
	// full barrier: order SetPageLRU() before the PG_mlocked check in page_evictable();
	// it pairs with clear_page_mlock() so an evictable page is not stranded on the unevictable list
	smp_mb();
	// is the page evictable?
	if (page_evictable(page)) {
		// pick the LRU list from the page type and PG_active
		lru = page_lru(page);
		update_page_reclaim_stat(lruvec, page_is_file_cache(page),
					 PageActive(page));
		// unevictable before, evictable now: count it as rescued
		if (was_unevictable)
			count_vm_event(UNEVICTABLE_PGRESCUED);
	} else {
		// the page goes on the unevictable list
		lru = LRU_UNEVICTABLE;
		// clear PG_active
		ClearPageActive(page);
		// set PG_unevictable
		SetPageUnevictable(page);
		// newly found to be unevictable: count it as culled
		if (!was_unevictable)
			count_vm_event(UNEVICTABLE_PGCULLED);
	}
	// add the page to the lruvec list of type lru, then update node and zone statistics
	add_page_to_lru_list(page, lruvec, lru);
	trace_mm_lru_insertion(page, lru);
}
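Leaving out locking, the memory barrier and the reclaim statistics, the evictable/unevictable branch of __pagevec_lru_add_fn() reduces to the decision below. This is a user-space sketch: struct fake_page, its boolean fields and the two counters are hypothetical stand-ins for the page flags, the result of page_evictable() and the UNEVICTABLE_PGRESCUED/UNEVICTABLE_PGCULLED vm events.

```c
#include <assert.h>
#include <stdbool.h>

enum lru_list {
	LRU_INACTIVE_ANON, LRU_ACTIVE_ANON, LRU_INACTIVE_FILE,
	LRU_ACTIVE_FILE, LRU_UNEVICTABLE, NR_LRU_LISTS
};

/* Hypothetical page state used by the decision. */
struct fake_page {
	bool file;         /* !PG_swapbacked */
	bool active;       /* PG_active */
	bool unevictable;  /* PG_unevictable */
	bool evictable;    /* what page_evictable() would return */
	bool on_lru;       /* PG_lru */
};

static int pgrescued, pgculled;   /* stand-ins for the vm event counters */

/* Returns the list the page would be added to, updating flags as the kernel does. */
static enum lru_list lru_add_decide(struct fake_page *page)
{
	bool was_unevictable = page->unevictable;   /* TestClearPageUnevictable */
	page->unevictable = false;

	assert(!page->on_lru);                      /* VM_BUG_ON_PAGE(PageLRU) */
	page->on_lru = true;                        /* SetPageLRU */

	if (page->evictable) {
		if (was_unevictable)
			pgrescued++;
		return (enum lru_list)((page->file ? LRU_INACTIVE_FILE : LRU_INACTIVE_ANON) +
				       (page->active ? 1 : 0));
	}
	page->active = false;                       /* ClearPageActive */
	page->unevictable = true;                   /* SetPageUnevictable */
	if (!was_unevictable)
		pgculled++;
	return LRU_UNEVICTABLE;
}
```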

4.5.1 page_evictable

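// A page is unevictable in two cases:
// 1. page->mapping is marked unevictable (AS_UNEVICTABLE, e.g. ramdisk or SHM_LOCK)
// 2. the page belongs to a locked (mlocked) vma, i.e. PG_mlocked is set
int page_evictable(struct page *page)
{
	int ret;
	/* Prevent address_space of inode and swap cache from being freed */
	rcu_read_lock();
	// evictable only if the mapping is not marked unevictable and PG_mlocked is clear
	ret = !mapping_unevictable(page_mapping(page)) && !PageMlocked(page);
	rcu_read_unlock();
	return ret;
}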

4.5.2 page_mapping

struct address_space *page_mapping(struct page *page)
{
	struct address_space *mapping;
	page = compound_head(page);
	/* This happens if someone calls flush_dcache_page on slab page */
	if (unlikely(PageSlab(page)))
		return NULL;
	// page in the swap cache
	if (unlikely(PageSwapCache(page))) {
		swp_entry_t entry;
		entry.val = page_private(page);
		// return the address_space for this swap entry from the swapper_spaces array
		return swap_address_space(entry);
	}
	mapping = page->mapping;
	// anonymous page (PAGE_MAPPING_ANON bit set): it has no address_space, so return NULL
	if ((unsigned long)mapping & PAGE_MAPPING_ANON)
		return NULL;
	// strip the tag bits and return the address_space the page maps
	return (void *)((unsigned long)mapping & ~PAGE_MAPPING_FLAGS);
}
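page_mapping() relies on the fact that the objects page->mapping points to are at least 4-byte aligned, so its two low bits are free to carry tags: PAGE_MAPPING_ANON (0x1) marks an anonymous page, whose mapping field really points to an anon_vma, and PAGE_MAPPING_FLAGS (0x3) masks both tag bits. The user-space sketch below reuses those two values; struct fake_mapping, tag_mapping() and decode_mapping() are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define PAGE_MAPPING_ANON  ((uintptr_t)0x1)
#define PAGE_MAPPING_FLAGS ((uintptr_t)0x3)

/* Hypothetical address_space stand-in; pointer members force alignment. */
struct fake_mapping {
	void *host;
	unsigned long flags;
};

/* Store a pointer with tag bits in its low bits, as page->mapping does. */
static void *tag_mapping(void *ptr, uintptr_t tag)
{
	assert(((uintptr_t)ptr & PAGE_MAPPING_FLAGS) == 0);   /* low bits must be free */
	return (void *)((uintptr_t)ptr | tag);
}

/* Same decoding steps as the tail of page_mapping(). */
static struct fake_mapping *decode_mapping(void *mapping)
{
	if ((uintptr_t)mapping & PAGE_MAPPING_ANON)
		return NULL;                                   /* anonymous page */
	return (struct fake_mapping *)((uintptr_t)mapping & ~PAGE_MAPPING_FLAGS);
}
```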

4.5.3 mapping_unevictable

/*
 * Bits in mapping->flags.
 */
enum mapping_flags {
	AS_EIO = 0, /* IO error on async write */
	AS_ENOSPC = 1, /* ENOSPC on async write */
	AS_MM_ALL_LOCKS = 2, /* under mm_take_all_locks() */
	AS_UNEVICTABLE = 3, /* e.g., ramdisk, SHM_LOCK */
	AS_EXITING = 4, /* final truncate in progress */
	/* writeback related tags are not used */
	AS_NO_WRITEBACK_TAGS = 5,
};

static inline int mapping_unevictable(struct address_space *mapping)
{
	// check whether address_space->flags has the AS_UNEVICTABLE bit set
	if (mapping)
		return test_bit(AS_UNEVICTABLE, &mapping->flags);
	// a NULL mapping (e.g. an anonymous page) is not unevictable: !!NULL == 0
	return !!mapping;
}
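AS_UNEVICTABLE is a bit number in mapping->flags, not a mask, and a NULL mapping never makes a page unevictable on its own; for an anonymous page only PG_mlocked can do that. The user-space model below combines the two checks from page_evictable(); struct fake_mapping, struct fake_page and test_bit_word() are hypothetical, the last being a plain single-word stand-in for test_bit().

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

enum mapping_flags {
	AS_EIO = 0,
	AS_ENOSPC = 1,
	AS_MM_ALL_LOCKS = 2,
	AS_UNEVICTABLE = 3,
	AS_EXITING = 4,
	AS_NO_WRITEBACK_TAGS = 5,
};

struct fake_mapping {
	unsigned long flags;   /* bit AS_* set means that flag is on */
};

struct fake_page {
	struct fake_mapping *mapping;   /* result of page_mapping(); NULL for anon */
	bool mlocked;                   /* PG_mlocked */
};

/* Single-word equivalent of test_bit(nr, addr). */
static bool test_bit_word(int nr, const unsigned long *addr)
{
	return (*addr >> nr) & 1UL;
}

static int mapping_unevictable(struct fake_mapping *mapping)
{
	if (mapping)
		return test_bit_word(AS_UNEVICTABLE, &mapping->flags);
	return 0;   /* the kernel writes this as !!mapping, which is 0 here */
}

static int page_evictable(struct fake_page *page)
{
	return !mapping_unevictable(page->mapping) && !page->mlocked;
}
```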

4.6 add_page_to_lru_list

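static __always_inline void add_page_to_lru_list(struct page *page,
				struct lruvec *lruvec, enum lru_list lru)
{
	// update the LRU size counters of the node and zone: page_zonenum() returns the page's
	// zone index, hpage_nr_pages() the number of base pages (more than 1 for a THP)
	update_lru_size(lruvec, lru, page_zonenum(page), hpage_nr_pages(page));
	// list_add() inserts the page at the head of the lruvec list; reclaim takes pages from the tail
	list_add(&page->lru, &lruvec->lists[lru]);
}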
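list_add() inserts right after the list head, so the newest page sits at the front of the list and the oldest at the back, where reclaim picks pages up (head->prev). The self-contained model below reproduces the pointer updates of list_add(); struct fake_page, which embeds its list node the way struct page embeds lru, and the node_to_page() macro (a container_of-style conversion) are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

struct list_head {
	struct list_head *next, *prev;
};

static void INIT_LIST_HEAD(struct list_head *head)
{
	head->next = head->prev = head;
}

/* Same pointer updates as the kernel's list_add(): insert after head. */
static void list_add(struct list_head *new, struct list_head *head)
{
	struct list_head *next = head->next;

	next->prev = new;
	new->next = next;
	new->prev = head;
	head->next = new;
}

/* Hypothetical page with an embedded list node, like struct page::lru. */
struct fake_page {
	int id;
	struct list_head lru;
};

/* Convert a list node back to its containing page. */
#define node_to_page(ptr) \
	((struct fake_page *)((char *)(ptr) - offsetof(struct fake_page, lru)))
```

After adding pages 0, 1 and 2 in that order, the head's next is page 2 (newest) and its prev is page 0 (oldest).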

4.6.1 update_lru_size

static __always_inline void update_lru_size(struct lruvec *lruvec,
				enum lru_list lru, enum zone_type zid,
				int nr_pages)
{
	// update node and zone statistics through __update_lru_size()
	__update_lru_size(lruvec, lru, zid, nr_pages);
#ifdef CONFIG_MEMCG
	// with memory cgroups enabled, also update the per-node memcg counters (mem_cgroup_per_node)
	mem_cgroup_update_lru_size(lruvec, lru, zid, nr_pages);
#endif
}

4.6.2 __update_lru_size

static __always_inline void __update_lru_size(struct lruvec *lruvec,
				enum lru_list lru, enum zone_type zid,
				int nr_pages)
{
	// the node this lruvec belongs to
	struct pglist_data *pgdat = lruvec_pgdat(lruvec);
	// update node statistics
	__mod_node_page_state(pgdat, NR_LRU_BASE + lru, nr_pages);
	// update zone statistics
	__mod_zone_page_state(&pgdat->node_zones[zid],
				NR_ZONE_LRU_BASE + lru, nr_pages);
}

4.6.3 __mod_node_page_state

enum node_stat_item {
	NR_LRU_BASE,
	NR_INACTIVE_ANON = NR_LRU_BASE, /* must match order of LRU_[IN]ACTIVE */
	NR_ACTIVE_ANON, /* " " " " " */
	NR_INACTIVE_FILE, /* " " " " " */
	NR_ACTIVE_FILE, /* " " " " " */
	NR_UNEVICTABLE, /* " " " " " */
	...
	NR_VM_NODE_STAT_ITEMS
};

// !CONFIG_SMP version from include/linux/vmstat.h; on SMP kernels, mm/vmstat.c first
// accumulates deltas in per-CPU counters and folds them into the atomic counters below
// only when a threshold is exceeded
static inline void __mod_node_page_state(struct pglist_data *pgdat,
			enum node_stat_item item, int delta)
{
	// delta is the change in the number of pages (negative when pages are removed)
	node_page_state_add(delta, pgdat, item);
}

static inline void node_page_state_add(long x, struct pglist_data *pgdat,
				 enum node_stat_item item)
{
	// update the node's vm_stat counter
	atomic_long_add(x, &pgdat->vm_stat[item]);
	// update the global vm_node_stat counter
	atomic_long_add(x, &vm_node_stat[item]);
}

4.6.4 __mod_zone_page_state

enum zone_stat_item {
	/* First 128 byte cacheline (assuming 64 bit words) */
	NR_FREE_PAGES,
	NR_ZONE_LRU_BASE, /* Used only for compaction and reclaim retry */
	NR_ZONE_INACTIVE_ANON = NR_ZONE_LRU_BASE,
	NR_ZONE_ACTIVE_ANON,
	NR_ZONE_INACTIVE_FILE,
	NR_ZONE_ACTIVE_FILE,
	NR_ZONE_UNEVICTABLE,
	...
	NR_VM_ZONE_STAT_ITEMS };

// !CONFIG_SMP version from include/linux/vmstat.h; SMP kernels batch updates in per-CPU counters first
static inline void __mod_zone_page_state(struct zone *zone,
			enum zone_stat_item item, long delta)
{
	// delta is the change in the number of pages (negative when pages are removed)
	zone_page_state_add(delta, zone, item);
}

static inline void zone_page_state_add(long x, struct zone *zone,
				 enum zone_stat_item item)
{
	// update the zone's vm_stat counter
	atomic_long_add(x, &zone->vm_stat[item]);
	// update the global vm_zone_stat counter
	atomic_long_add(x, &vm_zone_stat[item]);
}
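Because the LRU entries of node_stat_item and zone_stat_item are declared in the same order as enum lru_list, the code can index them as NR_LRU_BASE + lru and NR_ZONE_LRU_BASE + lru. The user-space model below follows the !CONFIG_SMP path for a single node with a single zone, using plain long arrays instead of atomic_long_t (an assumption of the sketch) and trimmed copies of the enums. Removing a page passes a negative count, so adding and then removing a hypothetical 512-page compound page leaves the counters unchanged.

```c
#include <assert.h>

enum lru_list {
	LRU_INACTIVE_ANON, LRU_ACTIVE_ANON, LRU_INACTIVE_FILE,
	LRU_ACTIVE_FILE, LRU_UNEVICTABLE, NR_LRU_LISTS
};

/* Trimmed copies of the stat enums: only the LRU entries are kept. */
enum node_stat_item {
	NR_LRU_BASE,
	NR_INACTIVE_ANON = NR_LRU_BASE,
	NR_ACTIVE_ANON,
	NR_INACTIVE_FILE,
	NR_ACTIVE_FILE,
	NR_UNEVICTABLE,
	NR_VM_NODE_STAT_ITEMS
};

enum zone_stat_item {
	NR_FREE_PAGES,
	NR_ZONE_LRU_BASE,
	NR_ZONE_INACTIVE_ANON = NR_ZONE_LRU_BASE,
	NR_ZONE_ACTIVE_ANON,
	NR_ZONE_INACTIVE_FILE,
	NR_ZONE_ACTIVE_FILE,
	NR_ZONE_UNEVICTABLE,
	NR_VM_ZONE_STAT_ITEMS
};

/* Per-node, global node, per-zone and global zone counters. */
static long node_stat[NR_VM_NODE_STAT_ITEMS], vm_node_stat[NR_VM_NODE_STAT_ITEMS];
static long zone_stat[NR_VM_ZONE_STAT_ITEMS], vm_zone_stat[NR_VM_ZONE_STAT_ITEMS];

/* Model of __update_lru_size() for one node with one zone. */
static void update_lru_size_model(enum lru_list lru, int nr_pages)
{
	node_stat[NR_LRU_BASE + lru] += nr_pages;       /* node_page_state_add */
	vm_node_stat[NR_LRU_BASE + lru] += nr_pages;
	zone_stat[NR_ZONE_LRU_BASE + lru] += nr_pages;  /* zone_page_state_add */
	vm_zone_stat[NR_ZONE_LRU_BASE + lru] += nr_pages;
}
```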

5. mark_page_accessed (second-chance algorithm)

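// When a page is accessed, mark_page_accessed() handles three PG_active/PG_referenced combinations:
// combination 1: inactive, unreferenced -> inactive, referenced
// combination 2: inactive, referenced   -> active, unreferenced
// combination 3: active, unreferenced   -> active, referenced
// (an active, referenced page is left unchanged)
void mark_page_accessed(struct page *page)
{
	page = compound_head(page);
	// 1. PG_active == 0: the page is inactive
	// 2. PG_unevictable == 0: the page is evictable
	// 3. PG_referenced == 1: the page has already been accessed once
	// this is combination 2: inactive,referenced -> active,unreferenced
	if (!PageActive(page) && !PageUnevictable(page) &&
			PageReferenced(page)) {
		// PG_lru == 1: the page is on an LRU list
		if (PageLRU(page))
			// activate: queue the page to move from the inactive list to the active list
			activate_page(page);
		else
			// not on an LRU list yet (presumably still in this CPU's lru_add_pvec): set PG_active
			// so the page lands on the active list when the pagevec is drained
			__lru_cache_activate_page(page);
		// clear PG_referenced
		ClearPageReferenced(page);
		// page cache: record the activation for workingset (refault) detection
		if (page_is_file_cache(page))
			workingset_activation(page);
	} else if (!PageReferenced(page)) {
		// combinations 1 and 3:
		// inactive,unreferenced -> inactive,referenced
		// active,unreferenced -> active,referenced
		// only PG_referenced needs to be set
		SetPageReferenced(page);
	}
	// clear the idle flag used by idle page tracking
	if (page_is_idle(page))
		clear_page_idle(page);
}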
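Leaving aside the LRU and pagevec plumbing, mark_page_accessed() acts as a two-bit state machine over PG_active and PG_referenced. The sketch below models only that state for an evictable page; struct page_state and mark_accessed_model() are hypothetical names.

```c
#include <assert.h>
#include <stdbool.h>

/* The two flags mark_page_accessed() looks at (evictable page assumed). */
struct page_state {
	bool active;      /* PG_active */
	bool referenced;  /* PG_referenced */
};

static void mark_accessed_model(struct page_state *s)
{
	if (!s->active && s->referenced) {
		/* second access while inactive: promote, start unreferenced */
		s->active = true;
		s->referenced = false;
	} else if (!s->referenced) {
		/* first access while inactive, or access to an unreferenced active page */
		s->referenced = true;
	}
	/* active + referenced: nothing changes */
}
```

Starting from inactive/unreferenced, four successive accesses step through inactive/referenced, active/unreferenced and active/referenced, and then stay there. A page touched only once therefore stays on the inactive list with PG_referenced set; it takes a second access while inactive to promote it.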

5.1 activate_page

// SMP (symmetric multiprocessing) build
#ifdef CONFIG_SMP
// every CPU has a pagevec for pages waiting to be activated
static DEFINE_PER_CPU(struct pagevec, activate_page_pvecs);
void activate_page(struct page *page)
{
	page = compound_head(page);
	// the page must be on an LRU list, inactive and evictable
	if (PageLRU(page) && !PageActive(page) && !PageUnevictable(page)) {
		// get this CPU's activate_page_pvecs (get_cpu_var also disables preemption)
		struct pagevec *pvec = &get_cpu_var(activate_page_pvecs);
		get_page(page);
		// same pattern as __lru_cache_add described earlier:
		// 1. pagevec_add() queues the page in the pagevec
		// 2. if the pagevec is now full (or the page is compound), activate the whole batch
		if (!pagevec_add(pvec, page) || PageCompound(page))
			pagevec_lru_move_fn(pvec, __activate_page, NULL);
		// pairs with get_cpu_var(): re-enable preemption
		put_cpu_var(activate_page_pvecs);
	}
}

#