Linux inter-core communication: an analysis of the RPMsg architecture

Posted WindLOR


The i.MX8 is used as the example throughout.

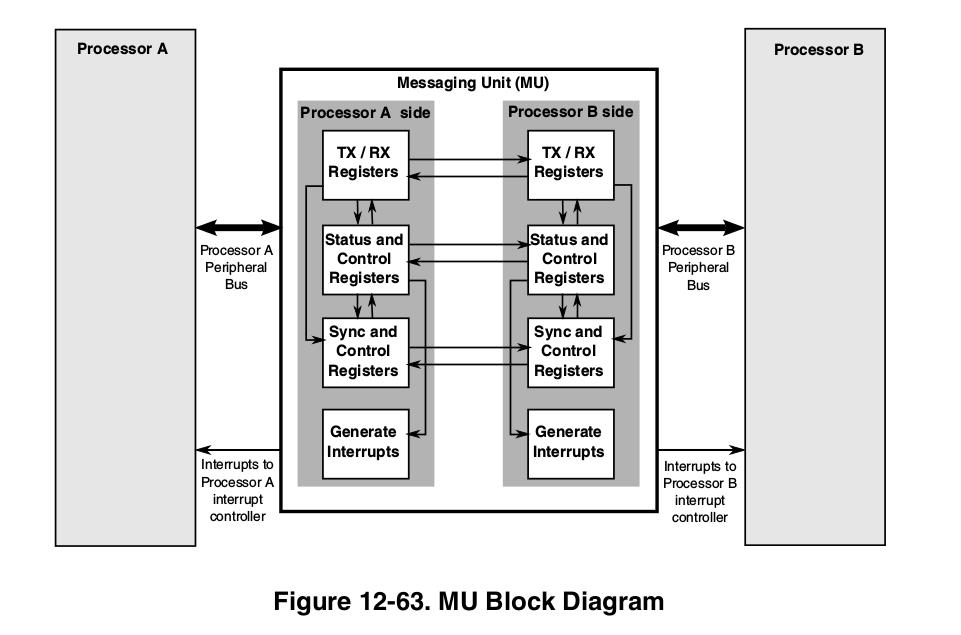

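At the lowest hardware level, the A core and the M core communicate through a dedicated hardware block called the MU (Messaging Unit), as shown in the figure.

Linux RPMsg is a message-passing mechanism implemented on top of the virtio framework.

VirtIO is a general-purpose framework for implementing virtual I/O. Typical uses are virtual PCI devices, network cards, and disks, and hypervisors such as KVM rely on it.

Alongside virtio there is virtio-ring (vring), which implements virtio's concrete communication mechanism and data flow.

The virtio layer is the control layer: it handles the notification mechanism between front end and back end (kick, notify) and the control flow. The vring layer handles the actual data transfer.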

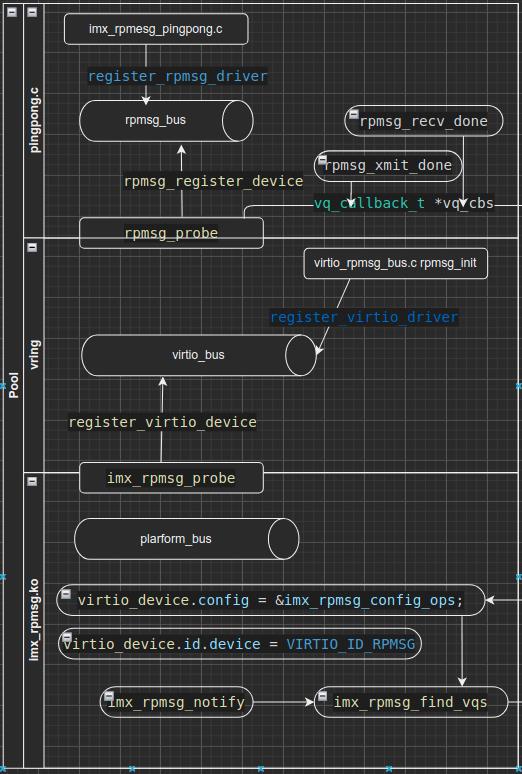

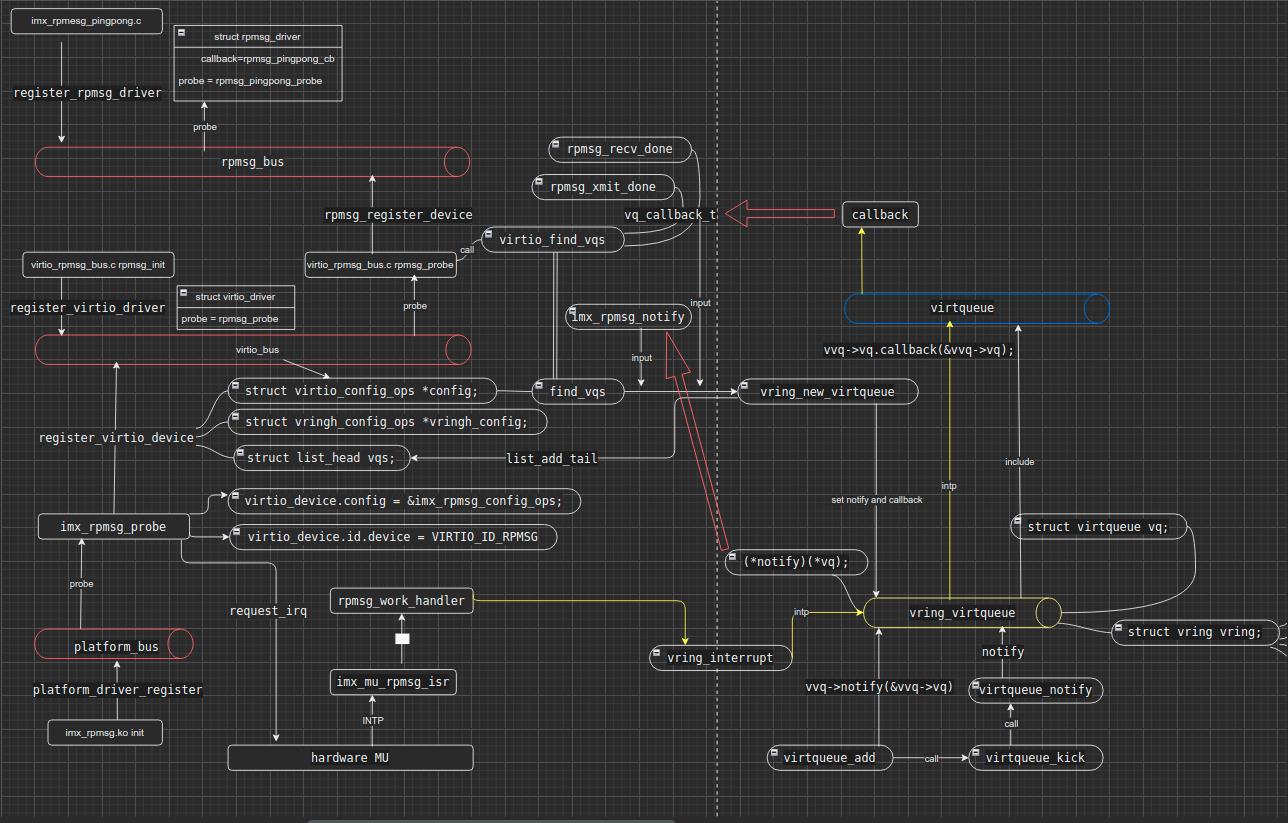
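To make the vring's role as the data path more concrete, here is a minimal userspace sketch of how much memory a split vring occupies. The struct layouts follow the split-ring format of the virtio specification; the function name and the use of plain `stdint` types are choices made for this illustration, not kernel code.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Simplified copies of the split-ring element layouts. */
struct vring_desc {
    uint64_t addr;   /* buffer address as seen by the other side */
    uint32_t len;    /* buffer length in bytes */
    uint16_t flags;  /* e.g. "chained" or "write-only" markers */
    uint16_t next;   /* index of the next descriptor in a chain */
};

struct vring_used_elem {
    uint32_t id;     /* head index of the consumed descriptor chain */
    uint32_t len;    /* number of bytes written into the buffer */
};

/* Total bytes needed for a split ring with `num` entries. */
static size_t vring_size(unsigned int num, size_t align)
{
    assert(align != 0 && (align & (align - 1)) == 0); /* power of two */

    /* descriptor table, then avail ring: flags, idx, ring[num], used_event */
    size_t avail_end = sizeof(struct vring_desc) * num
                     + sizeof(uint16_t) * (3 + num);
    /* the used ring starts at the next `align` boundary */
    size_t used_start = (avail_end + align - 1) & ~(align - 1);
    /* used ring: flags, idx, ring[num], avail_event */
    return used_start + sizeof(uint16_t) * 3
                      + sizeof(struct vring_used_elem) * num;
}
```

With 256 entries and 4096-byte alignment this comes to 10246 bytes: the descriptor table and avail ring fill the first two pages, and the used ring follows on the next page boundary.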

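Seen as a whole, the layers relate as follows:

At the bottom is platform_bus. It reads the configuration from the dts and initializes the related objects, such as the virtio_device with its config operations table and device ID, then registers them on virtio_bus.

The relevant dts configuration: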

    &rpmsg {
        /*
         * 64K for one rpmsg instance:
         */
        vdev-nums = <2>;
        reg = <0x0 0x90000000 0x0 0x20000>;
        status = "okay";
    };

The main initialization happens in imx_rpmsg_probe. The key steps are:

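Register the MU hardware interrupt:

    ret = request_irq(irq, imx_mu_rpmsg_isr, IRQF_EARLY_RESUME | IRQF_SHARED,
                      "imx-mu-rpmsg", rpdev);

Initialize the MU hardware:

    ret = imx_rpmsg_mu_init(rpdev);

Set up the delayed work item that processes the data delivered by the MU interrupt:

    INIT_DELAYED_WORK(&(rpdev->rpmsg_work), rpmsg_work_handler);

Create the notifier chain that connects to the virtio queues:

    BLOCKING_INIT_NOTIFIER_HEAD(&(rpdev->notifier));

Initialize and register each virtio_device: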

    for (j = 0; j < rpdev->vdev_nums; j++) {
        pr_debug("%s rpdev%d vdev%d: vring0 0x%x, vring1 0x%x\n",
                 __func__, rpdev->core_id, rpdev->vdev_nums,
                 rpdev->ivdev[j].vring[0],
                 rpdev->ivdev[j].vring[1]);
        rpdev->ivdev[j].vdev.id.device = VIRTIO_ID_RPMSG;
        rpdev->ivdev[j].vdev.config = &imx_rpmsg_config_ops;
        rpdev->ivdev[j].vdev.dev.parent = &pdev->dev;
        rpdev->ivdev[j].vdev.dev.release = imx_rpmsg_vproc_release;
        rpdev->ivdev[j].base_vq_id = j * 2;

        ret = register_virtio_device(&rpdev->ivdev[j].vdev);
        if (ret) {
            pr_err("%s failed to register rpdev: %d\n",
                   __func__, ret);
            return ret;
        }
    }
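The dts fragment reserves 0x20000 bytes at 0x90000000 for two vdevs, with 64K per rpmsg instance, and the probe loop gives each vdev two vrings and `base_vq_id = j * 2`. The sketch below shows one way such a region could be carved up. The even split of each instance into two 32 KiB vring regions, and the names `vdev_layout` and `layout_vdevs`, are assumptions made for illustration rather than the driver's actual code.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define RPMSG_INSTANCE_SIZE 0x10000u /* 64K per rpmsg instance (dts comment) */
#define VRING_REGION_SIZE   0x8000u  /* assumption: two equal vring regions */

struct vdev_layout {
    uint32_t vring[2];  /* physical base of vring0 and vring1 */
    int base_vq_id;     /* j * 2, as in the probe loop */
};

/* Fill out[0..vdev_nums-1]; returns 0, or -1 if the region is too small. */
static int layout_vdevs(uint32_t reg_base, uint32_t reg_size,
                        unsigned int vdev_nums, struct vdev_layout *out)
{
    assert(out != NULL);

    if ((uint64_t)vdev_nums * RPMSG_INSTANCE_SIZE > reg_size)
        return -1;

    for (unsigned int j = 0; j < vdev_nums; j++) {
        uint32_t start = reg_base + j * RPMSG_INSTANCE_SIZE;

        out[j].vring[0] = start;
        out[j].vring[1] = start + VRING_REGION_SIZE;
        out[j].base_vq_id = (int)(j * 2);
    }
    return 0;
}
```

Under these assumptions, the dts values above place vdev0 at 0x90000000/0x90008000 and vdev1 at 0x90010000/0x90018000, and a third vdev would not fit in the reserved region.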

Note the config table of the virtio_device, set by rpdev->ivdev[j].vdev.config = &imx_rpmsg_config_ops:

    static struct virtio_config_ops imx_rpmsg_config_ops = {
        .get_features      = imx_rpmsg_get_features,
        .finalize_features = imx_rpmsg_finalize_features,
        .find_vqs          = imx_rpmsg_find_vqs,
        .del_vqs           = imx_rpmsg_del_vqs,
        .reset             = imx_rpmsg_reset,
        .set_status        = imx_rpmsg_set_status,
        .get_status        = imx_rpmsg_get_status,
    };

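    imx_rpmsg_find_vqs
    --> rp_find_vq
    --> ioremap_nocache
    --> vring_new_virtqueue(... imx_rpmsg_notify, callback ...)

Note how the callback gets registered. This happens in rpmsg_bus:

    rpmsg_probe
    --> vq_callback_t *vq_cbs[] = { rpmsg_recv_done, rpmsg_xmit_done };
    --> virtio_find_vqs(vdev, 2, vqs, vq_cbs, names, NULL);

The imx_rpmsg_notify and callback functions registered here are later invoked by the virtio_bus framework.

In the middle, virtio_bus links the layers above and below it and provides a uniform, standard set of virtio queue operations such as virtqueue_add and virtqueue_kick.

struct virtqueue exposes a single callback function to the outside, which signals that the data in the queue has changed.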

    struct virtqueue {
        struct list_head list;
        void (*callback)(struct virtqueue *vq);
        const char *name;
        struct virtio_device *vdev;
        unsigned int index;
        unsigned int num_free;
        void *priv;
    };

In fact, virtqueue only provides a standard queue interface. The concrete implementation lives in vring_virtqueue:

    struct vring_virtqueue {
        struct virtqueue vq;

        /* Actual memory layout for this queue */
        struct vring vring;
        /*
         * The concrete queue layout. struct vring contains:
         *     unsigned int num;
         *     struct vring_desc *desc;
         *     struct vring_avail *avail;
         *     struct vring_used *used;
         */

        /* How to notify other side. FIXME: commonalize hcalls! */
        bool (*notify)(struct virtqueue *vq);

        ...

        /* Per-descriptor state. */
        struct vring_desc_state desc_state[];
    };

The callback is triggered from vring_interrupt:

    irqreturn_t vring_interrupt(int irq, void *_vq)
    {
        /* Only the virtqueue is visible outside; recover its vring_virtqueue wrapper */
        struct vring_virtqueue *vq = to_vvq(_vq);

        if (!more_used(vq)) {
            pr_debug("virtqueue interrupt with no work for %p\n", vq);
            return IRQ_NONE;
        }

        if (unlikely(vq->broken))
            return IRQ_HANDLED;

        pr_debug("virtqueue callback for %p (%p)\n", vq, vq->vq.callback);

        /* Invoke the virtqueue's callback */
        if (vq->vq.callback)
            vq->vq.callback(&vq->vq);

        return IRQ_HANDLED;
    }
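The wrapper relationship between virtqueue and vring_virtqueue can be tried out in user space. The following is a minimal sketch, not the kernel implementation: the trimmed-down `vring_virtqueue`, the `demo_` names, and the two index fields that stand in for `more_used()` are all assumptions chosen to keep the example self-contained.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Userspace stand-in for the kernel's container_of(). */
#define container_of(ptr, type, member) \
    ((type *)((char *)(ptr) - offsetof(type, member)))

struct virtqueue {
    void (*callback)(struct virtqueue *vq);
    const char *name;
};

/* Hypothetical, trimmed-down wrapper: only what the dispatch needs. */
struct vring_virtqueue {
    struct virtqueue vq;
    unsigned short last_used_idx;  /* how far this side has consumed */
    unsigned short used_idx;       /* how far the other side has produced */
    bool broken;
};

enum demo_irqreturn { DEMO_IRQ_NONE, DEMO_IRQ_HANDLED };

static enum demo_irqreturn demo_vring_interrupt(struct virtqueue *_vq)
{
    struct vring_virtqueue *vq;

    assert(_vq != NULL);
    /* Callers only hold the virtqueue; step back to the enclosing wrapper. */
    vq = container_of(_vq, struct vring_virtqueue, vq);

    if (vq->last_used_idx == vq->used_idx)
        return DEMO_IRQ_NONE;          /* nothing new in the used ring */
    if (vq->broken)
        return DEMO_IRQ_HANDLED;
    if (vq->vq.callback)
        vq->vq.callback(&vq->vq);      /* e.g. a receive-done handler */
    return DEMO_IRQ_HANDLED;
}

/* Example callback that only counts invocations. */
static int demo_rx_calls;

static void demo_recv_done(struct virtqueue *vq)
{
    (void)vq;
    demo_rx_calls++;
}
```

Registering `demo_recv_done` as the callback and advancing `used_idx` makes `demo_vring_interrupt` invoke it once; with no new used entries the function reports that there was no work.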

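Combined with the interrupt, the overall flow is:

    imx_mu_rpmsg_isr
    --> rpmsg_work_handler
    --> vring_interrupt
    --> virtqueue.callback

vring_virtqueue also contains a notify function, which tells the other side that the queue has changed.

virtqueue_kick and virtqueue_notify trigger notify, and virtqueue_add can as well, because it kicks internally in a rare corner case.

The notify implementation is imx_rpmsg_notify, which writes the MU register to send the data: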

    /* kick the remote processor, and let it know which virtqueue to poke at */
    static bool imx_rpmsg_notify(struct virtqueue *vq)
    {
        unsigned int mu_rpmsg = 0;
        struct imx_rpmsg_vq_info *rpvq = vq->priv;

        mu_rpmsg = rpvq->vq_id << 16;
        mutex_lock(&rpvq->rpdev->lock);
        /*
         * Send the index of the triggered virtqueue as the mu payload.
         * Use the timeout MU send message here.
         * Since that M4 core may not be loaded, and the first MSG may
         * not be handled by M4 when multi-vdev is enabled.
         * To make sure that the message wouldn't be discarded when M4
         * is running normally or in the suspend mode. Only use
         * the timeout mechanism by the first notify when the vdev is
         * registered.
         */
        if (unlikely(rpvq->rpdev->first_notify > 0)) {
            rpvq->rpdev->first_notify--;
            MU_SendMessageTimeout(rpvq->rpdev->mu_base, 1, mu_rpmsg, 200);
        } else {
            MU_SendMessage(rpvq->rpdev->mu_base, 1, mu_rpmsg);
        }
        mutex_unlock(&rpvq->rpdev->lock);

        return true;
    }
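The MU payload is just the virtqueue id shifted into the upper 16 bits. A small sketch of the encoding and a possible decoding on the receiving side follows. The decode step assumes that `vq_id` equals `base_vq_id` (which is `j * 2` in the probe loop) plus the queue index within the vdev; that relationship, and the `mu_` helper names, are assumptions for illustration.

```c
#include <assert.h>
#include <stdint.h>

/* Pack the virtqueue id the way imx_rpmsg_notify does: vq_id << 16. */
static uint32_t mu_encode(unsigned int vq_id)
{
    assert(vq_id <= 0xFFFFu);  /* must fit in the upper half-word */
    return (uint32_t)vq_id << 16;
}

struct mu_target {
    unsigned int vdev_index;   /* which virtio device */
    unsigned int queue_index;  /* 0 or 1 within that device */
};

/* Assumed inverse: two queues per vdev, numbered from base_vq_id = j * 2. */
static struct mu_target mu_decode(uint32_t payload)
{
    unsigned int vq_id = payload >> 16;
    struct mu_target t = { vq_id / 2, vq_id % 2 };

    return t;
}
```

For example, virtqueue id 3 is sent as 0x00030000 and, under the assumption above, decodes to the second queue of the second vdev.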

The top layer can be seen as the applications built on rpmsg. They attach to the rpmsg_bus, which also offers a standard set of operations such as rpmsg_send, rpmsg_sendto, and rpmsg_poll.

The rpmsg_bus layer also has the concept of an rpmsg_endpoint, with a matching rpmsg_endpoint_ops that contains send, sendto, and related operations. I have not studied it in depth yet.

    static const struct rpmsg_endpoint_ops virtio_endpoint_ops = {
        .destroy_ept        = virtio_rpmsg_destroy_ept,
        .send               = virtio_rpmsg_send,
        .sendto             = virtio_rpmsg_sendto,
        .send_offchannel    = virtio_rpmsg_send_offchannel,
        .trysend            = virtio_rpmsg_trysend,
        .trysendto          = virtio_rpmsg_trysendto,
        .trysend_offchannel = virtio_rpmsg_trysend_offchannel,
    };

The send flow is:

    rpmsg_send
    --> rpmsg_endpoint.ops->send
    --> virtio_rpmsg_send
    --> virtqueue_add_outbuf    (fill the queue with data)
    --> virtqueue_kick          (notify the remote side)
    --> virtqueue_notify
    --> imx_rpmsg_notify
    --> MU_REG_WRITE
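Before the buffer is added to the queue, the payload is wrapped in an rpmsg header. The sketch below shows that framing step in user space. The field order is modeled on struct rpmsg_hdr from the virtio rpmsg bus; the 512-byte buffer size, the helper name `demo_fill_tx_buffer`, and the use of native-endian `stdint` types are assumptions for illustration.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define RPMSG_BUF_SIZE 512  /* assumption: size of one rpmsg buffer */

struct rpmsg_hdr {
    uint32_t src;       /* source endpoint address */
    uint32_t dst;       /* destination endpoint address */
    uint32_t reserved;
    uint16_t len;       /* payload length in bytes */
    uint16_t flags;
    uint8_t  data[];    /* payload follows the header */
};

/* Frame `payload` into `buf`; returns total bytes used or -EMSGSIZE. */
static int demo_fill_tx_buffer(void *buf, uint32_t src, uint32_t dst,
                               const void *payload, size_t len)
{
    struct rpmsg_hdr *msg = buf;

    assert(buf != NULL && payload != NULL);

    /* the header and payload must fit into one buffer */
    if (len > RPMSG_BUF_SIZE - sizeof(struct rpmsg_hdr))
        return -EMSGSIZE;

    msg->src = src;
    msg->dst = dst;
    msg->reserved = 0;
    msg->len = (uint16_t)len;
    msg->flags = 0;
    memcpy(msg->data, payload, len);

    return (int)(sizeof(struct rpmsg_hdr) + len);
}
```

With a 16-byte header and the assumed 512-byte buffer, the largest payload a single message can carry is 496 bytes, which is why long data has to be split by the caller.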

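By default the rpmsg_bus provides two callbacks, rpmsg_recv_done and rpmsg_xmit_done, to notify the rpmsg application layer above. They indicate that data has been received and that a transmission has completed, respectively.

The receive flow is:

    imx_mu_rpmsg_isr
    --> rpmsg_work_handler
    --> vring_interrupt
    --> virtqueue.callback
    --> rpmsg_recv_done or rpmsg_xmit_done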

    /* called when an rx buffer is used, and it's time to digest a message */
    static void rpmsg_recv_done(struct virtqueue *rvq)
    {
        struct virtproc_info *vrp = rvq->vdev->priv;
        struct device *dev = &rvq->vdev->dev;
        struct rpmsg_hdr *msg;
        unsigned int len, msgs_received = 0;
        int err;

        msg = virtqueue_get_buf(rvq, &len);
        if (!msg) {
            dev_err(dev, "uhm, incoming signal, but no used buffer ?\n");
            return;
        }

        while (msg) {
            err = rpmsg_recv_single(vrp, dev, msg, len);
            if (err)
                break;

            msgs_received++;

            msg = virtqueue_get_buf(rvq, &len);
        }

        dev_dbg(dev, "Received %u messages\n", msgs_received);

        /* tell the remote processor we added another available rx buffer */
        if (msgs_received)
            /* kick the receive queue */
            virtqueue_kick(vrp->rvq);
    }

    static void rpmsg_xmit_done(struct virtqueue *svq)
    {
        struct virtproc_info *vrp = svq->vdev->priv;

        dev_dbg(&svq->vdev->dev, "%s\n", __func__);

        /* wake up potential senders that are waiting for a tx buffer */
        wake_up_interruptible(&vrp->sendq);
    }

The overall flow is as follows:

RPMsg driver source code analysis

    // SPDX-License-Identifier: GPL-2.0+
    /*
     * Copyright (C) STMicroelectronics 2019 - All Rights Reserved
     * Author: Jean-Philippe Romain <jean-philippe.romain@st.com>
     */

    #include <linux/module.h>
    #include <linux/kernel.h>
    #include <linux/rpmsg.h>
    #include <linux/slab.h>
    #include <linux/fs.h>
    #include <linux/mm.h>
    #include <linux/dma-mapping.h>
    #include <linux/miscdevice.h>
    #include <linux/eventfd.h>
    #include <linux/of_platform.h>
    #include <linux/list.h>

    #define RPMSG_SDB_DRIVER_VERSION "1.0"

    /*
     * Static global variables
     */
    static const char rpmsg_sdb_driver_name[] = "stm32-rpmsg-sdb";

    static int LastBufferId;

    struct rpmsg_sdb_ioctl_set_efd {
        int bufferId, eventfd;
    };

    struct rpmsg_sdb_ioctl_get_data_size {
        int bufferId;
        uint32_t size;
    };

    /* ioctl numbers */
    /* _IOW means userland is writing and kernel is reading */
    /* _IOR means userland is reading and kernel is writing */
    /* _IOWR means userland and kernel can both read and write */
    #define RPMSG_SDB_IOCTL_SET_EFD _IOW('R', 0x00, struct rpmsg_sdb_ioctl_set_efd *)
    #define RPMSG_SDB_IOCTL_GET_DATA_SIZE _IOWR('R', 0x01, struct rpmsg_sdb_ioctl_get_data_size *)

    struct sdb_buf_t {
        int index;                   /* index of buffer */
        size_t size;                 /* buffer size */
        size_t writing_size;         /* size of data written by copro */
        dma_addr_t paddr;            /* physical address */
        void *vaddr;                 /* virtual address */
        void *uaddr;                 /* mapped address for userland */
        struct eventfd_ctx *efd_ctx; /* eventfd context */
        struct list_head buflist;    /* reference in the buffers list */
    };

    struct rpmsg_sdb_t {
        struct mutex mutex;           /* mutex to protect the ioctls */
        struct miscdevice mdev;       /* misc device ref */
        struct rpmsg_device *rpdev;   /* handle rpmsg device */
        struct list_head buffer_list; /* buffer instances list */
    };

    /*
     * For reference, struct rpmsg_device is defined as:
     *
     * struct rpmsg_device {
     *     struct device dev;
     *     struct rpmsg_device_id id;
     *     char *driver_override;
     *     u32 src;
     *     u32 dst;
     *     struct rpmsg_endpoint *ept;
     *     bool announce;
     *     const struct rpmsg_device_ops *ops;
     * };
     */

    struct device *rpmsg_sdb_dev;

    static int rpmsg_sdb_format_txbuf_string(struct sdb_buf_t *buffer, char *bufinfo_str)
    {
        /*
         * On success, sprintf returns the number of characters written,
         * not counting the terminating null character.
         */
        return sprintf(bufinfo_str, "B%dA%08xL%08x", buffer->index, buffer->paddr, buffer->size);
    }
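The "B%dA%08xL%08x" format produces 19 characters plus the decimal digits of the index. As a hedged userspace sketch, the same string can be built with a length check using snprintf; the helper name `demo_format_buf_info` and the 32-bit address and size parameters are assumptions for illustration, not the driver's interface.

```c
#include <assert.h>
#include <stdint.h>
#include <stdio.h>
#include <string.h>

/*
 * Build "B<index>A<paddr>L<size>" into dst.
 * Returns the string length, or -1 if dst is too small.
 */
static int demo_format_buf_info(char *dst, size_t dst_size, int index,
                                uint32_t paddr, uint32_t size)
{
    int n;

    assert(dst != NULL);

    n = snprintf(dst, dst_size, "B%dA%08xL%08x",
                 index, (unsigned int)paddr, (unsigned int)size);
    /* snprintf reports the length it wanted; detect truncation */
    if (n < 0 || (size_t)n >= dst_size)
        return -1;
    return n;
}
```

With a 21-byte destination, a single-digit index yields a 20-character string that fits, while a two-digit index needs 22 bytes including the terminator and is rejected by this check.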

    /* Decode the received string */
    static long rpmsg_sdb_decode_rxbuf_string(char *rxbuf_str, int *buffer_id, size_t *size)
    {
        int ret = 0;
        char *sub_str;
        long bsize;
        long bufid;
        /* strsep expects a NUL-terminated delimiter string */
        const char delimiter[] = "L";

        //pr_err("%s: rxbuf_str:%s\n", __func__, rxbuf_str);

        /* Get first part containing the buffer id */
        /*
         * char *strsep(char **s, const char *delim);
         * s is the string to split and delim is the set of delimiter characters.
         * Each call returns the next token from the start of s, and NULL
         * once there are no tokens left.
         */
        sub_str = strsep(&rxbuf_str, delimiter);

        //pr_err("%s: sub_str:%s\n", __func__, sub_str);

        /* Save Buffer id and size: template BxLyyyyyyyy */
        /*
         * int kstrtol(const char *s, unsigned int base, long *res);
         * s is the string to convert, base is its numeric base, and res
         * receives the result. It returns 0 on success and a negative
         * errno on failure.
         * So for an incoming "BxLyyyyyyyy":
         *     sub_str = "Bx"
         *     bufid   = x        -> buffer_id
         *     bsize   = yyyyyyyy -> size
         */
        ret = kstrtol(&sub_str[1], 10, &bufid);
        if (ret < 0) {
            pr_err("%s: extract of buffer id failed(%d)", __func__, ret);
            goto out;
        }

        ret = kstrtol(&rxbuf_str[2], 16, &bsize);
        if (ret < 0) {
            pr_err("%s: extract of buffer size failed(%d)", __func__, ret);
            goto out;
        }

        *size = (size_t)bsize;
        *buffer_id = (int)bufid;

    out:
        return ret;
    }
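The "BxLyyyyyyyy" template can be exercised in user space with the standard C library. This is a minimal sketch under stated assumptions: it uses strtol and strtoul instead of the kernel's strsep and kstrtol, it reads the hexadecimal size directly after the 'L' as the template suggests (the driver code starts at `&rxbuf_str[2]`), and the name `demo_decode_rxbuf` is hypothetical.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>
#include <stdlib.h>

/* Parse "B<decimal id>L<hex size>"; returns 0 or -EINVAL. */
static int demo_decode_rxbuf(const char *s, int *buffer_id, size_t *size)
{
    char *end;
    const char *hex;
    long id;
    unsigned long sz;

    assert(s != NULL && buffer_id != NULL && size != NULL);

    if (s[0] != 'B')
        return -EINVAL;

    /* decimal buffer id, which must be followed by the 'L' separator */
    id = strtol(s + 1, &end, 10);
    if (end == s + 1 || *end != 'L')
        return -EINVAL;

    /* hexadecimal size, which must run to the end of the string */
    hex = end + 1;
    sz = strtoul(hex, &end, 16);
    if (end == hex || *end != '\0')
        return -EINVAL;

    *buffer_id = (int)id;
    *size = (size_t)sz;
    return 0;
}
```

Given "B3L00001000", the sketch yields buffer id 3 and a size of 0x1000 bytes; strings without the leading 'B' or the 'L' separator are rejected.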

    /* Send the buffer description to the remote processor */
    static int rpmsg_sdb_send_buf_info(struct rpmsg_sdb_t *rpmsg_sdb, struct sdb_buf_t *buffer)
    {
        int count = 0, ret = 0;
        const unsigned char *tbuf;
        char mybuf[21];
        int msg_size;
        struct rpmsg_device *_rpdev;

        _rpdev = rpmsg_sdb->rpdev;

        /*
         * rpmsg_get_buffer_size returns the size of the data that can be
         * sent in one message on the endpoint created for this rpmsg device.
         */
        msg_size = rpmsg_get_buffer_size(_rpdev->ept);
        if (msg_size < 0)
            return msg_size;

        /*
         * Build the "B%dA%08xL%08x" string in mybuf from the members of
         * struct sdb_buf_t *buffer.
         */
        count = rpmsg_sdb_format_txbuf_string(buffer, mybuf);

        tbuf = &mybuf[0];
        do {
            /* send a message to our remote processor */
            ret = rpmsg_send(_rpdev->ept, (void *)tbuf,
                             count > msg_size ? msg_size : count);
            if (ret) {
                dev_err(&_rpdev->dev, "rpmsg_send failed: %d\n", ret);
                return ret;
            }

            if (count > msg_size) {
                count -= msg_size;
                tbuf += msg_size;
            } else {
                count = 0;
            }
        } while (count > 0);

        return count;
    }
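The loop above splits the string into pieces of at most msg_size bytes. The same chunking logic can be sketched in user space with a mock transport in place of rpmsg_send. The callback type, the `demo_` names, and the statistics struct are assumptions for illustration; unlike the do/while above, this version sends nothing when the count is zero.

```c
#include <assert.h>
#include <stddef.h>

/* Transport callback: returns 0 on success, non-zero on error. */
typedef int (*demo_send_fn)(const void *data, size_t len, void *ctx);

/* Send `count` bytes in chunks of at most `msg_size` bytes. */
static int demo_send_chunked(const void *data, size_t count, size_t msg_size,
                             demo_send_fn send, void *ctx)
{
    const unsigned char *p = data;

    assert(msg_size > 0 && send != NULL);

    while (count > 0) {
        size_t n = count > msg_size ? msg_size : count;
        int ret = send(p, n, ctx);

        if (ret)
            return ret;   /* stop at the first failed chunk */
        p += n;
        count -= n;
    }
    return 0;
}

/* Mock transport that records how many chunks and bytes it saw. */
struct demo_stats {
    int chunks;
    size_t bytes;
};

static int demo_mock_send(const void *data, size_t len, void *ctx)
{
    struct demo_stats *st = ctx;

    (void)data;
    st->chunks++;
    st->bytes += len;
    return 0;
}
```

Sending a 20-byte string with an 8-byte message limit results in three calls to the transport, carrying 8, 8, and 4 bytes.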

    static int rpmsg_sdb_mmap(struct file *file, struct vm_area_struct *vma)
    {
        /*
         * struct vm_area_struct describes one virtual memory area (VMA).
         * NumPages: number of pages
         * align:    get_order(size), the allocation order derived from size
         * prot:     vma->vm_page_prot, the access permissions of this VMA
         */
        unsigned long vsize = vma->vm_end - vma->vm_start;
        unsigned long size = PAGE_ALIGN(vsize); /* round up to a page boundary */
        unsigned long NumPages = size >> PAGE_SHIFT;
        unsigned long align = get_order(size);
        pgprot_t prot = vma->vm_page_prot;
        struct rpmsg_sdb_t *_rpmsg_sdb;
        struct sdb_buf_t *_buffer;

        if (align > CONFIG_CMA_ALIGNMENT)
            align = CONFIG_CMA_ALIGNMENT;

        if (rpmsg_sdb_dev == NULL)
            return -ENOMEM;

        rpmsg_sdb_dev->coherent_dma_mask = DMA_BIT_MASK(32);
        rpmsg_sdb_dev->dma_mask = &rpmsg_sdb_dev->coherent_dma_mask;

        _rpmsg_sdb = container_of(file->private_data, struct rpmsg_sdb_t,
                                  mdev);

        /* Find the last buffer entry, which is the last one created */
        if (!list_empty(&_rpmsg_sdb->buffer_list)) {
            /* list_last_entry returns the last element of the list */
            _buffer = list_last_entry(&_rpmsg_sdb->buffer_list,
                                      struct sdb_buf_t, buflist);

            _buffer->uaddr = NULL;
            _buffer->size = NumPages * PAGE_SIZE;
            _buffer->writing_size = -1;

            /*
             * dma_alloc_writecombine returns the kernel virtual start address
             * of the allocation; the kernel uses this address to access the memory.
             */
            _buffer->vaddr = dma_alloc_writecombine(rpmsg_sdb_dev,
                                                    _buffer->size,
                                                    &_buffer->paddr,
                                                    GFP_KERNEL);
            if (!_buffer->vaddr) {
                pr_err("%s: Memory allocation issue\n", __func__);
                return -ENOMEM;
            }

            pr_debug("%s - dma_alloc_writecombine done - paddr[%d]:%x - vaddr[%d]:%p\n", __func__, _buffer->index, _buffer->paddr, _buffer->index, _buffer->vaddr);

            /* Get address for userland */
            /*
             * When user space calls mmap, the driver's file_operations->mmap
             * handler uses remap_pfn_range to build new page tables for a
             * range of physical addresses.
             */
            if (remap_pfn_range(vma, vma->vm_start,
                                (_buffer->paddr >> PAGE_SHIFT) + vma->vm_pgoff,
                                size, prot))
                return -EAGAIN;

            _buffer->uaddr = (void *)vma->vm_start;

            /* Send information to remote proc */
            rpmsg_sdb_send_buf_info(_rpmsg_sdb, _buffer);
        } else {
            dev_err(rpmsg_sdb_dev, "No existing buffer entry exist in the list !!!");
            return -EINVAL;
        }

        /* Increment for number of requested buffer */
        LastBufferId++;

        return 0;
    }
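The size arithmetic at the top of the mmap handler can be checked in user space. The sketch below re-implements the rounding and order calculation under the assumption of 4 KiB pages; the `DEMO_`/`demo_` names are stand-ins for the kernel's PAGE_ALIGN, PAGE_SHIFT, and get_order, not the kernel implementations themselves.

```c
#include <assert.h>

#define DEMO_PAGE_SHIFT 12                      /* assumption: 4 KiB pages */
#define DEMO_PAGE_SIZE  (1UL << DEMO_PAGE_SHIFT)

/* Round `len` up to the next page boundary, like PAGE_ALIGN. */
static unsigned long demo_page_align(unsigned long len)
{
    return (len + DEMO_PAGE_SIZE - 1) & ~(DEMO_PAGE_SIZE - 1);
}

/* Smallest order such that (page size << order) >= size, like get_order. */
static unsigned int demo_get_order(unsigned long size)
{
    unsigned int order = 0;

    assert(size > 0);

    while ((DEMO_PAGE_SIZE << order) < size)
        order++;
    return order;
}
```

A request for 5000 bytes is rounded up to 8192 bytes, which is two pages and an allocation order of 1; three pages (12288 bytes) need order 2, because orders are powers of two.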

    /**
     * rpmsg_sdb_open - Open Session
     *
     * @inode: inode struct
     * @file: file struct
     *
     * Return:
     *   0 - Success
     *   Non-zero - Failure
     */
    static int rpmsg_sdb_open(struct inode *inode, struct file *file)
    {
        struct rpmsg_sdb_t *_rpmsg_sdb;

        _rpmsg_sdb = container_of(file->private_data, struct rpmsg_sdb_t,
                                  mdev);

        /* Initialize the buffer list */
        INIT_LIST_HEAD(&_rpmsg_sdb->buffer_list);

        mutex_init(&_rpmsg_sdb->mutex);

        return 0;
    }

    /**
     * rpmsg_sdb_close - Close Session
     *
     * @inode: inode struct
     * @file: file struct
     *
     * Return:
     *   0 - Success
     *   Non-zero - Failure
     */
    static int rpmsg_sdb_close(struct inode *inode, struct file *file)
    {
        struct rpmsg_sdb_t *_rpmsg_sdb;
        struct sdb_buf_t *pos, *next;

        _rpmsg_sdb = container_of(file->private_data, struct rpmsg_sdb_t,
                                  mdev);

        list_for_each_entry_safe(pos, next, &_rpmsg_sdb->buffer_list, buflist) {
            /* Free the CMA allocation */
            dma_free_writecombine(rpmsg_sdb_dev, pos->size, pos->vaddr,
                                  pos->paddr);
            /* Remove the buffer from the list */
            list_del(&pos->buflist);
            /* Free the buffer */
            kfree(pos);
        }

        /* Reset LastBufferId */
        LastBufferId = 0;

        return 0;
    }

    /**
     * rpmsg_sdb_ioctl - IOCTL
     *
     * @file: file struct
     * @cmd: cmd field
     * @arg: Argument field
     *
     * Return:
     *   0 - Success
     *   Non-zero - Failure
     */
    static long rpmsg_sdb_ioctl(struct file *file, unsigned int cmd, unsigned long arg)
    {
        int idx = 0;
        struct rpmsg_sdb_t *_rpmsg_sdb;
        struct sdb_buf_t *buffer, *lastbuffer;
        struct list_head *pos;
        struct sdb_buf_t *datastructureptr = NULL;
        struct rpmsg_sdb_ioctl_set_efd q_set_efd;
        struct rpmsg_sdb_ioctl_get_data_size q_get_dat_size;

        /*
         * (void __user *)arg means that arg holds a user-space address. It
         * must not be dereferenced or copied directly; use helpers such as
         * copy_from_user and copy_to_user instead.
         * __user only marks the pointer as referring to user space.
         */
        void __user *argp = (void __user *)arg;

        /*
         * container_of(ptr, type, member)
         *   ptr:    address of a member inside a structure
         *   type:   the structure type
         *   member: the name of that member within the structure
         * Given the address ptr of member, which lives in a structure of
         * type type, it returns the start address of that structure.
         * It follows that file->private_data holds the address of mdev.
         */
        _rpmsg_sdb = container_of(file->private_data, struct rpmsg_sdb_t,
                                  mdev);

        switch (cmd) {
        case RPMSG_SDB_IOCTL_SET_EFD:
            mutex_lock(&_rpmsg_sdb->mutex);

            /* Get index from the last buffer in the list */
            if (!list_empty(&_rpmsg_sdb->buffer_list)) {
                /*
                 * list_last_entry expands to list_entry, which works like
                 * container_of; it returns the last entry in the list.
                 */
                lastbuffer = list_last_entry(&_rpmsg_sdb->buffer_list, struct sdb_buf_t, buflist);
                idx = lastbuffer->index;
                /* increment this index for the next buffer creation */
                idx++;
            }

            /*
             * copy_from_user copies data from user space to kernel space.
             * It returns the number of bytes that could not be copied,
             * and 0 on success.
             */
            if (copy_from_user(&q_set_efd, (struct rpmsg_sdb_ioctl_set_efd *)argp,
                               sizeof(struct rpmsg_sdb_ioctl_set_efd))) {
                pr_warn("rpmsg_sdb: RPMSG_SDB_IOCTL_SET_EFD: copy from user failed.\n");
                mutex_unlock(&_rpmsg_sdb->mutex);
                return -EFAULT;
            }

            /* create a new buffer which will be added in the buffer list */
            /* Memory from kmalloc() lies in the kernel's direct mapping and is physically contiguous */
            buffer = kmalloc(sizeof(struct sdb_buf_t), GFP_KERNEL);

            buffer->index = idx;
            buffer->efd_ctx = eventfd_ctx_fdget(q_set_efd.eventfd);
            list_add_tail(&buffer->buflist, &_rpmsg_sdb->buffer_list);

            mutex_unlock(&_rpmsg_sdb->mutex);
            break;

        case RPMSG_SDB_IOCTL_GET_DATA_SIZE:
            if (copy_from_user(&q_get_dat_size, (struct rpmsg_sdb_ioctl_get_data_size *)argp,
                               sizeof(struct rpmsg_sdb_ioctl_get_data_size))) {
                pr_warn("rpmsg_sdb: RPMSG_SDB_IOCTL_GET_DATA_SIZE: copy from user failed.\n");
                return -EFAULT;
            }

            /* Get the index of the requested buffer and then look-up in the buffer list */
            idx = q_get_dat_size.bufferId;

            list_for_each(pos, &_rpmsg_sdb->buffer_list) {
                datastructureptr = list_entry(pos, struct sdb_buf_t, buflist);
                if (datastructureptr->index == idx) {
                    /* Get the writing size */
                    q_get_dat_size.size = datastructureptr->writing_size;
                    break;
                }
            }

            if (copy_to_user((struct rpmsg_sdb_ioctl_get_data_size *)argp, &q_get_dat_size,
                             sizeof(struct rpmsg_sdb_ioctl_get_data_size))) {
                pr_warn("rpmsg_sdb: RPMSG_SDB_IOCTL_GET_DATA_SIZE: copy to user failed.\n");
                return -EFAULT;
            }

            /* Reset the writing size */
            datastructureptr->writing_size = -1;
            break;

        default:
            return -EINVAL;
        }

        return 0;
    }

    static const struct file_operations rpmsg_sdb_fops = {
        .owner          = THIS_MODULE,
        .unlocked_ioctl = rpmsg_sdb_ioctl,
        .mmap           = rpmsg_sdb_mmap,
        .open           = rpmsg_sdb_open,
        .release        = rpmsg_sdb_close,
    };

    static int rpmsg_sdb_drv_cb(struct rpmsg_device *rpdev, void *data, int len,
                                void *priv, u32 src)
    {
        int ret = 0;
        int buffer_id = 0;
        size_t buffer_size;
        char rpmsg_RxBuf[len+1];
        struct list_head *pos;
        struct sdb_buf_t *datastructureptr = NULL;
        struct rpmsg_sdb_t *drv = dev_get_drvdata(&rpdev->dev);

        if (len == 0) {
            dev_err(rpmsg_sdb_dev, "(%s) Empty length requested\n", __func__);
            return -EINVAL;
        }

        //dev_err(rpmsg_sdb_dev, "(%s) length: %d\n", __func__, len);

        memcpy(rpmsg_RxBuf, data, len);
        rpmsg_RxBuf[len] = 0;

        ret = rpmsg_sdb_decode_rxbuf_string(rpmsg_RxBuf, &buffer_id, &buffer_size);
        if (ret < 0)
            goto out;

        if (buffer_id > LastBufferId) {
            ret = -EINVAL;
            goto out;
        }

        /* Signal to User space application */
        /* Walk the list, with pos as the loop cursor */
        list_for_each(pos, &drv->buffer_list) {
            /* list_entry returns the structure that contains this list node */
            datastructureptr = list_entry(pos, struct sdb_buf_t, buflist);
            if (datastructureptr->index == buffer_id) {
                datastructureptr->writing_size = buffer_size;

                if (datastructureptr->writing_size > datastructureptr->size) {
                    dev_err(rpmsg_sdb_dev, "(%s) Writing size is bigger than buffer size\n", __func__);
                    ret = -EINVAL;
                    goto out;
                }

                eventfd_signal(datastructureptr->efd_ctx, 1);
                break;
            }
            /* TODO: quid if nothing find during the loop ? */
        }

    out:
        return ret;
    }
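The receive callback wakes the user-space application through an eventfd. The counter semantics can be tried entirely in user space on Linux, as in the sketch below. It uses the eventfd(2) system call interface rather than the kernel-side eventfd_signal, and the `demo_signal` and `demo_wait` helpers are hypothetical names for this illustration.

```c
#include <assert.h>
#include <stdint.h>
#include <sys/eventfd.h>
#include <unistd.h>

/* Add `n` to the eventfd counter: the userspace analogue of a signal. */
static int demo_signal(int efd, uint64_t n)
{
    assert(efd >= 0);
    return write(efd, &n, sizeof n) == (ssize_t)sizeof n ? 0 : -1;
}

/* Block until the counter is non-zero, then return and reset it. */
static uint64_t demo_wait(int efd)
{
    uint64_t value = 0;
    ssize_t r;

    assert(efd >= 0);
    r = read(efd, &value, sizeof value);
    return r == (ssize_t)sizeof value ? value : 0;
}
```

After creating the descriptor with `eventfd(0, 0)`, two signals of 1 followed by one wait return 2, because in the default (non-semaphore) mode a read returns the accumulated count and resets it to zero. An application using this driver would typically wait on the descriptor it registered through the SET_EFD ioctl and then query the data size.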

    static int rpmsg_sdb_drv_probe(struct rpmsg_device *rpdev)
    {
        int ret = 0;
        struct device *dev = &rpdev->dev;
        struct rpmsg_sdb_t *rpmsg_sdb;

        /*
         * devm_kzalloc() is a device-managed allocation: the memory is freed
         * automatically when the driver is detached from the device.
         * Memory from kzalloc() must be freed manually with kfree().
         * Note: devm_kzalloc() is typically called from the driver's probe function.
         */
        rpmsg_sdb = devm_kzalloc(dev, sizeof(*rpmsg_sdb), GFP_KERNEL);
        if (!rpmsg_sdb)
            return -ENOMEM;

        mutex_init(&rpmsg_sdb->mutex);

        rpmsg_sdb->rpdev = rpdev;

        rpmsg_sdb->mdev.name = "rpmsg-sdb";
        rpmsg_sdb->mdev.minor = MISC_DYNAMIC_MINOR;
        rpmsg_sdb->mdev.fops = &rpmsg_sdb_fops;

        /*
         * dev_set_drvdata stores the driver's private data in the device:
         *
         * static inline void dev_set_drvdata(struct device *dev, void *data)
         * {
         *     dev->driver_data = data;
         * }
         */
        dev_set_drvdata(&rpdev->dev, rpmsg_sdb);

        /* Register misc device */
        ret = misc_register(&rpmsg_sdb->mdev);
        if (ret) {
            dev_err(dev, "Failed to register device\n");
            goto err_out;
        }

        rpmsg_sdb_dev = rpmsg_sdb->mdev.this_device;

        /* dev_info() is for informational messages, e.g. during boot or module loading */
        dev_info(dev, "%s probed\n", rpmsg_sdb_driver_name);

    err_out:
        return ret;
    }

static void rpmsg_sdb_drv_remove(struct rpmsg_device *rpmsgdev)

struct rpmsg_sdb_t *drv = dev_get_drvdata(&rpmsgdev->dev);

misc_deregister