Android Hardware Encoding and Decoding with MediaCodec

Posted by 步基


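I. Introduction to MediaCodec

The MediaCodec class provides access to low-level media codecs, i.e. encoder/decoder components. It is part of Android's low-level multimedia support infrastructure and is normally used together with MediaExtractor, MediaSync, MediaMuxer, MediaCrypto, MediaDrm, Image, Surface, and AudioTrack.

A codec operates on three kinds of data: compressed data, raw audio data, and raw video data.

a Compressed Buffers

Input buffers (for decoders) and output buffers (for encoders) contain compressed data of the corresponding type. For video this is normally a single compressed video frame. For audio this is normally a single access unit (an encoded segment that typically holds a few milliseconds of audio), although the requirement is relaxed slightly: one buffer may contain several encoded audio access units. In either case, buffers do not start or end on arbitrary byte boundaries; they start and end on frame or access-unit boundaries unless they are flagged with BUFFER_FLAG_PARTIAL_FRAME.

b Raw Audio Buffers

Raw audio buffers contain complete frames of PCM audio data, that is, one sample per channel in channel order. By default each sample is a 16-bit signed integer in native byte order.

c Raw Video Buffers

In ByteBuffer mode, video buffers are laid out according to their color format. The supported color formats can be obtained from getCodecInfo().getCapabilitiesForType(…).colorFormats. Video codecs may support three kinds of color formats:

native raw video format: marked by COLOR_FormatSurface; it can be used with an input or output Surface.

flexible YUV buffers (such as COLOR_FormatYUV420Flexible): can be used with an input/output Surface, and in ByteBuffer mode through getInputImage(int)/getOutputImage(int).

other, specific formats: normally supported only in ByteBuffer mode. Some are vendor-specific; the others are defined in MediaCodecInfo.CodecCapabilities.

Since API level 22 (Android 5.1, LOLLIPOP_MR1), all video codecs support flexible YUV 4:2:0 buffers.

d Accessing Raw Video ByteBuffers on Older Devices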

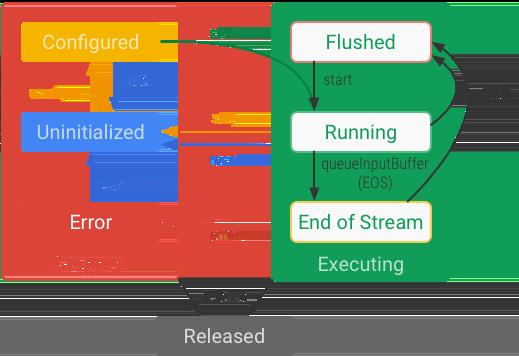

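States

Conceptually a codec is in one of three states: Stopped, Executing, or Released. The Stopped state has three sub-states: Uninitialized, Configured, and Error. The Executing state also has three sub-states: Flushed, Running, and End-of-Stream.

0) A codec created through one of the factory methods starts in the Uninitialized state.

1) configure(…) moves it to the Configured state.

2) start() moves it to the Executing state. Only then can data be processed through the buffer queues described above.

3) Immediately after start() the codec is in the Flushed sub-state, where it holds all the buffers. As soon as the first input buffer is dequeued, the codec moves to the Running sub-state, where it spends most of its life. When an input buffer carrying the end-of-stream marker is queued, the codec moves to the End-of-Stream sub-state; it no longer accepts input buffers but keeps producing output buffers until the end-of-stream is reached on the output.

4) While executing, you can return to the Flushed sub-state at any time by calling flush().

5) stop() returns the codec to the Uninitialized state; it must be configured again before it can be reused.

6) When you are done with the codec, you must call release().

In rare cases the codec encounters an error and moves to the Error state. You can detect this from an invalid return value or from an exception. Call reset() to make the codec usable again; it returns to the Uninitialized state. Call release() to move to the terminal Released state.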
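The transitions above can be summarized as a small state machine. The following is a toy model in plain Java, not the Android class: the `CodecLifecycle` type and its method names are made up for illustration, and it only tracks which calls are legal in which state, under the simplified rules listed in this section.

```java
import java.util.EnumSet;

/** Toy model of the lifecycle described above. Hypothetical class, not android.media.MediaCodec. */
final class CodecLifecycle {
    enum State { UNINITIALIZED, CONFIGURED, FLUSHED, RUNNING, END_OF_STREAM, ERROR, RELEASED }

    private State state = State.UNINITIALIZED;

    State state() { return state; }

    void configure() { require(State.UNINITIALIZED); state = State.CONFIGURED; }

    void start() { require(State.CONFIGURED); state = State.FLUSHED; }

    /** Dequeuing the first input buffer moves Flushed to Running. */
    void dequeueInputBuffer() { require(State.FLUSHED, State.RUNNING); state = State.RUNNING; }

    /** Queuing an input buffer flagged end-of-stream. */
    void queueEndOfStream() { require(State.RUNNING); state = State.END_OF_STREAM; }

    void flush() { require(State.FLUSHED, State.RUNNING, State.END_OF_STREAM); state = State.FLUSHED; }

    void stop() { require(State.FLUSHED, State.RUNNING, State.END_OF_STREAM); state = State.UNINITIALIZED; }

    /** Simulates the codec hitting an error. */
    void fail() { requireNotReleased(); state = State.ERROR; }

    void reset() { requireNotReleased(); state = State.UNINITIALIZED; }

    void release() { state = State.RELEASED; }

    private void requireNotReleased() {
        if (state == State.RELEASED) {
            throw new IllegalStateException("codec already released");
        }
    }

    private void require(State first, State... rest) {
        if (!EnumSet.of(first, rest).contains(state)) {
            throw new IllegalStateException("call not allowed in state " + state);
        }
    }
}
```

For example, calling `start()` on a fresh `CodecLifecycle` throws, because the model (like the real codec) must be configured first.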

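1 Creation

Use MediaCodecList to create a codec for a specific MediaFormat.

When decoding a file or a stream, you can obtain the desired format from MediaExtractor.getTrackFormat.

Enable any specific features you need with MediaFormat.setFeatureEnabled, then call MediaCodecList.findDecoderForFormat to get the name of a codec that can handle that particular media format.

Finally, create the codec with createByCodecName(String).

You can also create the preferred codec for a MIME type with createDecoderByType(String) or createEncoderByType(String).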

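2 Initialization

After creating the codec, you can install a callback with setCallback if you want to process data asynchronously. Then configure the codec with the specific media format. At this point you can specify an output Surface for codecs that produce raw video (e.g. decoders), set decryption parameters for secure codecs (see MediaCrypto), and, because some codecs can operate in more than one mode, specify whether the codec should work as an encoder or a decoder.

If you want to feed raw video to an encoder through a Surface, call createInputSurface() after configuration to create the destination Surface for your input data. Alternatively, call setInputSurface(Surface) to make the codec use a previously created persistent input surface.

Some formats, notably AAC audio and MPEG4, H.264 and H.265 video, require the actual data to be preceded by setup data, also called codec-specific data. When processing such compressed formats, this data must be submitted to the codec after start() and before any frame data, and it must be marked with BUFFER_FLAG_CODEC_CONFIG in the call to queueInputBuffer.

Codec-specific data can also be passed through configure, as ByteBuffer entries in the MediaFormat (keys "csd-0", "csd-1", and so on). A track format obtained from MediaExtractor already contains these entries. Codec-specific data supplied this way is submitted to the codec automatically on start().

Encoders create and return the codec-specific data before any valid output buffer, in output buffers marked with the codec-config flag. Buffers that contain codec-specific data carry no meaningful timestamp.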
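As a rough sketch of how these markers are checked, the helper below tests the flag bits of an output buffer. To keep it runnable off-device, the class `BufferFlags` is hypothetical and its constants simply mirror the documented values of the `MediaCodec.BUFFER_FLAG_*` constants; on Android you would use `bufferInfo.flags` with the real constants instead.

```java
/** Hypothetical helper; the constants mirror MediaCodec.BUFFER_FLAG_* so it runs without Android. */
final class BufferFlags {
    static final int KEY_FRAME = 1;      // MediaCodec.BUFFER_FLAG_KEY_FRAME
    static final int CODEC_CONFIG = 2;   // MediaCodec.BUFFER_FLAG_CODEC_CONFIG
    static final int END_OF_STREAM = 4;  // MediaCodec.BUFFER_FLAG_END_OF_STREAM
    static final int PARTIAL_FRAME = 8;  // MediaCodec.BUFFER_FLAG_PARTIAL_FRAME

    private BufferFlags() {}

    /** True when the buffer carries codec-specific data (e.g. SPS/PPS) rather than a frame. */
    static boolean isCodecConfig(int flags) {
        return (flags & CODEC_CONFIG) != 0;
    }

    /** True when the buffer holds a key (sync) frame. */
    static boolean isKeyFrame(int flags) {
        return (flags & KEY_FRAME) != 0;
    }

    /** True when this is the last buffer of the stream. */
    static boolean isEndOfStream(int flags) {
        return (flags & END_OF_STREAM) != 0;
    }
}
```

Flags are a bit mask, so several can be set at once: a final key frame would report both `isKeyFrame` and `isEndOfStream`.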

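3 Data processing

In synchronous mode, obtain an input buffer, fill it with data, and submit it to the codec with queueInputBuffer. Do not submit multiple input buffers with the same timestamp. Once the codec has processed the data, it hands back a read-only output buffer.

In asynchronous mode the output arrives through onOutputBufferAvailable; in synchronous mode you fetch it with dequeueOutputBuffer. In both cases, call releaseOutputBuffer when you are done to return the buffer to the codec.

II. Usage examples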

// Playing an MP4 file

package com.cclin.jubaohe.activity.Media;

import android.media.AudioFormat;
import android.media.AudioManager;
import android.media.AudioTrack;
import android.media.MediaCodec;
import android.media.MediaExtractor;
import android.media.MediaFormat;
import android.os.Bundle;
import android.view.Surface;
import android.view.SurfaceHolder;
import android.view.SurfaceView;
import android.view.View;
import android.widget.Button;

import com.cclin.jubaohe.R;
import com.cclin.jubaohe.base.BaseActivity;
import com.cclin.jubaohe.util.CameraUtil;
import com.cclin.jubaohe.util.LogUtil;
import com.cclin.jubaohe.util.SDPathConfig;

import java.io.File;
import java.io.IOException;
import java.nio.ByteBuffer;

/**
 * Created by LinChengChun on 2018/4/14.
 */
public class MediaTestActivity extends BaseActivity implements SurfaceHolder.Callback, View.OnClickListener {

    private final static String MEDIA_FILE_PATH = SDPathConfig.LIVE_MOVIE_PATH + "/18-04-12-10:47:06-0.mp4";

    private Surface mSurface;
    private SurfaceView mSvRenderFromCamera;
    private SurfaceView mSvRenderFromFile;
    Button mBtnCameraPreview;
    Button mBtnPlayMediaFile;
    private MediaStream mMediaStream;
    private Thread mVideoDecoderThread;
    private AudioTrack mAudioTrack;
    private Thread mAudioDecoderThread;
    private boolean isSurfaceCreated = false;

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        mBtnCameraPreview = retrieveView(R.id.btn_camera_preview);
        mBtnPlayMediaFile = retrieveView(R.id.btn_play_media_file);
        mBtnCameraPreview.setOnClickListener(this);
        mBtnPlayMediaFile.setOnClickListener(this);

        mSvRenderFromCamera = retrieveView(R.id.sv_render);
        mSvRenderFromCamera.setOnClickListener(this);
        mSvRenderFromCamera.getHolder().addCallback(this);

        mSvRenderFromFile = retrieveView(R.id.sv_display);
        mSvRenderFromFile.setOnClickListener(this);
        mSvRenderFromFile.getHolder().addCallback(this);
        init();
    }

    @Override
    protected int initLayout() {
        return R.layout.activity_media_test;
    }

    private void init() {
        File file = new File(MEDIA_FILE_PATH);
        if (!file.exists()) {
            LogUtil.e("File does not exist!");
            return;
        }
        LogUtil.e("Target file exists.");
    }

    private void startVideoDecoder() {
        mVideoDecoderThread = new Thread("mVideoDecoderThread") {
            @Override
            public void run() {
                super.run();
                MediaFormat mMfVideo = null, mMfAudio = null;
                String value = null;
                String strVideoMime = null;
                String strAudioMime = null;
                try {
                    MediaExtractor mediaExtractor = new MediaExtractor(); // reads audio/video samples from the file
                    mediaExtractor.setDataSource(MEDIA_FILE_PATH);
                    int numTracks = mediaExtractor.getTrackCount(); // number of tracks, usually 2
                    LogUtil.e("track count: " + numTracks);
                    for (int i = 0; i < numTracks; i++) { // inspect the format of every track
                        MediaFormat mediaFormat = mediaExtractor.getTrackFormat(i);
                        LogUtil.e("track format: " + mediaFormat);
                        value = mediaFormat.getString(MediaFormat.KEY_MIME);
                        if (value.contains("audio")) {
                            mMfAudio = mediaFormat;
                            strAudioMime = value;
                        } else {
                            mMfVideo = mediaFormat;
                            strVideoMime = value;
                            mediaExtractor.selectTrack(i); // this thread only reads the video track
                        }
                    }

                    mSurface = mSvRenderFromFile.getHolder().getSurface();
                    MediaCodec codec = MediaCodec.createDecoderByType(strVideoMime); // create the decoder
                    codec.configure(mMfVideo, mSurface, null, 0); // decoded frames are rendered straight to the Surface
                    codec.setVideoScalingMode(MediaCodec.VIDEO_SCALING_MODE_SCALE_TO_FIT);
                    codec.start(); // the codec enters the Executing state

                    MediaCodec.BufferInfo bufferInfo = new MediaCodec.BufferInfo(); // metadata of each output buffer
                    int size = -1, outputBufferIndex = -1;
                    LogUtil.e("Decoding started...");
                    long previewStampUs = 0L;
                    do {
                        int inputBufferId = codec.dequeueInputBuffer(10); // ask the codec for a free input buffer
                        if (inputBufferId >= 0) {
                            ByteBuffer inputBuffer = codec.getInputBuffer(inputBufferId);
                            // fill inputBuffer with valid data
                            inputBuffer.clear();
                            size = mediaExtractor.readSampleData(inputBuffer, 0); // read one sample into the input buffer
                            LogUtil.e("readSampleData: size = " + size);
                            if (size < 0) {
                                break; // no more samples
                            }
                            int trackIndex = mediaExtractor.getSampleTrackIndex();
                            long presentationTimeUs = mediaExtractor.getSampleTime(); // sample timestamp
                            LogUtil.e("queueInputBuffer: submitting data to the decoder...");
                            codec.queueInputBuffer(inputBufferId, 0, size, presentationTimeUs, 0);
                            mediaExtractor.advance(); // move to the next sample
                            LogUtil.e("advance: next sample...");
                        }

                        outputBufferIndex = codec.dequeueOutputBuffer(bufferInfo, 10000); // fetch decoded data
                        LogUtil.e("outputBufferIndex = " + outputBufferIndex);
                        switch (outputBufferIndex) {
                            case MediaCodec.INFO_OUTPUT_FORMAT_CHANGED:
                                // codec.getOutputFormat(outputBufferIndex) must not be used here:
                                // the index is a negative status code and playback fails.
                                MediaFormat mf = codec.getOutputFormat();
                                LogUtil.e("INFO_OUTPUT_FORMAT_CHANGED:" + mf);
                                break;
                            case MediaCodec.INFO_TRY_AGAIN_LATER:
                                LogUtil.e("Timed out waiting for a decoded frame");
                                break;
                            case MediaCodec.INFO_OUTPUT_BUFFERS_CHANGED:
                                LogUtil.e("output buffers changed");
                                break;
                            default:
                                // The output buffer itself is not needed when rendering straight to a Surface.
                                // Pacing: if the frame's presentation time is ahead of playback, sleep first.
                                boolean firstTime = previewStampUs == 0L;
                                long newSleepUs = -1;
                                long sleepUs = (bufferInfo.presentationTimeUs - previewStampUs);
                                if (!firstTime) {
                                    long cache = 0;
                                    newSleepUs = CameraUtil.fixSleepTime(sleepUs, cache, -100000);
                                }
                                previewStampUs = bufferInfo.presentationTimeUs;
                                if (newSleepUs < 0) {
                                    newSleepUs = 0;
                                }
                                Thread.sleep(newSleepUs / 1000);
                                codec.releaseOutputBuffer(outputBufferIndex, true); // release the output buffer and render it
                                break;
                        }
                    } while (!this.isInterrupted());

                    LogUtil.e("Decoding finished.");
                    codec.stop();
                    codec.release();
                    codec = null;
                    mediaExtractor.release();
                    mediaExtractor = null;
                } catch (IOException e) {
                    e.printStackTrace();
                } catch (InterruptedException e) {
                    e.printStackTrace();
                }
            }
        };
        mVideoDecoderThread.start();
    }

    private void startAudioDecoder() {
        mAudioDecoderThread = new Thread("AudioDecoderThread") {
            @Override
            public void run() {
                super.run();
                try {
                    MediaFormat mMfVideo = null, mMfAudio = null;
                    String value = null;
                    String strVideoMime = null;
                    String strAudioMime = null;
                    MediaExtractor mediaExtractor = new MediaExtractor();
                    mediaExtractor.setDataSource(MEDIA_FILE_PATH);
                    int numTracks = mediaExtractor.getTrackCount();
                    LogUtil.e("track count: " + numTracks);
                    for (int i = 0; i < numTracks; i++) {
                        MediaFormat mediaFormat = mediaExtractor.getTrackFormat(i);
                        LogUtil.e("track format: " + mediaFormat);
                        value = mediaFormat.getString(MediaFormat.KEY_MIME);
                        if (value.contains("audio")) {
                            mMfAudio = mediaFormat;
                            strAudioMime = value;
                            mediaExtractor.selectTrack(i); // this thread only reads the audio track
                        } else {
                            mMfVideo = mediaFormat;
                            strVideoMime = value;
                        }
                    }

                    // mMfAudio.setInteger(MediaFormat.KEY_IS_ADTS, 1);
                    mMfAudio.setInteger(MediaFormat.KEY_BIT_RATE, 16000);
                    MediaCodec codec = MediaCodec.createDecoderByType(strAudioMime);
                    codec.configure(mMfAudio, null, null, 0);
                    codec.start();

                    ByteBuffer outputByteBuffer = null;
                    MediaCodec.BufferInfo bufferInfo = new MediaCodec.BufferInfo();
                    int size = -1, outputBufferIndex = -1;
                    long previewStampUs = 0L; // was "01" (octal one), which defeated the first-frame check
                    LogUtil.e("Decoding started...");

                    if (mAudioTrack == null) {
                        int sample_rate = mMfAudio.getInteger(MediaFormat.KEY_SAMPLE_RATE);
                        int channels = mMfAudio.getInteger(MediaFormat.KEY_CHANNEL_COUNT);
                        int sampleRateInHz = (int) (sample_rate * 1.004);
                        int channelConfig = channels == 1 ? AudioFormat.CHANNEL_OUT_MONO : AudioFormat.CHANNEL_OUT_STEREO;
                        int audioFormat = AudioFormat.ENCODING_PCM_16BIT;
                        int bfSize = AudioTrack.getMinBufferSize(sampleRateInHz, channelConfig, audioFormat) * 4;
                        mAudioTrack = new AudioTrack(AudioManager.STREAM_MUSIC, sampleRateInHz, channelConfig, audioFormat, bfSize, AudioTrack.MODE_STREAM);
                    }
                    mAudioTrack.play();

                    do {
                        int inputBufferId = codec.dequeueInputBuffer(10);
                        if (inputBufferId >= 0) {
                            ByteBuffer inputBuffer = codec.getInputBuffer(inputBufferId);
                            // fill inputBuffer with valid data
                            inputBuffer.clear();
                            size = mediaExtractor.readSampleData(inputBuffer, 0);
                            if (size < 0) {
                                break; // no more samples
                            }
                            long presentationTimeUs = mediaExtractor.getSampleTime();
                            codec.queueInputBuffer(inputBufferId, 0, size, presentationTimeUs, 0);
                            mediaExtractor.advance();
                        }

                        outputBufferIndex = codec.dequeueOutputBuffer(bufferInfo, 50000);
                        switch (outputBufferIndex) {
                            case MediaCodec.INFO_OUTPUT_FORMAT_CHANGED:
                                MediaFormat mf = codec.getOutputFormat();
                                LogUtil.e("INFO_OUTPUT_FORMAT_CHANGED:" + mf);
                                break;
                            case MediaCodec.INFO_TRY_AGAIN_LATER:
                                LogUtil.e("Timed out waiting for a decoded frame");
                                break;
                            case MediaCodec.INFO_OUTPUT_BUFFERS_CHANGED:
                                LogUtil.e("output buffers changed");
                                break;
                            default:
                                LogUtil.e("outputBufferIndex = " + outputBufferIndex);
                                outputByteBuffer = codec.getOutputBuffer(outputBufferIndex); // decoded PCM data
                                // Read exactly the valid region reported by the codec.
                                outputByteBuffer.position(bufferInfo.offset);
                                outputByteBuffer.limit(bufferInfo.offset + bufferInfo.size);
                                byte[] outData = new byte[bufferInfo.size];
                                outputByteBuffer.get(outData);

                                // Pacing: sleep for the gap between consecutive presentation times.
                                boolean firstTime = previewStampUs == 0L;
                                long newSleepUs = -1;
                                long sleepUs = (bufferInfo.presentationTimeUs - previewStampUs);
                                if (!firstTime) {
                                    long cache = 0;
                                    newSleepUs = CameraUtil.fixSleepTime(sleepUs, cache, -100000);
                                }
                                previewStampUs = bufferInfo.presentationTimeUs;
                                if (newSleepUs < 0) {
                                    newSleepUs = 0;
                                }
                                Thread.sleep(newSleepUs / 1000);
                                mAudioTrack.write(outData, 0, outData.length); // play the audio
                                codec.releaseOutputBuffer(outputBufferIndex, false); // release the output buffer
                                break;
                        }
                    } while (!this.isInterrupted());

                    LogUtil.e("Decoding finished.");
                    codec.stop();
                    codec.release();
                    codec = null;
                    mAudioTrack.stop();
                    mAudioTrack.release();
                    mAudioTrack = null;
                    mediaExtractor.release();
                    mediaExtractor = null;
                } catch (IOException e) {
                    e.printStackTrace();
                } catch (InterruptedException e) {
                    e.printStackTrace();
                }
            }
        };
        mAudioDecoderThread.start();
    }

    @Override
    public void onClick(View view) {
        switch (view.getId()) {
            case R.id.sv_render:
                mMediaStream.getCamera().autoFocus(null);
                break;
            case R.id.sv_display:
                break;
            case R.id.btn_camera_preview:
                break;
            case R.id.btn_play_media_file:
                break;
            default:
                break;
        }
    }

    private int getDgree() {
        int rotation = getWindowManager().getDefaultDisplay().getRotation();
        int degrees = 0;
        switch (rotation) {
            case Surface.ROTATION_0:
                degrees = 0;
                break; // Natural orientation
            case Surface.ROTATION_90:
                degrees = 90;
                break; // Landscape left
            case Surface.ROTATION_180:
                degrees = 180;
                break; // Upside down
            case Surface.ROTATION_270:
                degrees = 270;
                break; // Landscape right
        }
        return degrees;
    }

    private void onMediaStreamCreate() {
        if (mMediaStream == null) {
            mMediaStream = new MediaStream(this, mSvRenderFromCamera.getHolder());
        }
        mMediaStream.setDgree(getDgree());
        mMediaStream.createCamera();
        mMediaStream.startPreview();
    }

    private void onMediaStreamDestroy() {
        if (mMediaStream != null) { // onPause can run before the camera surface exists
            mMediaStream.release();
            mMediaStream = null;
        }
    }

    @Override
    protected void onPause() {
        super.onPause();
        onMediaStreamDestroy();
        if (mVideoDecoderThread != null) {
            mVideoDecoderThread.interrupt();
        }
        if (mAudioDecoderThread != null) {
            mAudioDecoderThread.interrupt();
        }
    }

    @Override
    protected void onResume() {
        super.onResume();
        if (isSurfaceCreated && mMediaStream == null) {
            onMediaStreamCreate();
        }
    }

    @Override
    public void surfaceCreated(SurfaceHolder holder) {
        LogUtil.e("surfaceCreated: " + holder);
        if (holder.getSurface() == mSvRenderFromCamera.getHolder().getSurface()) {
            isSurfaceCreated = true;
            onMediaStreamCreate();
        } else if (holder.getSurface() == mSvRenderFromFile.getHolder().getSurface()) {
            if (new File(MEDIA_FILE_PATH).exists()) {
                startVideoDecoder();
                startAudioDecoder();
            }
        }
    }

    @Override
    public void surfaceChanged(SurfaceHolder holder, int format, int width, int height) {
        LogUtil.e("surfaceChanged: "
                + "\nholder = " + holder
                + "\nformat = " + format
                + "\nwidth = " + width
                + "\nheight = " + height);
    }

    @Override
    public void surfaceDestroyed(SurfaceHolder holder) {
        LogUtil.e("surfaceDestroyed: ");
        if (holder.getSurface() == mSvRenderFromCamera.getHolder().getSurface()) {
            isSurfaceCreated = false;
        }
    }
}
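The decoder loops above pace playback through CameraUtil.fixSleepTime, whose implementation is not shown. As an illustration only, here is one common alternative: anchor the first frame to a wall-clock instant and derive each later frame's due time from its presentation timestamp. The class `FramePacer` and its method are hypothetical names, and this is a sketch under that assumption, not the author's helper.

```java
/** Hypothetical pacing helper: maps presentation timestamps onto a wall clock. */
final class FramePacer {
    private long firstPtsUs = -1; // presentation time of the first frame seen
    private long startWallUs;     // wall-clock time at which the first frame was shown

    /**
     * Returns how many microseconds to wait before rendering the frame.
     *
     * @param ptsUs presentation timestamp of the frame (e.g. bufferInfo.presentationTimeUs)
     * @param nowUs current monotonic time in microseconds (e.g. System.nanoTime() / 1000)
     */
    long delayUs(long ptsUs, long nowUs) {
        if (firstPtsUs < 0) {
            // The first frame is shown immediately and becomes the reference point.
            firstPtsUs = ptsUs;
            startWallUs = nowUs;
            return 0;
        }
        long dueUs = startWallUs + (ptsUs - firstPtsUs);
        // A frame that is already late is rendered right away instead of sleeping.
        return Math.max(0, dueUs - nowUs);
    }
}
```

In a decode loop this would be used as `Thread.sleep(pacer.delayUs(pts, System.nanoTime() / 1000) / 1000)` before releasing the output buffer. Because every frame is measured against the same start instant, small sleep inaccuracies do not accumulate the way frame-to-frame deltas can.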

// Encoding camera data to H.264

final int millisPerframe = 1000 / 20;
long lastPush = 0;

@Override
public void run() {
    MediaCodec.BufferInfo bufferInfo = new MediaCodec.BufferInfo();
    int outputBufferIndex = 0;
    byte[] mPpsSps = new byte[0];
    byte[] h264 = new byte[mWidth * mHeight];
    do {
        outputBufferIndex = mMediaCodec.dequeueOutputBuffer(bufferInfo, 10000); // fetch encoded data from the codec
        if (outputBufferIndex == MediaCodec.INFO_TRY_AGAIN_LATER) {
            // no output available yet
        } else if (outputBufferIndex == MediaCodec.INFO_OUTPUT_BUFFERS_CHANGED) {
            // not expected for an encoder
            outputBuffers = mMediaCodec.getOutputBuffers();
        } else if (outputBufferIndex == MediaCodec.INFO_OUTPUT_FORMAT_CHANGED) {
            synchronized (HWConsumer.this) {
                newFormat = mMediaCodec.getOutputFormat();
                EasyMuxer muxer = mMuxer;
                if (muxer != null) {
                    // should happen before receiving buffers, and should only happen once
                    muxer.addTrack(newFormat, true);
                }
            }
        } else if (outputBufferIndex < 0) {
            // let's ignore it
        } else {
            ByteBuffer outputBuffer;
            if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.LOLLIPOP) {
                outputBuffer = mMediaCodec.getOutputBuffer(outputBufferIndex);
            } else {
                outputBuffer = outputBuffers[outputBufferIndex];
            }
            outputBuffer.position(bufferInfo.offset);
            outputBuffer.limit(bufferInfo.offset + bufferInfo.size);
            EasyMuxer muxer = mMuxer;
            if (muxer != null) {
                muxer.pumpStream(outputBuffer, bufferInfo, true);
            }

            boolean sync = false;
            if ((bufferInfo.flags & MediaCodec.BUFFER_FLAG_CODEC_CONFIG) != 0) { // the codec emitted SPS and PPS
                sync = (bufferInfo.flags & MediaCodec.BUFFER_FLAG_SYNC_FRAME) != 0; // is the config buffer also a sync frame?
                if (!sync) { // not a sync frame: the buffer holds only SPS/PPS, so cache it
                    byte[] temp = new byte[bufferInfo.size];
                    outputBuffer.get(temp);
                    mPpsSps = temp;
                    mMediaCodec.releaseOutputBuffer(outputBufferIndex, false);
                    continue; // wait for the next buffer
                } else {
                    mPpsSps = new byte[0];
                }
            }
            sync |= (bufferInfo.flags & MediaCodec.BUFFER_FLAG_SYNC_FRAME) != 0; // is this a key frame?
            int len = mPpsSps.length + bufferInfo.size;
            if (len > h264.length) {
                h264 = new byte[len];
            }
            if (sync) {
                // Key frame. The original fragment omits the copy; given how len is computed,
                // the assumed intent is to prepend the cached SPS/PPS to the frame.
                System.arraycopy(mPpsSps, 0, h264, 0, mPpsSps.length);
                outputBuffer.get(h264, mPpsSps.length, bufferInfo.size);
                if (BuildConfig.DEBUG) {
                    Log.i(TAG, String.format("push i video stamp:%d", bufferInfo.presentationTimeUs / 1000));
                }
            } else { // non-key frames are copied out as they are
                outputBuffer.get(h264, 0, bufferInfo.size);
                if (BuildConfig.DEBUG) {
                    Log.i(TAG, String.format("push video stamp:%d", bufferInfo.presentationTimeUs / 1000));
                }
            }
            mMediaCodec.releaseOutputBuffer(outputBufferIndex, false);
        }
    } while (mVideoStarted);
}

@Override
public int onVideo(byte[] data, int format) {
    if (!mVideoStarted) return 0;
    try {
        if (lastPush == 0) {
            lastPush = System.currentTimeMillis();
        }
        long time = System.currentTimeMillis() - lastPush;
        if (time >= 0) {
            time = millisPerframe - time;
            if (time > 0) Thread.sleep(time / 2);
        }
        if (format == ImageFormat.YV12) {
            JNIUtil.yV12ToYUV420P(data, mWidth, mHeight);
        } else {
            JNIUtil.nV21To420SP(data, mWidth, mHeight);
        }
        int bufferIndex = mMediaCodec.dequeueInputBuffer(0);
        if (bufferIndex >= 0) {
            ByteBuffer buffer = null;
            if (android.os.Build.VERSION.SDK_INT >= android.os.Build.VERSION_CODES.LOLLIPOP) {
                buffer = mMediaCodec.getInputBuffer(bufferIndex);
            } else {
                buffer = inputBuffers[bufferIndex];
            }
            buffer.clear();
            buffer.put(data);
            buffer.clear();
            mMediaCodec.queueInputBuffer(bufferIndex, 0, data.length, System.nanoTime() / 1000, MediaCodec.BUFFER_FLAG_KEY_FRAME); // flag the input as containing a key frame
        }
        if (time > 0) Thread.sleep(time / 2); // extra delay to hold the target frame rate
        lastPush = System.currentTimeMillis();
    } catch (InterruptedException ex) {
        ex.printStackTrace();
    }
    return 0;
}

/**
 * Initializes the encoder.
 */
private void startMediaCodec() throws IOException {
    /*
                        SD (Low quality)  SD (High quality)  HD 720p        HD 1080p
     Video resolution   320 x 240 px      720 x 480 px       1280 x 720 px  1920 x 1080 px
     Video frame rate   20 fps            30 fps             30 fps         30 fps
     Video bitrate      384 Kbps          2 Mbps             4 Mbps         10 Mbps
     */
    int framerate = 20;
    // if (width == 640 || height == 640)
    //     bitrate = 2000000;
    // else if (width == 1280 || height == 1280)
    //     bitrate = 4000000;
    // else
    //     bitrate = 2 * width * height;
    int bitrate = (int) (mWidth * mHeight * 20 * 2 * 0.05f);
    if (mWidth >= 1920 || mHeight >= 1920) bitrate *= 0.3;
    else if (mWidth >= 1280 || mHeight >= 1280) bitrate *= 0.4;
    else if (mWidth >= 720 || mHeight >= 720) bitrate *= 0.6;

    EncoderDebugger debugger = EncoderDebugger.debug(mContext, mWidth, mHeight);
    mVideoConverter = debugger.getNV21Convertor();
    mMediaCodec = MediaCodec.createByCodecName(debugger.getEncoderName());

    MediaFormat mediaFormat = MediaFormat.createVideoFormat("video/avc", mWidth, mHeight);
    mediaFormat.setInteger(MediaFormat.KEY_BIT_RATE, bitrate);
    mediaFormat.setInteger(MediaFormat.KEY_FRAME_RATE, framerate);
    mediaFormat.setInteger(MediaFormat.KEY_COLOR_FORMAT, debugger.getEncoderColorFormat());
    mediaFormat.setInteger(MediaFormat.KEY_I_FRAME_INTERVAL, 1);
    mMediaCodec.configure(mediaFormat, null, null, MediaCodec.CONFIGURE_FLAG_ENCODE);
    mMediaCodec.start();

    // Ask the encoder to emit a sync frame as soon as possible.
    Bundle params = new Bundle();
    params.putInt(MediaCodec.PARAMETER_KEY_REQUEST_SYNC_FRAME, 0);
    if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.KITKAT) {
        mMediaCodec.setParameters(params);
    }
}
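The bitrate rule used in startMediaCodec can be isolated into a pure function, which makes it easier to see what it yields for a given resolution. The sketch below restates the same arithmetic; the class name `BitrateEstimator` and the `frameRate` parameter are additions for illustration (the original hard-codes 20 fps), and the scaling factors are the author's heuristic rather than any standard.

```java
/** Hypothetical helper restating the bitrate heuristic from startMediaCodec. */
final class BitrateEstimator {
    private BitrateEstimator() {}

    /**
     * Estimates a target bitrate in bits per second.
     * Base value: width * height * frameRate * 2 * 0.05, then scaled down for larger frames.
     */
    static int estimate(int width, int height, int frameRate) {
        int bitrate = (int) (width * height * frameRate * 2 * 0.05f);
        int longSide = Math.max(width, height);
        if (longSide >= 1920) {
            bitrate *= 0.3;   // compound assignment narrows the double result back to int
        } else if (longSide >= 1280) {
            bitrate *= 0.4;
        } else if (longSide >= 720) {
            bitrate *= 0.6;
        }
        return bitrate;
    }
}
```

With these factors, `estimate(1280, 720, 20)` comes out at roughly 0.74 Mbps and `estimate(320, 240, 20)` at roughly 0.15 Mbps, both well below the 4 Mbps and 384 Kbps figures in the comment table, so the heuristic favors small streams over quality.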

// H.264 encoding: capturing the SPS and PPS produced by the encoder

inputBuffers = mMediaCodec.getInputBuffers();
outputBuffers = mMediaCodec.getOutputBuffers();
int bufferIndex = mMediaCodec.dequeueInputBuffer(0);
if (bufferIndex >= 0) {
    inputBuffers[bufferIndex].clear();
    mConvertor.convert(data, inputBuffers[bufferIndex]);
    mMediaCodec.queueInputBuffer(bufferIndex, 0, inputBuffers[bufferIndex].position(), System.nanoTime() / 1000, 0);

    MediaCodec.BufferInfo bufferInfo = new MediaCodec.BufferInfo();
    int outputBufferIndex = mMediaCodec.dequeueOutputBuffer(bufferInfo, 0);
    while (outputBufferIndex >= 0) {
        ByteBuffer outputBuffer = outputBuffers[outputBufferIndex];
        // NAL unit type: low five bits of the byte after the 4-byte start code.
        // The original mask 0x07 maps PPS (type 8) to 0, so "type == 8" could never match.
        int type = outputBuffer.get(4) & 0x1F;
        if (type == 7 || type == 8) { // SPS or PPS
            byte[] outData = new byte[bufferInfo.size];
            outputBuffer.get(outData);
            mPpsSps = outData;

            ArrayList<Integer> posLists = new ArrayList<>(2);
            for (int i = 0; i < bufferInfo.size - 3; i++) { // locate the SPS and PPS start codes
                if (outData[i] == 0 && outData[i + 1] == 0 && outData[i + 2] == 0 && outData[i + 3] == 1) {
                    posLists.add(i);
                }
            }
            int sps_pos = posLists.get(0);
            int pps_pos = posLists.get(1);
            posLists.clear();
            posLists = null;

            // csd-0 is the SPS: everything from the first start code up to the second one.
            ByteBuffer csd0 = ByteBuffer.allocate(pps_pos - sps_pos);
            csd0.put(outData, sps_pos, pps_pos - sps_pos);
            csd0.clear();
            mCSD0 = csd0;
            LogUtil.e(TAG, String.format("CSD-0 searched!!!"));

            // csd-1 is the PPS: everything from the second start code to the end.
            ByteBuffer csd1 = ByteBuffer.allocate(outData.length - pps_pos);
            csd1.put(outData, pps_pos, outData.length - pps_pos);
            csd1.clear();
            mCSD1 = csd1;
            LogUtil.e(TAG, String.format("CSD-1 searched!!!"));

            LocalBroadcastManager.getInstance(mApplicationContext).sendBroadcast(new Intent(ACTION_H264_SPS_PPS_GOT));
        } else if (type == 5) {
            // this is a key frame (IDR)
            if (mEasyMuxer != null && !isRecordPause) {
                bufferInfo.presentationTimeUs = TimeStamp.getInstance().getCurrentTimeUS();
                mEasyMuxer.pumpStream(outputBuffer, bufferInfo, true); // save the video to local storage
                isWaitKeyFrame = false; // key frame received, stop waiting for one
            }
        } else {
            outputBuffer.get(h264, 0, bufferInfo.size);
            if (System.currentTimeMillis() - timeStamp >= 3000) {
                // request a fresh key frame every three seconds
                timeStamp = System.currentTimeMillis();
                if (Build.VERSION.SDK_INT >= 23) {
                    Bundle params = new Bundle();
                    params.putInt(MediaCodec.PARAMETER_KEY_REQUEST_SYNC_FRAME, 0);
                    mMediaCodec.setParameters(params);
                }
            }
            if (mEasyMuxer != null && !isRecordPause && !isWaitKeyFrame) {
                bufferInfo.presentationTimeUs = TimeStamp.getInstance().getCurrentTimeUS();
                mEasyMuxer.pumpStream(outputBuffer, bufferInfo, true); // save the video to local storage
            }
        }
        mMediaCodec.releaseOutputBuffer(outputBufferIndex, false);
        outputBufferIndex = mMediaCodec.dequeueOutputBuffer(bufferInfo, 0);
    }
} else {
    Log.e(TAG, "No buffer available !");
}
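The start-code search and the NAL type check in this fragment can be tried off-device with plain byte arrays. The sketch below is a standalone illustration; the class `AnnexB` and its methods are hypothetical names. It assumes 4-byte start codes (00 00 00 01), as the fragment does, and takes the NAL unit type from the low five bits of the header byte, which is what distinguishes SPS (7), PPS (8) and IDR slices (5).

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper for scanning an H.264 Annex-B byte stream. */
final class AnnexB {
    private AnnexB() {}

    /** Returns the offset of every 4-byte start code 00 00 00 01 found in data. */
    static List<Integer> startCodeOffsets(byte[] data) {
        List<Integer> offsets = new ArrayList<>();
        for (int i = 0; i + 3 < data.length; i++) {
            if (data[i] == 0 && data[i + 1] == 0 && data[i + 2] == 0 && data[i + 3] == 1) {
                offsets.add(i);
            }
        }
        return offsets;
    }

    /** Returns the NAL unit type of the unit that follows the start code at the given offset. */
    static int nalType(byte[] data, int startCodeOffset) {
        // The header byte sits right after the 4-byte start code; the type is its low 5 bits.
        return data[startCodeOffset + 4] & 0x1F;
    }
}
```

A mask of 0x07 would not be enough here: a PPS header byte such as 0x68 gives `0x68 & 0x07 == 0`, whereas `0x68 & 0x1F == 8`.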

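Android NDK: adding a dynamic timestamp watermark to video with OpenCV and MediaCodec hardware codecs

Study notes: Android NDK, OpenCV and MediaCodec hardware encoding/decoding for video watermarks

- Notes from studying video processing on Android. An earlier project required a timestamp watermark on Android video; I wanted to learn more about video codecs, live streaming and effects, and to get familiar with the NDK along the way.

What can OpenCV do, and why integrate it?

OpenCV is a computer vision library; put plainly, it processes and recognizes images. With your own algorithms on top, it supports more advanced work such as machine vision, AR, and face recognition.

Android ships its own Bitmap and Canvas APIs, which can be hardware-accelerated through OpenGL underneath, so rendering is fast, in many cases faster than OpenCV.

However, Android offers few APIs for the YUV data produced by video and camera capture, and converting YUV to RGB to Bitmap and back is very slow. OpenCV, by contrast, operates on the raw data directly: a 420sp-to-BGR conversion takes very little time, and once the image has been processed it can go straight back to YUV and be fed to the encoder.

MediaCodec and ffmpeg

MediaCodec is built into the system. On phones that have a hardware video codec, MediaCodec exposes it, and hardware encoding should in theory be faster than software encoding with ffmpeg.

YUV420p, YUV420sp, NV21, YV12

These names, along with the aliases used on different systems, all describe 4:2:0 YUV data; put simply, they differ only in how the samples are arranged in memory. A web search will turn up the details.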
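To make the layout differences concrete, the sketch below computes where the chroma samples live for four common 4:2:0 layouts. It is an illustration under simplifying assumptions: the class `Yuv420Layout` is hypothetical, width and height are even, and the planes are tightly packed with no row padding (real camera buffers may use aligned strides).

```java
/** Hypothetical helper describing tightly packed 4:2:0 layouts (no stride padding). */
final class Yuv420Layout {
    enum Format { I420, YV12, NV12, NV21 }

    private Yuv420Layout() {}

    /** Total bytes per frame: a full-size Y plane plus two quarter-size chroma planes. */
    static int frameSize(int width, int height) {
        return width * height * 3 / 2;
    }

    /** Byte offset of the i-th U sample, 0 <= i < width * height / 4. */
    static int uOffset(Format f, int width, int height, int i) {
        int ySize = width * height;
        int quarter = ySize / 4;
        switch (f) {
            case I420: return ySize + i;               // planar: Y, then U, then V
            case YV12: return ySize + quarter + i;     // planar: Y, then V, then U
            case NV12: return ySize + 2 * i;           // semi-planar: Y, then UVUV...
            default:   return ySize + 2 * i + 1;       // NV21 semi-planar: Y, then VUVU...
        }
    }

    /** Byte offset of the i-th V sample, 0 <= i < width * height / 4. */
    static int vOffset(Format f, int width, int height, int i) {
        int ySize = width * height;
        int quarter = ySize / 4;
        switch (f) {
            case I420: return ySize + quarter + i;
            case YV12: return ySize + i;
            case NV12: return ySize + 2 * i + 1;
            default:   return ySize + 2 * i;           // NV21
        }
    }
}
```

I420 is what is usually meant by YUV420p, and NV12/NV21 are the two YUV420sp variants; all four occupy the same number of bytes per frame.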

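freetype

A C library for rendering fonts that can load TTF files. OpenCV has built-in fonts, but they do not support Chinese, so drawing Chinese text requires integrating freetype. Drawing Chinese text with ffmpeg requires it as well.

Integrating OpenCV

OpenCV has an Android release that includes Java code, but the Java code was of no use to me. First download OpenCV4Android, then either enable "Include C++ support" for the project in Android Studio or add externalNativeBuild to build.gradle: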

android {
    defaultConfig {
        applicationId "com.dxtx.codec"
        minSdkVersion 15
        targetSdkVersion 22
        versionCode 1
        versionName "1.0"
        externalNativeBuild {
            cmake {
                cppFlags ""
            }
        }
        ndk {
            abiFilters "armeabi", "arm64-v8a"
        }
    }
    externalNativeBuild {
        cmake {
            path 'CMakeLists.txt'
        }
    }
}

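Very old versions of Android Studio cannot build NDK code through CMakeLists. I used:

classpath 'com.android.tools.build:gradle:3.0.1'

Gradle version: gradle-4.1-all.zip

NDK version: r15

If the NDK is too old, not every ABI can be built and 64-bit targets are unavailable. I suspect most people are less put off than I am by having to go through a proxy to download large files, and are already on the latest version.

The CMakeLists.txt configuration that pulls in OpenCV's native/jni code is as follows: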

cmake_minimum_required(VERSION 3.4.1)

add_library( # Sets the name of the library.
        dxtx
        SHARED
        src/main/jni/bs.cpp
        )

set(OpenCV_DIR C:/Users/user/Downloads/opencv-3.4.2-android-sdk/OpenCV-android-sdk/sdk/native/jni)
find_package(OpenCV REQUIRED)

target_include_directories(
        dxtx
        PRIVATE
        C:/Users/user/Downloads/opencv-3.4.2-android-sdk/OpenCV-android-sdk/sdk/native/jni/include
        )

find_library(
        log-lib
        log )

target_link_libraries(
        dxtx
        ${OpenCV_LIBS}
        ${log-lib} )

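OpenCV is referenced through an absolute path on my machine rather than placed inside the project; it is only needed at build time, and the whole folder is too large.

Once this is configured, build the project and OpenCV becomes available from JNI. Below is the key code for drawing text onto YUV420sp data.

First create a Java class: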

public class Utils {
    static {
        System.loadLibrary("dxtx");
    }

    public static native void drawText(byte[] data, int w, int h, String text);
}

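The drawText method is highlighted in red because the native implementation does not exist yet; press Alt+Enter to generate the stub automatically.

bs.cpp: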

#include <jni.h>
#include <android/log.h>
#include <opencv2/imgproc.hpp>
#include <opencv2/imgcodecs.hpp>
#include <opencv2/core.hpp>
#include <opencv2/highgui.hpp>

using namespace cv;

extern "C"
JNIEXPORT void JNICALL
Java_com_dxtx_test_Utils_drawText(JNIEnv *env, jclass type, jbyteArray data_, jint w,
                                  jint h, jstring text_) {
    jbyte *data = env->GetByteArrayElements(data_, NULL);
    const char *text = env->GetStringUTFChars(text_, 0);

    Mat dst;
    Mat yuv(h * 3 / 2, w, CV_8UC1, (uchar *) data);
    // Convert to BGR, which the drawing functions can work on
    cvtColor(yuv, dst, CV_YUV420sp2BGR);
    // printMat(dst);

    // Rotate the image; width and height swap
    rotate(dst, dst, ROTATE_90_CLOCKWISE);
    w = dst.cols;
    h = dst.rows;

    int baseline;
    // Measure the text with the same font that putText uses below
    Size size = getTextSize(text, FONT_HERSHEY_SIMPLEX, 0.6, 1, &baseline);
    CvRect rect = cvRect(0, 0, size.width + 10, size.height * 1.5);
    // Gray background
    rectangle(dst, rect, Scalar(200, 200, 200), -1, 8, 0);
    // Text
    putText(dst, text, Point(0, size.height), FONT_HERSHEY_SIMPLEX, 0.6, Scalar(255, 255, 255), 1, 8, 0);

    // Convert to YV12
    cvtColor(dst, dst, CV_BGR2YUV_YV12);
    // printMat(dst);

    // YV12 and NV21 store chroma differently, so the chroma samples must be re-interleaved
    jbyte *out = new jbyte[w * h / 2];
    int ulen = w * h / 4;
    for (int i = 0; i < ulen; i += 1) {
        // From YYYYYYYY VVUU (YV12, planar) to YYYYYYYY VUVU (NV21, interleaved)
        out[i * 2 + 1] = dst.at<uchar>(w * h + ulen + i); // U, from the second chroma plane
        out[i * 2] = dst.at<uchar>(w * h + i);            // V, from the first chroma plane
    }

    // Write the result back into the original array
    env->SetByteArrayRegion(data_, 0, w * h, (const jbyte *) dst.data);
    env->SetByteArrayRegion(data_, w * h, w * h / 2, out);

    // Free native resources. JNI_ABORT avoids copying the stale input back over the result.
    delete[] out;
    env->ReleaseStringUTFChars(text_, text);
    env->ReleaseByteArrayElements(data_, data, JNI_ABORT);
}

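This code goes from YUV, through text rendering, and back to YUV in a single pass, which saves a lot of time. On a phone with an arm64-v8a CPU it takes only about 30 ms.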
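The chroma re-interleaving step at the end of the native function is easy to get wrong, so here is the same YV12-to-NV21 repacking as a plain Java sketch that can be checked with small arrays. The class `ChromaRepack` is a hypothetical name, and the code assumes even dimensions and tightly packed planes.

```java
/** Hypothetical helper: repacks a tightly packed YV12 frame into NV21. */
final class ChromaRepack {
    private ChromaRepack() {}

    static byte[] yv12ToNv21(byte[] yv12, int width, int height) {
        int ySize = width * height;
        int quarter = ySize / 4; // number of samples in each chroma plane
        byte[] nv21 = new byte[ySize + 2 * quarter];

        // The luma plane is identical in both layouts.
        System.arraycopy(yv12, 0, nv21, 0, ySize);

        // YV12 stores all V samples, then all U samples; NV21 interleaves them as V, U, V, U...
        for (int i = 0; i < quarter; i++) {
            nv21[ySize + 2 * i] = yv12[ySize + i];               // V from the first chroma plane
            nv21[ySize + 2 * i + 1] = yv12[ySize + quarter + i]; // U from the second chroma plane
        }
        return nv21;
    }
}
```

For a 4x2 frame with V samples {20, 21} and U samples {30, 31}, the chroma part of the output is 20, 30, 21, 31.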

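Encoding then uses the same MediaCodec approach as before; this step is largely unchanged.

Later I integrated freetype, which made Chinese watermarks possible. It adds one more step and a little more processing time, but for a timestamp watermark freetype is unnecessary, since a timestamp contains no Chinese characters.

Next I want to find out whether it is feasible to capture data directly from the camera, process it in a buffer, and record the video directly.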
