Deploying cerebro on k8s to access an Elasticsearch cluster deployed outside the cluster

Posted by 临江仙我亦是行人



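Requirement: the company uses Alibaba Cloud's hosted Elasticsearch service. Alibaba Cloud provides Kibana for visual management but not cerebro, so we install cerebro in our k8s cluster and use it to inspect the Elasticsearch service that runs outside the cluster.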

1 Elasticsearch information

Check the Elasticsearch nodes:

curl -u esuser:123456 http://192.168.1.101:9200/_cat/nodes
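For scripted checks, the same request can be built in Python. This is a minimal standard-library sketch, assuming the address and credentials from the curl command above; the helper names `cat_nodes_request` and `fetch_cat_nodes` are hypothetical. `curl -u` sends an HTTP Basic `Authorization` header, and the sketch builds that header explicitly.

```python
import base64
import urllib.request


def cat_nodes_request(base_url: str, user: str, password: str) -> urllib.request.Request:
    """Build the GET request that `curl -u user:password <base_url>/_cat/nodes` sends.

    Hypothetical helper for illustration; it only builds the request and does not
    touch the network.
    """
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    req = urllib.request.Request(f"{base_url.rstrip('/')}/_cat/nodes", method="GET")
    # HTTP Basic auth: "Basic " + base64("user:password"), the same header curl -u produces.
    req.add_header("Authorization", f"Basic {token}")
    return req


def fetch_cat_nodes(base_url: str, user: str, password: str, timeout: float = 10.0) -> str:
    """Send the request and return the plain-text node table (needs network access)."""
    with urllib.request.urlopen(cat_nodes_request(base_url, user, password), timeout=timeout) as resp:
        return resp.read().decode("utf-8")
```

Run from a host that can reach Elasticsearch, `print(fetch_cat_nodes("http://192.168.1.101:9200", "esuser", "123456"))` should print the same node table as the curl command.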

2 Configuration in the k8s cluster

2.1 Create an external Service

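Under the hood, this means creating the Endpoints object by hand. If the external service has a domain name, an ExternalName Service is simpler, but the manual-Endpoints approach is more general: it also works for a bare IP address like the one used here.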
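For the domain-name case, an ExternalName Service is a single object with no Endpoints. The sketch below builds one as a plain Python dict and prints it as JSON, which `kubectl apply -f -` accepts as well as YAML. The helper name and the host `es.example.com` are hypothetical placeholders. Cluster DNS answers for the Service name with a CNAME to that host, so clients still connect to the external host's real port (9200 here), and ExternalName needs a DNS name: it does not help when the external side is only a bare IP such as 192.168.1.101.

```python
import json


def external_name_service(name: str, namespace: str, external_host: str) -> dict:
    """Return an ExternalName Service manifest (hypothetical helper).

    DNS lookups of <name>.<namespace>.svc return a CNAME to external_host;
    no selector, no Endpoints object, and no kube-proxy port mapping are involved.
    """
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {"type": "ExternalName", "externalName": external_host},
    }


if __name__ == "__main__":
    # Placeholder domain; replace it with the real Elasticsearch endpoint.
    svc = external_name_service("ali-external-elastic-svc", "bi-dev", "es.example.com")
    # Usage: python3 this_script.py | kubectl apply -f -
    print(json.dumps(svc, indent=2))
```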

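Endpoints is a Kubernetes resource object, stored in etcd, that records the addresses of all pods backing a Service. Normally it is generated automatically from the selector in the Service spec. Endpoints is the set of endpoints that actually serve the Service; in other words, a Service is linked to its pods through Endpoints. When a Service has no selector, Kubernetes does not manage Endpoints for it, so you can create an Endpoints object with the same name and namespace yourself and list an address outside the cluster in it. That is what the Rancher-created objects below do.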
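The pattern Rancher generates can also be written by hand. A minimal sketch, assuming the name, namespace, IP, and port shown in the outputs below; the helper name is hypothetical, and the `List` wrapper is only a convenience so that one `kubectl apply -f -` creates both objects. The important points are that the Service has no `selector`, the Endpoints object has exactly the same name and namespace, and the port names match.

```python
import json


def external_service_with_endpoints(name: str, namespace: str, ip: str, port: int,
                                    port_name: str = "external-elastic-port") -> dict:
    """Build a selector-less Service and a hand-written Endpoints object (hypothetical helper).

    With no selector, Kubernetes does not manage Endpoints for the Service, so the
    Endpoints object of the same name and namespace supplies the backend address.
    """
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Headless, like the Rancher-generated Service: DNS returns the endpoint IP itself.
            "clusterIP": "None",
            "ports": [{"name": port_name, "port": port, "protocol": "TCP", "targetPort": port}],
        },
    }
    endpoints = {
        "apiVersion": "v1",
        "kind": "Endpoints",
        "metadata": {"name": name, "namespace": namespace},  # must equal the Service's
        "subsets": [{
            "addresses": [{"ip": ip}],
            # The port name must match the Service port name.
            "ports": [{"name": port_name, "port": port, "protocol": "TCP"}],
        }],
    }
    # A v1 List lets a single `kubectl apply -f -` create both objects.
    return {"apiVersion": "v1", "kind": "List", "items": [service, endpoints]}


if __name__ == "__main__":
    doc = external_service_with_endpoints("ali-external-elastic-svc", "bi-dev", "192.168.1.101", 9200)
    # Usage: python3 this_script.py | kubectl apply -f -
    print(json.dumps(doc, indent=2))
```

Dropping `clusterIP: None` would give an ordinary ClusterIP Service instead, and kube-proxy would forward the virtual IP to the same endpoint. Either way, pods in `bi-dev` can reach Elasticsearch at `http://ali-external-elastic-svc:9200`.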

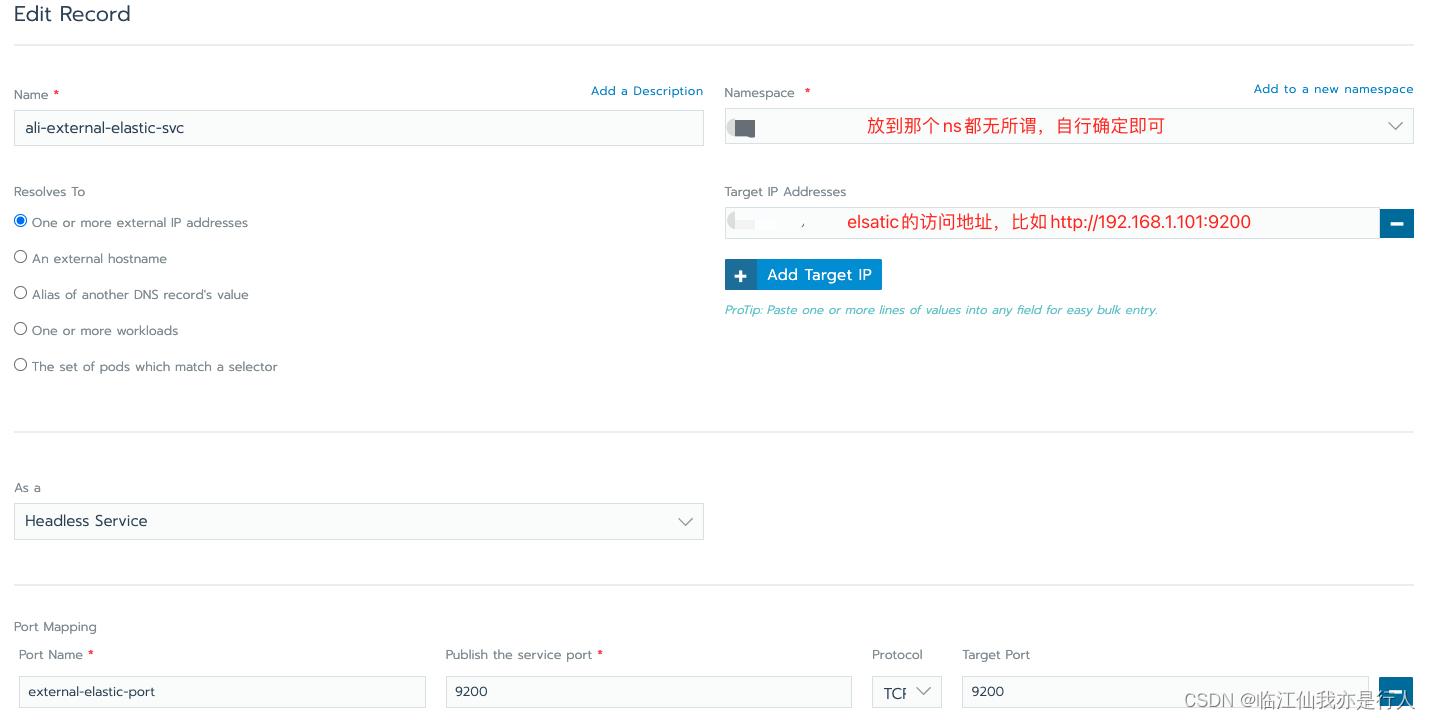

Rancher settings (screenshot above)

View the Service YAML:

root@ai:~# kubectl -n bi-dev get svc ali-external-elastic-svc -o yaml
apiVersion: v1
kind: Service
metadata:
  annotations:
    field.cattle.io/creatorId: u-sr2hlj3sdd
    field.cattle.io/ipAddresses: '["192.168.1.101"]'
    field.cattle.io/targetDnsRecordIds: "null"
    field.cattle.io/targetWorkloadIds: "null"
  creationTimestamp: "2022-07-22T10:25:23Z"
  labels:
    cattle.io/creator: norman
  name: ali-external-elastic-svc
  namespace: bi-dev
  resourceVersion: "792651920"
  selfLink: /api/v1/namespaces/log/services/ali-external-elastic-svc
  uid: 9885e2f8-09a8-11ed-b08a-00163e104bcb
spec:
  clusterIP: None
  ports:
  - name: external-elastic-port
    port: 9200
    protocol: TCP
    targetPort: 9200
  sessionAffinity: None
  type: ClusterIP
status:
  loadBalancer: {}

View the Endpoints:

root@ai:~# kubectl -n bi-dev get ep ali-external-elastic-svc -o yaml
apiVersion: v1
kind: Endpoints
metadata:
  creationTimestamp: "2022-07-22T10:25:23Z"
  labels:
    cattle.io/creator: norman
  name: ali-external-elastic-svc
  namespace: bi-dev
  ownerReferences:
  - apiVersion: v1
    controller: true
    kind: Service
    name: ali-external-elastic-svc
    uid: 9885e2f8-09a8-11ed-b08a-00163e104bcb
  resourceVersion: "792651923"
  selfLink: /api/v1/namespaces/log/endpoints/ali-external-elastic-svc
  uid: 98877a10-09a8-11ed-9356-00163e12ec0c
subsets:
- addresses:
  - ip: 192.168.1.101
  ports:
  - name: external-elastic-port
    port: 9200
    protocol: TCP

2.2 Deploy cerebro

The cerebro configuration file, stored in a ConfigMap:

root@ai:~# kubectl -n bi-dev get cm cerebro-application -o yaml
apiVersion: v1
data:
  application.conf: |
    es = {
      gzip = true
    }
    auth = {
      type: basic
      settings {
        username = "admin"
        password = "123456"
      }
    }
    hosts = [
      {
        host = "http://ali-external-elastic-svc:9200"  # address of the external Service (same namespace)
        name = "ali-cerebro"
        auth = {
          username = "esuser"
          password = "123456"
        }
      }
    ]
kind: ConfigMap
metadata:
  creationTimestamp: "2022-07-22T10:18:32Z"
  name: cerebro-application
  namespace: bi-dev
  resourceVersion: "792652495"
  selfLink: /api/v1/namespaces/log/configmaps/cerebro-application
  uid: a32a8ec2-09a7-11ed-b292-00163e0b609f
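If more Elasticsearch clusters are added later, the config can be generated rather than edited by hand. A minimal sketch, not part of the original setup: `render_cerebro_conf` and `cerebro_configmap` are hypothetical helpers that reproduce the structure above (basic auth for the cerebro UI plus a `hosts` list with per-cluster credentials). String values are quoted with `json.dumps`, since HOCON quoted strings use JSON syntax.

```python
import json


def render_cerebro_conf(admin_user: str, admin_password: str, clusters: list) -> str:
    """Render application.conf text; each cluster dict has host, name, username, password."""
    q = json.dumps  # a JSON string literal is also a valid HOCON quoted string
    lines = [
        "es = {",
        "  gzip = true",
        "}",
        "auth = {",
        "  type: basic",
        "  settings {",
        f"    username = {q(admin_user)}",
        f"    password = {q(admin_password)}",
        "  }",
        "}",
        "hosts = [",
    ]
    for c in clusters:
        lines += [
            "  {",
            f"    host = {q(c['host'])}",
            f"    name = {q(c['name'])}",
            "    auth = {",
            f"      username = {q(c['username'])}",
            f"      password = {q(c['password'])}",
            "    }",
            "  }",
        ]
    lines.append("]")
    return "\n".join(lines) + "\n"


def cerebro_configmap(name: str, namespace: str, conf_text: str) -> dict:
    """Wrap the rendered text in a ConfigMap under the key application.conf."""
    return {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": name, "namespace": namespace},
        "data": {"application.conf": conf_text},
    }


if __name__ == "__main__":
    conf = render_cerebro_conf("admin", "123456", [{
        "host": "http://ali-external-elastic-svc:9200",
        "name": "ali-cerebro",
        "username": "esuser",
        "password": "123456",
    }])
    # Usage: python3 this_script.py | kubectl apply -f -
    print(json.dumps(cerebro_configmap("cerebro-application", "bi-dev", conf), indent=2))
```

Alternatively, save the text as `application.conf` and create the ConfigMap with `kubectl -n bi-dev create configmap cerebro-application --from-file=application.conf`. cerebro loads its configuration at startup, so restart the pod (for example with `kubectl -n bi-dev rollout restart deployment cerebro`) after changing it.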

The Deployment, Service, and Ingress for cerebro:

cat <<EOF | kubectl apply -f - -n bi-dev
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: cerebro
  name: cerebro
  namespace: bi-dev
spec:
  replicas: 1
  selector:
    matchLabels:
      app: cerebro
  template:
    metadata:
      labels:
        app: cerebro
      name: cerebro
    spec:
      containers:
      - image: lmenezes/cerebro:0.8.3
        imagePullPolicy: IfNotPresent
        name: cerebro
        # Load the mounted config; without this flag cerebro reads conf/application.conf from its install directory
        args: ["-Dconfig.file=/etc/cerebro/application.conf"]
        resources:
          limits:
            cpu: 1
            memory: 1Gi
          requests:
            cpu: 1
            memory: 1Gi
        volumeMounts:
        - name: cerebro-conf
          mountPath: /etc/cerebro
      volumes:
      - name: cerebro-conf
        configMap:
          name: cerebro-application
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: cerebro
  name: cerebro
  namespace: bi-dev
spec:
  ports:
  - port: 9000
    protocol: TCP
    targetPort: 9000
  selector:
    app: cerebro
  type: ClusterIP
---
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: cerebro
  namespace: bi-dev
spec:
  rules:
  - host: ali-cerebro.test.cn
    http:
      paths:
      - backend:
          serviceName: cerebro
          servicePort: 9000
        path: /
EOF
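The `extensions/v1beta1` Ingress API was removed in Kubernetes 1.22, so on newer clusters the Ingress above has to be written against `networking.k8s.io/v1` (available since 1.19). A minimal sketch of the equivalent object as a Python dict, assuming the same host, Service, and port; the helper name is hypothetical, and `ingress_class` is left optional because the original does not say which ingress controller the cluster uses.

```python
import json
from typing import Optional


def cerebro_ingress_v1(name: str, namespace: str, host: str, service_name: str,
                       service_port: int, ingress_class: Optional[str] = None) -> dict:
    """networking.k8s.io/v1 version of the extensions/v1beta1 Ingress (hypothetical helper)."""
    path = {
        "path": "/",
        # pathType is required in v1; Prefix matches "/" and every path below it.
        "pathType": "Prefix",
        # v1beta1 serviceName/servicePort became backend.service.name / backend.service.port.number.
        "backend": {"service": {"name": service_name, "port": {"number": service_port}}},
    }
    spec = {"rules": [{"host": host, "http": {"paths": [path]}}]}
    if ingress_class is not None:
        spec["ingressClassName"] = ingress_class
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name, "namespace": namespace},
        "spec": spec,
    }


if __name__ == "__main__":
    ing = cerebro_ingress_v1("cerebro", "bi-dev", "ali-cerebro.test.cn", "cerebro", 9000)
    # Usage: python3 this_script.py | kubectl apply -f -
    print(json.dumps(ing, indent=2))
```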

Check the pod:

root@ai:~# kubectl -n bi-dev get pod | grep cerebro
cerebro-56ddd6984d-p9jch 1/1 Running 0 17h

2.3 DNS resolution

Point the domain name at the ingress of the k8s cluster:

ali-cerebro.test.cn A 1.1.1.1
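Before the DNS record exists (or to rule out DNS problems), the Ingress rule can be tested by sending a request straight to the ingress address with the virtual host in the `Host` header, which is what the ingress controller routes on. A minimal sketch, assuming the address 1.1.1.1 and the host name from the record above; the helper names are hypothetical.

```python
import urllib.request


def ingress_probe_request(ingress_ip: str, host: str, path: str = "/") -> urllib.request.Request:
    """Build a request to the ingress IP that carries the virtual-host name in Host.

    urllib keeps an explicit Host header instead of deriving one from the URL,
    so this behaves like: curl -H "Host: <host>" http://<ingress_ip><path>
    """
    return urllib.request.Request(f"http://{ingress_ip}{path}", headers={"Host": host}, method="GET")


def probe(ingress_ip: str, host: str, timeout: float = 5.0) -> int:
    """Send the request and return the HTTP status code (needs network access to the ingress)."""
    with urllib.request.urlopen(ingress_probe_request(ingress_ip, host), timeout=timeout) as resp:
        return resp.status
```

`probe("1.1.1.1", "ali-cerebro.test.cn")` returning 200 suggests that the Ingress routes the host to the cerebro Service; note that `urlopen` raises `urllib.error.HTTPError` for 4xx/5xx responses instead of returning them.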

After that, open http://ali-cerebro.test.cn in a browser.
