基于 hugging face 预训练模型的实体识别智能标注方案:生成doccano要求json格式

Posted 汀、

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了基于 hugging face 预训练模型的实体识别智能标注方案:生成doccano要求json格式相关的知识,希望对你有一定的参考价值。

强烈推荐:数据标注平台doccano----简介、安装、使用、踩坑记录_汀、的博客-CSDN博客_doccano

参考:数据标注平台doccano----简介、安装、使用、踩坑记录

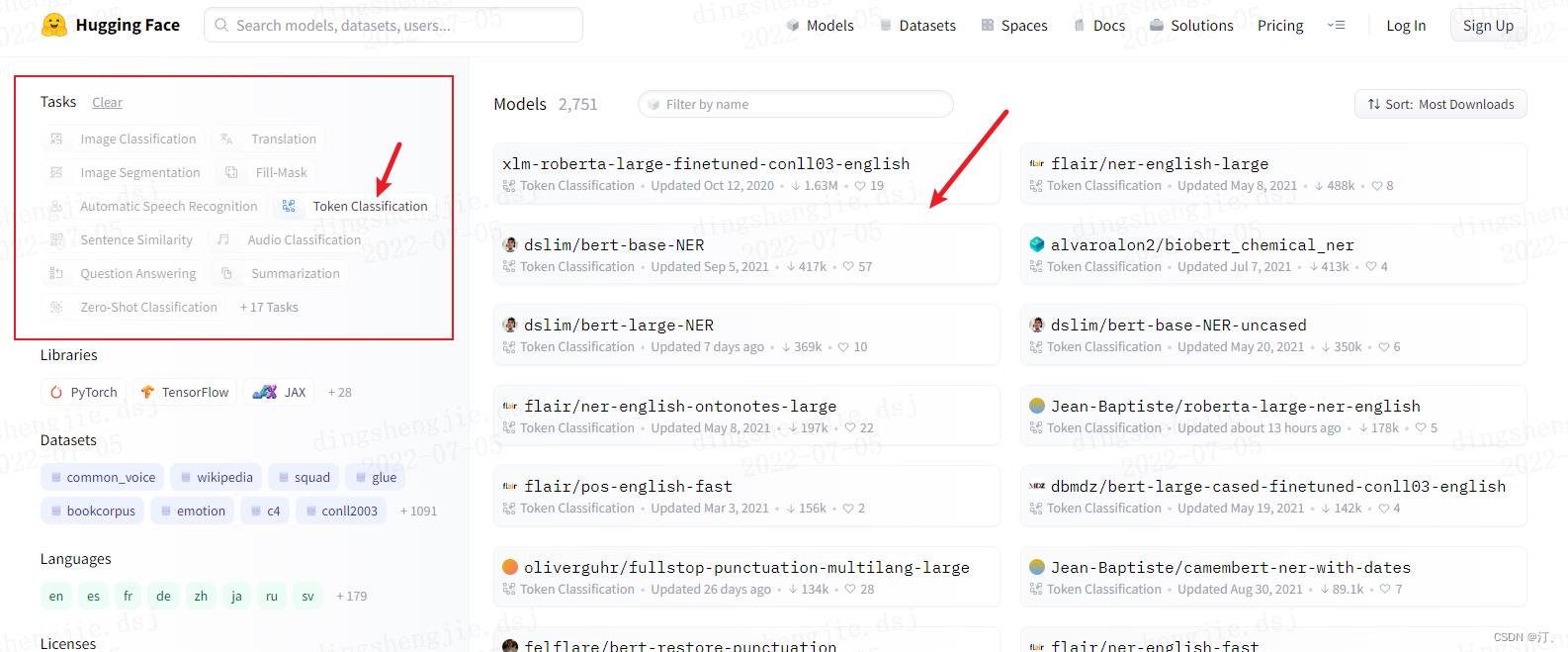

1.hugging face

相关教程直接参考别人的:与训练模型

【Huggingface Transformers】保姆级使用教程—上 - 知乎

【Huggingface Transformers】保姆级使用教程02—微调预训练模型 Fine-tuning - 知乎

huggingface transformers的trainer使用指南 - 知乎

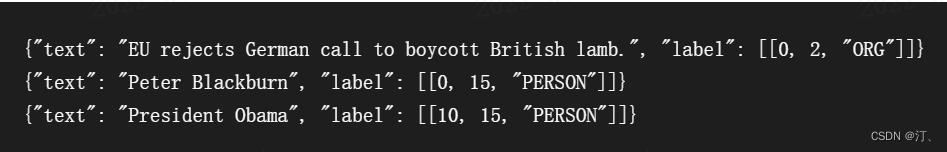

2.doccano标注平台格式要求

doccano平台操作参考文章开头链接:

json格式导入数据格式要求: 实体;包含关系样式展示

"text": "Google was founded on September 4, 1998, by Larry Page and Sergey Brin.",

"entities": [

"id": 0,

"start_offset": 0,

"end_offset": 6,

"label": "ORG"

,

"id": 1,

"start_offset": 22,

"end_offset": 39,

"label": "DATE"

,

"id": 2,

"start_offset": 44,

"end_offset": 54,

"label": "PERSON"

,

"id": 3,

"start_offset": 59,

"end_offset": 70,

"label": "PERSON"

],

"relations": [

"from_id": 0,

"to_id": 1,

"type": "foundedAt"

,

"from_id": 0,

"to_id": 2,

"type": "foundedBy"

,

"from_id": 0,

"to_id": 3,

"type": "foundedBy"

]

3. 实体智能标注+格式转换

3.1 长文本(一个txt长篇)

注释部分包含预训练模型识别实体;以及精灵标注助手格式要求

from transformers import pipeline

import os

from tqdm import tqdm

import pandas as pd

from time import time

import json

def return_single_entity(name, start, end):

return [int(start), int(end), name]

# def return_single_entity(name, word, start, end, id, attributes=[]):

# entity =

# entity['type'] = 'T'

# entity['name'] = name

# entity['value'] = word

# entity['start'] = int(start)

# entity['end'] = int(end)

# entity['attributes'] = attributes

# entity['id'] = int(id)

# return entity

# input_dir = 'E:/datasets/myUIE/inputs'

input_dir = 'C:/Users/admin/Desktop//test_input.txt'

output_dir = 'C:/Users/admin/Desktop//outputs'

tagger = pipeline(task='ner', model='xlm-roberta-large-finetuned-conll03-english',

aggregation_strategy='simple')

keywords = 'PER': '人', 'ORG': '机构' # loc 地理位置 misc 其他类型实体

# for filename in tqdm(input_dir):

# # 读取数据并自动打标

# json_list = []

with open(input_dir, 'r', encoding='utf8') as f:

text = f.readlines()

json_list = [0 for i in range(len(text))]

for t in text:

i = t.strip("\\n").strip("'").strip('"')

named_ents = tagger(i) # 预训练模型

# named_ents = tagger(text)

df = pd.DataFrame(named_ents)

""" 标注结果:entity_group score word start end

0 ORG 0.999997 National Science Board 18 40

1 ORG 0.999997 NSB 42 45

2 ORG 0.999997 NSF 71 74"""

# 放在循环里面,那每次开始新的循环就会重新定义一次,上一次定义的内容就丢了

# json_list = [0 for i in range(len(text))]

entity_list=[]

# entity_list2=[]

for index, elem in df.iterrows():

if not elem.entity_group in keywords:

continue

if elem.end - elem.start <= 1:

continue

entity = return_single_entity(

keywords[elem.entity_group], elem.start, elem.end)

entity_list.append(entity)

# entity_list2.append(entity_list)

json_obj = "text": text[index], "label": entity_list

json_list[index] = json.dumps(json_obj)

# entity_list.append(entity)

# data = json.dumps(json_list)

# json_list.append(data)

with open(f'output_dir/data_2.json', 'w', encoding='utf8') as f:

for line in json_list:

f.write(line+"\\n")

# f.write('\\n'.join(data))

# f.write(str(data))

print('done!')

# 转化为精灵标注助手导入格式(但是精灵标注助手的nlp标注模块有编码的问题,部分utf8字符不能正常显示,会影响标注结果)

# id = 1

# entity_list = ['']

# for index, elem in df.iterrows():

# if not elem.entity_group in keywords:

# continue

# entity = return_single_entity(keywords[elem.entity_group], elem.word, elem.start, elem.end, id)

# id += 1

# entity_list.append(entity)

# python_obj = 'path': f'input_dir/filename',

# 'outputs': 'annotation': 'T': entity_list, "E": [""], "R": [""], "A": [""],

# 'time_labeled': int(1000 * time()), 'labeled': True, 'content': text

# data = json.dumps(python_obj)

# with open(f'output_dir/filename.rstrip(".txt").json', 'w', encoding='utf8') as f:

# f.write(data)识别结果:

"text": "The company was founded in 1852 by Jacob Estey\\n", "label": [[35, 46, "\\u4eba"]]

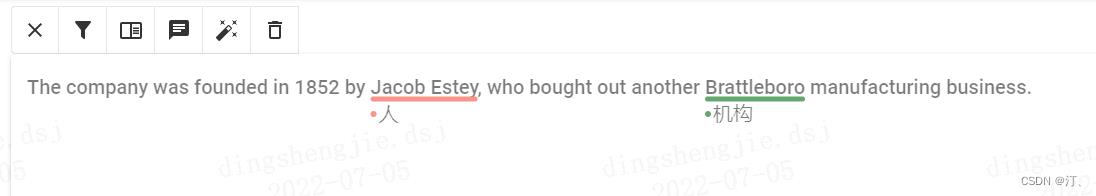

"text": "The company was founded in 1852 by Jacob Estey, who bought out another Brattleboro manufacturing business.", "label": [[35, 46, "\\u4eba"], [71, 82, "\\u673a\\u6784"]]

可以看到label标签是乱码的,不用在意导入到doccano平台后会显示正常

3.2 短文本多个(txt文件)

from transformers import pipeline

import os

from tqdm import tqdm

import pandas as pd

import json

def return_single_entity(name, start, end):

return [int(start), int(end), name]

input_dir = 'C:/Users/admin/Desktop/inputs_test'

output_dir = 'C:/Users/admin/Desktop//outputs'

tagger = pipeline(task='ner', model='xlm-roberta-large-finetuned-conll03-english', aggregation_strategy='simple')

json_list = []

keywords = 'PER': '人', 'ORG': '机构'

for filename in tqdm(os.listdir(input_dir)[:3]):

# 读取数据并自动打标

with open(f'input_dir/filename', 'r', encoding='utf8') as f:

text = f.read()

named_ents = tagger(text)

df = pd.DataFrame(named_ents)

# 转化为doccano的导入格式

entity_list = []

for index, elem in df.iterrows():

if not elem.entity_group in keywords:

continue

if elem.end - elem.start <= 1:

continue

entity = return_single_entity(keywords[elem.entity_group], elem.start, elem.end)

entity_list.append(entity)

file_obj = 'text': text, 'label': entity_list

json_obj = json.dumps(file_obj)

json_list.append(json_obj)

with open(f'output_dir/data3.json', 'w', encoding='utf8') as f:

f.write('\\n'.join(json_list))

print('done!')

3.3 含标注精灵格式要求转换

from transformers import pipeline

import os

from tqdm import tqdm

import pandas as pd

from time import time

import json

def return_single_entity(name, word, start, end, id, attributes=[]):

entity =

entity['type'] = 'T'

entity['name'] = name

entity['value'] = word

entity['start'] = int(start)

entity['end'] = int(end)

entity['attributes'] = attributes

entity['id'] = int(id)

return entity

input_dir = 'E:/datasets/myUIE/inputs'

output_dir = 'E:/datasets/myUIE/outputs'

tagger = pipeline(task='ner', model='xlm-roberta-large-finetuned-conll03-english', aggregation_strategy='simple')

keywords = 'PER': '人', 'ORG': '机构'

for filename in tqdm(os.listdir(input_dir)):

# 读取数据并自动打标

with open(f'input_dir/filename', 'r', encoding='utf8') as f:

text = f.read()

named_ents = tagger(text)

df = pd.DataFrame(named_ents)

# 转化为精灵标注助手导入格式(但是精灵标注助手的nlp标注模块有编码的问题,部分utf8字符不能正常显示,会影响标注结果)

id = 1

entity_list = ['']

for index, elem in df.iterrows():

if not elem.entity_group in keywords:

continue

entity = return_single_entity(keywords[elem.entity_group], elem.word, elem.start, elem.end, id)

id += 1

entity_list.append(entity)

python_obj = 'path': f'input_dir/filename',

'outputs': 'annotation': 'T': entity_list, "E": [""], "R": [""], "A": [""],

'time_labeled': int(1000 * time()), 'labeled': True, 'content': text

data = json.dumps(python_obj)

with open(f'output_dir/filename.rstrip(".txt").json', 'w', encoding='utf8') as f:

f.write(data)

print('done!')

以上是关于基于 hugging face 预训练模型的实体识别智能标注方案:生成doccano要求json格式的主要内容,如果未能解决你的问题,请参考以下文章