i.MX6ULL Driver Development | 17 - The Linux Interrupt Mechanism and How to Use It (tasklet, workqueue, softirq)

Posted by Mculover666


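The Linux kernel provides a complete interrupt framework. To use it, a driver only needs to request the interrupt and register a handler function for it.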

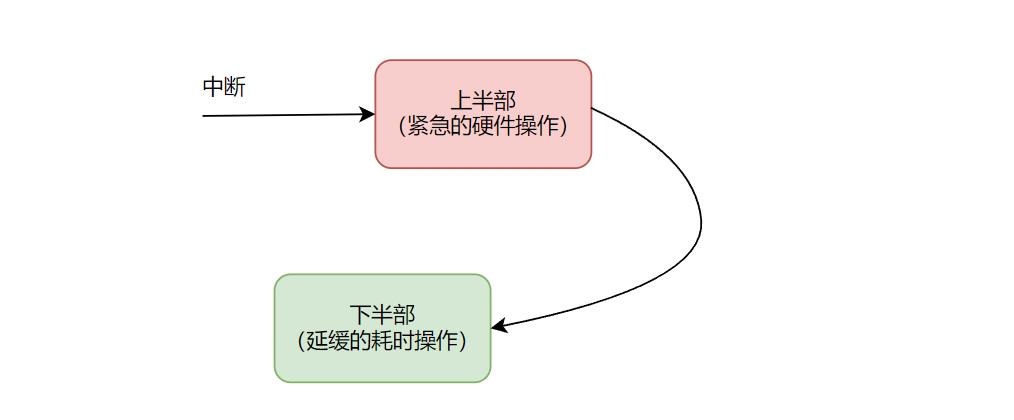

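I. Linux Interrupt Handler Architecture

Interrupt handling has two competing goals: the time spent in the interrupt should be as short as possible, yet the amount of work done should be as large as possible. To balance them, Linux splits interrupt handling into two halves: the top half and the bottom half.

The top half does only the small amount of work that is urgent. It usually just reads the interrupt status from a register, clears the interrupt flag, and then "registers" the interrupt, which means queuing the bottom-half handler on the device's bottom-half execution queue. Because the top half finishes quickly, the system can service more interrupt requests.

From then on, the bulk of the work falls to the bottom half, which completes most of the tasks the interrupt event requires. The bottom half does almost everything the interrupt handler has to do, and it can be interrupted by new interrupts. This is the biggest difference from the top half, which is usually designed to be non-interruptible. The bottom half is less urgent and relatively time-consuming, and it does not run inside the hardware interrupt service routine.

Although splitting the work into a top half and a bottom half improves system responsiveness, not every interrupt needs both. If the interrupt has very little work to do, all of it can be done in the top half.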

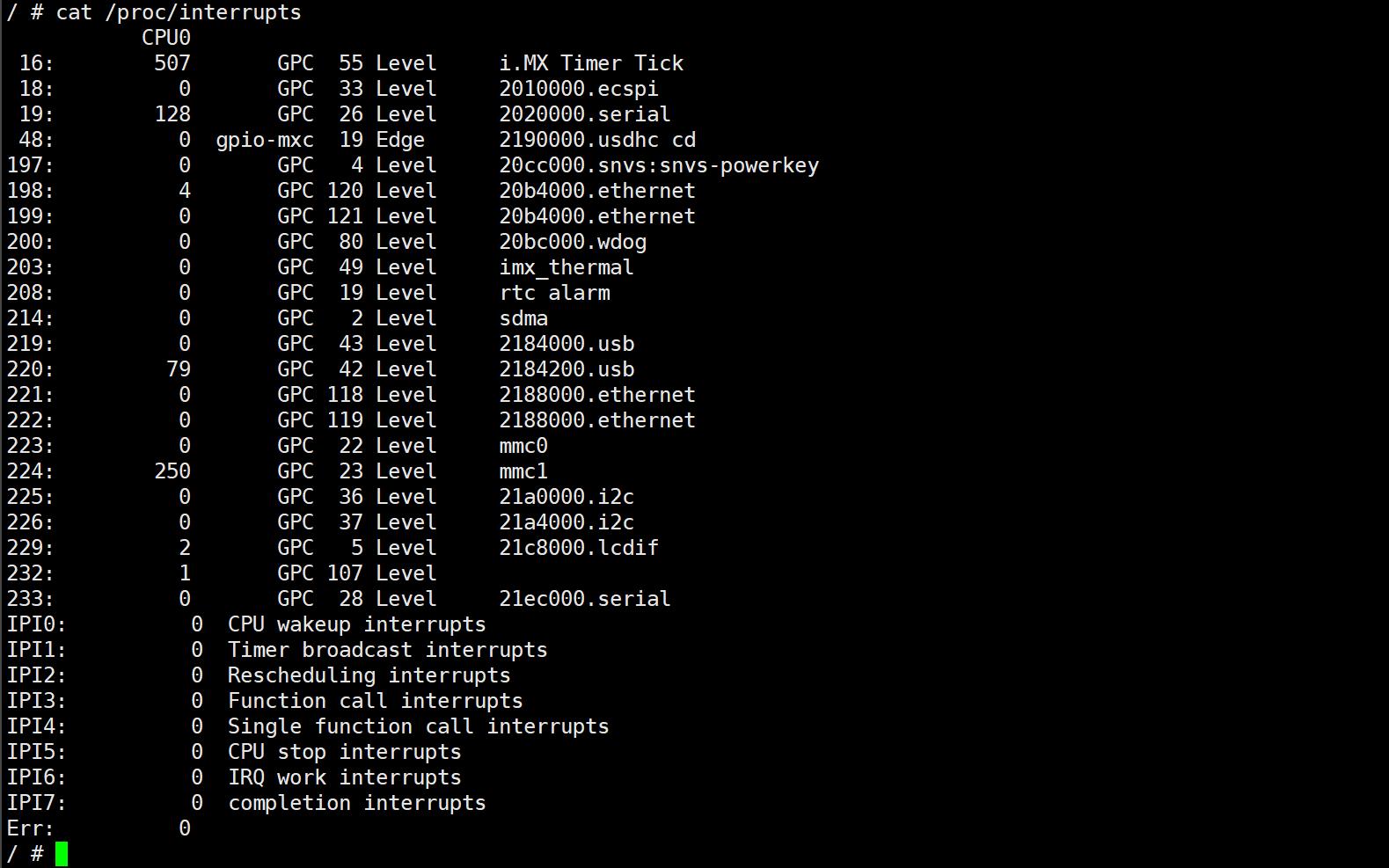

In Linux, the file /proc/interrupts provides interrupt statistics for the system, including how many times each interrupt number has fired on each CPU.
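To make the per-CPU counters concrete, here is a small user-space sketch that adds up the counters of one row. It assumes the usual row layout (a label followed by a colon, one counter per CPU, then the controller and device names). The function name irq_row_total and the sample row are made up for illustration.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/*
 * Sum the per-CPU counters of one /proc/interrupts row.
 * ncpus is the number of CPU columns in the header line.
 * Returns -1 if the row has no "label:" prefix.
 */
long long irq_row_total(const char *row, int ncpus)
{
    const char *p = strchr(row, ':');
    long long total = 0;

    if (!p)
        return -1;
    p++;                              /* skip the ':' after the IRQ label */

    for (int cpu = 0; cpu < ncpus; cpu++) {
        char *end;
        long long n = strtoll(p, &end, 10);

        if (end == p)                 /* no more numeric columns */
            break;
        total += n;
        p = end;
    }
    return total;
}

void irq_row_total_example(void)
{
    /* Made-up row in the assumed "label: counters... names" layout. */
    const char *row = " 29:       1200         34       GPC  55 Level     i2c";

    assert(irq_row_total(row, 2) == 1234);
}
```

On a single-core part such as the i.MX6ULL there is only one counter column, so ncpus would be 1.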

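II. Linux Interrupt Programming

1. Requesting and freeing interrupts

In a Linux device driver, a device that uses an interrupt must request it and later free it, using the kernel functions request_irq and free_irq respectively.

Both are declared in the kernel header include/linux/interrupt.h.

1.1. Requesting an IRQ

The API prototype is: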

static inline int __must_check
request_irq(unsigned int irq, irq_handler_t handler, unsigned long flags,
	    const char *name, void *dev)
{
	return request_threaded_irq(irq, handler, NULL, flags, name, dev);
}

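The parameters are:

- irq: the interrupt number to request

- handler: the interrupt handler (top half) registered with the system; it is called back when the interrupt occurs, and the dev argument is passed to it

- flags: interrupt attributes, which specify how the interrupt is triggered and how it is handled

- name: the interrupt name

- dev: private data passed to the interrupt handler, usually the device's own device structure, or NULL (it must not be NULL for a shared interrupt)

The function returns 0 on success and a negative value on failure: -EINVAL means the interrupt number is invalid or the handler pointer is NULL, and -EBUSY means the interrupt is already in use and cannot be shared.

For the flags parameter, the values that select the trigger type are: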

/*

* These correspond to the IORESOURCE_IRQ_* defines in

* linux/ioport.h to select the interrupt line behaviour. When

* requesting an interrupt without specifying a IRQF_TRIGGER, the

* setting should be assumed to be "as already configured", which

* may be as per machine or firmware initialisation.

*/

#define IRQF_TRIGGER_NONE 0x00000000

#define IRQF_TRIGGER_RISING 0x00000001

#define IRQF_TRIGGER_FALLING 0x00000002

#define IRQF_TRIGGER_HIGH 0x00000004

#define IRQF_TRIGGER_LOW 0x00000008

#define IRQF_TRIGGER_MASK	(IRQF_TRIGGER_HIGH | IRQF_TRIGGER_LOW | \
				 IRQF_TRIGGER_RISING | IRQF_TRIGGER_FALLING)

#define IRQF_TRIGGER_PROBE 0x00000010

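- IRQF_TRIGGER_NONE: no trigger type specified; the line keeps whatever configuration it already has

- IRQF_TRIGGER_RISING: rising-edge triggered

- IRQF_TRIGGER_FALLING: falling-edge triggered

- IRQF_TRIGGER_HIGH: high-level triggered

- IRQF_TRIGGER_LOW: low-level triggered

For the flags parameter, the value that selects how the interrupt is handled is:

#define IRQF_SHARED 0x00000080

- IRQF_SHARED: several devices share the interrupt line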
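Because each flag is a separate bit, a driver combines them with bitwise OR. The sketch below is plain user-space C that copies the values quoted above; the helpers irq_trigger_bits and irq_is_shared are hypothetical names used only to show how the bits combine and how IRQF_TRIGGER_MASK isolates the trigger type.

```c
#include <assert.h>

/* Values copied from the definitions quoted above. */
#define IRQF_TRIGGER_RISING  0x00000001
#define IRQF_TRIGGER_FALLING 0x00000002
#define IRQF_TRIGGER_HIGH    0x00000004
#define IRQF_TRIGGER_LOW     0x00000008
#define IRQF_TRIGGER_MASK    (IRQF_TRIGGER_HIGH | IRQF_TRIGGER_LOW | \
                              IRQF_TRIGGER_RISING | IRQF_TRIGGER_FALLING)
#define IRQF_SHARED          0x00000080

/* Hypothetical helper: keep only the trigger-type bits of a flags word. */
unsigned long irq_trigger_bits(unsigned long flags)
{
    return flags & IRQF_TRIGGER_MASK;
}

/* Hypothetical helper: non-zero if the caller asked for a shared line. */
int irq_is_shared(unsigned long flags)
{
    return (flags & IRQF_SHARED) != 0;
}

void irq_flags_example(void)
{
    /* Both edges on a shared line, e.g. a key that reports press and release. */
    unsigned long flags = IRQF_TRIGGER_RISING | IRQF_TRIGGER_FALLING | IRQF_SHARED;

    assert(irq_trigger_bits(flags) == 0x3);
    assert(irq_is_shared(flags));
}
```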

1.2. Freeing an IRQ

An interrupt obtained with request_irq must be released with free_irq once it is no longer needed. The prototype is:

/**

* free_irq - free an interrupt allocated with request_irq

* @irq: Interrupt line to free

* @dev_id: Device identity to free

*

* Remove an interrupt handler. The handler is removed and if the

* interrupt line is no longer in use by any driver it is disabled.

* On a shared IRQ the caller must ensure the interrupt is disabled

* on the card it drives before calling this function. The function

* does not return until any executing interrupts for this IRQ

* have completed.

*

* This function must not be called from interrupt context.

*/

void free_irq(unsigned int irq, void *dev_id);

If the interrupt is not shared, free_irq removes the handler and disables the interrupt. A shared interrupt is disabled only when its last handler is freed.

1.3. Requesting an IRQ without an explicit free

static inline int __must_check
devm_request_irq(struct device *dev, unsigned int irq, irq_handler_t handler,
		 unsigned long irqflags, const char *devname, void *dev_id)
{
	return devm_request_threaded_irq(dev, irq, handler, NULL, irqflags,
					 devname, dev_id);
}

APIs whose names start with devm_ request kernel "managed" resources, so there is usually no need to call free_irq explicitly in error paths or in the remove callback.

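1.4. The interrupt handler

An interrupt handler has the following type:

typedef irqreturn_t (*irq_handler_t)(int, void *);

The first argument is the interrupt number the handler is serving. The second is a void * pointer that is the same value passed as the dev argument of request_irq; it is what distinguishes the devices on a shared interrupt.

The return value is defined as: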

/**

* enum irqreturn

* @IRQ_NONE interrupt was not from this device

* @IRQ_HANDLED interrupt was handled by this device

* @IRQ_WAKE_THREAD handler requests to wake the handler thread

*/

enum irqreturn {
	IRQ_NONE		= (0 << 0),
	IRQ_HANDLED		= (1 << 0),
	IRQ_WAKE_THREAD		= (1 << 1),
};

typedef enum irqreturn irqreturn_t;

#define IRQ_RETVAL(x) ((x) ? IRQ_HANDLED : IRQ_NONE)
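The interplay of dev_id, IRQ_NONE and IRQ_HANDLED on a shared line is easier to see in a toy model. The following is a user-space sketch, not kernel code: struct irq_line, the model_* functions and struct demo_dev are all hypothetical stand-ins. Each handler checks whether its own device raised the interrupt, and the line is disabled only once the last handler has been freed.

```c
#include <assert.h>
#include <stddef.h>

typedef enum { IRQ_NONE = 0, IRQ_HANDLED = 1 } irqreturn_t;
typedef irqreturn_t (*irq_handler_t)(int irq, void *dev_id);

#define MAX_ACTIONS 4

struct irq_action { irq_handler_t handler; void *dev_id; };

/* User-space stand-in for one shared interrupt line. */
struct irq_line {
    struct irq_action actions[MAX_ACTIONS];
    int nr_actions;
    int enabled;
};

int model_request_irq(struct irq_line *line, irq_handler_t h, void *dev_id)
{
    if (!h || line->nr_actions == MAX_ACTIONS)
        return -1;
    line->actions[line->nr_actions].handler = h;
    line->actions[line->nr_actions].dev_id = dev_id;
    line->nr_actions++;
    line->enabled = 1;
    return 0;
}

/* Remove the action whose dev_id matches; disable the line when none is left. */
void model_free_irq(struct irq_line *line, void *dev_id)
{
    for (int i = 0; i < line->nr_actions; i++) {
        if (line->actions[i].dev_id != dev_id)
            continue;
        line->actions[i] = line->actions[--line->nr_actions];
        break;
    }
    if (line->nr_actions == 0)
        line->enabled = 0;
}

/* Call every handler on the line; return how many claimed the interrupt. */
int model_dispatch(struct irq_line *line, int irq)
{
    int handled = 0;

    if (!line->enabled)
        return 0;
    for (int i = 0; i < line->nr_actions; i++)
        if (line->actions[i].handler(irq, line->actions[i].dev_id) == IRQ_HANDLED)
            handled++;
    return handled;
}

/* Hypothetical device: "pending" stands in for its interrupt status register. */
struct demo_dev { int pending; int serviced; };

irqreturn_t demo_handler(int irq, void *dev_id)
{
    struct demo_dev *dev = dev_id;

    (void)irq;
    if (!dev->pending)
        return IRQ_NONE;          /* not ours: let the other handlers look */
    dev->pending = 0;
    dev->serviced++;
    return IRQ_HANDLED;
}

void shared_irq_example(void)
{
    struct irq_line line = {0};
    struct demo_dev a = {0}, b = {0};

    model_request_irq(&line, demo_handler, &a);
    model_request_irq(&line, demo_handler, &b);
    b.pending = 1;                          /* only device b raised the line */
    assert(model_dispatch(&line, 42) == 1);
    assert(a.serviced == 0 && b.serviced == 1);
}
```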

2. Enabling and masking interrupts

2.1. Enabling an interrupt source

/**

* enable_irq - enable handling of an irq

* @irq: Interrupt to enable

*

* Undoes the effect of one call to disable_irq(). If this

* matches the last disable, processing of interrupts on this

* IRQ line is re-enabled.

*

* This function may be called from IRQ context only when

* desc->irq_data.chip->bus_lock and desc->chip->bus_sync_unlock are NULL !

*/

void enable_irq(unsigned int irq);

2.2. Masking an interrupt source

(1) Wait for the handler that is currently running to finish

/**

* disable_irq - disable an irq and wait for completion

* @irq: Interrupt to disable

*

* Disable the selected interrupt line. Enables and Disables are

* nested.

* This function waits for any pending IRQ handlers for this interrupt

* to complete before returning. If you use this function while

* holding a resource the IRQ handler may need you will deadlock.

*

* This function may be called - with care - from IRQ context.

*/

void disable_irq(unsigned int irq);

(2) Return immediately

/**

* disable_irq_nosync - disable an irq without waiting

* @irq: Interrupt to disable

*

* Disable the selected interrupt line. Disables and Enables are

* nested.

* Unlike disable_irq(), this function does not ensure existing

* instances of the IRQ handler have completed before returning.

*

* This function may be called from IRQ context.

*/

void disable_irq_nosync(unsigned int irq);

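Because disable_irq waits until the handler for the given interrupt has finished, calling disable_irq(n) from the top half of interrupt n deadlocks the system. In that situation only disable_irq_nosync can be used.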
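The kernel comments quoted above say that enables and disables are nested. A minimal user-space sketch of that counting rule follows; struct irq_state and the model_* names are hypothetical, and the model silently ignores an unbalanced enable.

```c
#include <assert.h>

/* User-space stand-in for the per-IRQ disable depth; 0 means enabled. */
struct irq_state { unsigned int depth; };

void model_disable_irq(struct irq_state *s)
{
    s->depth++;
}

void model_enable_irq(struct irq_state *s)
{
    if (s->depth > 0)       /* an unbalanced enable is ignored in this model */
        s->depth--;
}

int model_irq_enabled(const struct irq_state *s)
{
    return s->depth == 0;
}

void nested_disable_example(void)
{
    struct irq_state s = { 0 };

    model_disable_irq(&s);              /* e.g. the driver's suspend path */
    model_disable_irq(&s);              /* e.g. a helper it calls */
    model_enable_irq(&s);
    assert(!model_irq_enabled(&s));     /* one disable is still outstanding */
    model_enable_irq(&s);
    assert(model_irq_enabled(&s));      /* the last enable re-enables the line */
}
```

The practical consequence is that every disable_irq or disable_irq_nosync call in a driver needs exactly one matching enable_irq.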

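2.3. Turning the whole interrupt system on and off

(1) Mask all interrupts on the current CPU:

local_irq_disable()
local_irq_save(flags)

local_irq_disable masks interrupts directly, while local_irq_save masks them and also saves the current interrupt state in flags.

(2) Restore interrupts on the current CPU:

local_irq_enable()
local_irq_restore(flags)

Likewise, local_irq_enable unmasks interrupts, while local_irq_restore puts back the state saved in flags.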
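The difference between the two pairs matters when a function can be called with interrupts already masked. The sketch below models the CPU interrupt state with a plain variable in user space; all model_* and helper_* names are hypothetical, and the save function takes a pointer because it is a function here rather than a macro.

```c
#include <assert.h>

/* Stand-in for the CPU interrupt-enable state: 1 = enabled, 0 = masked. */
static int cpu_irqs_on = 1;

void model_local_irq_disable(void) { cpu_irqs_on = 0; }
void model_local_irq_enable(void)  { cpu_irqs_on = 1; }
int  model_irqs_enabled(void)      { return cpu_irqs_on; }

/* Save the current state in *flags, then mask interrupts. */
void model_local_irq_save(unsigned long *flags)
{
    *flags = (unsigned long)cpu_irqs_on;
    cpu_irqs_on = 0;
}

/* Put back exactly the state that was saved. */
void model_local_irq_restore(unsigned long flags)
{
    cpu_irqs_on = (int)flags;
}

/* Safe whether the caller had interrupts on or off. */
void helper_with_save(void)
{
    unsigned long flags;

    model_local_irq_save(&flags);
    /* ... critical section ... */
    model_local_irq_restore(flags);
}

/* Only correct if the caller is known to have interrupts on. */
void helper_with_enable(void)
{
    model_local_irq_disable();
    /* ... critical section ... */
    model_local_irq_enable();
}

void irq_save_example(void)
{
    model_local_irq_disable();          /* caller already masked interrupts */
    helper_with_save();
    assert(!model_irqs_enabled());      /* caller's state is preserved */
    helper_with_enable();
    assert(model_irqs_enabled());       /* interrupts were turned back on early */
}
```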

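III. Bottom-Half Mechanisms

Linux implements bottom halves mainly with tasklets, workqueues, softirqs, and threaded IRQs.

1. tasklet

Tasklets are simple to use. They run in softirq context, usually right when the top half returns.

They are defined in the kernel header <linux/interrupt.h>.

1.1. Basic concepts

The Linux kernel represents a tasklet with struct tasklet_struct: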

/* Tasklets --- multithreaded analogue of BHs.

Main feature differing them of generic softirqs: tasklet

is running only on one CPU simultaneously.

Main feature differing them of BHs: different tasklets

may be run simultaneously on different CPUs.

*/

struct tasklet_struct
{
	struct tasklet_struct *next;
	unsigned long state;
	atomic_t count;
	void (*func)(unsigned long);
	unsigned long data;
};

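1.2. Usage

To use a tasklet, define the tasklet and its handler function and bind the two together.

(1) Handler function

void (*func)(unsigned long);

(2) Defining the tasklet and binding the handler

You can define a variable of type struct tasklet_struct and initialize it with tasklet_init:

void tasklet_init(struct tasklet_struct *t,
		  void (*func)(unsigned long), unsigned long data);

The parameters are:

- t: pointer to the tasklet

- func: the tasklet's handler function

- data: the argument passed to func

Alternatively, the DECLARE_TASKLET macro defines and initializes a tasklet in one step: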

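#define DECLARE_TASKLET(name, func, data) \
struct tasklet_struct name = { NULL, 0, ATOMIC_INIT(0), func, data }

(3) Scheduling

In the top-half interrupt handler, calling the following API makes the tasklet run at a suitable later time:

void tasklet_schedule(struct tasklet_struct *t);

tasklet_schedule is an inline wrapper that calls __tasklet_schedule only when the tasklet is not already scheduled.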
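The define, bind, schedule sequence can be tried out with a user-space model. This is a sketch under stated assumptions, not the kernel implementation: struct model_tasklet and the model_* functions are hypothetical, the scheduled field stands in for the kernel's "already scheduled" state bit, and model_run_tasklets plays the role of the softirq pass that runs after the top half returns.

```c
#include <assert.h>
#include <stddef.h>

struct model_tasklet {
    struct model_tasklet *next;
    int scheduled;                      /* stands in for the "scheduled" state bit */
    void (*func)(unsigned long);
    unsigned long data;
};

static struct model_tasklet *pending_head;
static struct model_tasklet **pending_tail = &pending_head;

void model_tasklet_init(struct model_tasklet *t,
                        void (*func)(unsigned long), unsigned long data)
{
    t->next = NULL;
    t->scheduled = 0;
    t->func = func;
    t->data = data;
}

/* Queue the tasklet unless it is already waiting to run. */
void model_tasklet_schedule(struct model_tasklet *t)
{
    if (t->scheduled)
        return;
    t->scheduled = 1;
    t->next = NULL;
    *pending_tail = t;
    pending_tail = &t->next;
}

/* Run everything queued so far, in the order it was scheduled. */
void model_run_tasklets(void)
{
    struct model_tasklet *list = pending_head;

    pending_head = NULL;
    pending_tail = &pending_head;
    while (list) {
        struct model_tasklet *t = list;

        list = list->next;
        t->scheduled = 0;               /* may be scheduled again from func */
        t->func(t->data);
    }
}

/* Hypothetical bottom half: accumulates the data argument it receives. */
static unsigned long demo_total;

void demo_bottom_half(unsigned long data)
{
    demo_total += data;
}

void tasklet_example(void)
{
    struct model_tasklet t;

    demo_total = 0;
    model_tasklet_init(&t, demo_bottom_half, 5);
    model_tasklet_schedule(&t);         /* top half fires twice ... */
    model_tasklet_schedule(&t);
    assert(demo_total == 0);            /* ... but nothing runs in the top half */
    model_run_tasklets();
    assert(demo_total == 5);            /* and the bottom half runs once */
}
```

One consequence worth noting: if the interrupt fires several times before the tasklet runs, the handler runs once, so it should drain all pending device work rather than assume one run per interrupt.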

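2. Workqueue

Workqueues are used in much the same way as tasklets, but a work item runs in kernel-thread context, so it can be scheduled and can sleep.

They are defined in the kernel header <linux/workqueue.h>.

2.1. Basic concepts

(1) Work

The Linux kernel represents a work item with struct work_struct: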

struct work_struct {
	atomic_long_t data;
	struct list_head entry;
	work_func_t func;
#ifdef CONFIG_LOCKDEP
	struct lockdep_map lockdep_map;
#endif
};

(2) Workqueue

Work items are placed on a workqueue, which is represented by struct workqueue_struct (partly abridged):

/*

* The externally visible workqueue. It relays the issued work items to

* the appropriate worker_pool through its pool_workqueues.

*/

struct workqueue_struct {
	struct list_head	pwqs;		/* WR: all pwqs of this wq */
	struct list_head	list;		/* PR: list of all workqueues */

	struct mutex		mutex;		/* protects this wq */
	int			work_color;	/* WQ: current work color */
	int			flush_color;	/* WQ: current flush color */
	atomic_t		nr_pwqs_to_flush; /* flush in progress */
	struct wq_flusher	*first_flusher;	/* WQ: first flusher */
	struct list_head	flusher_queue;	/* WQ: flush waiters */
	struct list_head	flusher_overflow; /* WQ: flush overflow list */

	struct list_head	maydays;	/* MD: pwqs requesting rescue */
	struct worker		*rescuer;	/* I: rescue worker */

	int			nr_drainers;	/* WQ: drain in progress */
	int			saved_max_active; /* WQ: saved pwq max_active */

	struct workqueue_attrs	*unbound_attrs;	/* WQ: only for unbound wqs */
	struct pool_workqueue	*dfl_pwq;	/* WQ: only for unbound wqs */

#ifdef CONFIG_SYSFS
	struct wq_device	*wq_dev;	/* I: for sysfs interface */
#endif
#ifdef CONFIG_LOCKDEP
	struct lockdep_map	lockdep_map;
#endif
	char			name[WQ_NAME_LEN]; /* I: workqueue name */

	/*
	 * Destruction of workqueue_struct is sched-RCU protected to allow
	 * walking the workqueues list without grabbing wq_pool_mutex.
	 * This is used to dump all workqueues from sysrq.
	 */
	struct rcu_head		rcu;

	/* hot fields used during command issue, aligned to cacheline */
	unsigned int		flags ____cacheline_aligned; /* WQ: WQ_* flags */
	struct pool_workqueue __percpu *cpu_pwqs; /* I: per-cpu pwqs */
	struct pool_workqueue __rcu *numa_pwq_tbl[]; /* FR: unbound pwqs indexed by node */
};

(3) Worker

The kernel processes the work items on a workqueue with worker threads. Each worker is represented by struct worker:

/*

* The poor guys doing the actual heavy lifting. All on-duty workers are

* either serving the manager role, on idle list or on busy hash. For

* details on the locking annotation (L, I, X...), refer to workqueue.c.

*

* Only to be used in workqueue and async.

*/

struct worker {
	/* on idle list while idle, on busy hash table while busy */
	union {
		struct list_head	entry;	/* L: while idle */
		struct hlist_node	hentry;	/* L: while busy */
	};

	struct work_struct	*current_work;	/* L: work being processed */
	work_func_t		current_func;	/* L: current_work's fn */
	struct pool_workqueue	*current_pwq;	/* L: current_work's pwq */
	bool			desc_valid;	/* ->desc is valid */
	struct list_head	scheduled;	/* L: scheduled works */

	/* 64 bytes boundary on 64bit, 32 on 32bit */

	struct task_struct	*task;		/* I: worker task */
	struct worker_pool	*pool;		/* I: the associated pool */
						/* L: for rescuers */
	struct list_head	node;		/* A: anchored at pool->workers */
						/* A: runs through worker->node */

	unsigned long		last_active;	/* L: last active timestamp */
	unsigned int		flags;		/* X: flags */
	int			id;		/* I: worker id */

	/*
	 * Opaque string set with work_set_desc().  Printed out with task
	 * dump for debugging - WARN, BUG, panic or sysrq.
	 */
	char			desc[WORKER_DESC_LEN];

	/* used only by rescuers to point to the target workqueue */
	struct workqueue_struct	*rescue_wq;	/* I: the workqueue to rescue */
};

(4) Worker thread

Each worker belongs to a worker pool, and its worker thread function processes the work items queued on that pool:

/**

* worker_thread - the worker thread function

* @__worker: self

*

* The worker thread function. All workers belong to a worker_pool -

* either a per-cpu one or dynamic unbound one. These workers process all

* work items regardless of their specific target workqueue. The only

* exception is work items which belong to workqueues with a rescuer which

* will be explained in rescuer_thread().

*

* Return: 0

*/

static int worker_thread(void *__worker);

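2.2. Usage

To use a workqueue you only need to define the work item (work_struct); there is no need to deal with the workqueue or the worker threads directly.

(1) The handler function for the work item

typedef void (*work_func_t)(struct work_struct *work);

(2) Defining the work item

First define a variable of type struct work_struct, then initialize it with the INIT_WORK macro: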

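#define INIT_WORK(_work, _func) \
	__INIT_WORK((_work), (_func), 0)

_work is the work item to initialize, and _func is its handler function.

Alternatively, the DECLARE_WORK macro creates and initializes a work item in one step:

#define DECLARE_WORK(n, f) \
	struct work_struct n = __WORK_INITIALIZER(n, f)

(3) Scheduling

In the top-half interrupt handler, calling the following API makes the work item run at a suitable later time: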

/**

* schedule_work - put work task in global workqueue

* @work: job to be done

*

* Returns %false if @work was already on the kernel-global workqueue and

* %true otherwise.

*

* This puts a job in the kernel-global workqueue if it was not already

* queued and leaves it in the same position on the kernel-global

* workqueue otherwise.

*/

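static inline bool schedule_work(struct work_struct *work)
{
	return queue_work(system_wq, work);
}

The work parameter is the work item to schedule. As the comment above states, the function returns true if the work item was queued and false if it was already on the kernel-global workqueue.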
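Since the handler receives only a struct work_struct pointer, a common pattern is to embed the work item in the driver's own device structure and recover the device with container_of. The sketch below reproduces that pattern in user space with simplified definitions; struct key_dev, key_work_handler and model_run_work are hypothetical names, and the container_of macro here is a reduced version without the kernel's type checking.

```c
#include <assert.h>
#include <stddef.h>

/* Simplified user-space versions of the kernel definitions. */
struct work_struct;
typedef void (*work_func_t)(struct work_struct *work);
struct work_struct { work_func_t func; };

#define container_of(ptr, type, member) \
    ((type *)((char *)(ptr) - offsetof(type, member)))

/* Hypothetical driver state with an embedded work item. */
struct key_dev {
    int irq_count;          /* bumped by the top half */
    int events_handled;     /* updated by the bottom half */
    struct work_struct work;
};

/* Bottom half: recover the device from the work pointer it was given. */
void key_work_handler(struct work_struct *work)
{
    struct key_dev *dev = container_of(work, struct key_dev, work);

    dev->events_handled = dev->irq_count;
}

/* Stand-in for what a worker thread does with a queued item. */
void model_run_work(struct work_struct *work)
{
    work->func(work);
}

void work_example(void)
{
    struct key_dev dev = { .irq_count = 3, .events_handled = 0,
                           .work = { key_work_handler } };

    model_run_work(&dev.work);
    assert(dev.events_handled == 3);
}
```

In a real driver the top half would call schedule_work(&dev->work) and the kernel's worker thread would invoke the handler; the container_of step inside the handler is the same.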

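3. Softirq

A softirq is a traditional bottom-half mechanism that usually runs when the top half returns. Tasklets are built on softirqs and also run in softirq context, so neither softirq handlers nor tasklet handlers may sleep.

It is defined in the kernel header <linux/interrupt.h>.

(1) The softirq

The Linux kernel represents a softirq with struct softirq_action: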

/* softirq mask and active fields moved to irq_cpustat_t in

* asm/hardirq.h to get better cache usage. KAO

*/

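struct softirq_action
{
	void	(*action)(struct softirq_action *);
};

(2) Registering the handler for a softirq

void open_softirq(int nr, void (*action)(struct softirq_action *))
{
	softirq_vec[nr].action = action;
}

Here nr is the softirq to open. The softirq_vec array is defined as:

static struct softirq_action softirq_vec[NR_SOFTIRQS] __cacheline_aligned_in_smp;

The index nr takes its values from the following enumeration, whose last entry, NR_SOFTIRQS, is the number of softirqs: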

/* PLEASE, avoid to allocate new softirqs, if you need not _really_ high

frequency threaded job scheduling. For almost all the purposes

tasklets are more than enough. F.e. all serial device BHs et

al. should be converted to tasklets, not to softirqs.

*/

enum
{
	HI_SOFTIRQ=0,
	TIMER_SOFTIRQ,
	NET_TX_SOFTIRQ,
	NET_RX_SOFTIRQ,
	BLOCK_SOFTIRQ,
	BLOCK_IOPOLL_SOFTIRQ,
	TASKLET_SOFTIRQ,
	SCHED_SOFTIRQ,
	HRTIMER_SOFTIRQ,
	RCU_SOFTIRQ,    /* Preferable RCU should always be the last softirq */

	NR_SOFTIRQS
};

(3) Raising a softirq

void raise_softirq(unsigned int nr);

nr is the softirq to raise.
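The open, raise, run cycle can be modeled in user space with a pending bitmask. This is a simplified sketch, not the kernel's code: the model_* functions and the record handler are hypothetical, the enumeration is copied from the listing above, and the assumption that pending softirqs are serviced from the lowest number up reflects my understanding of the kernel's loop.

```c
#include <assert.h>

enum {
    HI_SOFTIRQ = 0, TIMER_SOFTIRQ, NET_TX_SOFTIRQ, NET_RX_SOFTIRQ,
    BLOCK_SOFTIRQ, BLOCK_IOPOLL_SOFTIRQ, TASKLET_SOFTIRQ, SCHED_SOFTIRQ,
    HRTIMER_SOFTIRQ, RCU_SOFTIRQ,
    NR_SOFTIRQS
};

struct softirq_action { void (*action)(struct softirq_action *); };

static struct softirq_action softirq_vec[NR_SOFTIRQS];
static unsigned int pending;            /* one bit per softirq number */

void model_open_softirq(int nr, void (*action)(struct softirq_action *))
{
    softirq_vec[nr].action = action;
}

/* Raising only marks the softirq pending; raising twice sets the same bit. */
void model_raise_softirq(unsigned int nr)
{
    pending |= 1u << nr;
}

/* Run each pending softirq once, lowest number first; return how many ran. */
int model_do_softirq(void)
{
    unsigned int todo = pending;
    int ran = 0;

    pending = 0;
    for (int nr = 0; nr < NR_SOFTIRQS; nr++) {
        if (!(todo & (1u << nr)) || !softirq_vec[nr].action)
            continue;
        softirq_vec[nr].action(&softirq_vec[nr]);
        ran++;
    }
    return ran;
}

/* Hypothetical handler: records which softirq numbers ran, in order. */
static int trace[NR_SOFTIRQS];
static int trace_len;

void record(struct softirq_action *a)
{
    if (trace_len < NR_SOFTIRQS)
        trace[trace_len++] = (int)(a - softirq_vec);
}

void softirq_example(void)
{
    trace_len = 0;
    model_open_softirq(TASKLET_SOFTIRQ, record);
    model_open_softirq(HI_SOFTIRQ, record);
    model_raise_softirq(TASKLET_SOFTIRQ);
    model_raise_softirq(HI_SOFTIRQ);
    assert(model_do_softirq() == 2);
    assert(trace[0] == HI_SOFTIRQ && trace[1] == TASKLET_SOFTIRQ);
}
```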

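(4) Softirq initialization in the kernel

Softirqs must be registered statically at compile time. The kernel initializes them in the softirq_init function, defined in kernel/softirq.c: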

void __init softirq_init(void)
{
	int cpu;

	for_each_possible_cpu(cpu) {
		per_cpu(tasklet_vec, cpu).tail =
			&per_cpu(tasklet_vec, cpu).head;
		per_cpu(tasklet_hi_vec, cpu).tail =
			&per_cpu(tasklet_hi_vec, cpu).head;
	}

	open_softirq(TASKLET_SOFTIRQ, tasklet_action);
	open_softirq(HI_SOFTIRQ, tasklet_hi_action);
}

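As the code shows, the kernel opens the tasklet softirqs by default. Using softirqs directly is not recommended when writing drivers.

IV. threaded_irq

In addition to request_irq and devm_request_irq, the kernel also lets a driver request an interrupt with request_threaded_irq and devm_request_threaded_irq.

Direct request: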

/**

* request_threaded_irq - allocate an interrupt line

* @irq: Interrupt line to allocate

* @handler: Function to be called when the IRQ occurs.

* Primary handler for threaded interrupts

* If NULL and thread_fn != NULL the default

* primary handler is installed

* @thread_fn: Function called from the irq handler thread

* If NULL, no irq thread is created

* @irqflags: Interrupt type flags

* @devname: An ascii name for the claiming device

* @dev_id: A cookie passed back to the handler function

*/

int request_threaded_irq(unsigned int irq, irq_handler_t handler,

irq_handler_t thread_fn, unsigned long irqflags,

const char *devname, void *dev_id);

Request with automatic release:

/**

* devm_request_threaded_irq - allocate an interrupt line for a managed device

* @dev: device to request interrupt for

* @irq: Interrupt line to allocate

* @handler: Function to be called when the IRQ occurs

* @thread_fn: function to be called in a threaded interrupt context. NULL

* for devices which handle everything in @handler

* @irqflags: Interrupt type flags

* @devname: An ascii name for the claiming device

* @dev_id: A cookie passed back to the handler function

*/

int devm_request_threaded_irq(struct device *dev, unsigned int irq,

irq_handler_t handler, irq_handler_t thread_fn,

unsigned long irqflags, const char *devname,

void *dev_id);

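When an interrupt is requested through these two APIs with a non-NULL thread_fn, the kernel creates a kernel thread for that interrupt, and the thread serves only that interrupt.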
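The hand-off from the primary handler to thread_fn can be sketched in user space. Everything below is a hypothetical model: struct model_action and the model_* functions are illustration names, -1 stands in for the kernel's error code, and the thread is reduced to a function call. It follows the rules in the comments quoted above: a NULL handler gets a default primary handler, and the thread function runs only after the primary handler returns IRQ_WAKE_THREAD.

```c
#include <assert.h>
#include <stddef.h>

typedef enum {
    IRQ_NONE = 0,
    IRQ_HANDLED = 1 << 0,
    IRQ_WAKE_THREAD = 1 << 1
} irqreturn_t;
typedef irqreturn_t (*irq_handler_t)(int, void *);

struct model_action {
    irq_handler_t handler;      /* runs in hard-IRQ context */
    irq_handler_t thread_fn;    /* runs later in the IRQ thread */
    void *dev_id;
    int thread_woken;
};

/* Default primary handler: do nothing except ask for the thread. */
static irqreturn_t default_primary(int irq, void *dev_id)
{
    (void)irq;
    (void)dev_id;
    return IRQ_WAKE_THREAD;
}

int model_request_threaded_irq(struct model_action *a, irq_handler_t handler,
                               irq_handler_t thread_fn, void *dev_id)
{
    if (!handler && !thread_fn)
        return -1;              /* nothing to call at all */
    a->handler = handler ? handler : default_primary;
    a->thread_fn = thread_fn;
    a->dev_id = dev_id;
    a->thread_woken = 0;
    return 0;
}

/* Hard-IRQ part: run the primary handler and wake the thread if asked. */
irqreturn_t model_hardirq(struct model_action *a, int irq)
{
    irqreturn_t ret = a->handler(irq, a->dev_id);

    if (ret == IRQ_WAKE_THREAD && a->thread_fn)
        a->thread_woken = 1;
    return ret;
}

/* Thread part: returns 1 if thread_fn ran, 0 if there was nothing to do. */
int model_irq_thread(struct model_action *a, int irq)
{
    if (!a->thread_woken)
        return 0;
    a->thread_woken = 0;
    a->thread_fn(irq, a->dev_id);
    return 1;
}

/* Hypothetical thread function: dev_id points at an event counter. */
irqreturn_t demo_thread_fn(int irq, void *dev_id)
{
    (void)irq;
    (*(int *)dev_id)++;
    return IRQ_HANDLED;
}

void threaded_irq_example(void)
{
    int events = 0;
    struct model_action a;

    model_request_threaded_irq(&a, NULL, demo_thread_fn, &events);
    assert(model_hardirq(&a, 7) == IRQ_WAKE_THREAD);
    assert(events == 0);                /* hard-IRQ part did no real work */
    assert(model_irq_thread(&a, 7) == 1);
    assert(events == 1);                /* work happened in thread context */
}
```

Because the real thread_fn runs in a kernel thread, it may sleep, which is what makes this variant convenient for devices behind slow buses.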
