Performance Evaluation Metrics (Precision, Recall, F-score, MAP)


Reposted from: original CSDN blog post

1: Precision, Recall, F-score

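In information retrieval, classification, recognition, translation and related fields, the two most basic metrics are recall (Recall Rate) and precision (Precision Rate). Note that precision is not the same as accuracy: accuracy is the number of correctly classified samples divided by the total number of samples. (Chinese sources are inconsistent here: the original of this post uses 准确率 for precision, whereas the book 统计学习方法 uses 精确率 for precision and reserves 准确率 for accuracy.) Recall measures how complete the results are, and precision measures how exact they are. The definitions are:

Recall = relevant documents retrieved by the system / all relevant documents in the system; equivalently, the number of true positives divided by the number of all actual positive samples.

Precision = relevant documents retrieved by the system / all documents retrieved by the system; equivalently, the number of true positives divided by the number of all samples predicted as positive.

Notes: (1) Precision and recall affect each other. Ideally both are high, but in practice high precision usually comes with low recall, and high recall with low precision. If both are low, something has gone wrong somewhere.

(2) For search, the goal is to raise precision while guaranteeing recall; for disease monitoring and anti-spam, the goal is to raise recall while maintaining precision.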

So when both are required to be high, F1 (also called the F-score) can be used as the measure. It is computed as follows:

F1= 2 * P * R / (P + R)
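As a minimal sketch of the three formulas above, the function below computes precision, recall and F1 from raw counts. The function name, the argument names and the example counts are hypothetical, and returning 0.0 for an empty denominator is a convention chosen for this sketch rather than something the post specifies.

```python
from typing import Tuple


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Return (precision, recall, F1) from raw counts.

    tp: positives correctly predicted as positive
    fp: negatives wrongly predicted as positive
    fn: positives wrongly predicted as negative
    """
    # Empty denominators map to 0.0 (an assumption of this sketch).
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Hypothetical counts: 8 relevant documents retrieved, 2 irrelevant ones
# retrieved, 12 relevant ones missed.
p, r, f1 = precision_recall_f1(tp=8, fp=2, fn=12)
print(f"precision={p:.3f} recall={r:.3f} F1={f1:.3f}")
```

With these counts precision is 8/10 and recall is 8/20, so F1 sits between the two but closer to the lower value, which is the point of using a harmonic mean.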

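(1) That is essentially the formula, but how are the counts A, B, C and D in Figure 1 obtained? This requires manual annotation, which is time-consuming and tedious; for experiments alone, an existing corpus can be used. Another option is to take a fairly mature algorithm as the baseline and compare against its results as if they were the labeled samples. This has its own problem: if a very good algorithm already existed, there would be no need for further research.

(2) Intuitively, recall demands completeness: better to wrongly kill a thousand than to let one escape. That gives very high recall but very low precision. For example, if every sample is judged positive, recall equals 1, but many negative samples are treated as positives, which is unacceptable in some settings. In email filtering, what matters is precision rather than recall: treating every email as spam is clearly the worst outcome (even though recall = 1 in that case).

If you are judged guilty even when there is no evidence of guilt, recall will be very high; if you are judged innocent whenever there is no evidence of guilt, recall will be low and coverage incomplete, with many offenders going free.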
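The trade-off described above can be checked on a toy example. This is only an illustrative sketch: the helper name, the label encoding (1 = spam, 0 = legitimate mail) and the ten-message inbox are all made up.

```python
def precision_recall(y_true, y_pred):
    """Precision and recall for binary labels (1 = positive, 0 = negative)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical inbox of 10 messages, 2 of which are spam.
y_true = [1, 0, 0, 0, 1, 0, 0, 0, 0, 0]

# "Presume guilty": flag every message as spam.
flag_everything = [1] * len(y_true)
p_all, r_all = precision_recall(y_true, flag_everything)

# "Presume innocent": flag only the single most obvious message.
cautious = [1, 0, 0, 0, 0, 0, 0, 0, 0, 0]
p_c, r_c = precision_recall(y_true, cautious)

print("flag everything:", p_all, r_all)
print("cautious filter:", p_c, r_c)
```

Flagging everything reaches recall 1 only because nothing is ever rejected, and its precision collapses to the share of spam in the inbox; the cautious filter shows the opposite pattern.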

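2: MAP

MAP stands for mean average precision. mAP addresses the single-point limitation of P, R and F-measure, and it also takes the ranking of the retrieved results into account.

For example:

mAP is a metric for the accuracy of object detection algorithms. It involves two concepts: precision and recall. In an object detection task, precision and recall can be computed for each object class. Computed repeatedly (for example at different confidence thresholds), each class yields a P-R curve, and the area under that curve is the AP value. The "mean" refers to averaging the AP over all classes, which gives the mAP. mAP always lies in the interval [0, 1].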
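To make "area under the P-R curve" concrete, here is a sketch of one common way to compute AP (all-point interpolation, where precision is first replaced by its non-increasing envelope). It is an assumption that this matches any particular benchmark's definition; the function name and the two toy curves are hypothetical.

```python
import numpy as np


def average_precision(precision, recall):
    """Area under a P-R curve using all-point interpolation."""
    # Pad so the curve starts at recall 0 and ends at recall 1.
    precision = np.concatenate([[0.0], np.asarray(precision, dtype=float), [0.0]])
    recall = np.concatenate([[0.0], np.asarray(recall, dtype=float), [1.0]])
    # Walk right to left so precision never increases as recall grows.
    for i in range(len(precision) - 2, -1, -1):
        precision[i] = max(precision[i], precision[i + 1])
    # Sum rectangle areas at the points where recall actually changes.
    idx = np.where(recall[1:] != recall[:-1])[0] + 1
    return float(np.sum((recall[idx] - recall[idx - 1]) * precision[idx]))


# Hypothetical class A: detections ranked TP, FP, TP with 2 ground-truth objects.
ap_a = average_precision([1.0, 0.5, 2.0 / 3.0], [0.5, 0.5, 1.0])
# Hypothetical class B: detections ranked TP, TP with 2 ground-truth objects.
ap_b = average_precision([1.0, 1.0], [0.5, 1.0])

# mAP is the mean of the per-class AP values.
m_ap = float(np.mean([ap_a, ap_b]))
print(ap_a, ap_b, m_ap)
```

Because every AP is an area inside the unit square, each AP and therefore their mean stays within [0, 1].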

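mmaction2 Beginner Tutorial 02, Evaluation Metrics: per-action (behavior) counts, precision, recall, AP, mAP

0 Preface

This post looks at evaluation metrics in mmaction2, mainly the number of instances of each action (behavior) together with recall, precision, AP and mAP.

If these metrics are unfamiliar, see the articles "机器学习:mAP评价指标" (Machine Learning: the mAP Evaluation Metric) and "mAP (mean Average Precision) for Object Detection".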

Bilibili: https://www.bilibili.com/video/BV1a14y1475H/


1 Evaluation metrics in mmaction2

By default, the evaluation output in mmaction2 typically looks like the log below.

Run:

cd /home/MPCLST/mmaction2_YF/

python tools/test.py configs/detection/ava/my_slowfast_kinetics_pretrained_r50_4x16x1_20e_ava_rgb3.py ./work_dirs/22-8-7ava-lr0005/slowfast_kinetics_pretrained_r50_4x16x1_20e_ava_rgb/latest.pth --eval mAP

where

cd /home/MPCLST/mmaction2_YF/ is the mmaction2 path

my_slowfast_kinetics_pretrained_r50_4x16x1_20e_ava_rgb3.py is the config file

slowfast_kinetics_pretrained_r50_4x16x1_20e_ava_rgb/latest.pth is the trained checkpoint (from the last epoch)

--eval mAP selects mAP as the evaluation metric

[>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>] 77/77, 9.3 task/s, elapsed: 8s, ETA: 0s

2022-08-05 18:51:42,986 - mmaction - INFO - Evaluating mAP ...

INFO:mmaction:Evaluating mAP ...

==> 0.0539076 seconds to read file /home/MPCLST/Dataset/annotations/val.csv

==> 0.0548043 seconds to Reading detection results

==> 0.11351 seconds to read file AVA_20220805_185142_result.csv

==> 0.11438 seconds to Reading detection results

==> 0.0137618 seconds to Convert groundtruth

==> 0.678621 seconds to convert detections

==> 0.0614183 seconds to run_evaluator

mAP@0.5IOU= 0.1576104042635473

PerformanceByCategory/AP@0.5IOU/eye Invisible= 0.2180298892002125

PerformanceByCategory/AP@0.5IOU/lip ohter= nan

PerformanceByCategory/AP@0.5IOU/open mouth= 0.018103458579299812

PerformanceByCategory/AP@0.5IOU/close mouth= 0.4808178582451651

PerformanceByCategory/AP@0.5IOU/body Invisible= 0.0

PerformanceByCategory/AP@0.5IOU/body other= 0.0

PerformanceByCategory/AP@0.5IOU/body sit= 0.3023163122037017

PerformanceByCategory/AP@0.5IOU/body side Sit= 0.05629252504252504

PerformanceByCategory/AP@0.5IOU/body stand= 0.4428815974966386

PerformanceByCategory/AP@0.5IOU/body lying down= nan

PerformanceByCategory/AP@0.5IOU/body bend over= 0.011661807580174927

PerformanceByCategory/AP@0.5IOU/left arm flat= 0.025848791267662225

... (the remaining PerformanceByCategory/AP@0.5IOU/<category> lines are omitted here)

2022-08-05 18:51:43,917 - mmaction - INFO - mAP@0.5IOU 0.1576

INFO:mmaction:

mAP@0.5IOU 0.1576

2022-08-05 18:51:43,918 - mmaction - INFO - Epoch(val) [50][77] mAP@0.5IOU: 0.1576

INFO:mmaction:Epoch(val) [50][77] mAP@0.5IOU: 0.1576

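As shown, the output contains AP@0.5IOU for each action (behavior) class, but no precision or recall.

In fact mmaction2 does compute precision and recall; it simply does not print them. This post shows how to output them.

2 Dissecting the evaluation metrics

2.1 Default evaluation metrics

Where is the key code?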

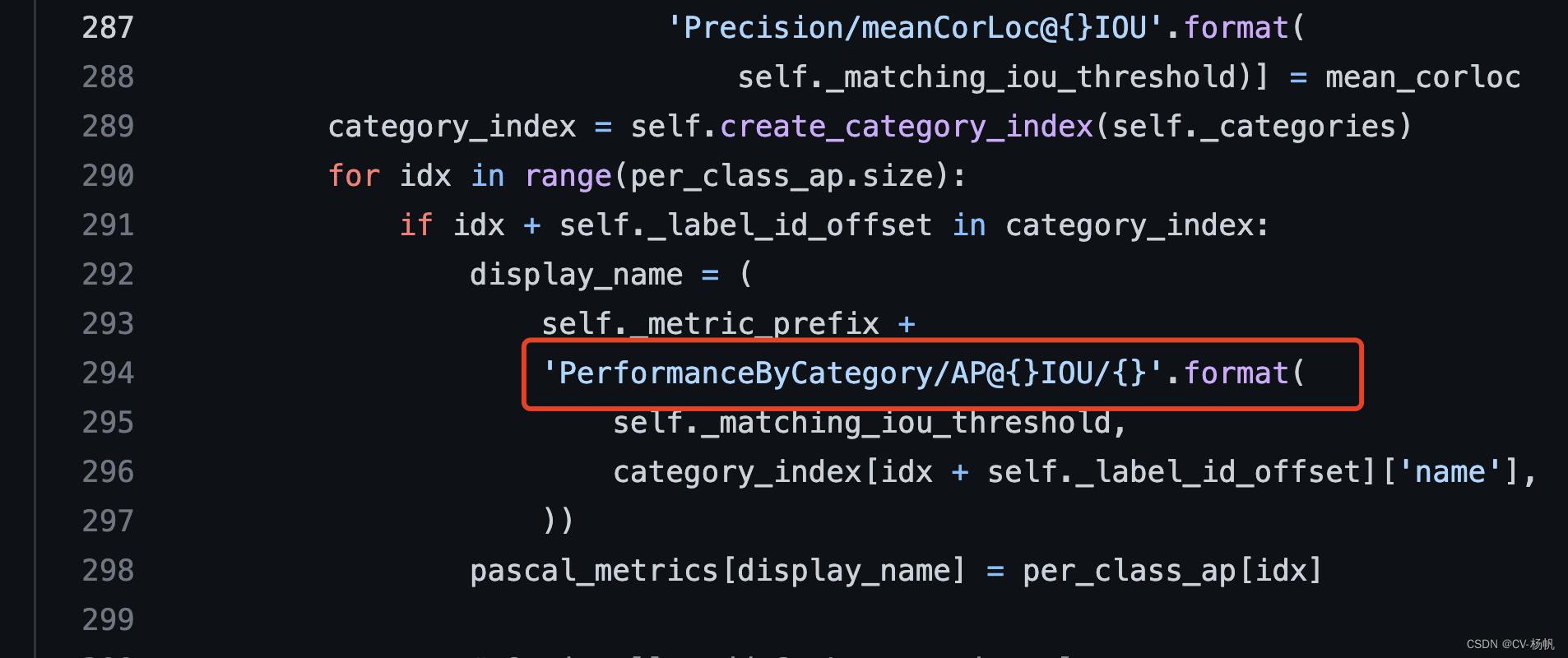

First, let's find where PerformanceByCategory/AP@0.5IOU/left arm flat= 0.025848791267662225 is printed.

Start with object_detection_evaluation.py:

https://github.com/open-mmlab/mmaction2/blob/master/mmaction/core/evaluation/ava_evaluation/object_detection_evaluation.py

    for idx in range(per_class_ap.size):
        if idx + self._label_id_offset in category_index:
            display_name = (
                self._metric_prefix +
                # Placeholders: IoU threshold first, then the category name
                'PerformanceByCategory/AP@{}IOU/{}'.format(
                    self._matching_iou_threshold,
                    category_index[idx + self._label_id_offset]['name'],
                ))
            pascal_metrics[display_name] = per_class_ap[idx]

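As shown, pascal_metrics stores the score of each action (behavior), and the output key format is defined here too.

Where does it get printed? In other words, where does execution go after this point?

The next stop is ava_utils.py: https://github.com/open-mmlab/mmaction2/blob/master/mmaction/core/evaluation/ava_utils.py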

    for display_name in metrics:
        # One line per metric: "<name>=<TAB><value>"
        print(f'{display_name}=\t{metrics[display_name]}')

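As you can see, this step does the printing.

2.2 Other evaluation metrics

Now that we have located where the official default metrics are computed and printed, a closer look at object_detection_evaluation.py shows that many other metrics are computed but never printed.

2.3.1 Overall evaluation metrics

Where are they?

First, look at object_detection_evaluation.py: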

https://github.com/open-mmlab/mmaction2/blob/master/mmaction/core/evaluation/ava_evaluation/object_detection_evaluation.py

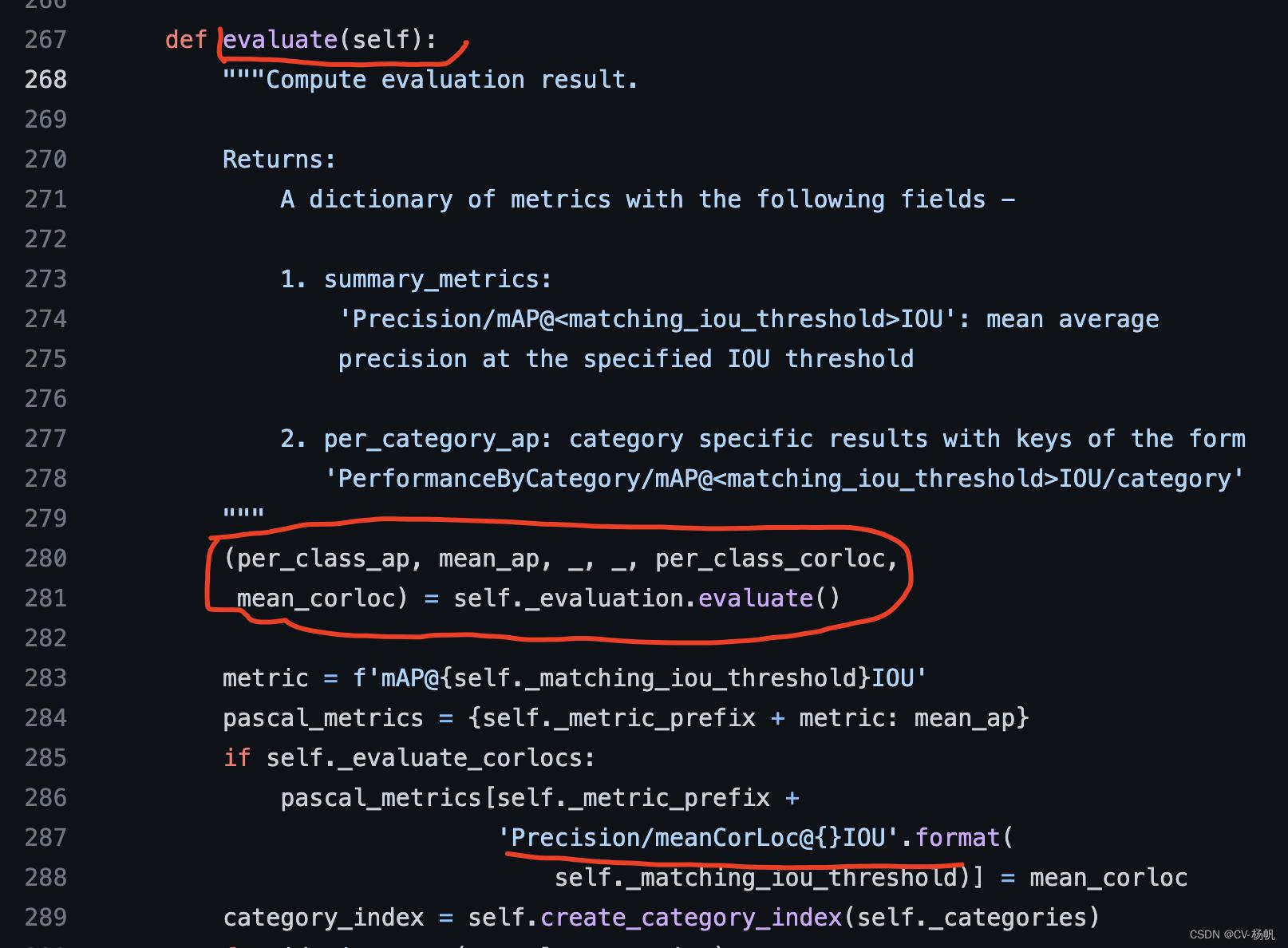

    (per_class_ap, mean_ap, _, _, per_class_corloc,
     mean_corloc) = self._evaluation.evaluate()

These two lines hide some information. There are six results in total (per_class_ap, mean_ap, _, _, per_class_corloc, mean_corloc), and two of them are discarded. So where does self._evaluation.evaluate() come from, and what are the two discarded values?

The answer is in the same file, object_detection_evaluation.py:

https://github.com/open-mmlab/mmaction2/blob/master/mmaction/core/evaluation/ava_evaluation/object_detection_evaluation.py

    return ObjectDetectionEvalMetrics(
        self.average_precision_per_class,
        mean_ap,
        self.precisions_per_class,
        self.recalls_per_class,
        self.corloc_per_class,
        mean_corloc,
    )

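So the two discarded values turn out to be self.precisions_per_class and self.recalls_per_class.

One is the precision of each action (behavior) and the other is the recall of each action (behavior), which is exactly what we are after.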
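The unpacking change can be tried in isolation. The sketch below uses a stand-in namedtuple: FakeEvalMetrics, its field names and its values are made up for illustration and are not mmaction2's ObjectDetectionEvalMetrics. It only shows that replacing the two underscores with names keeps the values that were previously thrown away.

```python
from collections import namedtuple

import numpy as np

# Hypothetical stand-in with six fields in the same order as the return
# statement quoted above; names and values are invented for this sketch.
FakeEvalMetrics = namedtuple('FakeEvalMetrics', [
    'per_class_ap', 'mean_ap', 'precisions_per_class',
    'recalls_per_class', 'per_class_corloc', 'mean_corloc',
])

result = FakeEvalMetrics(
    per_class_ap=np.array([0.5, np.nan]),
    mean_ap=0.5,
    precisions_per_class=[np.array([1.0, 0.5])],
    recalls_per_class=[np.array([0.5, 0.5])],
    per_class_corloc=np.array([0.0, 0.0]),
    mean_corloc=0.0,
)

# Default style: positions 3 and 4 are discarded via "_".
(per_class_ap, mean_ap, _, _, per_class_corloc, mean_corloc) = result

# Modified style: give those positions names so they can be printed later.
(per_class_ap, mean_ap, precisions_per_class, recalls_per_class,
 per_class_corloc, mean_corloc) = result

print(precisions_per_class[0], recalls_per_class[0])
```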

Having found these six values, let's print them and see what they look like.

Edit object_detection_evaluation.py

https://github.com/open-mmlab/mmaction2/blob/master/mmaction/core/evaluation/ava_evaluation/object_detection_evaluation.py

as follows:

    def evaluate(self):
        """Compute evaluation result.

        Returns:
            A dictionary of metrics with the following fields -

            1. summary_metrics:
                'Precision/mAP@<matching_iou_threshold>IOU': mean average
                precision at the specified IOU threshold
            2. per_category_ap: category specific results with keys of the form
                'PerformanceByCategory/mAP@<matching_iou_threshold>IOU/category'
        """
        # Order of the values returned by self._evaluation.evaluate():
        #   self.average_precision_per_class,
        #   mean_ap,
        #   self.precisions_per_class,
        #   self.recalls_per_class,
        #   self.corloc_per_class,
        #   mean_corloc,

        # Original unpacking, which discards precision and recall:
        # (per_class_ap, mean_ap, _, _, per_class_corloc,
        #  mean_corloc) = self._evaluation.evaluate()
        (per_class_ap, mean_ap, precisions_per_class, recalls_per_class,
         per_class_corloc, mean_corloc) = self._evaluation.evaluate()

        metric = f'mAP@{self._matching_iou_threshold}IOU'
        pascal_metrics = {self._metric_prefix + metric: mean_ap}
        if self._evaluate_corlocs:
            pascal_metrics[self._metric_prefix +
                           'Precision/meanCorLoc@{}IOU'.format(
                               self._matching_iou_threshold)] = mean_corloc
        category_index = self.create_category_index(self._categories)

        # average_precision_per_class --> per_class_ap
        print("per_class_ap[0]:", per_class_ap[0])
        print("len(per_class_ap)", len(per_class_ap))
        # mean_ap
        print("mean_ap:", mean_ap)
        # precisions_per_class
        print("precisions_per_class[0]:", precisions_per_class[0])
        print("len(precisions_per_class[0]):", len(precisions_per_class[0]))
        print("len(precisions_per_class)", len(precisions_per_class))
        # recalls_per_class
        print("recalls_per_class[0]:", recalls_per_class[0])
        print("len(recalls_per_class[0]):", len(recalls_per_class[0]))
        print("len(recalls_per_class)", len(recalls_per_class))
        # corloc_per_class --> per_class_corloc
        print("per_class_corloc[0]:", per_class_corloc[0])
        print("len(per_class_corloc)", len(per_class_corloc))
        # mean_corloc
        print("mean_corloc", mean_corloc)
        # Pause so the printed values can be inspected; remove after debugging.
        input()

        for idx in range(per_class_ap.size):
            if idx + self._label_id_offset in category_index:
                display_name = (
                    self._metric_prefix +
                    'PerformanceByCategory/AP@{}IOU/{}'.format(
                        self._matching_iou_threshold,
                        category_index[idx + self._label_id_offset]['name'],
                    ))
                pascal_metrics[display_name] = per_class_ap[idx]

                # Optionally add CorLoc metrics.classes
                if self._evaluate_corlocs:
                    display_name = (
                        self._metric_prefix +
                        'PerformanceByCategory/CorLoc@{}IOU/{}'.format(
                            self._matching_iou_threshold,
                            category_index[idx +
                                           self._label_id_offset]['name'],
                        ))
                    pascal_metrics[display_name] = per_class_corloc[idx]

        return pascal_metrics

Run:

cd /home/MPCLST/mmaction2_YF/

python tools/test.py configs/detection/ava/my_slowfast_kinetics_pretrained_r50_4x16x1_20e_ava_rgb3.py ./work_dirs/22-8-7ava-lr0005/slowfast_kinetics_pretrained_r50_4x16x1_20e_ava_rgb/latest.pth --eval mAP

Check the output:

[>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>] 44/44, 8.1 task/s, elapsed: 5s, ETA: 0s

Evaluating mAP ...

==> 0.0463362 seconds to read file /home/MPCLST/Dataset/annotations/val.csv

==> 0.0469692 seconds to Reading detection results

==> 0.0420914 seconds to read file AVA_20220809_115528_result.csv

==> 0.0423815 seconds to Reading detection results

==> 0.00971556 seconds to Convert groundtruth

==> 0.41019 seconds to convert detections

per_class_ap[0]: 0.08483126725928619

len(per_class_ap) 71

mean_ap: 0.09254295488519894

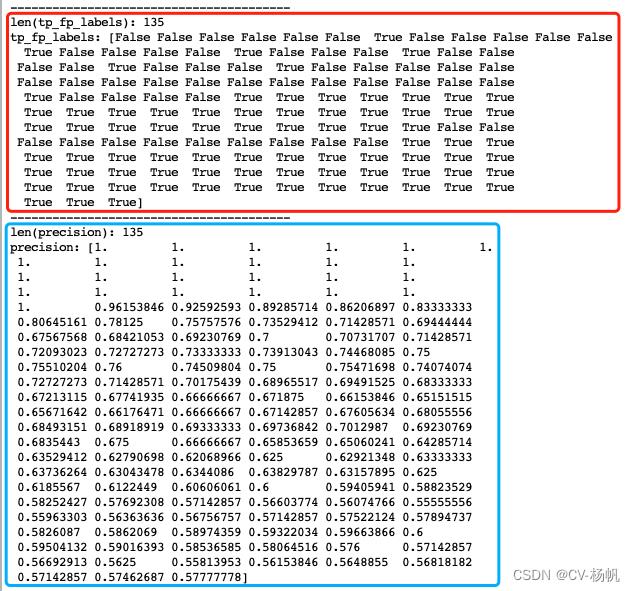

precisions_per_class[0]: [1. 1. 1. 1. 1. 1.

1. 1. 1. 1. 1. 1.

1. 1. 1. 0.9375 0.88235294 0.83333333

0.84210526 0.85 0.85714286 0.86363636 0.86956522 0.875

0.88 0.88461538 0.88888889 0.89285714 0.89655172 0.9

0.90322581 0.90625 0.90909091 0.91176471 0.91428571 0.91666667

0.91891892 0.92105263 0.8974359 0.9 0.87804878 0.85714286

0.8372093 0.81818182 0.82222222 0.80434783 0.80851064 0.8125

0.79591837 0.8 0.78431373 0.78846154 0.79245283 0.7962963

0.8 0.80357143 0.78947368 0.77586207 0.76271186 0.75

0.73770492 0.72580645 0.71428571 0.703125 0.69230769 0.68181818

0.67164179 0.66176471 0.66666667 0.67142857 0.67605634 0.66666667

0.65753425 0.64864865 0.64 0.63157895 0.62337662 0.61538462

0.62025316 0.6125 0.60493827 0.59756098 0.59036145 0.58333333

0.57647059 0.56976744 0.56321839 0.55681818 0.5505618 0.54444444

0.53846154 0.5326087 0.52688172 0.5212766 0.51578947 0.51041667

0.50515464 0.5 0.49494949 0.49 0.48514851 0.48039216

0.47572816 0.47115385 0.46666667 0.47169811 0.46728972 0.47222222

0.47706422 0.48181818 0.48648649 0.49107143 0.49557522 0.5

0.50434783 0.50862069 0.51282051 0.51694915 0.5210084 0.525

0.52892562 0.53278689 0.53658537 0.54032258 0.544 0.54761905

0.5511811 0.5546875 0.55813953 0.56153846 0.5648855 0.56818182

0.57142857 0.57462687 0.57777778]

len(precisions_per_class[0]): 135

len(precisions_per_class) 45

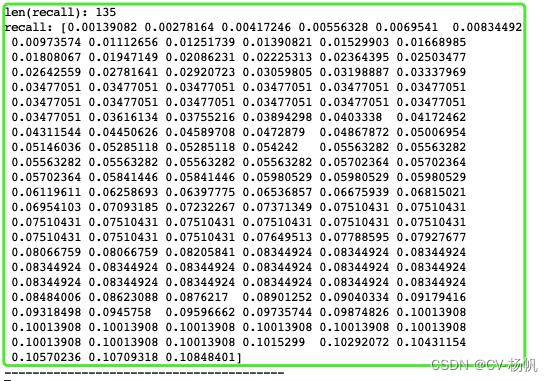

recalls_per_class[0]: [0.00139082 0.00278164 0.00417246 0.00556328 0.0069541 0.00834492

0.00973574 0.01112656 0.01251739 0.01390821 0.01529903 0.01668985

0.01808067 0.01947149 0.02086231 0.02086231 0.02086231 0.02086231

0.02225313 0.02364395 0.02503477 0.02642559 0.02781641 0.02920723

0.03059805 0.03198887 0.03337969 0.03477051 0.03616134 0.03755216

0.03894298 0.0403338 0.04172462 0.04311544 0.04450626 0.04589708

0.0472879 0.04867872 0.04867872 0.05006954 0.05006954 0.05006954

0.05006954 0.05006954 0.05146036 0.05146036 0.05285118 0.054242

0.054242 0.05563282 0.05563282 0.05702364 0.05841446 0.05980529

0.06119611 0.06258693 0.06258693 0.06258693 0.06258693 0.06258693

0.06258693 0.06258693 0.06258693 0.06258693 0.06258693 0.06258693

0.06258693 0.06258693 0.06397775 0.06536857 0.06675939 0.06675939

0.06675939 0.06675939 0.06675939 0.06675939 0.06675939 0.06675939

0.06815021 0.06815021 0.06815021 0.06815021 0.06815021 0.06815021

0.06815021 0.06815021 0.06815021 0.06815021 0.06815021 0.06815021

0.06815021 0.06815021 0.06815021 0.06815021 0.06815021 0.06815021

0.06815021 0.06815021 0.06815021 0.06815021 0.06815021 0.06815021

0.06815021 0.06815021 0.06815021 0.06954103 0.06954103 0.07093185

0.07232267 0.07371349 0.07510431 0.07649513 0.07788595 0.07927677

0.08066759 0.08205841 0.08344924 0.08484006 0.08623088 0.0876217

0.08901252 0.09040334 0.09179416 0.09318498 0.0945758 0.09596662

0.09735744 0.09874826 0.10013908 0.1015299 0.10292072 0.10431154

0.10570236 0.10709318 0.10848401]

len(recalls_per_class[0]): 135

len(recalls_per_class) 45

per_class_corloc[0]: 0.0

len(per_class_corloc) 71

mean_corloc 0.0

2.3.2 Meaning of the progress bar

First, an explanation of this line:

[>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>] 44/44, 8.1 task/s, elapsed: 5s, ETA: 0s

What does the 44 here mean?

My test set has 4 videos, each with 11 annotated frames, so 44 video frames are annotated in total.

2.3.3 average_precision_per_class

Next: average_precision_per_class --> per_class_ap

per_class_ap[0]: 0.08483126725928619

len(per_class_ap) 71

As shown, per_class_ap holds the AP value of each action (behavior).

2.3.4 mean_ap

mean_ap: 0.09254295488519894

This is the final result over all actions (behaviors): the mAP.
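In the evaluate() code quoted further down in this post, the unweighted branch computes mean_ap with np.nanmean over the per-class AP array, so classes whose AP is nan do not pull the mean down. A small sketch with made-up AP values shows the difference from a plain mean:

```python
import numpy as np

# Hypothetical per-class AP values; nan stands for classes with no AP.
per_class_ap = np.array([0.2, np.nan, 0.4, 0.0, np.nan])

# nanmean averages only the three non-nan entries.
mean_ap = np.nanmean(per_class_ap)

# A plain mean would be poisoned by the nan entries.
plain_mean = np.mean(per_class_ap)

print(mean_ap, plain_mean)
```

Note that a class with AP 0.0 still counts toward the mean; only nan entries are skipped.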

2.3.5 precisions_per_class

precisions_per_class[0]: [1. 1. 1. 1. 1. 1.

1. 1. 1. 1. 1. 1.

1. 1. 1. 0.9375 0.88235294 0.83333333

0.84210526 0.85 0.85714286 0.86363636 0.86956522 0.875

0.88 0.88461538 0.88888889 0.89285714 0.89655172 0.9

0.90322581 0.90625 0.90909091 0.91176471 0.91428571 0.91666667

0.91891892 0.92105263 0.8974359 0.9 0.87804878 0.85714286

0.8372093 0.81818182 0.82222222 0.80434783 0.80851064 0.8125

0.79591837 0.8 0.78431373 0.78846154 0.79245283 0.7962963

0.8 0.80357143 0.78947368 0.77586207 0.76271186 0.75

0.73770492 0.72580645 0.71428571 0.703125 0.69230769 0.68181818

0.67164179 0.66176471 0.66666667 0.67142857 0.67605634 0.66666667

0.65753425 0.64864865 0.64 0.63157895 0.62337662 0.61538462

0.62025316 0.6125 0.60493827 0.59756098 0.59036145 0.58333333

0.57647059 0.56976744 0.56321839 0.55681818 0.5505618 0.54444444

0.53846154 0.5326087 0.52688172 0.5212766 0.51578947 0.51041667

0.50515464 0.5 0.49494949 0.49 0.48514851 0.48039216

0.47572816 0.47115385 0.46666667 0.47169811 0.46728972 0.47222222

0.47706422 0.48181818 0.48648649 0.49107143 0.49557522 0.5

0.50434783 0.50862069 0.51282051 0.51694915 0.5210084 0.525

0.52892562 0.53278689 0.53658537 0.54032258 0.544 0.54761905

0.5511811 0.5546875 0.55813953 0.56153846 0.5648855 0.56818182

0.57142857 0.57462687 0.57777778]

len(precisions_per_class[0]): 135

len(precisions_per_class) 45

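At this point some puzzling numbers appear. My custom dataset has 71 action (behavior) classes, and the test set has 4*11=44 frames.

Yet the 135 and 45 here seem to have nothing to do with 71 and 44. So what do 135 and 45 mean?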

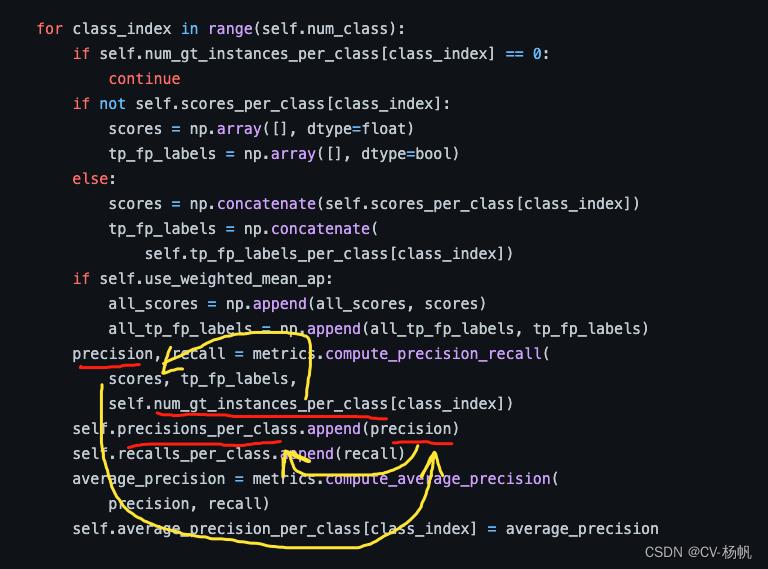

To find out what 135 and 45 mean, we need to look at object_detection_evaluation.py

https://github.com/open-mmlab/mmaction2/blob/master/mmaction/core/evaluation/ava_evaluation/object_detection_evaluation.py:

    def evaluate(self):
        """Compute evaluation result.

        Returns:
            A named tuple with the following fields -
                average_precision: float numpy array of average precision for
                    each class.
                mean_ap: mean average precision of all classes, float scalar
                precisions: List of precisions, each precision is a float numpy
                    array
                recalls: List of recalls, each recall is a float numpy array
                corloc: numpy float array
                mean_corloc: Mean CorLoc score for each class, float scalar
        """
        if (self.num_gt_instances_per_class == 0).any():
            logging.info(
                'The following classes have no ground truth examples: %s',
                np.squeeze(np.argwhere(self.num_gt_instances_per_class == 0)) +
                self.label_id_offset)

        if self.use_weighted_mean_ap:
            all_scores = np.array([], dtype=float)
            all_tp_fp_labels = np.array([], dtype=bool)

        for class_index in range(self.num_class):
            if self.num_gt_instances_per_class[class_index] == 0:
                continue
            if not self.scores_per_class[class_index]:
                scores = np.array([], dtype=float)
                tp_fp_labels = np.array([], dtype=bool)
            else:
                scores = np.concatenate(self.scores_per_class[class_index])
                tp_fp_labels = np.concatenate(
                    self.tp_fp_labels_per_class[class_index])
            if self.use_weighted_mean_ap:
                all_scores = np.append(all_scores, scores)
                all_tp_fp_labels = np.append(all_tp_fp_labels, tp_fp_labels)
            precision, recall = metrics.compute_precision_recall(
                scores, tp_fp_labels,
                self.num_gt_instances_per_class[class_index])
            self.precisions_per_class.append(precision)
            self.recalls_per_class.append(recall)
            average_precision = metrics.compute_average_precision(
                precision, recall)
            self.average_precision_per_class[class_index] = average_precision

        self.corloc_per_class = metrics.compute_cor_loc(
            self.num_gt_imgs_per_class,
            self.num_images_correctly_detected_per_class)

        if self.use_weighted_mean_ap:
            num_gt_instances = np.sum(self.num_gt_instances_per_class)
            precision, recall = metrics.compute_precision_recall(
                all_scores, all_tp_fp_labels, num_gt_instances)
            mean_ap = metrics.compute_average_precision(precision, recall)
        else:
            mean_ap = np.nanmean(self.average_precision_per_class)
        mean_corloc = np.nanmean(self.corloc_per_class)
        return ObjectDetectionEvalMetrics(
            self.average_precision_per_class,
            mean_ap,
            self.precisions_per_class,
            self.recalls_per_class,
            self.corloc_per_class,
            mean_corloc,
        )

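The code above carries a lot of information.

Start with 135. The name precisions_per_class suggests that 135 relates to the precision of a single class, but why would one class have 135 precision values?

From the code above, precisions_per_class is built by appending precision, and precision in turn is computed using num_gt_instances_per_class.

So far we only know that precision depends on num_gt_instances_per_class and also on scores, so the next step is to print the structure and contents of scores and num_gt_instances_per_class.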
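One plausible reading, sketched below, is that a precision/recall pair is produced for every detection of the class once detections are ranked by score, so the array length equals the number of detections. The function here is a simplified stand-in written for this post, not the library's metrics.compute_precision_recall, and the five detections are hypothetical.

```python
import numpy as np


def precision_recall_curve(scores, tp_fp_labels, num_gt):
    """Return one (precision, recall) point per detection, best score first."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(tp_fp_labels, dtype=bool)
    # Rank detections from highest to lowest confidence.
    labels = labels[np.argsort(-scores)]
    cum_tp = np.cumsum(labels)    # true positives among the top-k detections
    cum_fp = np.cumsum(~labels)   # false positives among the top-k detections
    precision = cum_tp / (cum_tp + cum_fp)
    recall = cum_tp / num_gt
    return precision, recall


# Hypothetical class: 5 detections, 4 ground-truth instances.
scores = [0.9, 0.8, 0.7, 0.6, 0.5]
tp_fp = [True, True, False, True, False]
precision, recall = precision_recall_curve(scores, tp_fp, num_gt=4)

print(len(precision), precision)
print(len(recall), recall)
```

The arrays printed earlier are consistent with this reading: recall grows in steps of 0.00139082, which is about 1/719, and the last entries 0.57777778 and 0.10848401 match 78/135 and 78/719, that is, 135 detections, 78 of them true positives, against 719 ground-truth instances. Likewise, because the loop skips classes without ground truth before appending, the list length 45 would be the number of classes that have at least one ground-truth instance. Both points are inferences from the quoted code and output rather than statements from the original post.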

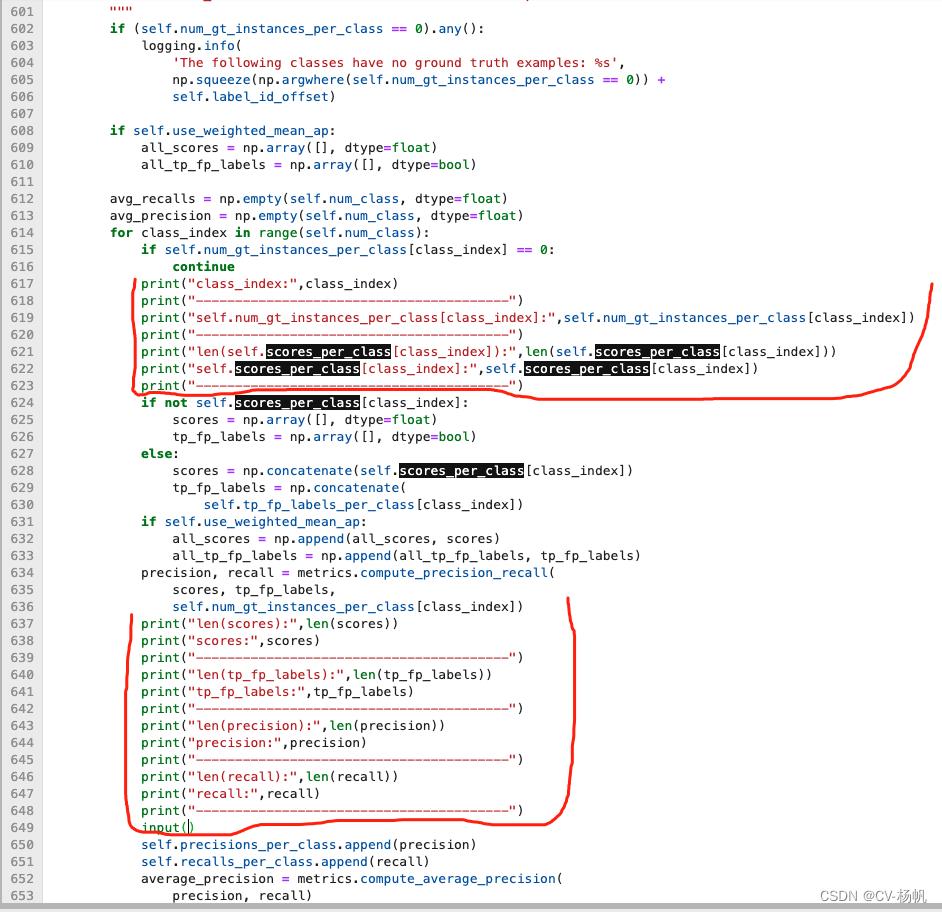

    def evaluate(self):
        """Compute evaluation result.

        Returns:
            A named tuple with the following fields -
                average_precision: float numpy array of average precision for
                    each class.
                mean_ap: mean average precision of all classes, float scalar
                precisions: List of precisions, each precision is a float numpy
                    array
                recalls: List of recalls, each recall is a float numpy array
                corloc: numpy float array
                mean_corloc: Mean CorLoc score for each class, float scalar
        """
        if (self.num_gt_instances_per_class == 0).any():
            logging.info(
                'The following classes have no ground truth examples: %s',
                np.squeeze(np.argwhere(self.num_gt_instances_per_class == 0)) +
                self.label_id_offset)

        if self.use_weighted_mean_ap:
            all_scores = np.array([], dtype=float)
            all_tp_fp_labels = np.array([], dtype=bool)

        # Added: per-class means of the recall and precision curves.
        # np.empty leaves the entries of skipped classes uninitialized.
        avg_recalls = np.empty(self.num_class, dtype=float)
        avg_precision = np.empty(self.num_class, dtype=float)

        for class_index in range(self.num_class):
            if self.num_gt_instances_per_class[class_index] == 0:
                continue

            # Added: debug output for this class
            print("class_index:", class_index)
            print("----------------------------------------")
            print("self.num_gt_instances_per_class[class_index]:",
                  self.num_gt_instances_per_class[class_index])
            print("----------------------------------------")
            print("len(self.scores_per_class[class_index]):",
                  len(self.scores_per_class[class_index]))
            print("self.scores_per_class[class_index]:",
                  self.scores_per_class[class_index])
            print("----------------------------------------")

            if not self.scores_per_class[class_index]:
                scores = np.array([], dtype=float)
                tp_fp_labels = np.array([], dtype=bool)
            else:
                scores = np.concatenate(self.scores_per_class[class_index])
                tp_fp_labels = np.concatenate(
                    self.tp_fp_labels_per_class[class_index])
            if self.use_weighted_mean_ap:
                all_scores = np.append(all_scores, scores)
                all_tp_fp_labels = np.append(all_tp_fp_labels, tp_fp_labels)
            precision, recall = metrics.compute_precision_recall(
                scores, tp_fp_labels,
                self.num_gt_instances_per_class[class_index])

            # Added: debug output for the inputs and the resulting curves
            print("len(scores):", len(scores))
            print("scores:", scores)
            print("----------------------------------------")
            print("len(tp_fp_labels):", len(tp_fp_labels))
            print("tp_fp_labels:", tp_fp_labels)
            print("----------------------------------------")
            print("len(precision):", len(precision))
            print("precision:", precision)
            print("----------------------------------------")
            print("len(recall):", len(recall))
            print("recall:", recall)
            print("----------------------------------------")
            # Pause after each class; remove after debugging.
            input()

            self.precisions_per_class.append(precision)
            self.recalls_per_class.append(recall)
            average_precision = metrics.compute_average_precision(
                precision, recall)
            self.average_precision_per_class[class_index] = average_precision

            avg_recalls[class_index] = recall.mean(axis=0)
            avg_precision[class_index] = precision.mean(axis=0)
            # print("avg_recalls", avg_recalls)
            # print("len(avg_recalls)", len(avg_recalls))
            # input()

        self.corloc_per_class = metrics.compute_cor_loc(
            self.num_gt_imgs_per_class,
            self.num_images_correctly_detected_per_class)

        if self.use_weighted_mean_ap:
            num_gt_instances = np.sum(self.num_gt_instances_per_class)
            precision, recall = metrics.compute_precision_recall(
                all_scores, all_tp_fp_labels, num_gt_instances)
            mean_ap = metrics.compute_average_precision(precision, recall)
        else:
            mean_ap = np.nanmean(self.average_precision_per_class)
        mean_corloc = np.nanmean(self.corloc_per_class)
        # NOTE: three values are added here, so the ObjectDetectionEvalMetrics
        # named tuple and every caller that unpacks it must be extended to match.
        return ObjectDetectionEvalMetrics(
            self.average_precision_per_class,
            mean_ap,
            self.precisions_per_class,
            self.recalls_per_class,
            self.corloc_per_class,
            mean_corloc,
            avg_recalls,
            avg_precision,
            self.num_gt_instances_per_class
        )

root@62ef18cf36dde126125c0d60:/home/MPCLST/mmaction2_YF# python tools/test.py configs/detection/ava/my_slowfast_kinetics_pretrained_r50_4x16x1_20e_ava_rgb.py ./work_dirs/22-8-7ava-lr00125/slowfast_kinetics_pretrained_r50_4x16x1_20e_ava_rgb/best_mAP&
