Monocular Camera Calibration with MATLAB and OpenCV (Calibration Images Included)

Posted by master_wang22327


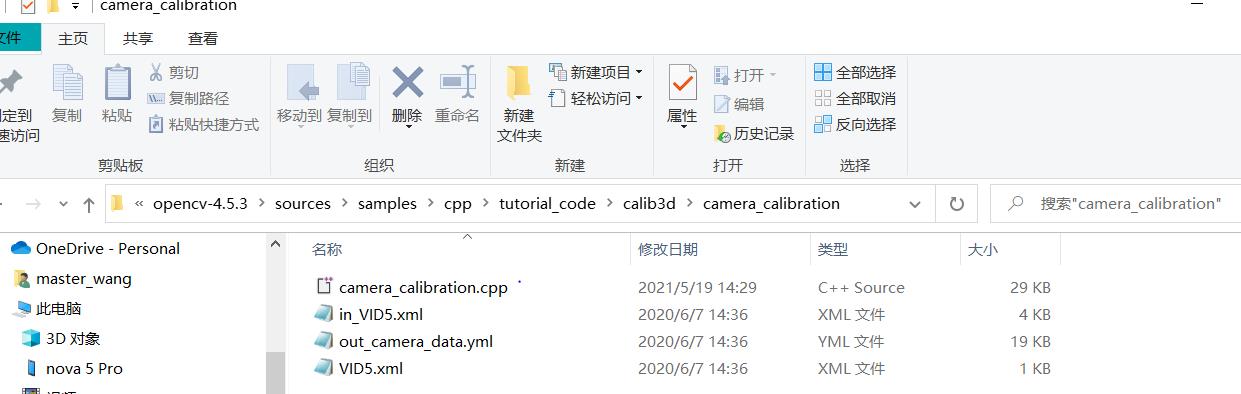

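This post covers monocular camera calibration with OpenCV's C++ code. The images are the same as in the previous post: 20-megapixel photos of a calibration board. The calibration code is the sample that ships with the OpenCV 4.5.3 sources. All the files the calibration program needs are in

E:\opencv--c++\opencv-4.5.3\sources\samples\cpp\tutorial_code\calib3d\camera_calibration

Of these, in_VID5.xml stores the calibration configuration as XML. Its contents are shown below.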

<?xml version="1.0"?>

<opencv_storage>

<Settings>

<!-- Number of inner corners per a item row and column. (square, circle) -->

<BoardSize_Width> 9</BoardSize_Width>

<BoardSize_Height>6</BoardSize_Height>

<!-- The size of a square in some user defined metric system (pixel, millimeter)-->

<Square_Size>50</Square_Size>

<!-- The type of input used for camera calibration. One of: CHESSBOARD CIRCLES_GRID ASYMMETRIC_CIRCLES_GRID -->

<Calibrate_Pattern>"CHESSBOARD"</Calibrate_Pattern>

<!-- The input to use for calibration.

To use an input camera -> give the ID of the camera, like "1"

To use an input video -> give the path of the input video, like "/tmp/x.avi"

To use an image list -> give the path to the XML or YAML file containing the list of the images, like "/tmp/circles_list.xml"

-->

<Input>"images/CameraCalibration/VID5/VID5.xml"</Input>

<!-- If true (non-zero) we flip the input images around the horizontal axis.-->

<Input_FlipAroundHorizontalAxis>0</Input_FlipAroundHorizontalAxis>

<!-- Time delay between frames in case of camera. -->

<Input_Delay>100</Input_Delay>

<!-- How many frames to use, for calibration. -->

<Calibrate_NrOfFrameToUse>25</Calibrate_NrOfFrameToUse>

<!-- Consider only fy as a free parameter, the ratio fx/fy stays the same as in the input cameraMatrix.

Use or not setting. 0 - False Non-Zero - True-->

<Calibrate_FixAspectRatio> 1 </Calibrate_FixAspectRatio>

<!-- If true (non-zero) tangential distortion coefficients are set to zeros and stay zero.-->

<Calibrate_AssumeZeroTangentialDistortion>1</Calibrate_AssumeZeroTangentialDistortion>

<!-- If true (non-zero) the principal point is not changed during the global optimization.-->

<Calibrate_FixPrincipalPointAtTheCenter> 1 </Calibrate_FixPrincipalPointAtTheCenter>

<!-- The name of the output log file. -->

<Write_outputFileName>"out_camera_data.xml"</Write_outputFileName>

<!-- If true (non-zero) we write to the output file the feature points.-->

<Write_DetectedFeaturePoints>1</Write_DetectedFeaturePoints>

<!-- If true (non-zero) we write to the output file the extrinsic camera parameters.-->

<Write_extrinsicParameters>1</Write_extrinsicParameters>

<!-- If true (non-zero) we write to the output file the refined 3D target grid points.-->

<Write_gridPoints>1</Write_gridPoints>

<!-- If true (non-zero) we show after calibration the undistorted images.-->

<Show_UndistortedImage>1</Show_UndistortedImage>

<!-- If true (non-zero) will be used fisheye camera model.-->

<Calibrate_UseFisheyeModel>0</Calibrate_UseFisheyeModel>

<!-- If true (non-zero) distortion coefficient k1 will be equals to zero.-->

<Fix_K1>0</Fix_K1>

<!-- If true (non-zero) distortion coefficient k2 will be equals to zero.-->

<Fix_K2>0</Fix_K2>

<!-- If true (non-zero) distortion coefficient k3 will be equals to zero.-->

<Fix_K3>0</Fix_K3>

<!-- If true (non-zero) distortion coefficient k4 will be equals to zero.-->

<Fix_K4>1</Fix_K4>

<!-- If true (non-zero) distortion coefficient k5 will be equals to zero.-->

<Fix_K5>1</Fix_K5>

</Settings>

</opencv_storage>

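The board has 9 × 6 inner corners (9 along the width, 6 along the height), which is the size OpenCV conventionally uses for its calibration board.

The real distance between two adjacent corners (Square_Size) is 50 mm.

For the pattern, CHESSBOARD is the right choice: it means a black-and-white chessboard. Other pattern types exist as well; pick whichever matches the board in your photos.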
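To make the role of the board size and the square size concrete, here is a minimal sketch of how the 3D corner positions of a planar chessboard can be laid out. It follows the same idea as the sample's `calcBoardCornerPositions`, but `Point3`, `boardCorners` and `gridWidth` are illustrative names that stand in for the OpenCV types so the snippet needs no OpenCV.

```cpp
#include <cstddef>
#include <vector>

// Plain 3D point; a stand-in for cv::Point3f (assumption for this sketch).
struct Point3 {
    float x, y, z;
};

// Corner (i, j) of a planar chessboard sits at (j * squareSize, i * squareSize, 0),
// with j running along the width and i along the height of the board.
std::vector<Point3> boardCorners(int boardWidth, int boardHeight, float squareSize) {
    std::vector<Point3> corners;
    corners.reserve(static_cast<std::size_t>(boardWidth) * boardHeight);
    for (int i = 0; i < boardHeight; ++i)
        for (int j = 0; j < boardWidth; ++j)
            corners.push_back({j * squareSize, i * squareSize, 0.0f});
    return corners;
}

// Distance between the first and the last corner of one row.
float gridWidth(int boardWidth, float squareSize) {
    return squareSize * (boardWidth - 1);
}
```

With a 9 × 6 board and 50 mm squares this yields 54 corners, and the top row spans 400 mm. The square size only sets the metric scale of these points.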

The Input option points to an XML file that lists the input images. Its contents look like this:

<?xml version="1.0"?>

<opencv_storage>

<images>

images/CameraCalibration/VID5/xx1.jpg

images/CameraCalibration/VID5/xx2.jpg

images/CameraCalibration/VID5/xx3.jpg

images/CameraCalibration/VID5/xx4.jpg

images/CameraCalibration/VID5/xx5.jpg

images/CameraCalibration/VID5/xx6.jpg

images/CameraCalibration/VID5/xx7.jpg

images/CameraCalibration/VID5/xx8.jpg

</images>

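</opencv_storage>

Only the image paths need to change. Note that xx1.jpg is just the file name used in the sample: the program passes each entry to imread as-is, with no wildcard matching, so every entry must be the actual path of one of your images, for example left1.jpg.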
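Writing such a list by hand gets tedious with many images. The following is a minimal sketch that generates the file contents, assuming the images are numbered 1 to `count` inside one directory and that the paths contain no spaces. `buildImageListXml` and its parameters are hypothetical names, not part of OpenCV.

```cpp
#include <sstream>
#include <string>

// Builds an image list in the layout shown above: one path per line inside <images>.
// Assumes files named 1<ext>, 2<ext>, ... and paths without spaces.
std::string buildImageListXml(const std::string& dir, int count, const std::string& ext) {
    std::ostringstream out;
    out << "<?xml version=\"1.0\"?>\n<opencv_storage>\n<images>\n";
    for (int i = 1; i <= count; ++i)
        out << dir << "/" << i << ext << "\n";
    out << "</images>\n</opencv_storage>\n";
    return out.str();
}
```

The returned string can be written to a file such as VID5.xml with `std::ofstream`.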

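Calibrate_NrOfFrameToUse sets how many images are used for calibration. Since I selected 20 images for calibration in the previous post, it can be changed to 20 here.

Write_outputFileName sets the name of the file that stores the calibration results, again in XML format.

That covers the main settings in the external parameter file of OpenCV's camera calibration sample. Below are the files I actually modified and used for calibration.

The first is the VID5.xml I actually used, with the image locations changed. There are 21 black-and-white chessboard images at 20 megapixels: 20 for calibration and 1 for checking the calibration result. The calibration images have been uploaded; download them and adjust the paths to suit your setup.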

<?xml version="1.0"?>

<opencv_storage>

<images>

E:/c++opencv_Projection/camera_calibration/calib/real_captured_image/1.bmp

E:/c++opencv_Projection/camera_calibration/calib/real_captured_image/2.bmp

E:/c++opencv_Projection/camera_calibration/calib/real_captured_image/3.bmp

E:/c++opencv_Projection/camera_calibration/calib/real_captured_image/4.bmp

E:/c++opencv_Projection/camera_calibration/calib/real_captured_image/5.bmp

E:/c++opencv_Projection/camera_calibration/calib/real_captured_image/6.bmp

E:/c++opencv_Projection/camera_calibration/calib/real_captured_image/7.bmp

E:/c++opencv_Projection/camera_calibration/calib/real_captured_image/8.bmp

E:/c++opencv_Projection/camera_calibration/calib/real_captured_image/9.bmp

E:/c++opencv_Projection/camera_calibration/calib/real_captured_image/10.bmp

E:/c++opencv_Projection/camera_calibration/calib/real_captured_image/11.bmp

E:/c++opencv_Projection/camera_calibration/calib/real_captured_image/12.bmp

E:/c++opencv_Projection/camera_calibration/calib/real_captured_image/13.bmp

E:/c++opencv_Projection/camera_calibration/calib/real_captured_image/14.bmp

E:/c++opencv_Projection/camera_calibration/calib/real_captured_image/15.bmp

E:/c++opencv_Projection/camera_calibration/calib/real_captured_image/16.bmp

E:/c++opencv_Projection/camera_calibration/calib/real_captured_image/17.bmp

E:/c++opencv_Projection/camera_calibration/calib/real_captured_image/18.bmp

E:/c++opencv_Projection/camera_calibration/calib/real_captured_image/19.bmp

E:/c++opencv_Projection/camera_calibration/calib/real_captured_image/20.bmp

E:/c++opencv_Projection/camera_calibration/calib/real_captured_image/21.bmp

</images>

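</opencv_storage>

The second file is in_VID5.xml. Here I changed the parameters described above: a real square size of 25 mm, the location of the input file, 20 calibration images, and so on.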

<?xml version="1.0"?>

<opencv_storage>

<Settings>

<!-- Number of inner corners per a item row and column. (square, circle) -->

<BoardSize_Width> 9</BoardSize_Width>

<BoardSize_Height>6</BoardSize_Height>

<!-- The size of a square in some user defined metric system (pixel, millimeter)-->

<Square_Size>25</Square_Size>

<!-- The type of input used for camera calibration. One of: CHESSBOARD CIRCLES_GRID ASYMMETRIC_CIRCLES_GRID -->

<Calibrate_Pattern>"CHESSBOARD"</Calibrate_Pattern>

<!-- The input to use for calibration.

To use an input camera -> give the ID of the camera, like "1"

To use an input video -> give the path of the input video, like "/tmp/x.avi"

To use an image list -> give the path to the XML or YAML file containing the list of the images, like "/tmp/circles_list.xml"

-->

<Input>"E:/c++opencv_Projection/camera_calibration/calib/VID5.xml"</Input>

<!-- If true (non-zero) we flip the input images around the horizontal axis.-->

<Input_FlipAroundHorizontalAxis>0</Input_FlipAroundHorizontalAxis>

<!-- Time delay between frames in case of camera. -->

<Input_Delay>100</Input_Delay>

<!-- How many frames to use, for calibration. -->

<Calibrate_NrOfFrameToUse>20</Calibrate_NrOfFrameToUse>

<!-- Consider only fy as a free parameter, the ratio fx/fy stays the same as in the input cameraMatrix.

Use or not setting. 0 - False Non-Zero - True-->

<Calibrate_FixAspectRatio> 1 </Calibrate_FixAspectRatio>

<!-- If true (non-zero) tangential distortion coefficients are set to zeros and stay zero.-->

<Calibrate_AssumeZeroTangentialDistortion>1</Calibrate_AssumeZeroTangentialDistortion>

<!-- If true (non-zero) the principal point is not changed during the global optimization.-->

<Calibrate_FixPrincipalPointAtTheCenter> 1 </Calibrate_FixPrincipalPointAtTheCenter>

<!-- The name of the output log file. -->

<Write_outputFileName>"out_camera_data.xml"</Write_outputFileName>

<!-- If true (non-zero) we write to the output file the feature points.-->

<Write_DetectedFeaturePoints>1</Write_DetectedFeaturePoints>

<!-- If true (non-zero) we write to the output file the extrinsic camera parameters.-->

<Write_extrinsicParameters>1</Write_extrinsicParameters>

<!-- If true (non-zero) we write to the output file the refined 3D target grid points.-->

<Write_gridPoints>1</Write_gridPoints>

<!-- If true (non-zero) we show after calibration the undistorted images.-->

<Show_UndistortedImage>1</Show_UndistortedImage>

<!-- If true (non-zero) will be used fisheye camera model.-->

<Calibrate_UseFisheyeModel>0</Calibrate_UseFisheyeModel>

<!-- If true (non-zero) distortion coefficient k1 will be equals to zero.-->

<Fix_K1>0</Fix_K1>

<!-- If true (non-zero) distortion coefficient k2 will be equals to zero.-->

<Fix_K2>0</Fix_K2>

<!-- If true (non-zero) distortion coefficient k3 will be equals to zero.-->

<Fix_K3>0</Fix_K3>

<!-- If true (non-zero) distortion coefficient k4 will be equals to zero.-->

<Fix_K4>1</Fix_K4>

<!-- If true (non-zero) distortion coefficient k5 will be equals to zero.-->

<Fix_K5>1</Fix_K5>

</Settings>

</opencv_storage>

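Next comes a walkthrough of the source code, with a few modifications. This uses OpenCV 4.5.3. The source contains a lot of code that checks for a fisheye camera, a video stream, or other input modes. Since we only use an image list as input, we only need to read the code relevant to our calibration. Some comments are written directly in the code.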
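Before the full listing, the way the sample decides between a camera, a video file, and an image list can be condensed into a few lines. This is a simplified sketch of the checks in `Settings::validate()`; `InputKind` and `classifyInput` are illustrative names, and unlike the sample the sketch does not open or parse the file.

```cpp
#include <cctype>
#include <string>

enum class InputKind { Invalid, Camera, ImageList, VideoFile };

// Same order of checks as the sample: empty -> invalid, leading digit -> camera ID,
// list-file extension (.xml/.yaml/.yml) -> image list, anything else -> video file.
InputKind classifyInput(const std::string& input) {
    if (input.empty())
        return InputKind::Invalid;
    if (std::isdigit(static_cast<unsigned char>(input[0])))
        return InputKind::Camera;
    const bool looksLikeList = input.find(".xml") != std::string::npos ||
                               input.find(".yaml") != std::string::npos ||
                               input.find(".yml") != std::string::npos;
    return looksLikeList ? InputKind::ImageList : InputKind::VideoFile;
}
```

With the Input value used in this post, a path ending in VID5.xml, the result is `InputKind::ImageList`, which is why only the image-list branches of the listing matter here.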

#include <iostream>
#include <sstream>
#include <string>
#include <ctime>
#include <cstdio>

#pragma warning (disable: 4996) // disables MSVC warning C4996 to work around a build error

#include <opencv2/core.hpp>
#include <opencv2/core/utility.hpp>
#include <opencv2/imgproc.hpp>
#include <opencv2/calib3d.hpp>
#include <opencv2/imgcodecs.hpp>
#include <opencv2/videoio.hpp>
#include <opencv2/highgui.hpp>

using namespace cv;
using namespace std;

class Settings
{
public:
    Settings() : goodInput(false) {}
    enum Pattern { NOT_EXISTING, CHESSBOARD, CIRCLES_GRID, ASYMMETRIC_CIRCLES_GRID };
    enum InputType { INVALID, CAMERA, VIDEO_FILE, IMAGE_LIST };

    void write(FileStorage& fs) const // Write serialization for this class
    {
        fs << "{"
           << "BoardSize_Width" << boardSize.width
           << "BoardSize_Height" << boardSize.height
           << "Square_Size" << squareSize
           << "Calibrate_Pattern" << patternToUse
           << "Calibrate_NrOfFrameToUse" << nrFrames
           << "Calibrate_FixAspectRatio" << aspectRatio
           << "Calibrate_AssumeZeroTangentialDistortion" << calibZeroTangentDist
           << "Calibrate_FixPrincipalPointAtTheCenter" << calibFixPrincipalPoint
           << "Write_DetectedFeaturePoints" << writePoints
           << "Write_extrinsicParameters" << writeExtrinsics
           << "Write_gridPoints" << writeGrid
           << "Write_outputFileName" << outputFileName
           << "Show_UndistortedImage" << showUndistorted
           << "Input_FlipAroundHorizontalAxis" << flipVertical
           << "Input_Delay" << delay
           << "Input" << input
           << "}";
    }

    void read(const FileNode& node) // Read serialization for this class
    {
        node["BoardSize_Width" ] >> boardSize.width;
        node["BoardSize_Height"] >> boardSize.height;
        node["Calibrate_Pattern"] >> patternToUse;
        node["Square_Size"] >> squareSize;
        node["Calibrate_NrOfFrameToUse"] >> nrFrames;
        node["Calibrate_FixAspectRatio"] >> aspectRatio;
        node["Write_DetectedFeaturePoints"] >> writePoints;
        node["Write_extrinsicParameters"] >> writeExtrinsics;
        node["Write_gridPoints"] >> writeGrid;
        node["Write_outputFileName"] >> outputFileName;
        node["Calibrate_AssumeZeroTangentialDistortion"] >> calibZeroTangentDist;
        node["Calibrate_FixPrincipalPointAtTheCenter"] >> calibFixPrincipalPoint;
        node["Calibrate_UseFisheyeModel"] >> useFisheye;
        node["Input_FlipAroundHorizontalAxis"] >> flipVertical;
        node["Show_UndistortedImage"] >> showUndistorted;
        node["Input"] >> input;
        node["Input_Delay"] >> delay;
        node["Fix_K1"] >> fixK1;
        node["Fix_K2"] >> fixK2;
        node["Fix_K3"] >> fixK3;
        node["Fix_K4"] >> fixK4;
        node["Fix_K5"] >> fixK5;

        validate();
    }

    void validate()
    {
        goodInput = true;
        if (boardSize.width <= 0 || boardSize.height <= 0)
        {
            cerr << "Invalid Board size: " << boardSize.width << " " << boardSize.height << endl;
            goodInput = false;
        }
        if (squareSize <= 10e-6)
        {
            cerr << "Invalid square size " << squareSize << endl;
            goodInput = false;
        }
        if (nrFrames <= 0)
        {
            cerr << "Invalid number of frames " << nrFrames << endl;
            goodInput = false;
        }

        if (input.empty()) // Check for valid input
            inputType = INVALID;
        else
        {
            if (input[0] >= '0' && input[0] <= '9')
            {
                stringstream ss(input);
                ss >> cameraID;
                inputType = CAMERA;
            }
            else
            {
                if (isListOfImages(input) && readStringList(input, imageList))
                {
                    inputType = IMAGE_LIST;
                    nrFrames = (nrFrames < (int)imageList.size()) ? nrFrames : (int)imageList.size();
                }
                else
                    inputType = VIDEO_FILE;
            }
            if (inputType == CAMERA)
                inputCapture.open(cameraID);
            if (inputType == VIDEO_FILE)
                inputCapture.open(input);
            if (inputType != IMAGE_LIST && !inputCapture.isOpened())
                inputType = INVALID;
        }
        if (inputType == INVALID)
        {
            cerr << " Input does not exist: " << input;
            goodInput = false;
        }

        flag = 0;
        if(calibFixPrincipalPoint) flag |= CALIB_FIX_PRINCIPAL_POINT;
        if(calibZeroTangentDist) flag |= CALIB_ZERO_TANGENT_DIST;
        if(aspectRatio) flag |= CALIB_FIX_ASPECT_RATIO;
        if(fixK1) flag |= CALIB_FIX_K1;
        if(fixK2) flag |= CALIB_FIX_K2;
        if(fixK3) flag |= CALIB_FIX_K3;
        if(fixK4) flag |= CALIB_FIX_K4;
        if(fixK5) flag |= CALIB_FIX_K5;

        if (useFisheye)
        {
            // the fisheye model has its own enum, so overwrite the flags
            flag = fisheye::CALIB_FIX_SKEW | fisheye::CALIB_RECOMPUTE_EXTRINSIC;
            if(fixK1) flag |= fisheye::CALIB_FIX_K1;
            if(fixK2) flag |= fisheye::CALIB_FIX_K2;
            if(fixK3) flag |= fisheye::CALIB_FIX_K3;
            if(fixK4) flag |= fisheye::CALIB_FIX_K4;
            if (calibFixPrincipalPoint) flag |= fisheye::CALIB_FIX_PRINCIPAL_POINT;
        }

        calibrationPattern = NOT_EXISTING;
        if (!patternToUse.compare("CHESSBOARD")) calibrationPattern = CHESSBOARD;
        if (!patternToUse.compare("CIRCLES_GRID")) calibrationPattern = CIRCLES_GRID;
        if (!patternToUse.compare("ASYMMETRIC_CIRCLES_GRID")) calibrationPattern = ASYMMETRIC_CIRCLES_GRID;
        if (calibrationPattern == NOT_EXISTING)
        {
            cerr << " Camera calibration mode does not exist: " << patternToUse << endl;
            goodInput = false;
        }
        atImageList = 0;
    }

    Mat nextImage()
    {
        Mat result;
        if( inputCapture.isOpened() )
        {
            Mat view0;
            inputCapture >> view0;
            view0.copyTo(result);
        }
        else if( atImageList < imageList.size() )
            result = imread(imageList[atImageList++], IMREAD_COLOR);

        return result;
    }

    static bool readStringList( const string& filename, vector<string>& l )
    {
        l.clear();
        FileStorage fs(filename, FileStorage::READ);
        if( !fs.isOpened() )
            return false;
        FileNode n = fs.getFirstTopLevelNode();
        if( n.type() != FileNode::SEQ )
            return false;
        FileNodeIterator it = n.begin(), it_end = n.end();
        for( ; it != it_end; ++it )
            l.push_back((string)*it);
        return true;
    }

    static bool isListOfImages( const string& filename)
    {
        string s(filename);
        // Look for file extension
        if( s.find(".xml") == string::npos && s.find(".yaml") == string::npos && s.find(".yml") == string::npos )
            return false;
        else
            return true;
    }

public:
    Size boardSize;              // The size of the board -> Number of items by width and height
    Pattern calibrationPattern;  // One of the Chessboard, circles, or asymmetric circle pattern
    float squareSize;            // The size of a square in your defined unit (point, millimeter,etc).
    int nrFrames;                // The number of frames to use from the input for calibration
    float aspectRatio;           // The aspect ratio
    int delay;                   // In case of a video input
    bool writePoints;            // Write detected feature points
    bool writeExtrinsics;        // Write extrinsic parameters
    bool writeGrid;              // Write refined 3D target grid points
    bool calibZeroTangentDist;   // Assume zero tangential distortion
    bool calibFixPrincipalPoint; // Fix the principal point at the center
    bool flipVertical;           // Flip the captured images around the horizontal axis
    string outputFileName;       // The name of the file where to write
    bool showUndistorted;        // Show undistorted images after calibration
    string input;                // The input ->
    bool useFisheye;             // use fisheye camera model for calibration
    bool fixK1;                  // fix K1 distortion coefficient
    bool fixK2;                  // fix K2 distortion coefficient
    bool fixK3;                  // fix K3 distortion coefficient
    bool fixK4;                  // fix K4 distortion coefficient
    bool fixK5;                  // fix K5 distortion coefficient

    int cameraID;
    vector<string> imageList;
    size_t atImageList;
    VideoCapture inputCapture;
    InputType inputType;
    bool goodInput;
    int flag;

private:
    string patternToUse;
};

static inline void read(const FileNode& node, Settings& x, const Settings& default_value = Settings())
{
    if(node.empty())
        x = default_value;
    else
        x.read(node);
}

enum { DETECTION = 0, CAPTURING = 1, CALIBRATED = 2 };

bool runCalibrationAndSave(Settings& s, Size imageSize, Mat& cameraMatrix, Mat& distCoeffs,
                           vector<vector<Point2f> > imagePoints, float grid_width, bool release_object);

int main(int argc, char* argv[])
{
    // Note: @settings below takes the absolute path of in_VID5.xml as input.
    // d is already determined by the settings file, so it can be left unset.
    // winSize is half the side of the search window used for sub-pixel corner refinement.
    const String keys
        = "{help h usage ? | | print this message }"
          "{@settings |E:/c++opencv_Projection/camera_calibration/calib/in_VID5.xml| input setting file}"
          "{d | | actual distance between top-left and top-right corners of the calibration grid }"
          "{winSize | 5 | Half of search window for cornerSubPix }";
    CommandLineParser parser(argc, argv, keys);
    parser.about("This is a camera calibration sample.\n"
                 "Usage: camera_calibration [configuration_file -- default ./default.xml]\n"
                 "Near the sample file you'll find the configuration file, which has detailed help of "
                 "how to edit it. It may be any OpenCV supported file format XML/YAML.");
    if (!parser.check())
    {
        parser.printErrors();
        return 0;
    }

    if (parser.has("help"))
    {
        parser.printMessage();
        return 0;
    }

    //! [file_read]
    Settings s;
    const string inputSettingsFile = parser.get<string>(0);
    FileStorage fs(inputSettingsFile, FileStorage::READ); // Read the settings
    if (!fs.isOpened())
    {
        cout << "Could not open the configuration file: \"" << inputSettingsFile << "\"" << endl;
        parser.printMessage();
        return -1;
    }
    fs["Settings"] >> s;
    fs.release(); // close Settings file
    //! [file_read]

    //FileStorage fout("settings.yml", FileStorage::WRITE); // write config as YAML
    //fout << "Settings" << s;

    if (!s.goodInput)
    {
        cout << "Invalid input detected. Application stopping. " << endl;
        return -1;
    }

    int winSize = parser.get<int>("winSize");

    float grid_width = s.squareSize * (s.boardSize.width - 1);
    bool release_object = false;
    if (parser.has("d"))
    {
        grid_width = parser.get<float>("d");
        release_object = true;
    }

    vector<vector<Point2f> > imagePoints;
    Mat cameraMatrix, distCoeffs;
    Size imageSize;
    int mode = s.inputType == Settings::IMAGE_LIST ? CAPTURING : DETECTION;
    clock_t prevTimestamp = 0;
    const Scalar RED(0,0,255), GREEN(0,255,0);
    const char ESC_KEY = 27;

    //! [get_input]
    for(;;)
    {
        Mat view;
        bool blinkOutput = false;

        view = s.nextImage();

        //----- If no more image, or got enough, then stop calibration and show result -------------
        if( mode == CAPTURING && imagePoints.size() >= (size_t)s.nrFrames )
        {
            if (runCalibrationAndSave(s, imageSize, cameraMatrix, distCoeffs, imagePoints, grid_width,
                                      release_object))
            {
                mode = CALIBRATED;
                cout << "mode=capturing and imagepoints="<<imagePoints.size()<<"mode=calibrated"<< endl; // log the state change
            }
            else
                mode = DETECTION;
        }
        if(view.empty()) // If there are no more images stop the loop
        {
            // if calibration threshold was not reached yet, calibrate now
            if( mode != CALIBRATED && !imagePoints.empty() )
                runCalibrationAndSave(s, imageSize, cameraMatrix, distCoeffs, imagePoints, grid_width,
                                      release_object);
            break;
        }
        //! [get_input]

        imageSize = view.size(); // Format input image.
        if( s.flipVertical ) flip( view, view, 0 );

        //! [find_pattern]
        vector<Point2f> pointBuf;

        bool found;

        int chessBoardFlags = CALIB_CB_ADAPTIVE_THRESH | CALIB_CB_NORMALIZE_IMAGE;

        if(!s.useFisheye)
        {
            // fast check erroneously fails with high distortions like fisheye
            chessBoardFlags |= CALIB_CB_FAST_CHECK;
        }

        switch( s.calibrationPattern ) // Find feature points on the input format
        {
        case Settings::CHESSBOARD:
            found = findChessboardCorners( view, s.boardSize, pointBuf, chessBoardFlags);
            break;
        case Settings::CIRCLES_GRID:
            found = findCirclesGrid( view, s.boardSize, pointBuf );
            break;
        case Settings::ASYMMETRIC_CIRCLES_GRID:
            found = findCirclesGrid( view, s.boardSize, pointBuf, CALIB_CB_ASYMMETRIC_GRID );
            break;
        default:
            found = false;
            break;
        }
        //! [find_pattern]

        //! [pattern_found]
        if ( found) // If done with success,
        {
            // improve the found corners' coordinate accuracy for chessboard
            if( s.calibrationPattern == Settings::CHESSBOARD)
            {
                Mat viewGray;
                cvtColor(view, viewGray, COLOR_BGR2GRAY);
                cornerSubPix( viewGray, pointBuf, Size(winSize,winSize),
                              Size(-1,-1), TermCriteria( TermCriteria::EPS+TermCriteria::COUNT, 30, 0.0001 ));
            }

            if( mode == CAPTURING && // For camera only take new samples after delay time
                (!s.inputCapture.isOpened() || clock() - prevTimestamp > s.delay*1e-3*CLOCKS_PER_SEC) )
            {
                imagePoints.push_back(pointBuf);
                prevTimestamp = clock();
                blinkOutput = s.inputCapture.isOpened();
            }

            // Draw the corners.
            drawChessboardCorners( view, s.boardSize, Mat(pointBuf), found );
        }
        //! [pattern_found]

        //----------------------------- Output Text ------------------------------------------------
        //! [output_text]
        string msg = (mode == CAPTURING) ? "100/100" :
                      mode == CALIBRATED ? "Calibrated" : "Press 'g' to start";
        int baseLine = 0;
        Size textSize = getTextSize(msg, 1, 10, 3, &baseLine);
        Point textOrigin(view.cols - 2*textSize.width - 10, view.rows - 2*baseLine - 10);

        if( mode == CAPTURING )
        {
            if(s.showUndistorted)
                msg = cv::format( "%d/%d Undist", (int)imagePoints.size(), s.nrFrames );
            else
                msg = cv::format( "%d/%d", (int)imagePoints.size(), s.nrFrames );
        }

        putText( view, msg, textOrigin, 1, 10, mode == CALIBRATED ? GREEN : RED, 3);

        if( blinkOutput )
            bitwise_not(view, view);
        //! [output_text]

        //------------------------- Video capture output undistorted ------------------------------
        //! [output_undistorted]
        if( mode == CALIBRATED && s.showUndistorted )
        {
            Mat temp = view.clone();
            if (s.useFisheye)
            {
                Mat newCamMat;
                fisheye::estimateNewCameraMatrixForUndistortRectify(cameraMatrix, distCoeffs, imageSize,
                                                                    Matx33d::eye(), newCamMat, 1);
                cv::fisheye::undistortImage(temp, view, cameraMatrix, distCoeffs, newCamMat);
            }
            else
                undistort(temp, view, cameraMatrix, distCoeffs);
        }
        //! [output_undistorted]

        //------------------------------ Show image and check for input commands -------------------
        //! [await_input]
        namedWindow("Image View ", WINDOW_NORMAL);
        imshow("Image View ",view); // show the detected corners
        waitKey(50);
        cout << "Processing image!" << endl;
        char key = (char)waitKey(s.inputCapture.isOpened() ? 50 : s.delay);

        if( key == ESC_KEY )
            break;

        if( key == 'u' && mode == CALIBRATED )
            s.showUndistorted = !s.showUndistorted;

        if( s.inputCapture.isOpened() && key == 'g' )
        {
            mode = CAPTURING;
            imagePoints.clear();
        }
        //! [await_input]
    } // end of the for loop

    // -----------------------Show the undistorted image for the image list ------------------------
    //! [show_results]
    if( s.inputType == Settings::IMAGE_LIST && s.showUndistorted && !cameraMatrix.empty())
    {
        Mat view, rview, map1, map2;

        if (s.useFisheye)
        {
            Mat newCamMat;
            fisheye::estimateNewCameraMatrixForUndistortRectify(cameraMatrix, distCoeffs, imageSize,
                                                                Matx33d::eye(), newCamMat, 1);
            fisheye::initUndistortRectifyMap(cameraMatrix, distCoeffs, Matx33d::eye(), newCamMat, imageSize,
                                             CV_16SC2, map1, map2);
        }
        else
        {
            initUndistortRectifyMap(
                cameraMatrix, distCoeffs, Mat(),
                getOptimalNewCameraMatrix(cameraMatrix, distCoeffs, imageSize, 1, imageSize, 0), imageSize,
                CV_16SC2, map1, map2);
        }

        for(size_t i = 0; i < s.imageList.size(); i++ )
        {
            view = imread(s.imageList[i], IMREAD_COLOR);
            if(view.empty())
                continue;
            remap(view, rview, map1, map2, INTER_LINEAR);
            imshow("Calibration View", rview);
            waitKey(500);
            char c = (char)waitKey();
            if( c == ESC_KEY || c == 'q' || c == 'Q' )
                break;
        }
    }
    //! [show_results]

    return 0;
}

//! [compute_errors]
static double computeReprojectionErrors( const vector<vector<Point3f> >& objectPoints,
                                         const vector<vector<Point2f> >& imagePoints,
                                         const vector<Mat>& rvecs, const vector<Mat>& tvecs,
                                         const Mat& cameraMatrix , const Mat& distCoeffs,
                                         vector<float>& perViewErrors, bool fisheye)
{
    vector<Point2f> imagePoints2;
    size_t totalPoints = 0;
    double totalErr = 0, err;
    perViewErrors.resize(objectPoints.size());

    for(size_t i = 0; i < objectPoints.size(); ++i )
    {
        if (fisheye)
        {
            fisheye::projectPoints(objectPoints[i], imagePoints2, rvecs[i], tvecs[i], cameraMatrix,
                                   distCoeffs);
        }
        else
        {
            projectPoints(objectPoints[i], rvecs[i], tvecs[i], cameraMatrix, distCoeffs, imagePoints2);
        }
        err = norm(imagePoints[i], imagePoints2, NORM_L2);

        size_t n = objectPoints[i].size();
        perViewErrors[i] = (float) std::sqrt(err*err/n);
        totalErr += err*err;
        totalPoints += n;
    }

    return std::sqrt(totalErr/totalPoints);
}
//! [compute_errors]

//! [board_corners]
static void calcBoardCornerPositions(Size boardSize, float squareSize, vector<Point3f>& corners,
                                     Settings::Pattern patternType /*= Settings::CHESSBOARD*/)
{
    corners.clear();

    switch(patternType)
    {
    case Settings::CHESSBOARD:
    case Settings::CIRCLES_GRID:
        for( int i = 0; i < boardSize.height; ++i )
            for( int j = 0; j < boardSize.width; ++j )
                corners.push_back(Point3f(j*squareSize, i*squareSize, 0));
        break;

    case Settings::ASYMMETRIC_CIRCLES_GRID:
        for( int i = 0; i < boardSize.height; i++ )
            for( int j = 0; j < boardSize.width; j++ )
                corners.push_back(Point3f((2*j + i % 2)*squareSize, i*squareSize, 0));
        break;

    default:
        break;
    }
}
//! [board_corners]

static bool runCalibration( Settings& s, Size& imageSize, Mat& cameraMatrix, Mat& distCoeffs,
                            vector<vector<Point2f> > imagePoints, vector<Mat>& rvecs, vector<Mat>& tvecs,
                            vector<float>& reprojErrs, double& totalAvgErr, vector<Point3f>& newObjPoints,
                            float grid_width, bool release_object)
{
    //! [fixed_aspect]
    cameraMatrix = Mat::eye(3, 3, CV_64F);
    if( !s.useFisheye && s.flag & CALIB_FIX_ASPECT_RATIO )
        cameraMatrix.at<double>(0,0) = s.aspectRatio;
    //! [fixed_aspect]

    if (s.useFisheye)
    {
        distCoeffs = Mat::zeros(4, 1, CV_64F);
    }
    else
    {
        distCoeffs = Mat::zeros(8, 1, CV_64F);
    }

    vector<vector<Point3f> > objectPoints(1);
    calcBoardCornerPositions(s.boardSize, s.squareSize, objectPoints[0], s.calibrationPattern);
    objectPoints[0][s.boardSize.width - 1].x = objectPoints[0][0].x + grid_width;
    newObjPoints = objectPoints[0];

    objectPoints.resize(imagePoints.size(),objectPoints[0]);

    //Find intrinsic and extrinsic camera parameters
    double rms;

    if (s.useFisheye)
    {
        Mat _rvecs, _tvecs;
        rms = fisheye::calibrate(objectPoints, imagePoints, imageSize, cameraMatrix, distCoeffs, _rvecs,
                                 _tvecs, s.flag);

        rvecs.reserve(_rvecs.rows);
        tvecs.reserve(_tvecs.rows);
        for(int i = 0; i < int(objectPoints.size()); i++)
        {
            rvecs.push_back(_rvecs.row(i));
            tvecs.push_back(_tvecs.row(i));
        }
    }
    else
    {
        int iFixedPoint = -1;
        if (release_object)
            iFixedPoint = s.boardSize.width - 1;
        rms = calibrateCameraRO(objectPoints, imagePoints, imageSize, iFixedPoint,
                                cameraMatrix, distCoeffs, rvecs, tvecs, newObjPoints,
                                s.flag | CALIB_USE_LU);
    }

    if (release_object)
    {
        cout << "New board corners: " << endl;
        cout << newObjPoints[0] << endl;
        cout << newObjPoints[s.boardSize.width - 1] << endl;
        cout << newObjPoints[s.boardSize.width * (s.boardSize.height - 1)] << endl;
        cout << newObjPoints.back() << endl;
    }

    cout << "Re-projection error reported by calibrateCamera: "<< rms << endl;

    bool ok = checkRange(cameraMatrix) && checkRange(distCoeffs);

    objectPoints.clear();
    objectPoints.resize(imagePoints.size(), newObjPoints);
    totalAvgErr = computeReprojectionErrors(objectPoints, imagePoints, rvecs, tvecs, cameraMatrix,
                                            distCoeffs, reprojErrs, s.useFisheye);

    return ok;
}

// Print camera parameters to the output file
static void saveCameraParams( Settings& s, Size& imageSize, Mat& cameraMatrix, Mat& distCoeffs,
                              const vector<Mat>& rvecs, const vector<Mat>& tvecs,
                              const vector<float>& reprojErrs, const vector<vector<Point2f> >& imagePoints,
                              double totalAvgErr, const vector<Point3f>& newObjPoints )
{
    FileStorage fs( s.outputFileName, FileStorage::WRITE );

    time_t tm;
    time( &tm );
    struct tm *t2 = localtime( &tm );
    char buf[1024];
    strftime( buf, sizeof(buf), "%c", t2 );

    fs << "calibration_time" << buf;

    if( !rvecs.empty() || !reprojErrs.empty() )
        fs << "nr_of_frames" << (int)std::max(rvecs.size(), reprojErrs.size());
    fs << "image_width" << imageSize.width;
    fs << "image_height" << imageSize.height;
    fs << "board_width" << s.boardSize.width;
    fs << "board_height" << s.boardSize.height;
    fs << "square_size" << s.squareSize;

    if( !s.useFisheye && s.flag & CALIB_FIX_ASPECT_RATIO )
        fs << "fix_aspect_ratio" << s.aspectRatio;

    if (s.flag)
    {
        std::stringstream flagsStringStream;
        if (s.useFisheye)
        {
            flagsStringStream << "flags:"
                << (s.flag & fisheye::CALIB_FIX_SKEW ? " +fix_skew" : "")
                << (s.flag & fisheye::CALIB_FIX_K1 ? " +fix_k1" : "")
                << (s.flag & fisheye::CALIB_FIX_K2 ? " +fix_k2" : "")
                << (s.flag & fisheye::CALIB_FIX_K3 ? " +fix_k3" : "")
                << (s.flag & fisheye::CALIB_FIX_K4 ? " +fix_k4" : "")
                << (s.flag & fisheye::CALIB_RECOMPUTE_EXTRINSIC ? " +recompute_extrinsic" : "");
        }
        else
        {
            flagsStringStream << "flags:"
                << (s.flag & CALIB_USE_INTRINSIC_GUESS ? " +use_intrinsic_guess" : "")
                << (s.flag & CALIB_FIX_ASPECT_RATIO ? " +fix_aspectRatio" : "")
                << (s.flag & CALIB_FIX_PRINCIPAL_POINT ? " +fix_principal_point" : "")
                << (s.flag & CALIB_ZERO_TANGENT_DIST ? " +zero_tangent_dist" : "")
                << (s.flag & CALIB_FIX_K1 ? " +fix_k1" : "")
                << (s.flag & CALIB_FIX_K2 ? " +fix_k2" : "")
                << (s.flag & CALIB_FIX_K3 ? " +fix_k3" : "")
                << (s.flag & CALIB_FIX_K4 ? " +fix_k4" : "")
                << (s.flag & CALIB_FIX_K5 ? " +fix_k5" : "");
        }
        fs.writeComment(flagsStringStream.str());
    }

    fs << "flags" << s.flag;

    fs << "fisheye_model" << s.useFisheye;

    fs << "camera_matrix" << cameraMatrix;
    fs << "distortion_coefficients" << distCoeffs;

    fs << "avg_reprojection_error" << totalAvgErr;
    if (s.writeExtrinsics && !reprojErrs.empty())
        fs << "per_view_reprojection_errors" << Mat(reprojErrs);

    if(s.writeExtrinsics && !rvecs.empty() && !tvecs.empty() )
    {
        CV_Assert(rvecs[0].type() == tvecs[0].type());
        Mat bigmat((int)rvecs.size(), 6, CV_MAKETYPE(rvecs[0].type(), 1));
        bool needReshapeR = rvecs[0].depth() != 1 ? true : false;
        bool needReshapeT = tvecs[0].depth() != 1 ? true : false;

        for( size_t i = 0; i < rvecs.size(); i++ )
        {
            Mat r = bigmat(Range(int(i), int(i+1)), Range(0,3));
            Mat t = bigmat(Range(int(i), int(i+1)), Range(3,6));

            if(needReshapeR)
                rvecs[i].reshape(1, 1).copyTo(r);
            else
            {
                //*.t() is MatExpr (not Mat) so we can use assignment operator
                CV_Assert(rvecs[i].rows == 3 && rvecs[i].cols == 1);
                r = rvecs[i].t();
            }

            if(needReshapeT)
                tvecs[i].reshape(1, 1).copyTo(t);
            else
            {
                CV_Assert(tvecs[i].rows == 3 && tvecs[i].cols == 1);
                t = tvecs[i].t();
            }
        }
        fs.writeComment("a set of 6-tuples (rotation vector + translation vector) for each view");
        fs << "extrinsic_parameters" << bigmat;
    }

    if(s.writePoints && !imagePoints.empty() )
    {
        Mat imagePtMat((int)imagePoints.size(), (int)imagePoints[0].size(), CV_32FC2);
        for( size_t i = 0; i < imagePoints.size(); i++ )
        {
            Mat r = imagePtMat.row(int(i)).reshape(2, imagePtMat.cols);
            Mat imgpti(imagePoints[i]);
            imgpti.copyTo(r);
        }
        fs << "image_points" << imagePtMat;
    }

    if( s.writeGrid && !newObjPoints.empty() )
    {
        fs << "grid_points" << newObjPoints;
    }
}

//! [run_and_save]
bool runCalibrationAndSave(Settings& s, Size imageSize, Mat& cameraMatrix, Mat& distCoeffs,
                           vector<vector<Point2f> > imagePoints, float grid_width, bool release_object)
{
    vector<Mat> rvecs, tvecs;
    vector<float> reprojErrs;
    double totalAvgErr = 0;
    vector<Point3f> newObjPoints;

    bool ok = runCalibration(s, imageSize, cameraMatrix, distCoeffs, imagePoints, rvecs, tvecs, reprojErrs,
                             totalAvgErr, newObjPoints, grid_width, release_object);
    cout << (ok ? "Calibration succeeded" : "Calibration failed")
         << ". avg re projection error = " << totalAvgErr << endl;

    if (ok)
        saveCameraParams(s, imageSize, cameraMatrix, distCoeffs, rvecs, tvecs, reprojErrs, imagePoints,
                         totalAvgErr, newObjPoints);
    return ok;
}
//! [run_and_save]

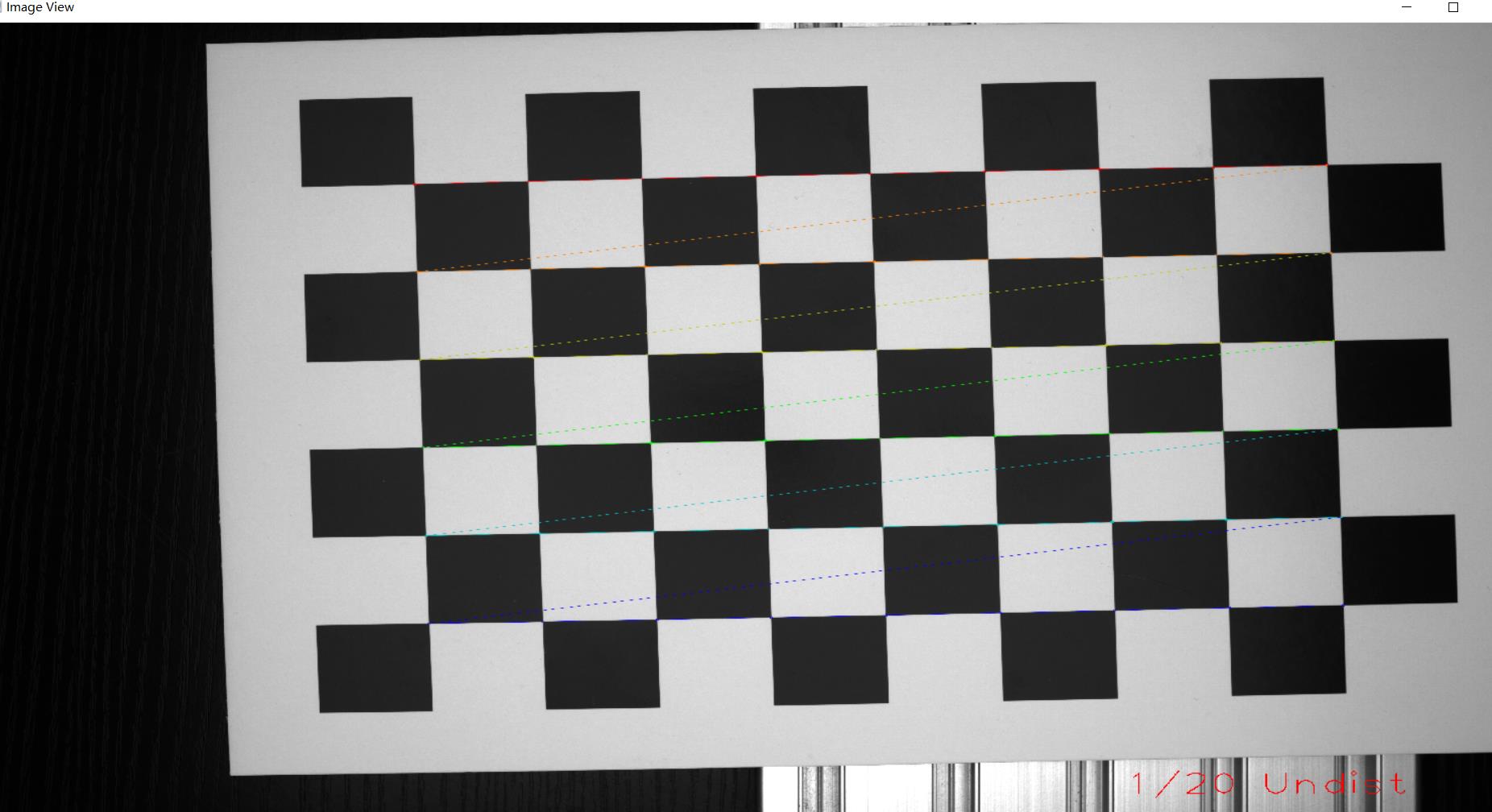

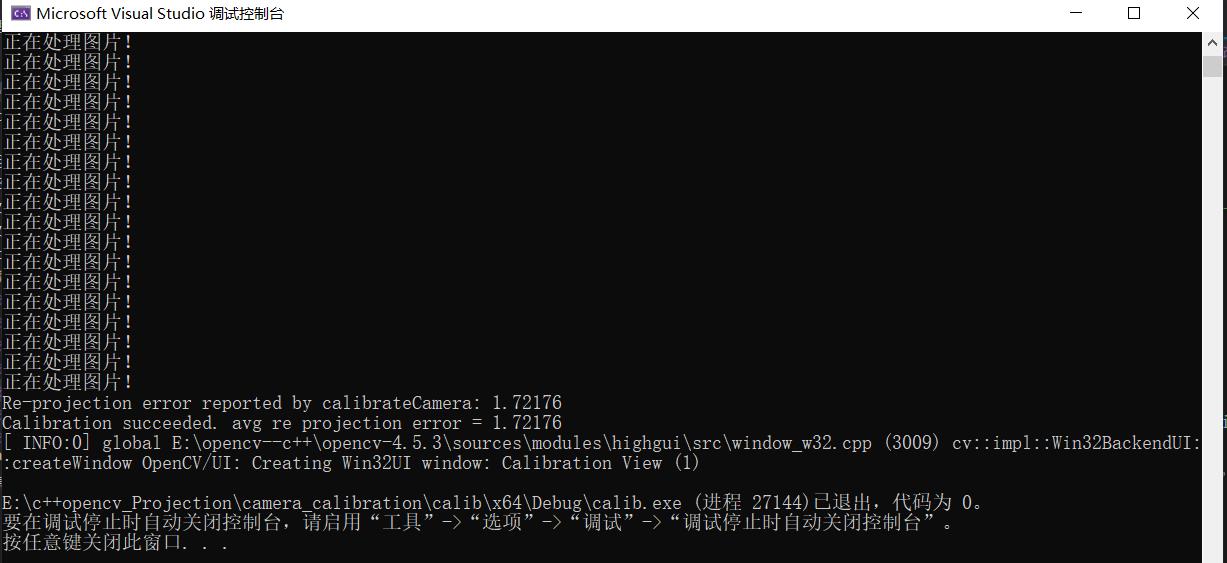

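Apart from the class wrapper, the code above mostly comes down to the last few functions calling one another, so it is fairly simple once that is understood. A screenshot of a run is included.

The program reports an overall reprojection error of 1.72 pixels, considerably higher than the 0.59 obtained with MATLAB. To get a smaller reprojection error, the source code, and the corner-detection part in particular, needs to be modified.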
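For reference, the overall reprojection error is a root-mean-square distance between the detected corners and the corners reprojected with the estimated parameters, pooled over all views. The sketch below shows that computation on plain structs. `Point2`, `squaredError` and `overallRms` are illustrative names, and the sketch assumes the reprojected points are already available and match the detected points one to one.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Plain 2D point; a stand-in for cv::Point2f (assumption for this sketch).
struct Point2 {
    float x, y;
};

// Sum of squared distances between detected and reprojected corners of one view.
double squaredError(const std::vector<Point2>& detected, const std::vector<Point2>& projected) {
    double sum = 0.0;
    for (std::size_t k = 0; k < detected.size(); ++k) {
        const double dx = detected[k].x - projected[k].x;
        const double dy = detected[k].y - projected[k].y;
        sum += dx * dx + dy * dy;
    }
    return sum;
}

// Overall RMS error: pool the squared errors of all views, divide by the
// total number of points, then take the square root.
double overallRms(const std::vector<std::vector<Point2>>& detected,
                  const std::vector<std::vector<Point2>>& projected) {
    double total = 0.0;
    std::size_t points = 0;
    for (std::size_t i = 0; i < detected.size(); ++i) {
        total += squaredError(detected[i], projected[i]);
        points += detected[i].size();
    }
    return points ? std::sqrt(total / points) : 0.0;
}
```

Because the errors are squared before averaging, a few badly detected corners can raise the overall figure noticeably, which is one reason to inspect the per-view errors written to the output file.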
