阿旭机器学习实战33中文文本分类之情感分析--朴素贝叶斯KNN逻辑回归

Posted 阿_旭

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了阿旭机器学习实战33中文文本分类之情感分析--朴素贝叶斯KNN逻辑回归相关的知识,希望对你有一定的参考价值。

【阿旭机器学习实战】系列文章主要介绍机器学习的各种算法模型及其实战案例,欢迎点赞,关注共同学习交流。

目录

1.查看原始数据结构

关注GZH:阿旭算法与机器学习,回复:“ML33”即可获取本文数据集、源码与项目文档

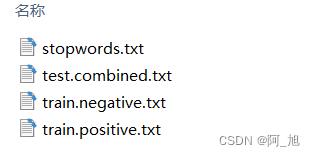

数据集共有4个文件:

stopwords.txt为停用词文件;

train.negative.txt为训练用负面数据文件;

train.positive.txt为训练用正面数据文件;

test.combined.txxt为测试用数据文件。

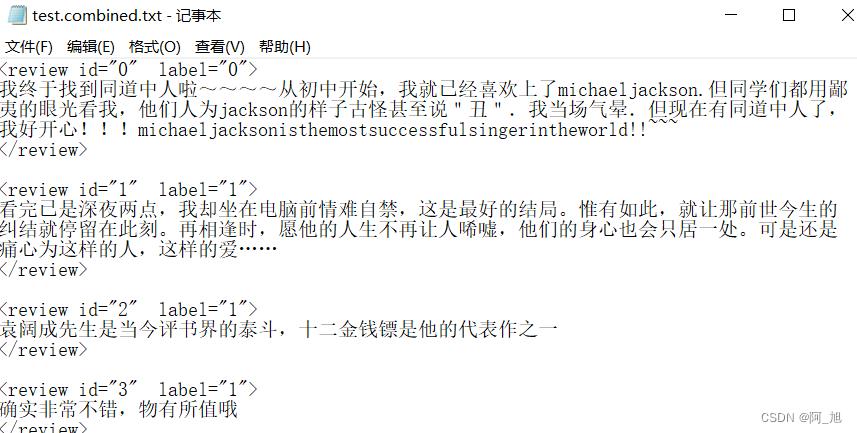

文件内容如下:

2.导入数据并进行数据处理

from matplotlib import pyplot as plt

import jieba # 分词

import re # 正则

from sklearn.feature_extraction.text import TfidfVectorizer

import numpy as np

2.1 提取数据与标签

def read_data(path, is_pos=None):

"""

给定文件的路径,读取文件

path: path to the data

is_pos: 是否数据是postive samples.

return: (list of review texts, list of labels)

"""

reviews, labels = [], []

with open(path, 'r',encoding='utf-8') as file:

review_start = False

review_text = []

for line in file:

line = line.strip()

if not line: continue

if not review_start and line.startswith("<review"):

review_start = True

if "label" in line:

labels.append(int(line.split('"')[-2]))

continue

if review_start and line == "</review>":

review_start = False

reviews.append(" ".join(review_text))

review_text = []

continue

if review_start:

review_text.append(line)

if is_pos:

labels = [1]*len(reviews)

elif not is_pos is None:

labels = [0]*len(reviews)

return reviews, labels

def process_file():

"""

读取训练数据和测试数据,并对它们做一些预处理

"""

train_pos_file = "data_sentiment/train.positive.txt"

train_neg_file = "data_sentiment/train.negative.txt"

test_comb_file = "data_sentiment/test.combined.txt"

# 读取文件部分,把具体的内容写入到变量里面

train_pos_cmts, train_pos_lbs = read_data(train_pos_file, True)

train_neg_cmts, train_neg_lbs = read_data(train_neg_file, False)

train_comments = train_pos_cmts + train_neg_cmts

train_labels = train_pos_lbs + train_neg_lbs

test_comments, test_labels = read_data(test_comb_file)

return train_comments, train_labels, test_comments, test_labels

train_comments, train_labels, test_comments, test_labels = process_file()

train_comments[:5]

['发短信特别不方便!背后的屏幕很大用起来不舒服,是手触屏的!切换屏幕很麻烦!',

'手感超好,而且黑色相比白色在转得时候不容易眼花,找童年的记忆啦。',

'!!!!!',

'先付款的 有信用',

'价格 质量 售后 都很满意']

# 训练数据和测试数据大小

print (len(train_comments), len(test_comments))

print (train_comments[1], train_labels[1])

8064 2500

手感超好,而且黑色相比白色在转得时候不容易眼花,找童年的记忆啦。 1

2.2 过滤停用词

def load_stopwords(path):

"""

从外部文件中导入停用词

"""

stopwords = set()

with open(path, 'r',encoding='utf-8') as in_file:

for line in in_file:

stopwords.add(line.strip())

return stopwords

def clean_non_chinese_symbols(text):

"""

处理非中文字符

"""

text = re.sub('[!!]+', "!", text)

text = re.sub('[??]+', "?", text)

text = re.sub("[a-zA-Z#$%&\\'()*+,-./:;:<=>@,。★、…【】《》“”‘’[\\\\]^_`|~]+", " UNK ", text)

return re.sub("\\s+", " ", text)

def clean_numbers(text):

"""

处理数字符号 128 190 NUM

"""

return re.sub("\\d+", ' NUM ', text)

def preprocess_text(text, stopwords):

"""

文本的预处理过程

"""

text = clean_non_chinese_symbols(text)

text = clean_numbers(text)

text = " ".join([term for term in jieba.cut(text) if term and not term in stopwords])

return text

path_stopwords = "./data_sentiment/stopwords.txt"

stopwords = load_stopwords(path_stopwords)

# 对于train_comments, test_comments进行字符串的处理,几个考虑的点:

# 1. 停用词过滤

# 2. 去掉特殊符号

# 3. 去掉数字(比如价格..)

# 4. ...

# 需要注意的点是,由于评论数据本身很短,如果去掉的太多,很可能字符串长度变成0

# 预处理部部分,可以自行选择合适的方案,只要注释就可以。

train_comments_new = [preprocess_text(comment, stopwords) for comment in train_comments]

test_comments_new = [preprocess_text(comment, stopwords) for comment in test_comments]

print (train_comments_new[0], test_comments_new[0])

发短信 特别 不 方便 ! 背后 屏幕 很大 起来 不 舒服 UNK 手触 屏 ! 切换 屏幕 很 麻烦 ! 终于 找到 同道中人 初中 UNK 已经 喜欢 上 UNK 同学 都 鄙夷 眼光 看 UNK 人为 UNK 样子 古怪 说 " 丑 " 当场 气晕 现在 同道中人 UNK 好开心 ! UNK ! UNK

2.3 TfidfVectorizer将文本向量化

# 利用tf-idf从文本中提取特征,写到数组里面.

# 参考:https://scikit-learn.org/stable/modules/generated/sklearn.feature_extraction.text.TfidfVectorizer.html

tfidf = TfidfVectorizer()

X_train = tfidf.fit_transform(train_comments_new) # 训练数据的特征

y_train = train_labels # 训练数据的label

X_test = tfidf.transform(test_comments_new) # 测试数据的特征

y_test = test_labels# 测试数据的label

print (np.shape(X_train), np.shape(X_test), np.shape(y_train), np.shape(y_test))

(8064, 23101) (2500, 23101) (8064,) (2500,)

3.利用不同模型进行训练与评估

3.1 朴素贝叶斯模型

from sklearn.naive_bayes import MultinomialNB

from sklearn.metrics import accuracy_score

clf = MultinomialNB()

# 利用朴素贝叶斯做训练

clf.fit(X_train, y_train)

y_pred = clf.predict(X_test)

print("accuracy on test data: ", accuracy_score(y_test, y_pred))

accuracy on test data: 0.6368

3.2 k近邻模型

from sklearn.neighbors import KNeighborsClassifier

clf = KNeighborsClassifier(n_neighbors=1)

clf.fit(X_train, y_train)

y_pred = clf.predict(X_test)

print("accuracy on test data: ", accuracy_score(y_test, y_pred))

accuracy on test data: 0.524

3.3 逻辑回归模型

from sklearn.linear_model import LogisticRegression

clf = LogisticRegression(solver='liblinear')

clf.fit(X_train, y_train)

y_pred = clf.predict(X_test)

print("accuracy on test data: ", accuracy_score(y_test, y_pred))

accuracy on test data: 0.7136

如果文章对你有帮助,感谢点赞+关注!

关注下方GZH:阿旭算法与机器学习,回复:“ML33”即可获取本文数据集、源码与项目文档,欢迎共同学习交流

以上是关于阿旭机器学习实战33中文文本分类之情感分析--朴素贝叶斯KNN逻辑回归的主要内容,如果未能解决你的问题,请参考以下文章