Kubernetes and Jenkins: Building a Jenkins Continuous Integration Platform on Kubernetes

Posted stan Z


Building a Jenkins continuous integration platform on Kubernetes (K8S)

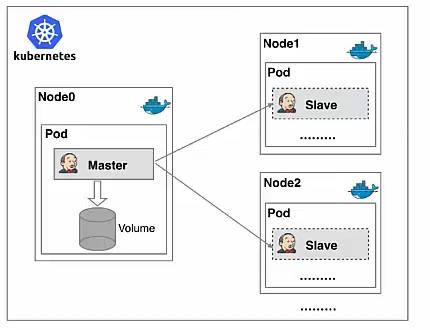

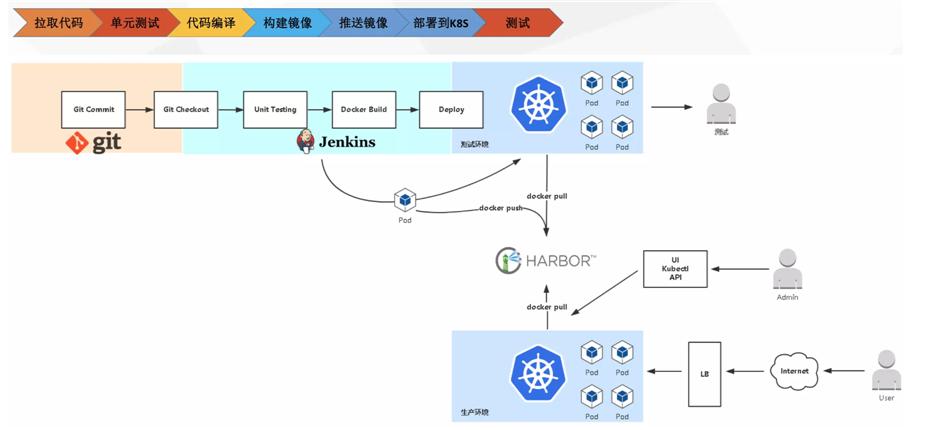

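Kubernetes + Docker + Jenkins continuous integration architecture

- Build the K8S cluster

- Jenkins calls the K8S API

- A Jenkins Slave pod is created dynamically

- The Slave pod pulls the code from Git, compiles it, and builds the image

- The image is pushed to the Harbor registry

- When the Slave finishes its work, the pod is destroyed automatically

- The application is deployed to the test or production Kubernetes platform

Benefits of the Kubernetes + Docker + Jenkins continuous integration approach

- High availability

  - When the Jenkins Master fails, Kubernetes automatically creates a new Jenkins Master container and attaches the Volume to it, so no data is lost and the service stays highly available.

- Dynamic scaling and efficient resource use

  - Each time a Job runs, a Jenkins Slave is created automatically. When the Job completes, the Slave deregisters itself, its container is deleted, and the resources are released.

  - Kubernetes also schedules each Slave onto a node with spare capacity based on current resource usage, which reduces the chance of Jobs queuing on a node that is already heavily loaded.

- Good scalability

  - When the Kubernetes cluster runs so short of resources that Jobs queue up, adding another Kubernetes Node to the cluster is straightforward.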

Installing Kubernetes with kubeadm

For more details on K8S, see: Kubernetes

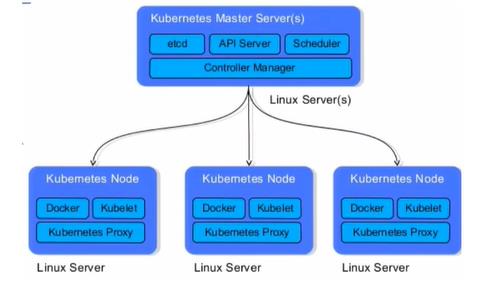

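Kubernetes architecture

- API Server: exposes the Kubernetes API. Every request that operates on a resource goes through the interface provided by kube-apiserver.

- Etcd: the default storage system used by Kubernetes. It holds all cluster data, so plan backups for the etcd data.

- Controller-Manager: the management and control center inside the cluster. It manages Nodes, Pod replicas, service endpoints (Endpoint), namespaces (Namespace), service accounts (ServiceAccount), and resource quotas (ResourceQuota). When a Node goes down unexpectedly, the Controller Manager detects it and runs the automated repair process so that the cluster stays in its expected state.

- Scheduler: watches for newly created Pods that have not been assigned to a Node and selects a Node for each of them.

- Kubelet: maintains the container lifecycle and also manages Volumes and networking.

- Kube proxy: a core Kubernetes component deployed on every Node. It implements the communication and load-balancing mechanism behind Kubernetes Services.

Preparing the installation environment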

| Host | IP | Installed software |
|---|---|---|
| k8s-master | 192.168.188.116 | kube-apiserver, kube-controller-manager, kube-scheduler, docker, etcd, calico, NFS |
| k8s-node1 | 192.168.188.117 | kubelet, kube-proxy, Docker |
| k8s-node2 | 192.168.188.118 | kubelet, kube-proxy, Docker |
| Harbor server | 192.168.188.119 | Harbor |
| GitLab server | 192.168.188.120 | GitLab |

Complete the following steps on all three K8S servers.

Disable the firewall

systemctl stop firewalld

systemctl disable firewalld

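Disable SELinux

# Temporarily (until the next reboot)

setenforce 0

# Permanently (takes effect after a reboot)

sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

Disable swap

# Temporarily

swapoff -a

# Permanently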

sed -ri 's/.*swap.*/#&/' /etc/fstab
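The permanent step comments out every line of /etc/fstab that contains the word swap. A minimal sketch for previewing that substitution on a scratch file before touching the real one is shown here; the function name `comment_out_swap` and the sample fstab entries are made up for illustration, and `-E` is used as the portable spelling of GNU sed's `-r`.

```shell
# Preview what the swap-disabling sed expression does without editing /etc/fstab.
# comment_out_swap is a hypothetical helper; the sample entries are invented.
comment_out_swap() {
  # Same substitution as in the article, but without -i: the result is only
  # printed, and the file passed as $1 is left unchanged.
  sed -E 's/.*swap.*/#&/' "$1"
}

sample_fstab="$(mktemp)"
cat > "$sample_fstab" << 'EOF'
/dev/mapper/centos-root /     xfs  defaults 0 0
/dev/mapper/centos-swap swap  swap defaults 0 0
EOF

# Only the second line comes back with a leading '#'.
comment_out_swap "$sample_fstab"
rm -f "$sample_fstab"
```

Because the pattern matches any line containing swap, running the in-place version twice prefixes an already commented line with a second `#`. That is harmless, but worth knowing when re-running the setup.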

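# Set the hostname according to the plan (run on the master node)

hostnamectl set-hostname master

# Set the hostname according to the plan (run on node1)

hostnamectl set-hostname node1

# Set the hostname according to the plan (run on node2)

hostnamectl set-hostname node2

Add the IP addresses to /etc/hosts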

cat >> /etc/hosts << EOF

192.168.188.116 master

192.168.188.117 node1

192.168.188.118 node2

EOF
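Appending with `cat >>` adds the same three lines again each time the step is repeated. One possible way to make it repeatable is sketched below, assuming a hypothetical helper `add_host` and a `HOSTS_FILE` variable that defaults to a scratch file so the sketch can be tried safely; neither name comes from the original article.

```shell
# Append an "IP hostname" pair only if the hostname is not listed yet.
# HOSTS_FILE and add_host are names invented for this sketch; set
# HOSTS_FILE=/etc/hosts to apply it on a real node.
HOSTS_FILE="${HOSTS_FILE:-$(mktemp)}"

add_host() {
  local ip="$1" name="$2"
  # -F: fixed string, -w: whole word (node1 does not match node10), -q: quiet
  if ! grep -qwF -- "$name" "$HOSTS_FILE"; then
    printf '%s %s\n' "$ip" "$name" >> "$HOSTS_FILE"
  fi
}

add_host 192.168.188.116 master
add_host 192.168.188.117 node1
add_host 192.168.188.118 node2
add_host 192.168.188.117 node1   # repeated call: nothing is appended
cat "$HOSTS_FILE"
```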

Set kernel parameters

Enable IP forwarding and make bridged traffic pass through iptables and ip6tables

cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

vm.swappiness = 0

EOF

Apply the settings. If sysctl reports the net.bridge keys as missing, load the br_netfilter module first (modprobe br_netfilter).

sysctl -p /etc/sysctl.d/k8s.conf

Prerequisites for running kube-proxy in IPVS mode

cat > /etc/sysconfig/modules/ipvs.modules <<EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4
EOF

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4
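The grep at the end of that command only shows what is loaded; it does not say which of the five modules is missing. A small sketch that reports the missing ones follows. The function name `missing_ipvs_modules` is made up, and reading lsmod-style text from stdin (instead of calling lsmod inside the function) is a choice made here so the function can be tried on sample text.

```shell
# Print every required module that does not appear in lsmod-style input on stdin.
# missing_ipvs_modules is a hypothetical helper written for this sketch.
missing_ipvs_modules() {
  local loaded required mod
  required="ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4"
  # The first column of lsmod output is the module name.
  loaded="$(awk '{print $1}')"
  for mod in $required; do
    # -x: the whole line must equal the module name
    if ! printf '%s\n' "$loaded" | grep -qx -- "$mod"; then
      printf '%s\n' "$mod"
    fi
  done
}

# On a real node: lsmod | missing_ipvs_modules
# Invented sample input in which ip_vs_sh has not been loaded:
printf '%s\n' \
  'Module                  Size  Used by' \
  'ip_vs_wrr              12697  0' \
  'ip_vs_rr               12600  0' \
  'ip_vs                 145497  4 ip_vs_rr,ip_vs_wrr' \
  'nf_conntrack_ipv4      15053  2' | missing_ipvs_modules
# prints: ip_vs_sh
```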

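Install Docker, kubelet, kubeadm, and kubectl

Install Docker, kubeadm, and kubelet on every node. In the Kubernetes version used here (1.18), the default CRI (container runtime) is Docker, so install Docker first.

Install Docker

First, configure the Aliyun yum repository for Docker.

Install the required package

yum install -y yum-utils

Set up the repository (the Aliyun mirror is used here)

yum-config-manager \
    --add-repo \
    http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Update the yum package index

yum makecache fast

Install Docker CE (the community edition)

yum -y install docker-ce

Check the version

docker version

Start Docker now and enable it at boot

systemctl enable docker --now

Configure a registry mirror for Docker

mkdir -p /etc/docker

The address below is my own Aliyun accelerator. Everyone's address is different; look yours up in the Aliyun console.

tee /etc/docker/daemon.json <<-EOF
{
  "registry-mirrors": ["https://m0rsqupc.mirror.aliyuncs.com"]
}
EOF

Verify

[root@master ~]# cat /etc/docker/daemon.json
{
  "registry-mirrors": ["https://m0rsqupc.mirror.aliyuncs.com"]
}

Then restart Docker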

systemctl restart docker

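Install kubelet, kubeadm, and kubectl

- kubeadm: the command used to bootstrap the cluster

- kubelet: runs on every node in the cluster and starts pods and containers

- kubectl: the command-line tool for talking to the cluster

Add the Kubernetes package repository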

cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

Install kubelet, kubeadm, and kubectl, pinning the version

yum install -y kubelet-1.18.0 kubeadm-1.18.0 kubectl-1.18.0

systemctl enable kubelet --now

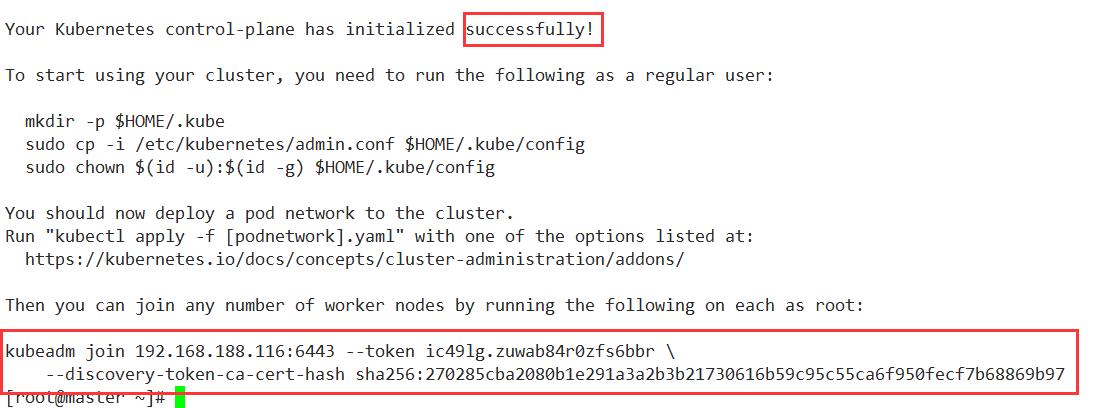

Deploy the Kubernetes Master (on the master node)

kubeadm init --kubernetes-version=v1.18.0 --apiserver-advertise-address=192.168.188.116 --image-repository registry.aliyuncs.com/google_containers --service-cidr=10.96.0.0/12 --pod-network-cidr=10.244.0.0/16

The default image registry, k8s.gcr.io, is not reachable from mainland China, so the Aliyun mirror registry is specified here. The command takes a while to run because it pulls the images in the background.

Run docker images to see the images that have been pulled.

If the Kubernetes images are listed, they were pulled successfully.

The part of the kubeadm init output circled in red is the command used below to join the worker nodes:

kubeadm join 192.168.188.116:6443 --token ic49lg.zuwab84r0zfs6bbr \
    --discovery-token-ca-cert-hash sha256:270285cba2080b1e291a3a2b3b21730616b59c95c55ca6f950fecf7b68869b97

Set up the kubectl tool

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

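List the running nodes

kubectl get nodes

One master node is now running, but it is still in the NotReady state.

Install Calico

Calico is a network plugin. It provides the pod network that lets pods on the master and on the worker nodes communicate with each other.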

mkdir k8s

cd k8s

wget https://docs.projectcalico.org/v3.10/getting-started/kubernetes/installation/hosted/kubernetes-datastore/calico-networking/1.7/calico.yaml

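sed -i 's/192.168.0.0/10.244.0.0/g' calico.yaml

The sed command changes Calico's default pod address pool to the 10.244.0.0/16 range passed to kubeadm init via --pod-network-cidr.

Install the Calico components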

kubectl apply -f calico.yaml

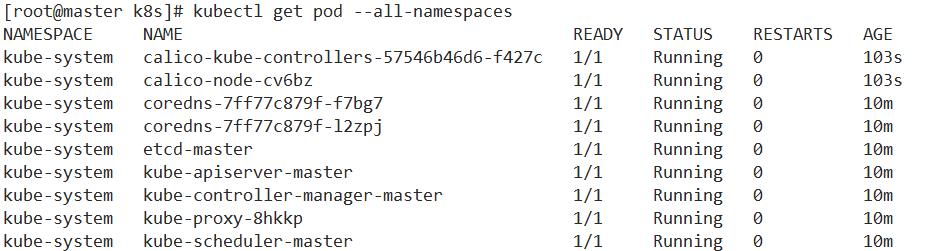

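Check the pods

kubectl get pod --all-namespaces

Every pod must show all of its containers as READY and a STATUS of Running.

Show more detail

kubectl get pod --all-namespaces -o wide

Join the Kubernetes Nodes (Slave nodes)

On the node1 and node2 servers, add each node to the cluster.

Run the kubeadm join command printed by kubeadm init.

The command below is generated when the master is initialized, and it is different for everyone. Copy the one from your own output.

kubeadm join 192.168.188.116:6443 --token ic49lg.zuwab84r0zfs6bbr \
    --discovery-token-ca-cert-hash sha256:270285cba2080b1e291a3a2b3b21730616b59c95c55ca6f950fecf7b68869b97

Check that kubelet is running

systemctl status kubelet

Then check the node information

kubectl get nodes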

[root@master k8s]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 17m v1.18.0

node1 Ready <none> 2m27s v1.18.0

node2 Ready <none> 2m25s v1.18.0

If every node's STATUS is Ready, the cluster has been set up successfully.
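The same check can be scripted rather than read by eye. The sketch below assumes the default column layout of `kubectl get nodes` (STATUS in the second column); the function name `all_nodes_ready` is made up, and it reads the table from stdin so it can be tried against the output shown above.

```shell
# Succeed only if there is at least one node row and every row has STATUS
# exactly "Ready". all_nodes_ready is a hypothetical helper for this sketch.
all_nodes_ready() {
  awk 'NR > 1 { seen = 1; if ($2 != "Ready") bad = 1 }
       END    { exit (seen && !bad) ? 0 : 1 }'
}

# On the master: kubectl get nodes | all_nodes_ready && echo "cluster ready"
# Tried here against the output listed above:
printf '%s\n' \
  'NAME     STATUS   ROLES    AGE     VERSION' \
  'master   Ready    master   17m     v1.18.0' \
  'node1    Ready    <none>   2m27s   v1.18.0' \
  'node2    Ready    <none>   2m25s   v1.18.0' | all_nodes_ready && echo "cluster ready"
```

The comparison is deliberately strict: a combined status such as Ready,SchedulingDisabled counts as not ready in this sketch.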

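Common kubectl commands

kubectl get nodes    # show the status of all master and worker nodes

kubectl get ns    # list all namespaces

kubectl get pods -n $nameSpace    # list the pods in the given namespace

kubectl describe pod <pod-name> -n $nameSpace    # show the details and events of a pod

kubectl logs --tail=1000 <pod-name> | less    # view a pod's logs

kubectl create -f xxx.yml    # create cluster resources from a configuration file

kubectl delete -f xxx.yml    # delete the cluster resources defined in a configuration file

kubectl delete pod <pod-name> -n $nameSpace    # delete a pod by name

kubectl get service -n $nameSpace    # list the Services in the given namespace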

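Building the Jenkins continuous integration platform on Kubernetes

- Create a Jenkins master node on top of K8S

- Create Jenkins slave nodes on K8S as well, to build our projects

Install and configure NFS

NFS (Network File System) lets different machines and different operating systems share files with each other over the network. Here NFS is used to share the configuration files Jenkins runs with, the Maven repository dependencies, and so on.

The NFS server is installed on the K8S master node.

Install the NFS package (required on every K8S node)

yum install -y nfs-utils

Create the shared directory (only on the master node)

mkdir -p /opt/nfs/jenkins

Write the NFS export configuration

vim /etc/exports

/opt/nfs/jenkins *(rw,no_root_squash)

* opens the directory to all IP addresses, rw grants read-write access, and no_root_squash leaves root privileges unsquashed.

Start the service

systemctl enable nfs --now

List the NFS exports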

showmount -e 192.168.188.116

[root@node1 ~]# showmount -e 192.168.188.116

Export list for 192.168.188.116:

/opt/nfs/jenkins *

Install Jenkins-Master on Kubernetes

Create the NFS client provisioner

nfs-client-provisioner is a simple external NFS provisioner for Kubernetes. It does not provide NFS itself; it relies on an existing NFS server for storage.

This requires writing a few YAML files.

- StorageClass.yaml

  - The StorageClass for persistent storage

  - Run kubectl explain StorageClass to check the kind and version

  - The StorageClass is named managed-nfs-storage

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
provisioner: fuseim.pri/ifs # or choose another name; it must match the deployment's env PROVISIONER_NAME
parameters:
  archiveOnDelete: "true"

- deployment.yaml

  - Deploys the NFS client provisioner

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: nfs-client-provisioner
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: lizhenliang/nfs-client-provisioner:latest
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: fuseim.pri/ifs
            - name: NFS_SERVER
              value: 192.168.188.116
            - name: NFS_PATH
              value: /opt/nfs/jenkins/
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.188.116
            path: /opt/nfs/jenkins/

Finally, a permissions file, rbac.yaml

kind: ServiceAccount
apiVersion: v1
metadata:
  name: nfs-client-provisioner
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io

Create the pod

Put all three files in the same directory, then run:

kubectl create -f .

[root@master nfs-client]# kubectl create -f .

storageclass.storage.k8s.io/managed-nfs-storage created

serviceaccount/nfs-client-provisioner created

deployment.apps/nfs-client-provisioner created

clusterrole.rbac.authorization.k8s.io/nfs-client-provisioner-runner created

clusterrolebinding.rbac.authorization.k8s.io/run-nfs-client-provisioner created

role.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created

rolebinding.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created

Error from server (AlreadyExists): error when creating "rbac.yaml": serviceaccounts "nfs-client-provisioner" already exists

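The ServiceAccount is declared in both deployment.yaml and rbac.yaml, which is why the last line reports AlreadyExists. The error is harmless here.

Check the pod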

kubectl get pod

[root@master nfs-client]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nfs-client-provisioner-795b4df87d-zchrq 1/1 Running 0 2m4s

Install Jenkins-Master

Again, the Jenkins YAML files have to be written by hand.

- rbac.yaml

  - Permission settings that grant the Jenkins master the access it needs within K8S

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: jenkins
  namespace: kube-ops
rules:
  - apiGroups: ["extensions", "apps"]
    resources: ["deployments"]
    verbs: ["create", "delete", "get", "list", "watch", "patch", "update"]
  - apiGroups: [""]
    resources: ["services"]
    verbs: ["create", "delete", "get", "list", "watch", "patch", "update"]
  - apiGroups: [""]
    resources: ["pods"]
    verbs: ["create","delete","get","list","patch","update","watch"]
  - apiGroups: [""]
    resources: ["pods/exec"]
    verbs: ["create","delete","get","list","patch","update","watch"]
  - apiGroups: [""]
    resources: ["pods/log"]
    verbs: ["get","list","watch"]
  - apiGroups: [""]
    resources: ["secrets"]
    verbs: ["get"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: jenkins
  namespace: kube-ops
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: jenkins
subjects:
  - kind: ServiceAccount
    name: jenkins
    namespace: kube-ops
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: jenkinsClusterRole
  namespace: kube-ops
rules:
  - apiGroups: [""]
    resources: ["pods"]
    verbs: ["create","delete","get","list","patch","update","watch"]
  - apiGroups: [""]
    resources: ["pods/exec"]
    verbs: ["create","delete","get","list","patch","update","watch"]
  - apiGroups: [""]
    resources: ["pods/log"]
    verbs: ["get","list","watch"]
  - apiGroups: [""]
    resources: ["secrets"]
    verbs: ["get"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: jenkinsClusterRuleBinding
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: jenkinsClusterRole
subjects:
  - kind: ServiceAccount
    name: jenkins
    namespace: kube-ops

- ServiceAccount.yaml

  - The ServiceAccount used by the Jenkins master

apiVersion: v1
kind: ServiceAccount
metadata:
  name: jenkins
  namespace: kube-ops

- StatefulSet.yaml

  - Jenkins runs as a stateful application; this file references the NFS storage set up earlier

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: jenkins
  labels:
    name: jenkins
  namespace: kube-ops
spec:
  serviceName: jenkins
  selector:
    matchLabels:
      app: jenkins
  replicas: 1
  updateStrategy:
    type: RollingUpdate
  template:
    metadata:
      name: jenkins
      labels:
        app: jenkins
    spec:
      terminationGracePeriodSeconds: 10
      serviceAccountName: jenkins
      containers:
        - name: jenkins
          image: jenkins/jenkins:lts-alpine
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 8080
              name: web
              protocol: TCP
            - containerPort: 50000
              name: agent
              protocol: TCP
          resources:
            limits:
              cpu: 1
              memory: 1Gi
            requests:
              cpu: 0.5
              memory: 500Mi
          env:
            - name: LIMITS_MEMORY
              valueFrom:
                resourceFieldRef:
                  resource: limits.memory
                  divisor: 1Mi
            - name: JAVA_OPTS
              value: -Xmx$(LIMITS_MEMORY)m -XshowSettings:vm -Dhudson.slaves.NodeProvisioner.initialDelay=0 -Dhudson.slaves.NodeProvisioner.MARGIN=50 -Dhudson.slaves.NodeProvisioner.MARGIN0=0.85
          volumeMounts:
            - name: jenkins-home
              mountPath: /var/jenkins_home
          livenessProbe:
            httpGet:
              path: /login
              port: 8080
            initialDelaySeconds: 60
            timeoutSeconds: 5
            failureThreshold: 12
          readinessProbe:
            httpGet:
              path: /login
              port: 8080
            initialDelaySeconds: 60
            timeoutSeconds: 5
            failureThreshold: 12
      securityContext:
        fsGroup: 1000
  volumeClaimTemplates:
    - metadata:
        name: jenkins-home
      spec:
        storageClassName: "managed-nfs-storage"
        accessModes: [ "ReadWriteOnce" ]
        resources:
          requests:
            storage: 1Gi
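In the StatefulSet, LIMITS_MEMORY is filled from limits.memory with divisor 1Mi, and JAVA_OPTS then expands it into the -Xmx flag. The arithmetic for the values used in this file can be sketched as follows; `to_mebibytes` is a made-up helper that handles only the Gi and Mi suffixes, which is a simplification and not how Kubernetes itself parses quantities.

```shell
# Convert a memory quantity with a Gi or Mi suffix into whole mebibytes, the
# unit that `divisor: 1Mi` produces. to_mebibytes is a hypothetical helper and
# supports only these two suffixes.
to_mebibytes() {
  local q="$1"
  case "$q" in
    *Gi) echo $(( ${q%Gi} * 1024 )) ;;
    *Mi) echo "${q%Mi}" ;;
    *)   echo "unsupported quantity: $q" >&2; return 1 ;;
  esac
}

# limits.memory is 1Gi in the StatefulSet, so LIMITS_MEMORY becomes 1024.
limits_memory="$(to_mebibytes 1Gi)"
echo "-Xmx${limits_memory}m"
# prints: -Xmx1024m
```

With these values the JVM heap ceiling equals the container's memory limit, so changing limits.memory in the YAML changes the heap size with it.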

- Service.yaml

  - Exposes the Jenkins ports outside the cluster

apiVersion: v1
kind: Service
metadata:
  name: jenkins
  namespace: kube-ops
  labels:
    app: jenkins
spec:
  selector:
    app: jenkins
  type: NodePort
  ports:
