Neural Machine Translation: Achieving Open Vocabulary Neural MT

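End-to-end neural machine translation (NMT) is a new approach to machine translation that has emerged in recent years. Earlier posts introduced the basic RNN Encoder-Decoder architecture of NMT and the attention mechanism. The classic Encoder-Decoder architecture has an obvious problem, however: both the source and target sides use fixed-size vocabularies, so out-of-vocabulary (OOV) words are usually replaced by a single UNK token, and making the target vocabulary too large increases the computational cost of the softmax. Researchers have proposed several lines of work to address this problem.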
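To make the fixed-vocabulary problem concrete, here is a minimal Python sketch. It is not taken from any of the papers discussed; the toy corpus, the `<unk>` symbol, and the helper names `build_vocab`, `encode`, and `unk_rate` are all assumptions made for illustration. It keeps only the most frequent words and maps everything else to a single UNK id.

```python
from collections import Counter

UNK = "<unk>"  # hypothetical symbol for out-of-vocabulary words


def build_vocab(sentences, max_size):
    """Keep the (max_size - 1) most frequent tokens; id 0 is reserved for UNK."""
    counts = Counter(tok for s in sentences for tok in s.split())
    words = [w for w, _ in counts.most_common(max_size - 1)]
    return {w: i for i, w in enumerate([UNK] + words)}


def encode(sentence, vocab):
    """Map each whitespace token to its id, falling back to the UNK id."""
    return [vocab.get(tok, vocab[UNK]) for tok in sentence.split()]


def unk_rate(sentences, vocab):
    """Fraction of tokens that the fixed vocabulary cannot represent."""
    ids = [i for s in sentences for i in encode(s, vocab)]
    return sum(1 for i in ids if i == vocab[UNK]) / len(ids)


# Toy corpus (an assumption for illustration only).
corpus = ["the cat sat", "the dog sat", "the cat ran"]
vocab = build_vocab(corpus, max_size=4)

print(encode("the zebra ran", vocab))
print(unk_rate(corpus, vocab))
```

Raising `max_size` lowers the UNK rate, but the output layer of the decoder has one row per vocabulary entry, so the cost of the softmax at each decoding step grows linearly with the vocabulary size. That trade-off is what the works listed below try to avoid.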

When reposting, please credit the source: http://blog.csdn.net/u011414416/article/details/51108193

This post briefly introduces the following works:

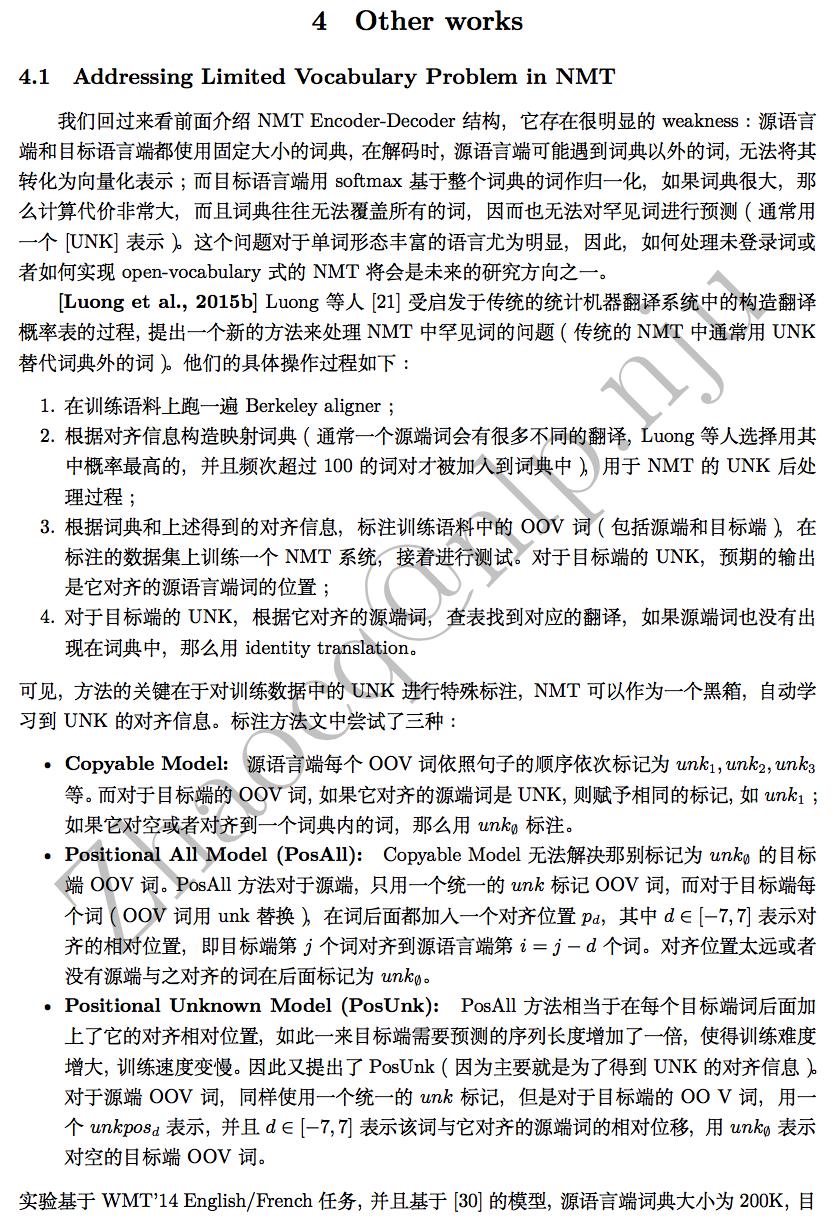

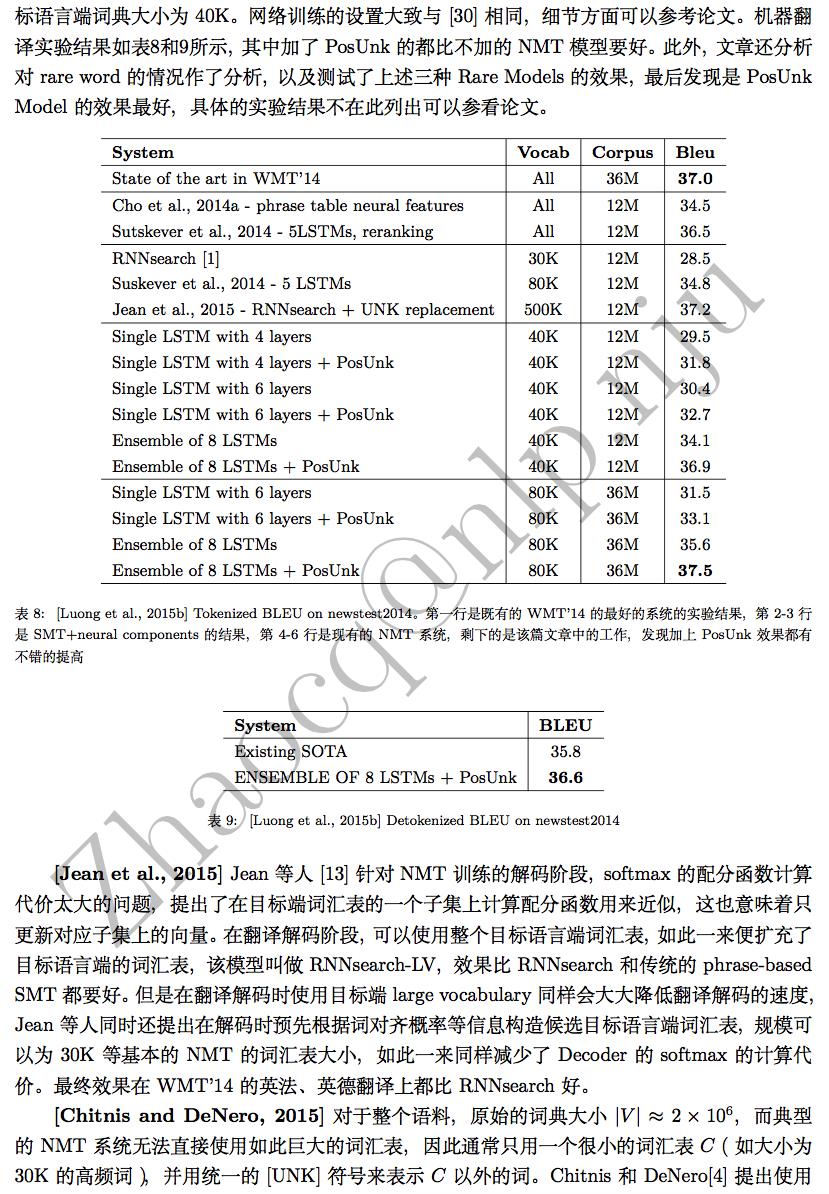

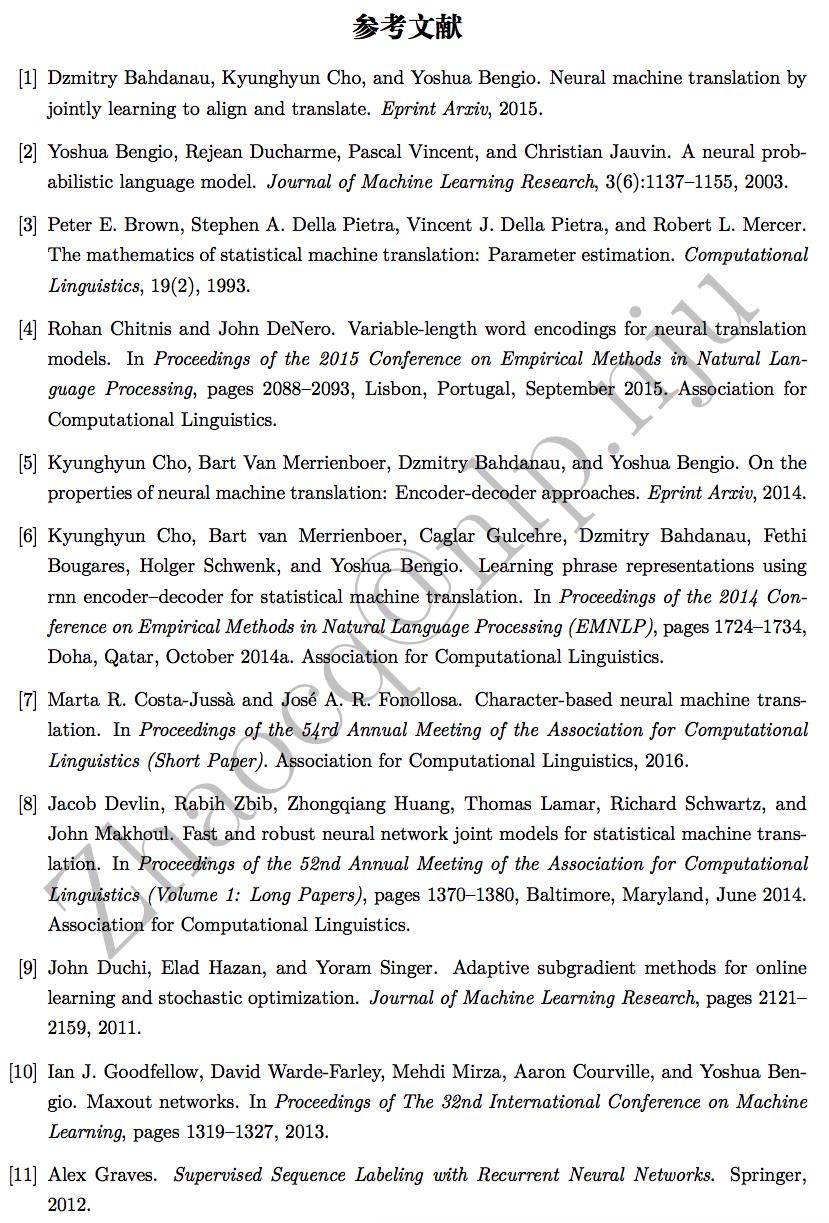

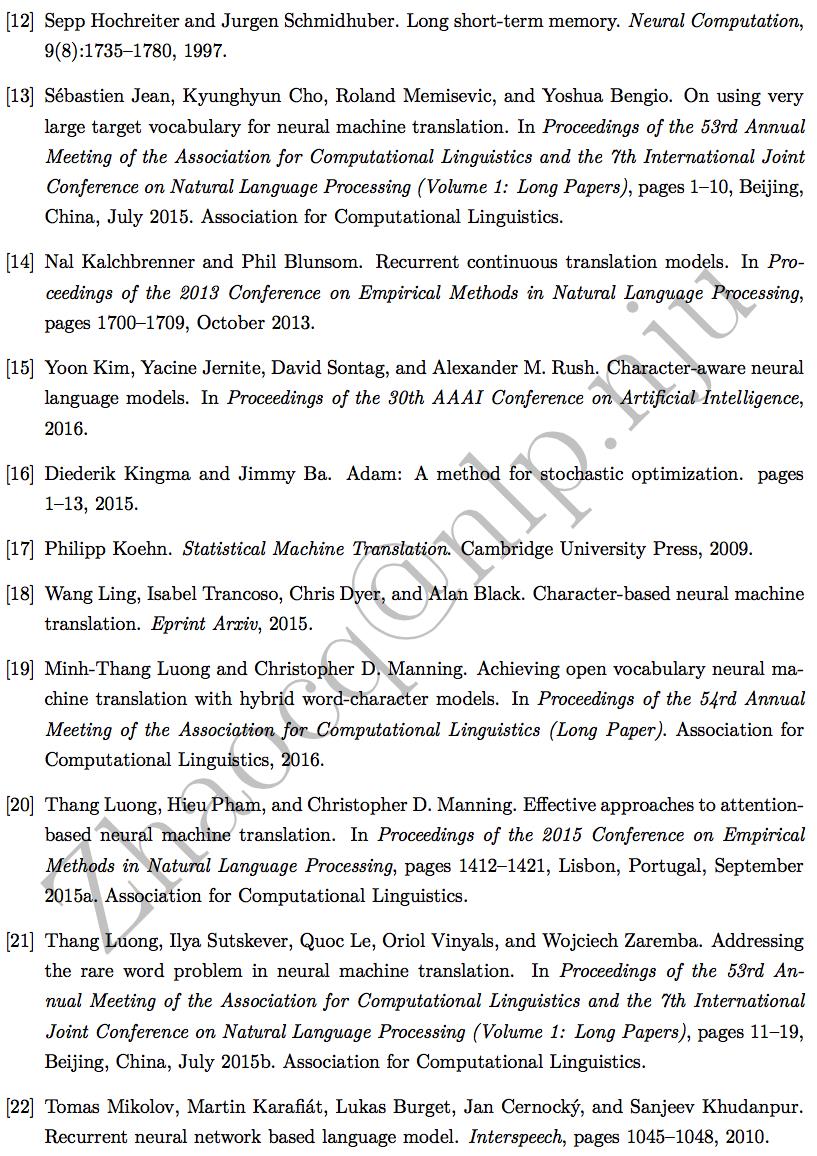

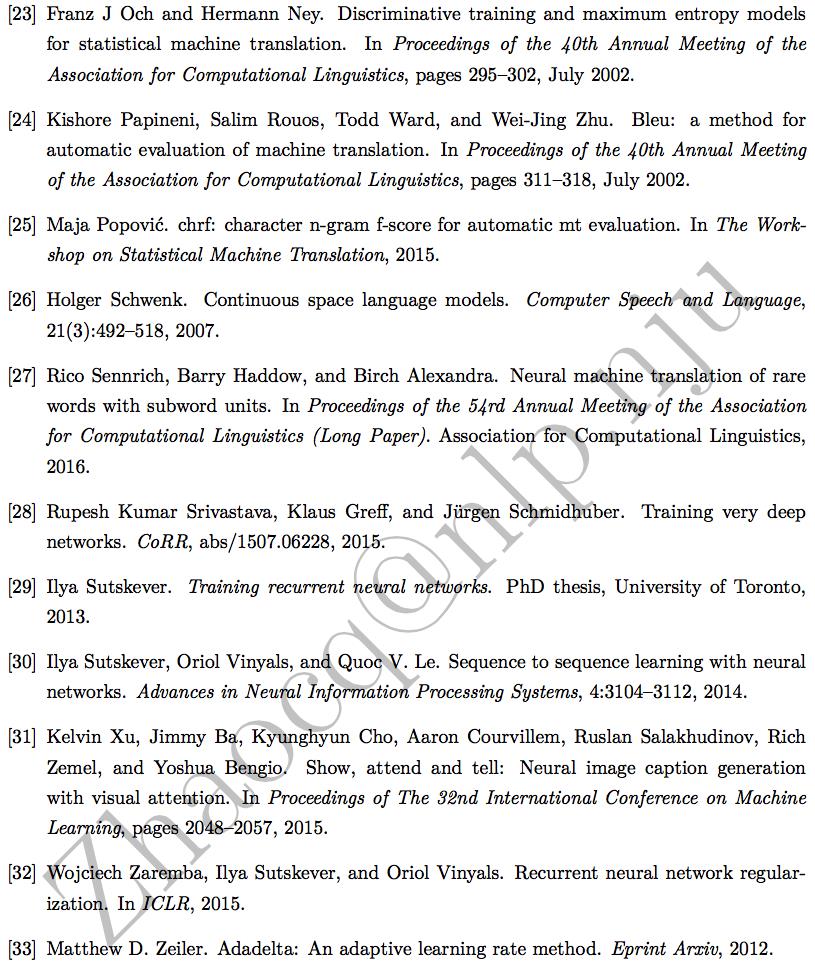

Thang Luong, Ilya Sutskever, Quoc Le, Oriol Vinyals, and Wojciech Zaremba. Addressing the rare word problem in neural machine translation. In ACL 2015 (Long Paper).

Sébastien Jean, Kyunghyun Cho, Roland Memisevic, and Yoshua Bengio. On using very large target vocabulary for neural machine translation. In ACL 2015 (Long Paper).

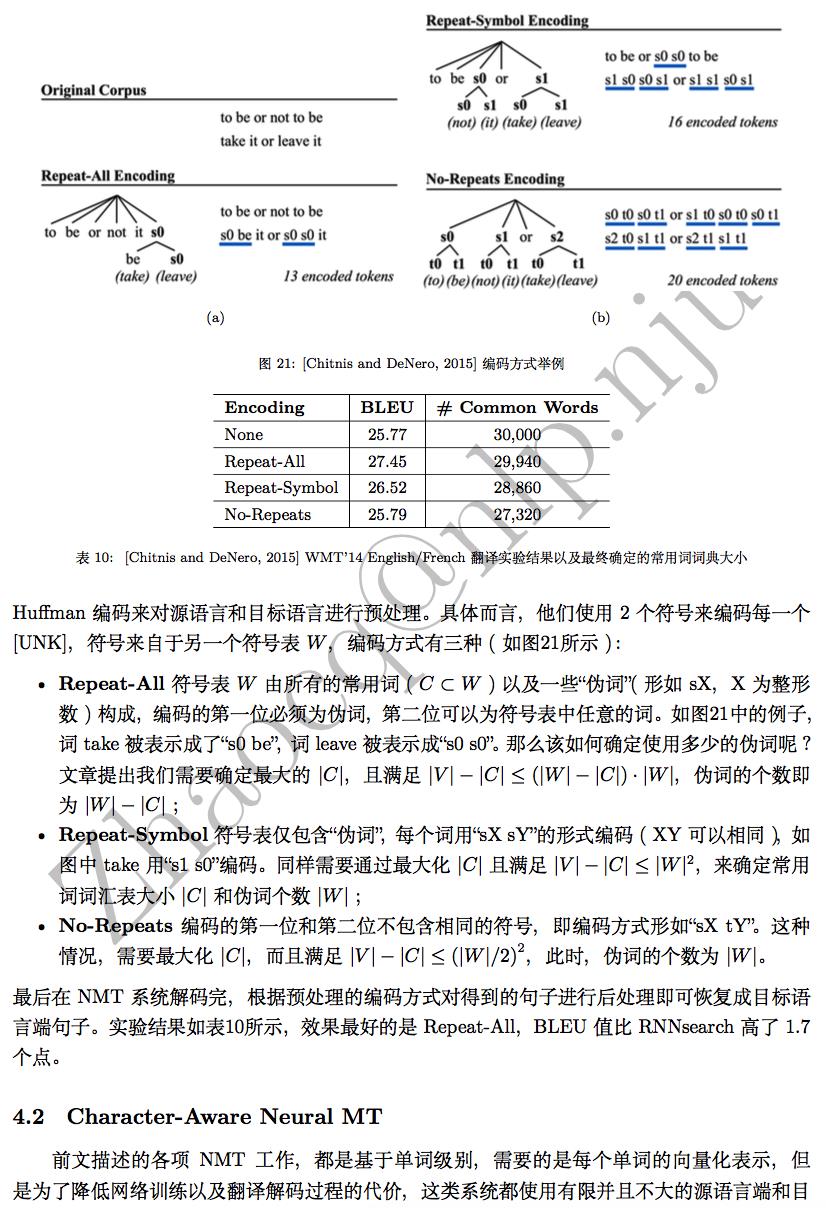

Rohan Chitnis and John DeNero. Variable-length word encodings for neural translation models. In EMNLP 2015.

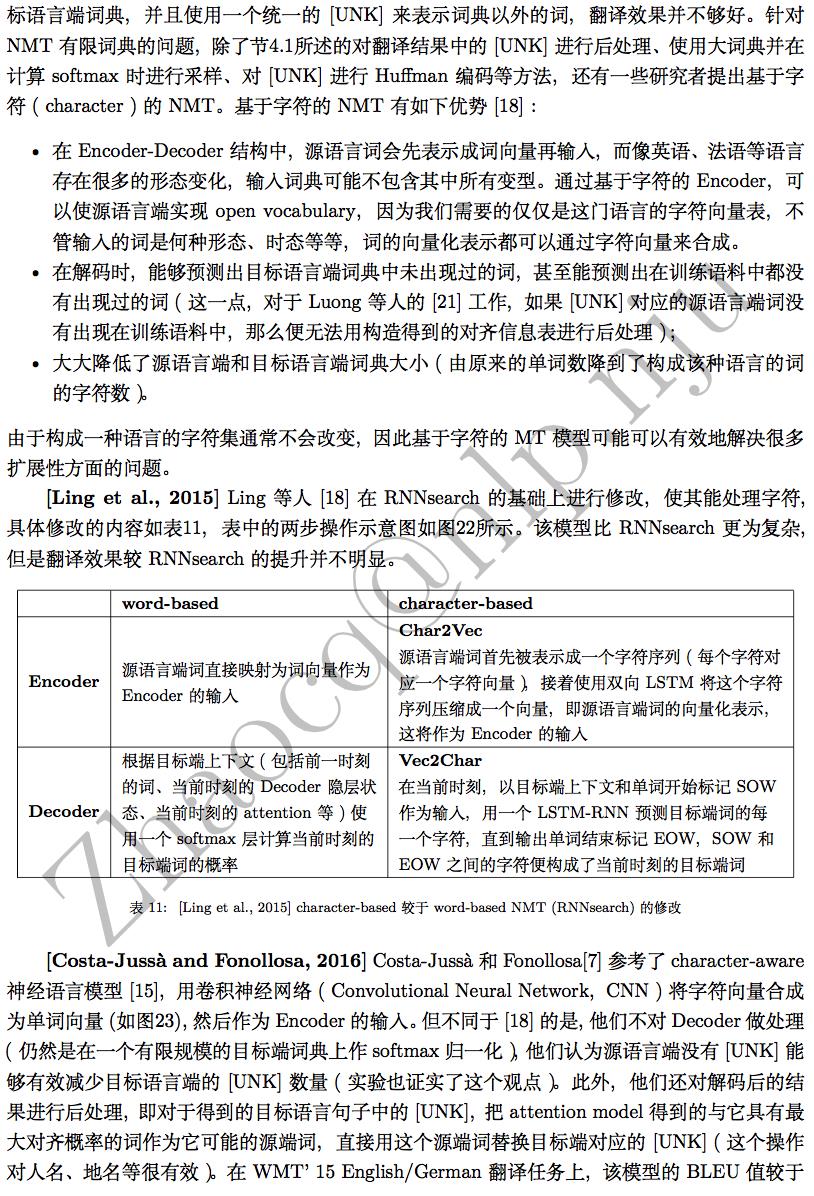

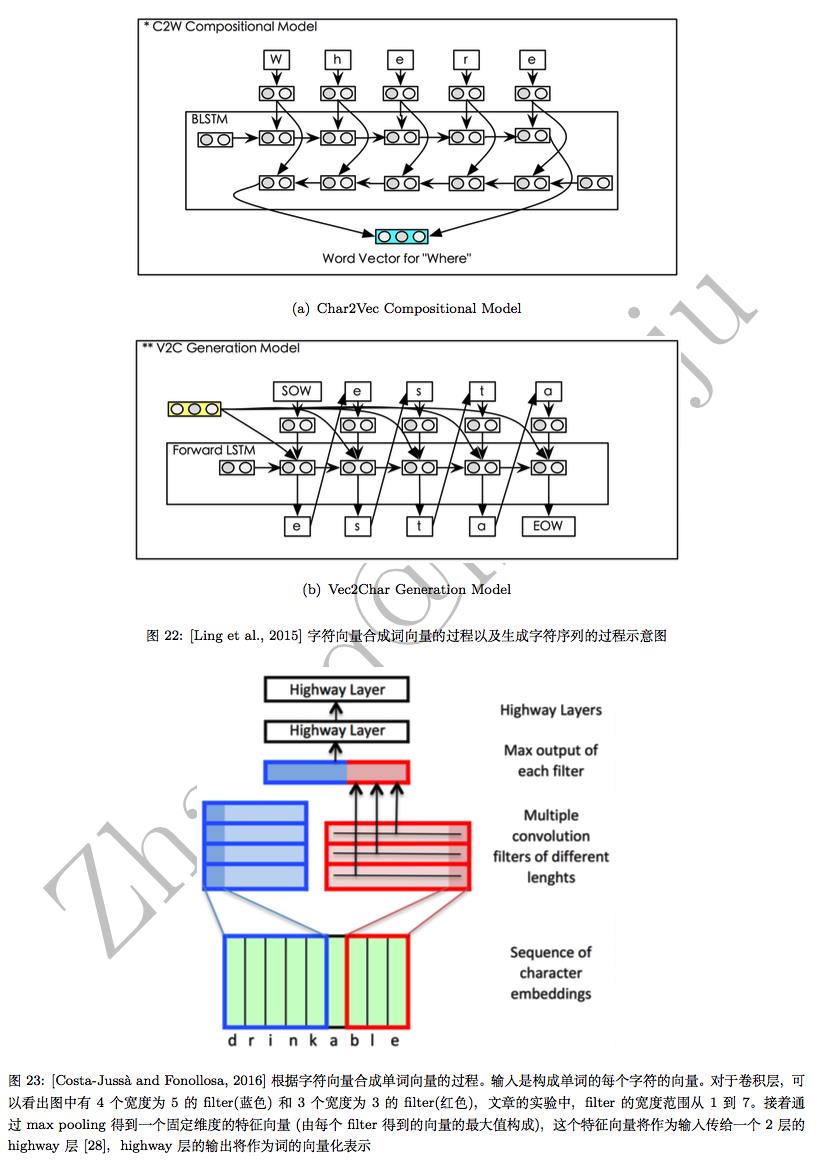

Wang Ling, Isabel Trancoso, Chris Dyer, and Alan Black. Character-based neural machine translation. arXiv preprint, 2015.

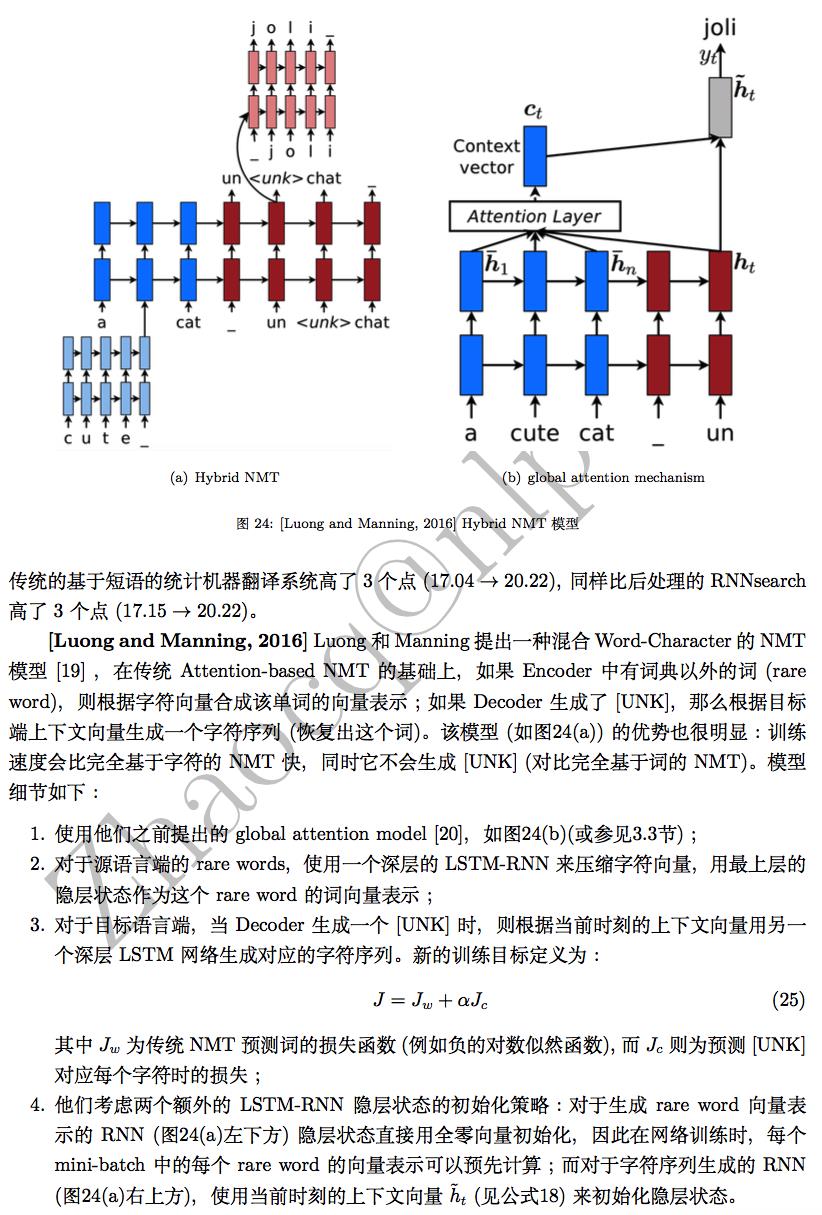

Marta R. Costa-jussà and José A. R. Fonollosa. Character-based neural machine translation. In ACL 2016 (Short Paper).

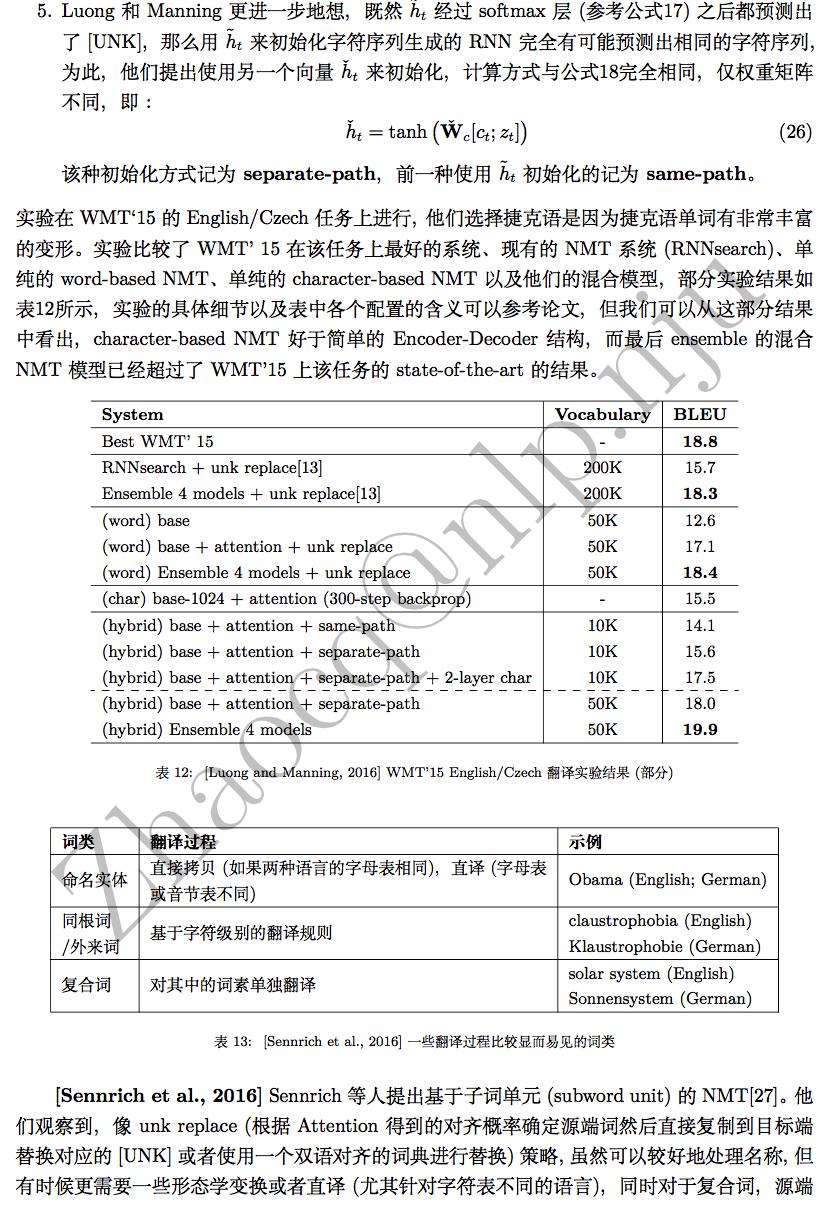

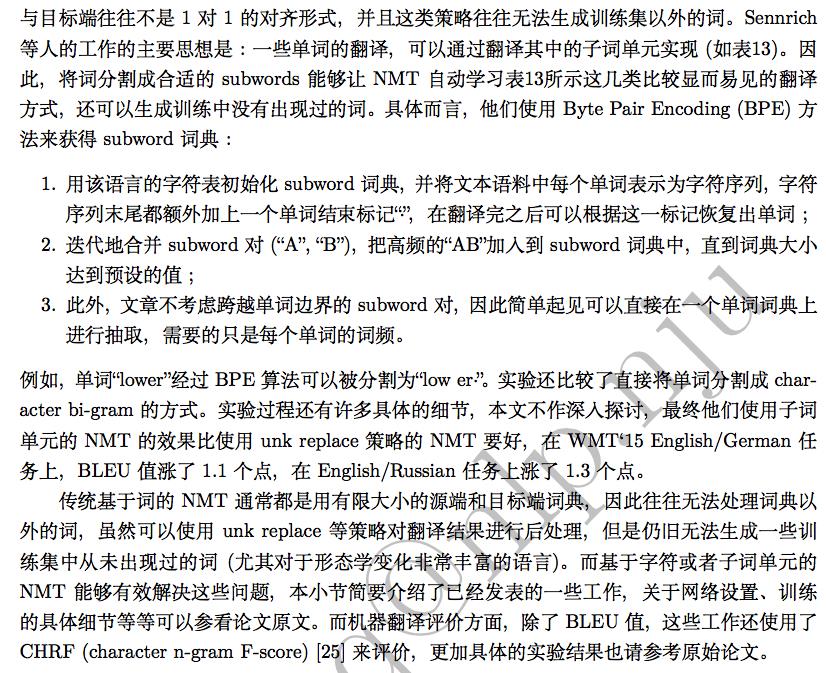

Minh-Thang Luong and Christopher D. Manning. Achieving open vocabulary neural machine translation with hybrid word-character models. In ACL 2016 (Long Paper).

Rico Sennrich, Barry Haddow, and Alexandra Birch. Neural machine translation of rare words with subword units. In ACL 2016 (Long Paper).

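This post does not cover specific NMT network configurations, training details, or detailed results; to study any of these works in depth, please consult the original papers. In addition, since ACL 2016 has only announced its list of accepted papers and the proceedings have not yet been released, the ACL 2016 papers referenced here are the versions the authors posted on arXiv. For in-depth study, please also wait for the ACL 2016 proceedings to be released.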
