C#: Azure Event Hubs


Preface: this article was compiled by the editors of 小常识网 (cha138.com) and introduces Azure Event Hubs in C#.

Event Hubs is a big data streaming platform and event ingestion service.

Event Hubs represents the "front door" for an event pipeline, often called an event ingestor in solution architectures.

An event ingestor is a component or service that sits between event publishers and event consumers to decouple the production of an event stream from the consumption of those events.

Data sent to an event hub can be transformed and stored by using any real-time analytics provider or batching/storage adapters.

Scenarios:

- Anomaly detection (fraud/outliers)

- Application logging

- Analytics pipelines, such as clickstreams

- Live dashboarding

- Archiving data

- Transaction processing

- User telemetry processing

- Device telemetry streaming

# Why use Event Hubs?

- An easy way to process data from many sources and get timely insights from it.

- Fully managed platform as a service (PaaS).

- Support for both real-time and batch processing.

- Scales as data volume grows to gigabytes or terabytes.

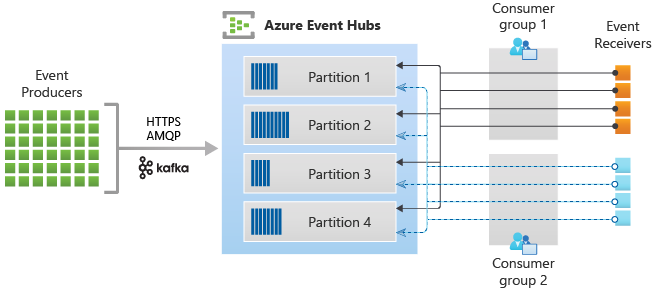

# Key architecture components

- Event producers: send data to an event hub over HTTPS, AMQP 1.0, or the Apache Kafka protocol.

- Partitions: each consumer reads only a specific subset (partition) of the stream.

- Consumer groups: a view of an entire event hub; each group reads the stream independently, at its own pace.

- Throughput units: pre-purchased units of capacity that set how much data the event hub can ingest and deliver.

- Event receivers: read event data from an event hub over an AMQP 1.0 session.
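Because throughput units are bought up front, a rough sizing calculation helps. The sketch below assumes the commonly documented Standard-tier limits per unit (ingress: 1 MB/s or 1,000 events/s; egress: 2 MB/s or 4,096 events/s), which should be checked against current Azure documentation; `ThroughputUnits.Required` is a hypothetical helper, not an SDK call.

```csharp
using System;

// Hypothetical sizing helper. The per-TU limits below are ASSUMED
// Standard-tier values; verify them before relying on the result.
public static class ThroughputUnits
{
    private const double IngressMBPerTu = 1.0;
    private const double IngressEventsPerTu = 1000.0;
    private const double EgressMBPerTu = 2.0;
    private const double EgressEventsPerTu = 4096.0;

    // Smallest whole number of TUs whose limits cover every stated rate.
    public static int Required(double ingressMBps, double ingressEventsPerSec,
                               double egressMBps, double egressEventsPerSec)
    {
        // Whichever limit is hit first determines the requirement.
        double needed = Math.Max(
            Math.Max(ingressMBps / IngressMBPerTu, ingressEventsPerSec / IngressEventsPerTu),
            Math.Max(egressMBps / EgressMBPerTu, egressEventsPerSec / EgressEventsPerTu));
        return Math.Max(1, (int)Math.Ceiling(needed));
    }
}
```

For example, 2.5 MB/s of ingress dominates the other three rates in `Required(2.5, 1500, 3, 2000)`, so the helper asks for 3 units.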

# Structure

## Publishing

Event Hubs ensures that all events sharing a partition key value are delivered in order, and to the same partition.

With publisher policies, each publisher uses its own unique identifier when publishing events to an event hub, via a URI of the following form:

```HTTP
//[my namespace].servicebus.windows.net/[event hub name]/publishers/[my publisher name]
```
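The per-publisher URI above is plain string composition. This is a minimal sketch; `PublisherEndpoint.Build` and its parameters are hypothetical, and the example names are placeholders rather than real resources.

```csharp
using System;

// Hypothetical helper that fills in the per-publisher path shown above.
public static class PublisherEndpoint
{
    public static string Build(string ns, string eventHubName, string publisherName)
    {
        if (string.IsNullOrWhiteSpace(ns)) throw new ArgumentException("Namespace is required.", nameof(ns));
        if (string.IsNullOrWhiteSpace(eventHubName)) throw new ArgumentException("Event hub name is required.", nameof(eventHubName));
        if (string.IsNullOrWhiteSpace(publisherName)) throw new ArgumentException("Publisher name is required.", nameof(publisherName));

        // Escape the hub and publisher names so each stays a single path segment.
        return $"//{ns}.servicebus.windows.net/{Uri.EscapeDataString(eventHubName)}/publishers/{Uri.EscapeDataString(publisherName)}";
    }
}
```

`PublisherEndpoint.Build("contoso", "telemetry", "device-42")` yields `//contoso.servicebus.windows.net/telemetry/publishers/device-42`.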

When using publisher policies, the `PartitionKey` value is set to the publisher name.
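Why a shared partition key means both "same partition" and "in order" can be seen in a small model: hash the key to a partition index, then append to that partition's list. This is an illustration only; `PartitionRouter` is hypothetical and FNV-1a is a stand-in hash. Event Hubs uses its own internal hashing, so real partition assignments will differ.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

// Hypothetical router: maps a partition key to a partition and keeps
// per-partition arrival order. FNV-1a stands in for the service's hash.
public sealed class PartitionRouter
{
    private readonly List<string>[] _partitions;

    public PartitionRouter(int partitionCount)
    {
        if (partitionCount <= 0) throw new ArgumentOutOfRangeException(nameof(partitionCount));
        _partitions = new List<string>[partitionCount];
        for (int i = 0; i < partitionCount; i++) _partitions[i] = new List<string>();
    }

    // Deterministic: the same key always yields the same partition index.
    public int PartitionFor(string partitionKey)
    {
        uint hash = 2166136261;                     // FNV-1a 32-bit offset basis
        foreach (byte b in Encoding.UTF8.GetBytes(partitionKey))
        {
            hash ^= b;
            hash *= 16777619;                       // FNV-1a 32-bit prime
        }
        return (int)(hash % (uint)_partitions.Length);
    }

    // Events with the same key land in the same list, so their order is kept.
    public int Send(string partitionKey, string body)
    {
        int p = PartitionFor(partitionKey);
        _partitions[p].Add(body);
        return p;
    }

    public IReadOnlyList<string> Read(int partition) => _partitions[partition];
}
```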

## Capture

Event Hubs Capture automatically captures streaming data and saves it to an Azure Blob Storage account or an Azure Data Lake Storage account of your choice.

## Partitions

Event Hubs provides message streaming through a partitioned consumer pattern: each consumer reads only a specific subset, or partition, of the stream.

A partition is an ordered sequence of events.

New events are added to the end of this sequence.

A partition can be thought of as a "commit log."
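The commit-log view of a partition can be modelled as an append-only list: each event gets the next position, reading never removes anything, and every reader tracks its own position. Two readers over one log also mirror consumer groups reading independently at their own pace. `PartitionLog` and `LogReader` are hypothetical in-memory types, not SDK classes.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical in-memory partition: events are only ever appended and are
// identified by their position (sequence number) in the log.
public sealed class PartitionLog
{
    private readonly List<string> _events = new List<string>();

    public long Append(string body)
    {
        _events.Add(body);
        return _events.Count - 1;               // sequence number of the new event
    }

    public long Count => _events.Count;

    // Returns up to maxCount events starting at fromSequence without removing them.
    public IReadOnlyList<string> ReadFrom(long fromSequence, int maxCount)
    {
        var result = new List<string>();
        for (long i = fromSequence; i < _events.Count && result.Count < maxCount; i++)
            result.Add(_events[(int)i]);
        return result;
    }
}

// Hypothetical reader that keeps its own position, independent of other readers.
public sealed class LogReader
{
    private readonly PartitionLog _log;
    public long Position { get; private set; }

    public LogReader(PartitionLog log) => _log = log;

    public IReadOnlyList<string> Poll(int maxCount)
    {
        var batch = _log.ReadFrom(Position, maxCount);
        Position += batch.Count;
        return batch;
    }
}
```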

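## Sending events

The following code uses the older `Microsoft.Azure.EventHubs` client library.

```csharp
using System.Text;
using Microsoft.Azure.EventHubs;

// Build the namespace connection string and point it at one event hub via EntityPath.
var connectionStringBuilder = new EventHubsConnectionStringBuilder("Endpoint=sb://ric01ehub.servicebus.windows.net/;SharedAcc...")
{
    EntityPath = "ric01hub01"
};

var eventHubClient = EventHubClient.CreateFromConnectionString(connectionStringBuilder.ToString());

// Send one event whose body is the UTF-8 encoding of the message text.
await eventHubClient.SendAsync(new EventData(Encoding.UTF8.GetBytes("Some message")));

// Release the underlying connection when done.
await eventHubClient.CloseAsync();
```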
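Event bodies are opaque bytes, so the sender picks an encoding (UTF-8 here) and the receiver must decode with the same one. The received body is an `ArraySegment<byte>`, and a segment can be a window into a larger array, so decoding should honour `Offset` and `Count`, as the receiving code in this post does. `BodyCodec` is a hypothetical helper using only `System.Text`.

```csharp
using System;
using System.Text;

// Hypothetical helper mirroring the encode/decode steps used for event bodies.
public static class BodyCodec
{
    // Encode a message the same way the sender does.
    public static byte[] Encode(string message) => Encoding.UTF8.GetBytes(message);

    // Decode only the bytes the segment covers; decoding the whole
    // underlying array could pick up bytes outside the window.
    public static string Decode(ArraySegment<byte> body) =>
        Encoding.UTF8.GetString(body.Array, body.Offset, body.Count);
}
```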

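## Receiving events

`EventProcessorHost` (package `Microsoft.Azure.EventHubs.Processor`) distributes partitions across processor instances and stores leases and checkpoints in an Azure Storage container.

```csharp
using System;
using System.Collections.Generic;
using System.Text;
using System.Threading.Tasks;
using Microsoft.Azure.EventHubs;
using Microsoft.Azure.EventHubs.Processor;

// EventHubConnectionString, StorageConnectionString and StorageContainerName
// are assumed to be defined elsewhere (e.g. loaded from configuration).
var eventProcessorHost = new EventProcessorHost(
    "ric01hub01",                                 // hub name
    PartitionReceiver.DefaultConsumerGroupName,   // the "$Default" consumer group
    EventHubConnectionString,
    StorageConnectionString,                      // storage for leases and checkpoints
    StorageContainerName);

// Registers the Event Processor Host and starts receiving messages
await eventProcessorHost.RegisterEventProcessorAsync<SimpleEventProcessor>();

Console.WriteLine("Receiving. Press ENTER to stop.");
Console.ReadLine();

// Stop processing and release partition leases.
await eventProcessorHost.UnregisterEventProcessorAsync();

public class SimpleEventProcessor : IEventProcessor
{
    public Task OpenAsync(PartitionContext context) => Task.CompletedTask;

    public Task CloseAsync(PartitionContext context, CloseReason reason) => Task.CompletedTask;

    public Task ProcessErrorAsync(PartitionContext context, Exception error)
    {
        Console.WriteLine($"Error on partition '{context.PartitionId}': {error.Message}");
        return Task.CompletedTask;
    }

    public Task ProcessEventsAsync(PartitionContext context, IEnumerable<EventData> messages)
    {
        foreach (var eventData in messages)
        {
            // Decode only the bytes covered by the Body segment.
            var data = Encoding.UTF8.GetString(eventData.Body.Array, eventData.Body.Offset, eventData.Body.Count);
            Console.WriteLine($"Message received. Partition: '{context.PartitionId}', Data: '{data}'");
        }

        // Persist this partition's progress so a restarted processor resumes here.
        return context.CheckpointAsync();
    }
}
```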
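`CheckpointAsync` records how far a processor has read in a partition, so a processor that restarts (or takes over the partition's lease) resumes from the checkpoint instead of the beginning. Events handled after the last checkpoint are read again, so processing should tolerate duplicates. The sketch below models that behaviour in memory under stated assumptions: `CheckpointStore` and `CheckpointDemo.ProcessBatch` are hypothetical, and each call stands for a freshly (re)started processor.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical checkpoint store: per partition, the position of the next
// event to process (a stand-in for the storage container the host uses).
public sealed class CheckpointStore
{
    private readonly Dictionary<string, int> _checkpoints = new Dictionary<string, int>();

    public int GetStart(string partitionId) =>
        _checkpoints.TryGetValue(partitionId, out var pos) ? pos : 0;

    public void Checkpoint(string partitionId, int nextPosition) =>
        _checkpoints[partitionId] = nextPosition;
}

public static class CheckpointDemo
{
    // Simulates a (re)started processor: it always begins at the last
    // checkpoint, handles up to batchSize events, and optionally checkpoints.
    public static List<string> ProcessBatch(
        IReadOnlyList<string> partition, string partitionId,
        CheckpointStore store, int batchSize, bool checkpoint)
    {
        int start = store.GetStart(partitionId);
        var handled = new List<string>();
        for (int i = start; i < partition.Count && handled.Count < batchSize; i++)
            handled.Add(partition[i]);

        if (checkpoint)
            store.Checkpoint(partitionId, start + handled.Count);
        return handled;
    }
}
```

Calling `ProcessBatch` without checkpointing and then calling it again returns the same events a second time, which is the at-least-once redelivery described above.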