Python Directory Scanning Script

Posted by K'illCode


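Preface: This article was compiled by the editor of 小常识网 (cha138.com) and mainly introduces Python directory scanning scripts. We hope it is of some reference value to you.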

Preface

I've recently started learning security programming in Python, so I'm keeping notes here.

Approach

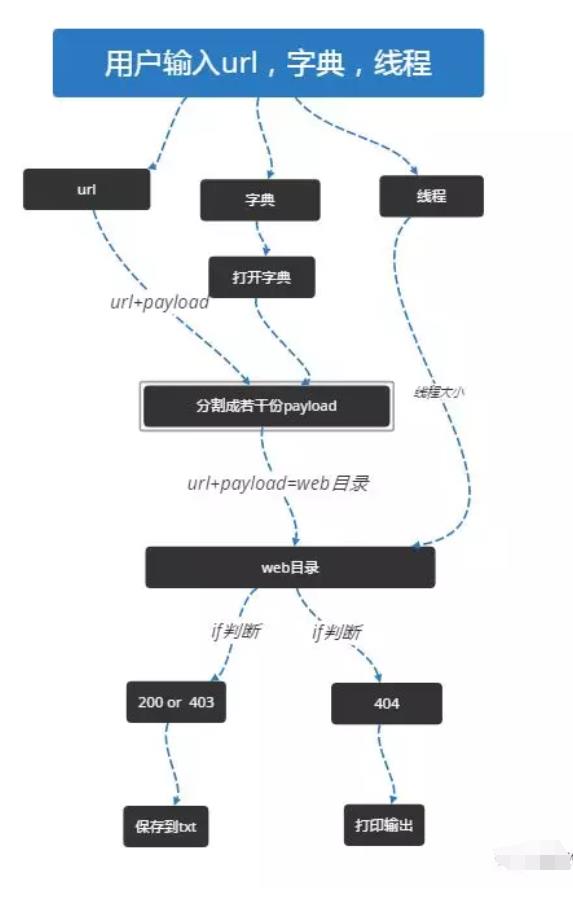

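A directory scanner generally comes down to three inputs: the target URL, a wordlist (dictionary), and the number of threads. Once these three are implemented, we are roughly halfway there. Next, let's look at how a directory scanner works.

The diagram is a bit rough, but in short: the user supplies a URL and a wordlist, the scanner joins the two into candidate URLs, and the HTTP status code returned for each one tells us whether that file or directory exists.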
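As a minimal sketch of that workflow (not the author's code), the joining step and the status check can be written as two pure functions, which makes them testable without network access. The names `build_candidates` and `looks_present` are hypothetical; joining with exactly one `/` is an assumption, and treating 200 and 403 as "exists" follows the check in the first script of this post (a 403 often means the path exists but access is forbidden).

```python
def build_candidates(base_url, words):
    """Join a base URL with each wordlist entry, skipping blank lines."""
    base = base_url.rstrip("/")           # avoid a double slash when joining
    candidates = []
    for word in words:
        word = word.strip()               # drop the trailing newline read from the file
        if not word:
            continue                      # blank line in the wordlist: nothing to probe
        candidates.append(base + "/" + word.lstrip("/"))
    return candidates


def looks_present(status_code):
    """200 = the resource exists; 403 = it probably exists but access is denied."""
    return status_code in (200, 403)


if __name__ == "__main__":
    wordlist = ["admin\n", "/login.php\n", "\n", "backup.zip\n"]
    for candidate in build_candidates("http://www.xxx.cn/", wordlist):
        print(candidate)
```

Each candidate would then be requested, and kept if `looks_present()` returns True for its status code.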

Implementation

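```python
import os
import queue
import sys
import threading
import time

import requests

q = queue.Queue()


def scan():
    # Keep going until the wordlist queue is empty
    while True:
        try:
            # Take the next payload; get_nowait() cannot hang if another thread took the last item
            word = q.get_nowait()
        except queue.Empty:
            break
        # URL + payload = the URL to probe; strip() removes the trailing newline from the wordlist
        urls = url + word.strip()
        try:
            # Send an HTTP request to the joined URL
            code = requests.get(urls, timeout=5).status_code
        except requests.RequestException as e:
            # A network error should not kill the worker thread
            print(urls + '|' + type(e).__name__)
            continue
        if code == 200 or code == 403:
            # 200 or 403: print URL + status code, then append the hit to ok.txt (one URL per line)
            print(urls + '|' + str(code))
            with open('ok.txt', 'a') as f:
                f.write(urls + '\n')
        else:
            # Otherwise print URL + status code and wait one second
            print(urls + '|' + str(code))
            time.sleep(1)


def show():
    print(" _ _ ")
    print(" __| |(_) _ __ ___ ___ __ _ _ __ ")
    print(" / _` || || '__| / __| / __|/ _` || '_ \\ ")
    print(" | (_| || || | \\__ \\| (__| (_| || | | |")
    print(" \\__,_||_||_| |___/ \\___|\\__,_||_| |_|")
    print(" ")
    print("Usage: URL + wordlist file + threads, e.g.: filesan.py http://www.xxx.cn 1.txt 10")
    print("Author:KingHandles5")


if __name__ == '__main__':
    # Directory that contains this script
    path = os.path.dirname(os.path.realpath(__file__))
    # Fewer than 4 argv entries (script name + 3 arguments): print the banner and exit
    if len(sys.argv) < 4:
        show()
        sys.exit()
    url = sys.argv[1]  # target URL entered by the user
    txt = sys.argv[2]  # wordlist file entered by the user
    xc = sys.argv[3]   # number of threads entered by the user
    # Script directory + wordlist name = absolute path; queue every payload in the wordlist
    with open(os.path.join(path, txt)) as wordlist:
        for word in wordlist:
            if word.strip():
                q.put(word)
    # Multithreading: start the worker threads
    for i in range(int(xc)):
        t = threading.Thread(target=scan)
        t.start()
```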
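One caveat with the original `while not q.empty(): q.get()` loop: between the `empty()` check and the `get()` call another thread can take the last item, and the blocking `get()` then waits forever. A common remedy is `get_nowait()` plus `queue.Empty`. The sketch below shows that worker-pool pattern on its own, assuming a hypothetical `check` callback in place of the HTTP request (here a fake status table) so it runs offline; in a real scanner `check` would wrap `requests.get(...).status_code`. The function name `run_pool` is also hypothetical.

```python
import queue
import threading


def run_pool(items, check, thread_count=10):
    """Drain a queue of URLs with `thread_count` workers; return {url: status}."""
    q = queue.Queue()
    for item in items:
        q.put(item)                   # fill the queue before any worker starts

    results = {}
    lock = threading.Lock()           # protects `results` across worker threads

    def worker():
        while True:
            try:
                url = q.get_nowait()  # never blocks, unlike empty() followed by get()
            except queue.Empty:
                return                # queue drained: this worker exits
            status = check(url)
            with lock:
                results[url] = status

    threads = [threading.Thread(target=worker) for _ in range(thread_count)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()                      # wait until every worker has finished
    return results


if __name__ == "__main__":
    # Hypothetical status table standing in for real HTTP requests
    fake = {"http://www.xxx.cn/admin": 200, "http://www.xxx.cn/old": 404}
    found = run_pool(fake, lambda u: fake[u], thread_count=3)
    print(sorted(u for u, code in found.items() if code in (200, 403)))
```

This relies on the queue being completely filled before the workers start, which holds for both scripts in this post. If items could still arrive while workers run, a blocking `get()` combined with a sentinel value is the usual alternative.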

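An improved version. Features of this Python script:

1. Reasonably complete
2. A nicer interface (well, it just prints an ASCII-art banner)
3. The thread count is an optional argument
4. User-Agent handling (a random User-Agent for each request)
5. Progress display while scanning with multiple threads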
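Feature 4 comes down to sending a randomly chosen `User-Agent` header with each request. Since `requests.get(headers=...)` expects a dict, a helper for this should return `{'User-Agent': ...}` rather than a bare string. A minimal sketch: the two agent strings are taken from the script's own list, and the name `random_headers` is hypothetical.

```python
import random

# Two of the User-Agent strings from the script's list; extend as needed
USER_AGENTS = [
    "Opera/9.10 (Windows NT 5.2; U; en)",
    "Mozilla/5.0 (X11; U; Linux i686; en-US; rv:1.9.0.2) Gecko/2008092809 Gentoo Firefox/3.0.2",
]


def random_headers(agents=USER_AGENTS, rng=random):
    """Return a headers dict suitable for requests.get(url, headers=...)."""
    return {"User-Agent": rng.choice(agents)}


if __name__ == "__main__":
    print(random_headers())
```

Passing a seeded `random.Random(...)` instance as `rng` makes the choice reproducible in tests.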

Scan target: Metasploitable Linux

Code: WebDirScanner.py:

```python
# -*- coding:utf-8 -*-
__author__ = "Yiqing"

import random
import sys
import threading
from optparse import OptionParser
from queue import Queue, Empty

try:
    import requests
except ImportError:
    print("[!] You need to install requests module!")
    print("[!] Usage:pip install requests")
    sys.exit()

write_lock = threading.Lock()  # serializes writes to result.html across threads


class WebDirScan:
    """
    Web directory scanner
    """

    def __init__(self, options):
        self.url = options.url
        self.file_name = options.file_name
        self.count = options.count

    class DirScan(threading.Thread):
        """
        Worker thread
        """

        def __init__(self, queue, total):
            threading.Thread.__init__(self)
            self._queue = queue
            self._total = total

        def run(self):
            while True:
                try:
                    # get_nowait() cannot hang if another thread took the last URL
                    url = self._queue.get_nowait()
                except Empty:
                    break
                # Show progress; calling msg() directly avoids starting an extra thread per URL
                self.msg()
                try:
                    r = requests.get(url=url, headers=get_user_agent(), timeout=5)
                    if r.status_code == 200:
                        sys.stdout.write('\r' + '[+]%s\t\t\n' % url)
                        # Save the hit to a local file as an HTML link
                        with write_lock, open('result.html', 'a') as result:
                            result.write('<a href="' + url + '" rel="external nofollow" target="_blank">' + url + '</a>')
                            result.write('<br>\n')
                except requests.RequestException:
                    pass

        def msg(self):
            """
            Display progress
            :return: None
            """
            per = 100 - float(self._queue.qsize()) / float(self._total) * 100
            percent = "%s Finished| %s All| Scan in %.1f %s" % (
                (self._total - self._queue.qsize()), self._total, per, '%')
            sys.stdout.write('\r' + '[*]' + percent)
            sys.stdout.flush()

    def start(self):
        # Create (or empty) result.html
        open('result.html', 'w').close()
        queue = Queue()
        # Read the wordlist given with -f (the original hard-coded 'dict.txt')
        with open(self.file_name, 'r') as f:
            for line in f:
                word = line.strip()
                if word:
                    queue.put(self.url + "/" + word)
        total = queue.qsize()
        threads = []
        thread_count = int(self.count)
        for i in range(thread_count):
            threads.append(self.DirScan(queue, total))
        for thread in threads:
            thread.start()
        for thread in threads:
            thread.join()


def get_user_agent():
    """
    Pick a random User-Agent
    :return: headers dict for requests
    """
    user_agent_list = [
        'Mozilla/4.0 (Mozilla/4.0; MSIE 7.0; Windows NT 5.1; FDM; SV1; .NET CLR 3.0.04506.30)',
        'Mozilla/4.0 (compatible; MSIE 8.0; Windows NT 6.0; en) Opera 11.00',
        'Mozilla/5.0 (X11; U; Linux i686; de; rv:1.9.0.2) Gecko/2008092313 Ubuntu/8.04 (hardy) Firefox/3.0.2',
        'Mozilla/5.0 (X11; U; Linux i686; en-GB; rv:1.9.1.15) Gecko/20101027 Fedora/3.5.15-1.fc12 Firefox/3.5.15',
        'Mozilla/5.0 (X11; U; Linux i686; en-US) AppleWebKit/534.10 (KHTML, like Gecko) Chrome/8.0.551.0 Safari/534.10',
        'Mozilla/5.0 (X11; U; Linux i686; en-US; rv:1.9.0.2) Gecko/2008092809 Gentoo Firefox/3.0.2',
        'Mozilla/5.0 (X11; U; Linux x86_64; en-US) AppleWebKit/534.10 (KHTML, like Gecko) Chrome/7.0.544.0',
        'Opera/9.10 (Windows NT 5.2; U; en)',
        'Mozilla/5.0 (iPhone; U; CPU OS 3_2 like Mac OS X; en-us) AppleWebKit/531.21.10 (KHTML, like Gecko)',
        'Opera/9.80 (X11; U; Linux i686; en-US; rv:1.9.2.3) Presto/2.2.15 Version/10.10',
        'Mozilla/5.0 (Windows; U; Windows NT 5.1; ru-RU) AppleWebKit/533.18.1 (KHTML, like Gecko) Version/5.0.2 Safari/533.18.5',
        'Mozilla/5.0 (Windows; U; Windows NT 5.1; ru; rv:1.9b3) Gecko/2008020514 Firefox/3.0b3',
        'Mozilla/5.0 (Macintosh; U; PPC Mac OS X 10_4_11; fr) AppleWebKit/533.16 (KHTML, like Gecko) Version/5.0 Safari/533.16',
        'Mozilla/5.0 (Macintosh; U; Intel Mac OS X 10_6_6; en-US) AppleWebKit/534.20 (KHTML, like Gecko) Chrome/11.0.672.2 Safari/534.20',
        'Mozilla/4.0 (compatible; MSIE 8.0; Windows NT 6.1; WOW64; Trident/4.0; SLCC2; .NET CLR 2.0.50727; InfoPath.2)',
        'Mozilla/4.0 (compatible; MSIE 6.0; X11; Linux x86_64; en) Opera 9.60',
        'Mozilla/5.0 (Macintosh; U; Intel Mac OS X 10_6_2; en-US) AppleWebKit/533.4 (KHTML, like Gecko) Chrome/5.0.366.0 Safari/533.4',
        'Mozilla/5.0 (Windows NT 6.0; U; en; rv:1.8.1) Gecko/20061208 Firefox/2.0.0 Opera 9.51'
    ]
    # requests expects headers as a dict, not a list of "key: value" items
    return {'User-Agent': random.choice(user_agent_list)}


def main():
    """
    Main function
    :return: None
    """
    print('''
____ _ ____
| _ \\(_)_ __/ ___| ___ __ _ _ __
| | | | | '__\\___ \\ / __/ _` | '_ \\
| |_| | | | ___) | (_| (_| | | | |
|____/|_|_| |____/ \\___\\__,_|_| |_|
Welcome to WebDirScan
Version:1.0 Author: %s
''' % __author__)
    parser = OptionParser('python WebDirScanner.py -u <Target URL> -f <Dictionary file name> [-t <Thread_count>]')
    parser.add_option('-u', '--url', dest='url', type='string', help='target url for scan')
    parser.add_option('-f', '--file', dest='file_name', type='string', help='dictionary filename')
    parser.add_option('-t', '--thread', dest='count', type='int', default=10, help='scan thread count')
    (options, args) = parser.parse_args()
    if options.url and options.file_name:
        dirscan = WebDirScan(options)
        dirscan.start()
        sys.exit(0)
    else:
        parser.print_help()
        sys.exit(1)


if __name__ == '__main__':
    main()
```

Usage: `python WebDirScanner.py -u <Target URL> -f <Dictionary file name> [-t <Thread_count>]`
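The progress line printed by `msg()` is just the finished count over the total, expressed as a percentage, and each hit is written to `result.html` as a link. Both can be pulled out into small pure functions, which also makes it easy to escape URLs before they go into HTML. This is only a sketch: `format_progress` and `result_link` are hypothetical names, and escaping with `html.escape` is an addition the original script does not do.

```python
import html


def format_progress(total, remaining):
    """Same numbers as msg(): finished count, total count, and percent complete."""
    done = total - remaining
    percent = done / total * 100 if total else 100.0  # an empty wordlist counts as done
    return "[*]%d Finished| %d All| Scan in %.1f%%" % (done, total, percent)


def result_link(url):
    """One line of result.html; escaping keeps quotes, '&' or '<' from breaking the markup."""
    safe = html.escape(url, quote=True)
    return '<a href="%s" target="_blank">%s</a><br>\n' % (safe, safe)


if __name__ == "__main__":
    print(format_progress(200, 50))
    print(result_link("http://www.xxx.cn/a?x=1&y=2"), end="")
```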

Afterword

This article is very basic, so experts, please go easy on me.
