Be Your Own Teacher: Improve the Performance of Convolutional Neural Networks via Self Distillation

Posted by 爆米花好美啊

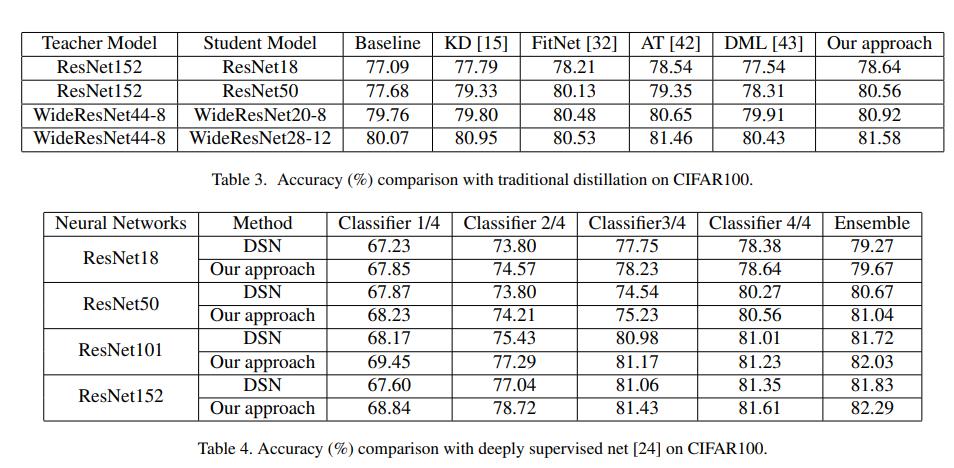

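- Loss Source 1: Cross entropy loss against the labels, applied to the classifier at every stage.

- Loss Source 2: KL loss, with the deepest classifier acting as the teacher for the shallower classifiers.

- Loss Source 3: L2 loss from hints, computed between the features of the deepest classifier and the features of each shallow classifier. The bottleneck performs feature adaptation so that the student's features have the same size as the teacher's.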
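The three loss sources above can be combined into one training objective. Below is a minimal pure-Python sketch, assuming a weighting of the form (1 − α)·CE + α·KL + λ·L2 for each shallow classifier, with the deepest classifier supervised by cross entropy alone. The parameter names `alpha`, `lam` and `temperature`, the linear `adapt` function standing in for the bottleneck, and all toy numbers are assumptions for illustration, not the authors' code. A real implementation would compute these terms on batched tensors in a deep learning framework.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable softmax; a temperature > 1 softens the distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits: Sequence[float], label: int) -> float:
    """Loss source 1: cross entropy of one classifier against the ground-truth label."""
    return -math.log(softmax(logits)[label])


def kl_divergence(p_teacher: Sequence[float], p_student: Sequence[float]) -> float:
    """Loss source 2: KL(teacher || student) between two probability vectors."""
    return sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0)


def adapt(feature: Sequence[float], weight: Sequence[Sequence[float]]) -> List[float]:
    """Hypothetical bottleneck: a linear map resizing a shallow feature to the deepest feature's size."""
    return [sum(w * x for w, x in zip(row, feature)) for row in weight]


def squared_l2(a: Sequence[float], b: Sequence[float]) -> float:
    """Loss source 3: squared L2 distance; the sizes must already match after adaptation."""
    if len(a) != len(b):
        raise ValueError("student and teacher features must have the same size")
    return sum((x - y) ** 2 for x, y in zip(a, b))


def self_distillation_loss(stage_logits, stage_features, adapters, label,
                           alpha, lam, temperature):
    """Total loss over all classifiers; the last entry of each list is the deepest stage (teacher).

    alpha, lam and temperature are assumed hyperparameters chosen by the user.
    """
    deep_logits, deep_feature = stage_logits[-1], stage_features[-1]
    teacher_soft = softmax(deep_logits, temperature)
    # The deepest classifier is trained with the label cross entropy only.
    total = cross_entropy(deep_logits, label)
    # Each shallow classifier gets all three loss sources.
    for logits, feature, weight in zip(stage_logits[:-1], stage_features[:-1], adapters):
        ce = cross_entropy(logits, label)
        kl = kl_divergence(teacher_soft, softmax(logits, temperature))
        hint = squared_l2(adapt(feature, weight), deep_feature)
        total += (1 - alpha) * ce + alpha * kl + lam * hint
    return total


# Toy example: three stages, 3 classes; shallow features are 2-dim, the deepest is 3-dim.
logits = [[2.0, 0.5, -1.0], [2.5, 0.2, -0.8], [3.0, 0.1, -1.2]]
features = [[1.0, 0.0], [0.5, 0.5], [0.2, 0.4, 0.6]]
adapters = [
    [[0.2, 0.0], [0.0, 0.4], [0.3, 0.3]],  # 3x2 matrix: 2-dim -> 3-dim
    [[0.4, 0.0], [0.0, 0.8], [0.6, 0.6]],
]
loss = self_distillation_loss(logits, features, adapters, label=0,
                              alpha=0.3, lam=0.03, temperature=3.0)
print(loss)
```

Setting `alpha=0` and `lam=0` reduces the objective to plain cross entropy on every classifier, which makes it easy to see how much the distillation and hint terms add on top of ordinary deep supervision.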
