Running WordCount in MapReduce, and Problems Encountered During Word Counting

Posted by fadeless_3



Environment: Eclipse on Ubuntu

These steps assume that Hadoop and HDFS are already configured; the MapReduce job runs on top of them.

Common problems:

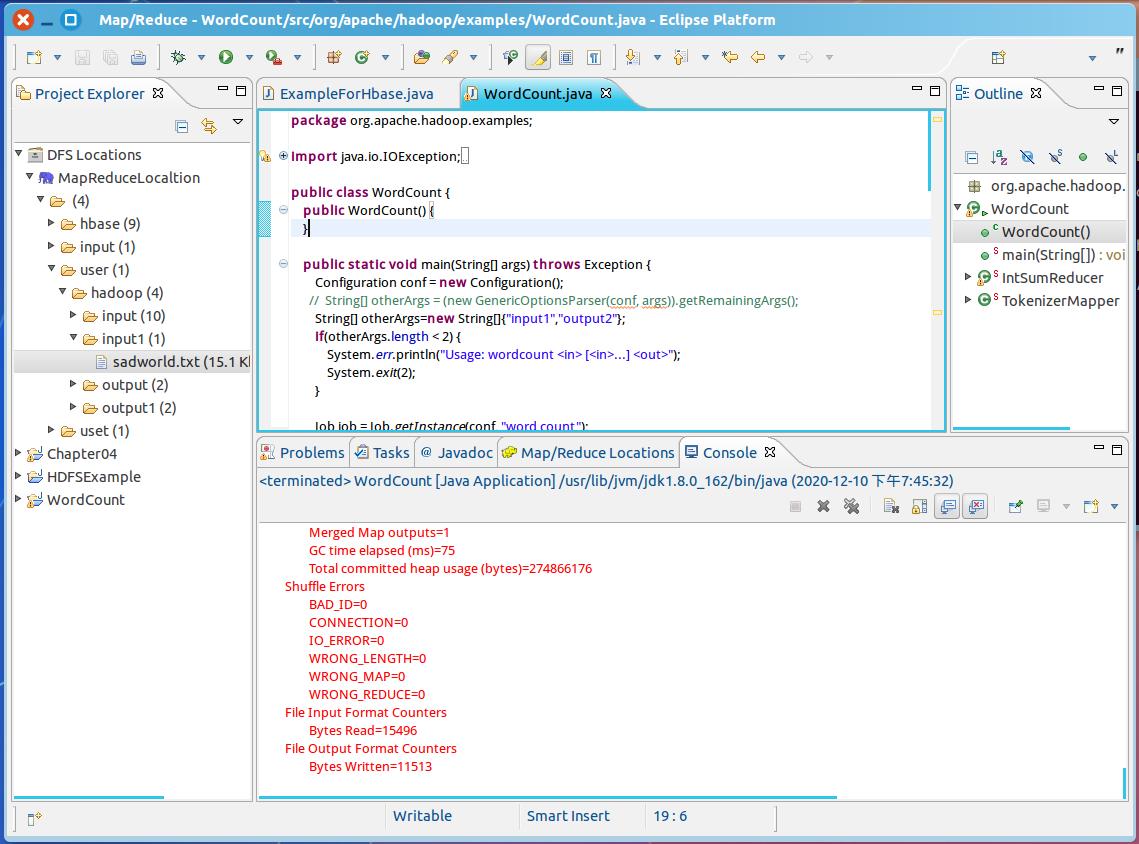

- After opening Eclipse, MapReduce Location is not visible. Possible causes: Hadoop has not been started, or the Eclipse files are not configured correctly.

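- WordCount.java has no compile errors but fails when run. A likely cause is that the output directory already exists, and Hadoop refuses to write into an existing output directory. Fix: use a different output directory, such as output2, or delete the existing output directory before rerunning.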

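- A red cross appears in the code with a message that the current JDK does not match what the code requires. Right-click the red cross and choose the compatibility option; the compiler is then set to be backward compatible. (Only a newer JDK can be made compatible with an older one, not the reverse.)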

- A wrong path, which prevents the file from being processed.

- After opening MapReduce Location in Eclipse, the file tree is empty. You can create the input directory from the command line (run this from /usr/local/hadoop after Hadoop has been started):

$ ./bin/hdfs dfs -mkdir -p /user/hadoop/input

Procedure:

- Start Hadoop (change to the /usr/local/hadoop directory first)

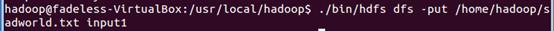

- Upload the local file /home/hadoop/sadworld.txt (the file whose words are to be counted) to HDFS

- Create a MapReduce project (containing a WordCount.java file)

Then write the WordCount.java program (here the input path is input1 and the output path is output2):

    package org.apache.hadoop.examples;

    import java.io.IOException;
    import java.util.StringTokenizer;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
    import org.apache.hadoop.util.GenericOptionsParser; // only needed by the commented-out line below

    public class WordCount {

        public static void main(String[] args) throws Exception {
            Configuration conf = new Configuration();
            // To take the paths from the command line instead of hard-coding them:
            // String[] otherArgs = (new GenericOptionsParser(conf, args)).getRemainingArgs();
            String[] otherArgs = new String[] {"input1", "output2"};
            if (otherArgs.length < 2) {
                System.err.println("Usage: wordcount <in> [<in>...] <out>");
                System.exit(2);
            }

            Job job = Job.getInstance(conf, "word count");
            job.setJarByClass(WordCount.class);
            job.setMapperClass(TokenizerMapper.class);
            job.setCombinerClass(IntSumReducer.class);
            job.setReducerClass(IntSumReducer.class);
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(IntWritable.class);

            // Every argument except the last one is an input path.
            for (int i = 0; i < otherArgs.length - 1; ++i) {
                FileInputFormat.addInputPath(job, new Path(otherArgs[i]));
            }
            // The last argument is the output directory; it must not exist yet.
            FileOutputFormat.setOutputPath(job, new Path(otherArgs[otherArgs.length - 1]));

            System.exit(job.waitForCompletion(true) ? 0 : 1);
        }

        // Sums all the counts emitted for one word.
        public static class IntSumReducer extends Reducer<Text, IntWritable, Text, IntWritable> {
            private final IntWritable result = new IntWritable();

            @Override
            public void reduce(Text key, Iterable<IntWritable> values, Context context)
                    throws IOException, InterruptedException {
                int sum = 0;
                for (IntWritable val : values) {
                    sum += val.get();
                }
                result.set(sum);
                context.write(key, result);
            }
        }

        // Splits each input line on whitespace and emits (word, 1) per token.
        public static class TokenizerMapper extends Mapper<Object, Text, Text, IntWritable> {
            private static final IntWritable one = new IntWritable(1);
            private final Text word = new Text();

            @Override
            public void map(Object key, Text value, Context context)
                    throws IOException, InterruptedException {
                StringTokenizer itr = new StringTokenizer(value.toString());
                while (itr.hasMoreTokens()) {
                    word.set(itr.nextToken());
                    context.write(word, one);
                }
            }
        }
    }
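
To see what the mapper and reducer compute without starting Hadoop, the same logic can be imitated in plain Java. This is only a rough local sketch, not part of the original program: the class `LocalWordCountSketch`, its method names, and the sample lines are made up for illustration, and the shuffle step that Hadoop performs between map and reduce is replaced by a simple in-memory grouping.

```java
import java.util.AbstractMap;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

// Hypothetical stand-in for the Hadoop job; uses only the Java standard library.
public class LocalWordCountSketch {

    // "Map" step: like TokenizerMapper, emit one (token, 1) pair
    // for every whitespace-separated token in the line.
    public static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        StringTokenizer itr = new StringTokenizer(line);
        while (itr.hasMoreTokens()) {
            pairs.add(new AbstractMap.SimpleEntry<>(itr.nextToken(), 1));
        }
        return pairs;
    }

    // "Reduce" step: like IntSumReducer, add up the values for each key.
    // A TreeMap keeps the words sorted, similar to Hadoop's sorted keys.
    public static Map<String, Integer> reduce(List<Map.Entry<String, Integer>> pairs) {
        Map<String, Integer> counts = new TreeMap<>();
        for (Map.Entry<String, Integer> pair : pairs) {
            counts.merge(pair.getKey(), pair.getValue(), Integer::sum);
        }
        return counts;
    }

    // Runs the map step over every line, then reduces all emitted pairs.
    public static Map<String, Integer> countWords(List<String> lines) {
        List<Map.Entry<String, Integer>> allPairs = new ArrayList<>();
        for (String line : lines) {
            allPairs.addAll(map(line));
        }
        return reduce(allPairs);
    }

    public static void main(String[] args) {
        // Made-up sample input, standing in for the contents of sadworld.txt.
        List<String> lines = Arrays.asList("sad world sad", "hello world");
        System.out.println(countWords(lines)); // prints {hello=1, sad=2, world=2}
    }
}
```

Because the tokens are split only on whitespace, punctuation stays attached to words ("world" and "world," count separately), which is also how the Hadoop version above behaves.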

- Run the WordCount program to count how many times each word occurs in sadworld.txt

- When the job finishes, check the word counts
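
With the default text output format, each result line holds a word and its count separated by a tab. As a rough sketch of how such lines could be inspected once they are copied to the local machine, the plain-Java snippet below parses them and picks the most frequent word. The class `WordCountOutputSketch`, its methods, and the sample lines are assumptions for illustration only; they are not taken from the original post or from a real run.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper for reading WordCount result lines of the form "word<TAB>count".
public class WordCountOutputSketch {

    // Parses result lines into a map, keeping the order of the file.
    public static Map<String, Integer> parse(List<String> lines) {
        Map<String, Integer> counts = new LinkedHashMap<>();
        for (String line : lines) {
            int tab = line.indexOf('\t');
            if (tab < 0) {
                continue; // skip blank or malformed lines
            }
            String word = line.substring(0, tab);
            int count = Integer.parseInt(line.substring(tab + 1).trim());
            counts.put(word, count);
        }
        return counts;
    }

    // Returns the word with the highest count (the first one on ties),
    // or null when the map is empty.
    public static String mostFrequent(Map<String, Integer> counts) {
        String best = null;
        int bestCount = Integer.MIN_VALUE;
        for (Map.Entry<String, Integer> e : counts.entrySet()) {
            if (e.getValue() > bestCount) {
                best = e.getKey();
                bestCount = e.getValue();
            }
        }
        return best;
    }

    public static void main(String[] args) {
        // Made-up sample lines in the "word<TAB>count" layout.
        List<String> lines = Arrays.asList("hello\t1", "sad\t2", "world\t2");
        Map<String, Integer> counts = parse(lines);
        String top = mostFrequent(counts);
        System.out.println(top + " appears " + counts.get(top) + " times");
    }
}
```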
