GPUNvidia CUDA 编程中级教程——数据复制与计算的重叠

Posted 从善若水

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了GPUNvidia CUDA 编程中级教程——数据复制与计算的重叠相关的知识,希望对你有一定的参考价值。

博主未授权任何人或组织机构转载博主任何原创文章,感谢各位对原创的支持!

博主链接

本人就职于国际知名终端厂商,负责modem芯片研发。

在5G早期负责终端数据业务层、核心网相关的开发工作,目前牵头6G算力网络技术标准研究。

博客内容主要围绕:

5G/6G协议讲解

算力网络讲解(云计算,边缘计算,端计算)

高级C语言讲解

Rust语言讲解

数据复制与计算的重叠

CUDA 流简介

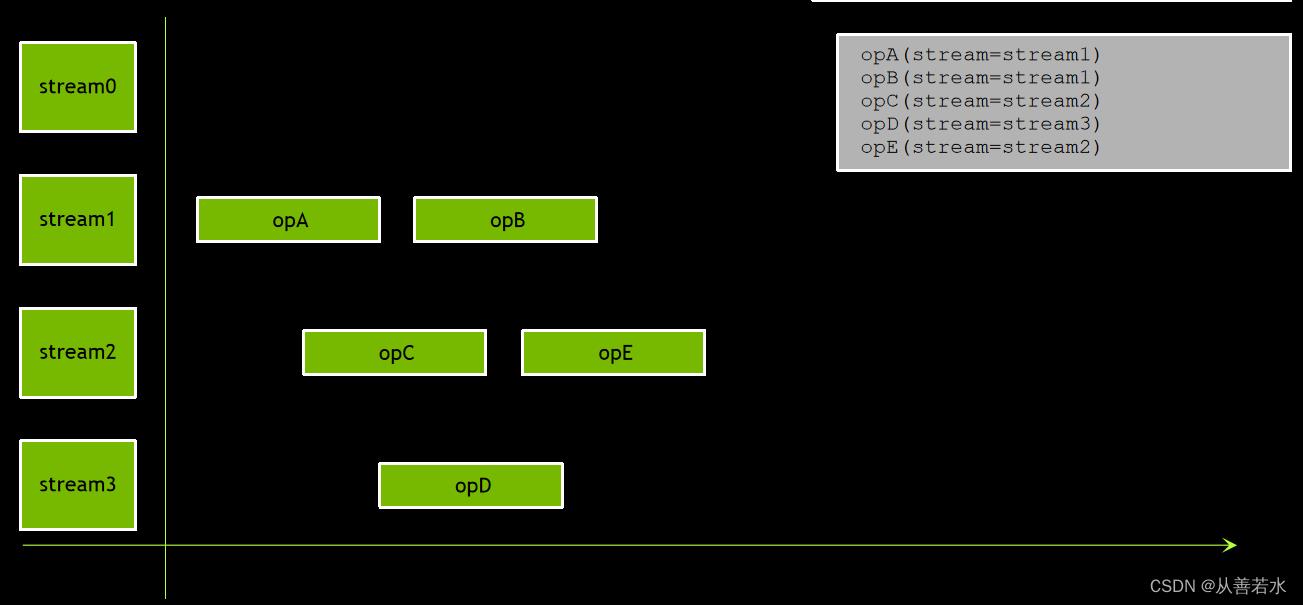

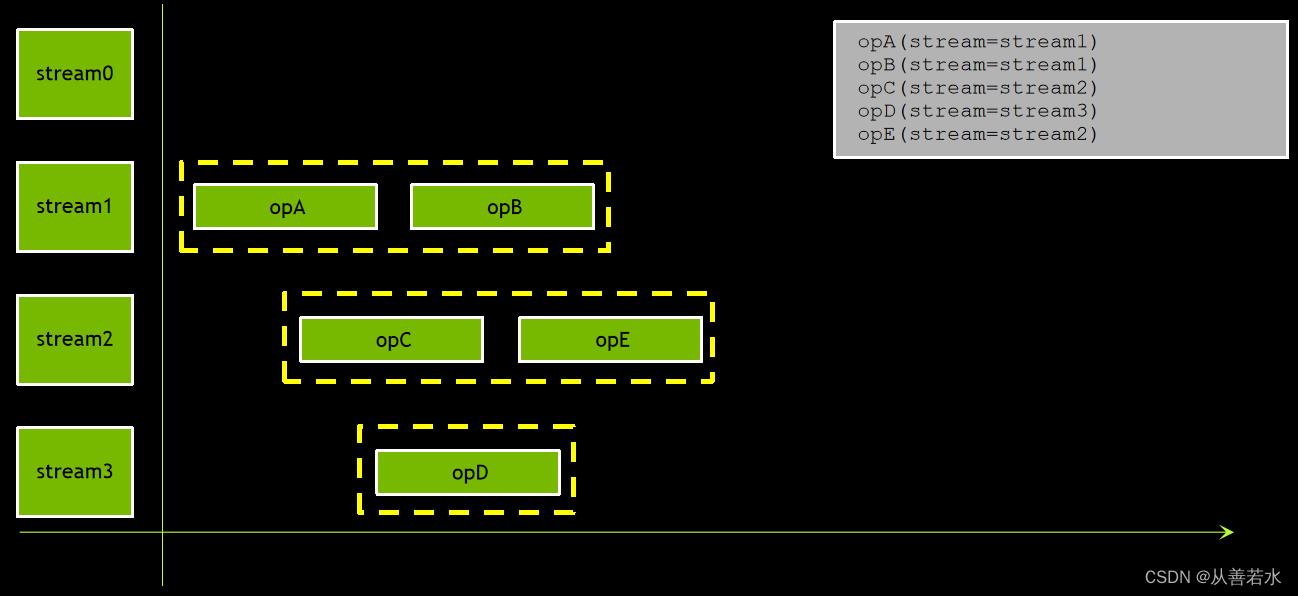

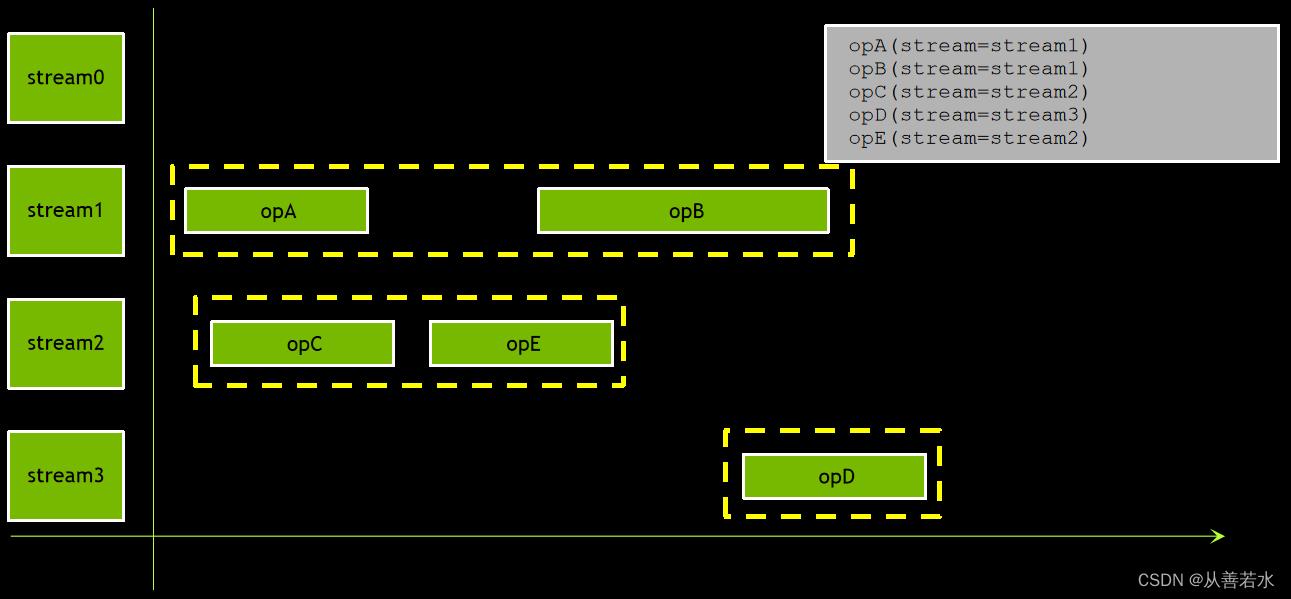

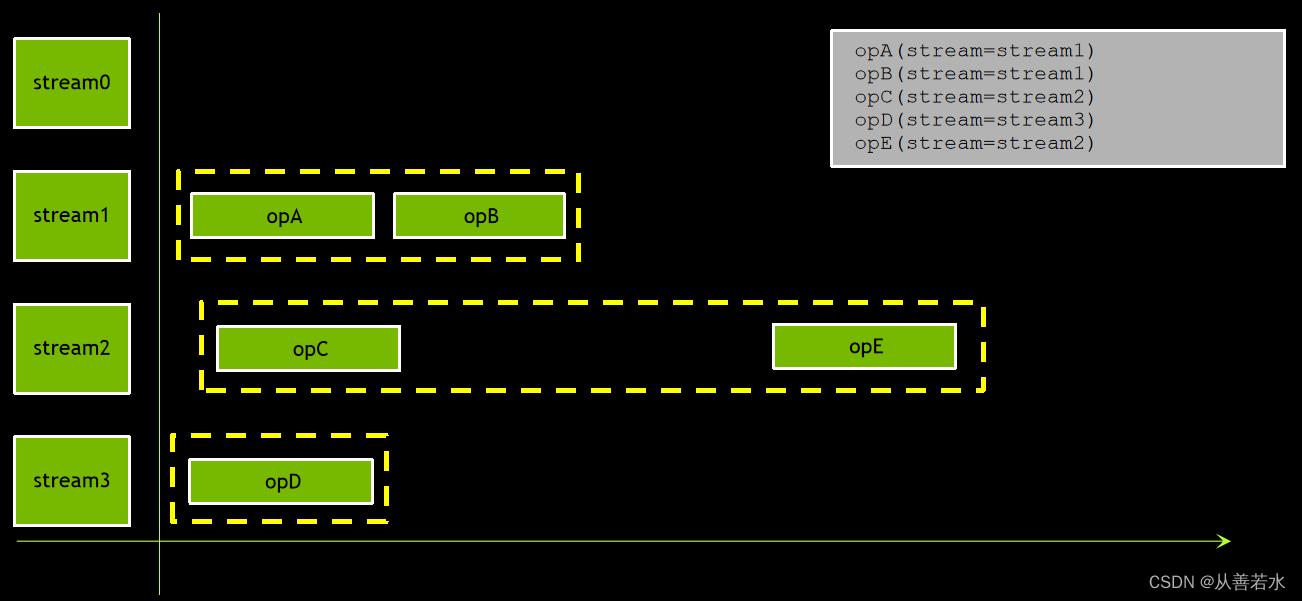

流是一个 GPU 操作序列,依发布顺序执行,CUDA 编程人员能创建并利用多个流。名为默认流的特殊流(此处标记为 stream0) ,其他所有流均称为非默认流(此处标记为 streams 1-3)。同一流中的操作将依发布顺序执行。然而,不同的非默认流中启动的操作并无固定的执行顺序。

非默认流的行为

- 发布到同一流中的操作将依发布顺序执行

- 不同非默认流中的操作顺序不固定,例如可能是下面的几种情况:

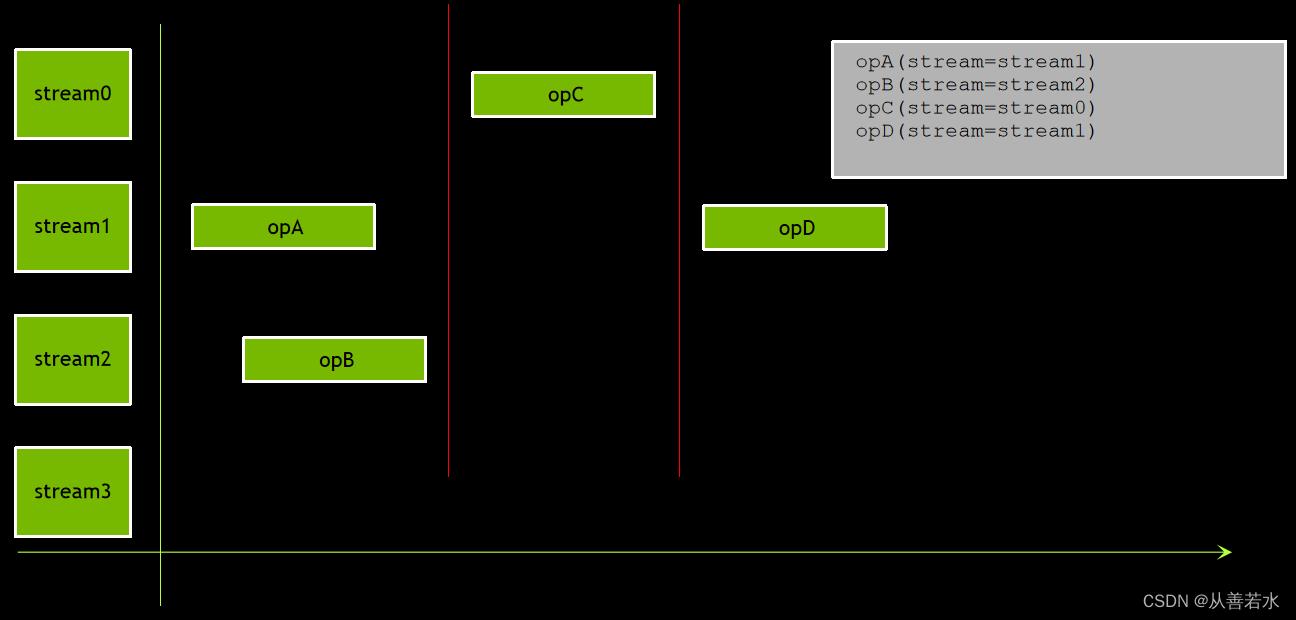

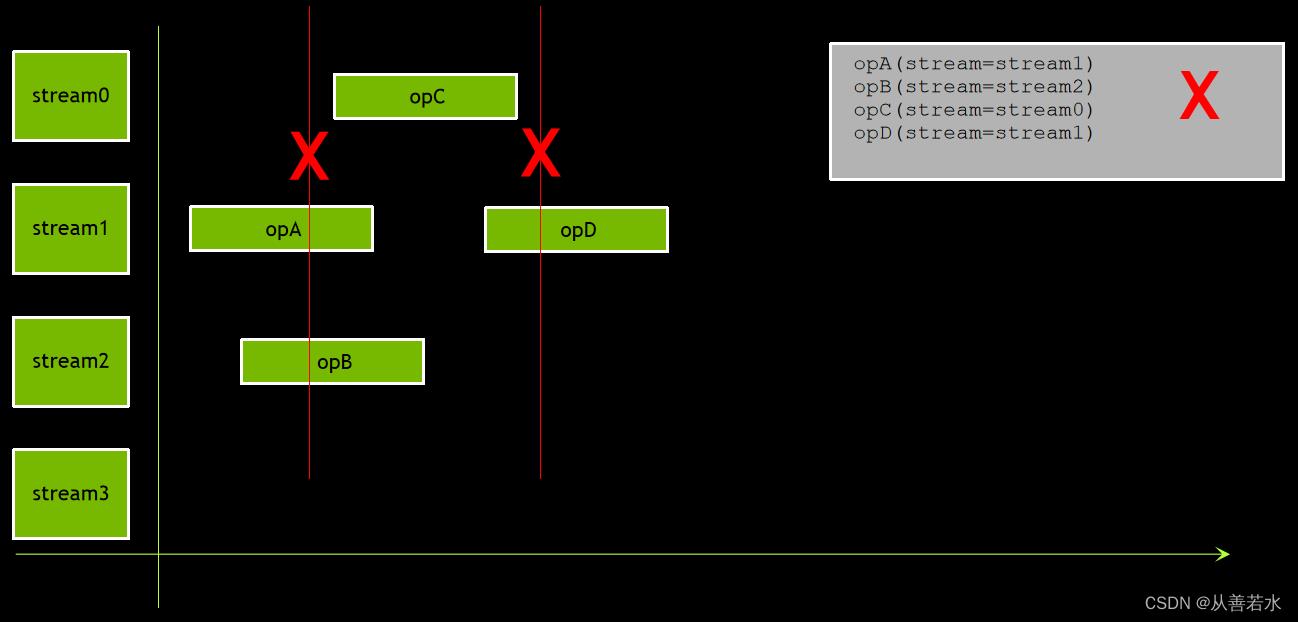

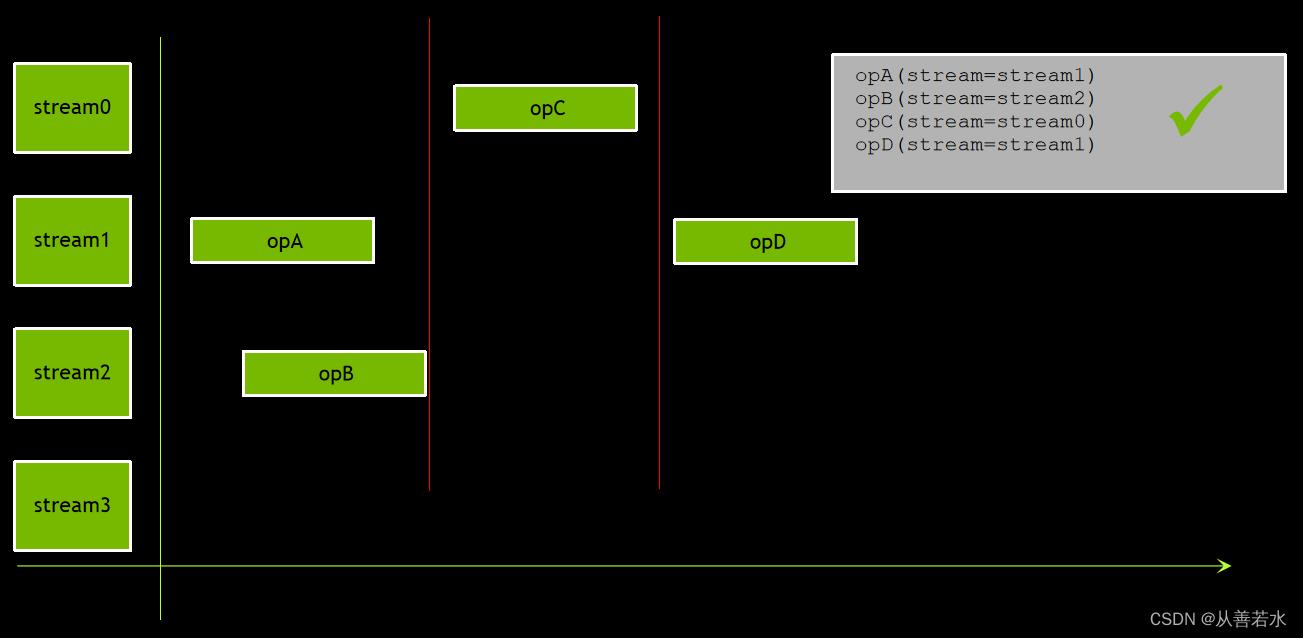

默认流的行为

默认流较为特殊。默认流中执行任何操作期间,任何非默认流中皆不可同时执行任何操作,默认流将等待非默认流全部执行完毕后再开始运行,而且在其执行完毕后,其他非默认流才能开始执行。

默认流与非默认流不会发生重叠。

CUDA 编程中的流

许多 CUDA 运行时函数都需指定流参数,参数默认值均为 0,即默认流。核函数一律在流中启动,启动后,核函数默认值为 0,即默认流,可使用第 4 个启动配置参数,在非默认流中启动核函数,

kernel<<<grid, block, shared_memory, stream>>>()

小练习:在非默认流中启动核函数

原始的code如下:

#include <cstdint>

#include <iostream>

#include "helpers.cuh"

#include "encryption.cuh"

void encrypt_cpu(uint64_t * data, uint64_t num_entries,

uint64_t num_iters, bool parallel=true)

#pragma omp parallel for if (parallel)

for (uint64_t entry = 0; entry < num_entries; entry++)

data[entry] = permute64(entry, num_iters);

__global__

void decrypt_gpu(uint64_t * data, uint64_t num_entries,

uint64_t num_iters)

const uint64_t thrdID = blockIdx.x*blockDim.x+threadIdx.x;

const uint64_t stride = blockDim.x*gridDim.x;

for (uint64_t entry = thrdID; entry < num_entries; entry += stride)

data[entry] = unpermute64(data[entry], num_iters);

bool check_result_cpu(uint64_t * data, uint64_t num_entries,

bool parallel=true)

uint64_t counter = 0;

#pragma omp parallel for reduction(+: counter) if (parallel)

for (uint64_t entry = 0; entry < num_entries; entry++)

counter += data[entry] == entry;

return counter == num_entries;

int main (int argc, char * argv[])

Timer timer;

Timer overall;

const uint64_t num_entries = 1UL << 26;

const uint64_t num_iters = 1UL << 10;

const bool openmp = true;

timer.start();

uint64_t * data_cpu, * data_gpu;

cudaMallocHost(&data_cpu, sizeof(uint64_t)*num_entries);

cudaMalloc (&data_gpu, sizeof(uint64_t)*num_entries);

timer.stop("allocate memory");

check_last_error();

timer.start();

encrypt_cpu(data_cpu, num_entries, num_iters, openmp);

timer.stop("encrypt data on CPU");

overall.start();

timer.start();

cudaMemcpy(data_gpu, data_cpu,

sizeof(uint64_t)*num_entries, cudaMemcpyHostToDevice);

timer.stop("copy data from CPU to GPU");

check_last_error();

timer.start();

decrypt_gpu<<<80*32, 64>>>(data_gpu, num_entries, num_iters);

timer.stop("decrypt data on GPU");

check_last_error();

timer.start();

cudaMemcpy(data_cpu, data_gpu,

sizeof(uint64_t)*num_entries, cudaMemcpyDeviceToHost);

timer.stop("copy data from GPU to CPU");

overall.stop("total time on GPU");

check_last_error();

timer.start();

const bool success = check_result_cpu(data_cpu, num_entries, openmp);

std::cout << "STATUS: test "

<< ( success ? "passed" : "failed")

<< std::endl;

timer.stop("checking result on CPU");

timer.start();

cudaFreeHost(data_cpu);

cudaFree (data_gpu);

timer.stop("free memory");

check_last_error();

解决方案如下:

#include <cstdint>

#include <iostream>

#include "helpers.cuh"

#include "encryption.cuh"

void encrypt_cpu(uint64_t * data, uint64_t num_entries,

uint64_t num_iters, bool parallel=true)

#pragma omp parallel for if (parallel)

for (uint64_t entry = 0; entry < num_entries; entry++)

data[entry] = permute64(entry, num_iters);

__global__

void decrypt_gpu(uint64_t * data, uint64_t num_entries,

uint64_t num_iters)

const uint64_t thrdID = blockIdx.x*blockDim.x+threadIdx.x;

const uint64_t stride = blockDim.x*gridDim.x;

for (uint64_t entry = thrdID; entry < num_entries; entry += stride)

data[entry] = unpermute64(data[entry], num_iters);

bool check_result_cpu(uint64_t * data, uint64_t num_entries,

bool parallel=true)

uint64_t counter = 0;

#pragma omp parallel for reduction(+: counter) if (parallel)

for (uint64_t entry = 0; entry < num_entries; entry++)

counter += data[entry] == entry;

return counter == num_entries;

int main (int argc, char * argv[])

Timer timer;

Timer overall;

const uint64_t num_entries = 1UL << 26;

const uint64_t num_iters = 1UL << 10;

const bool openmp = true;

timer.start();

uint64_t * data_cpu, * data_gpu;

cudaMallocHost(&data_cpu, sizeof(uint64_t)*num_entries);

cudaMalloc (&data_gpu, sizeof(uint64_t)*num_entries);

timer.stop("allocate memory");

check_last_error();

timer.start();

encrypt_cpu(data_cpu, num_entries, num_iters, openmp);

timer.stop("encrypt data on CPU");

overall.start();

timer.start();

cudaMemcpy(data_gpu, data_cpu,

sizeof(uint64_t)*num_entries, cudaMemcpyHostToDevice);

timer.stop("copy data from CPU to GPU");

check_last_error();

timer.start();

// Create non-default stream.

cudaStream_t stream;

cudaStreamCreate(&stream);

// Launch kernel in non-default stream.

decrypt_gpu<<<80*32, 64, 0, stream>>>(data_gpu, num_entries, num_iters);

// Destroy non-default stream.

cudaStreamDestroy(stream);

timer.stop("decrypt data on GPU");

check_last_error();

timer.start();

cudaMemcpy(data_cpu, data_gpu,

sizeof(uint64_t)*num_entries, cudaMemcpyDeviceToHost);

timer.stop("copy data from GPU to CPU");

overall.stop("total time on GPU");

check_last_error();

timer.start();

const bool success = check_result_cpu(data_cpu, num_entries, openmp);

std::cout << "STATUS: test "

<< ( success ? "passed" : "failed")

<< std::endl;

timer.stop("checking result on CPU");

timer.start();

cudaFreeHost(data_cpu);

cudaFree (data_gpu);

timer.stop("free memory");

check_last_error();

重点函数讲解:

- 创建非默认流:要创建新的非默认流,请向 cudaStreamCreate 传递一个 cudaStream_t 指针:

cudaStream_t stream; cudaStreamCreate(&stream); - 在非默认流中启动核函数:要在非默认流中启动核函数,请传递一个非默认流标识符作为该函数的第 4 个启动配置参数。由于核函数的第 3 个启动配置参数定义了动态分配的共享内存,因此如果您不打算修改其默认值,则可能需向其传递 0(其默认值):

cudaStream_t stream; cudaStreamCreate(&stream); kernel<<<grid, blocks, 0, stream>>>(); - 销毁非默认流:完成相关操作后,您可以向 cudaStreamDestroy 传递一个非默认流标识符来销毁非默认流:

cudaStream_t stream; cudaStreamCreate(&stream); kernel<<<grid, blocks, 0, stream>>>(); cudaStreamDestroy(stream);

非默认流中的内存复制

分配固定内存

- 为了要异步复制数据,CUDA 需对其位置作出假设。典型的主机内存使用 分页技术,这样除了 RAM 之外,数据还可存储在某个备份存储设备上(如物理磁盘)。

- 固定(或锁页)内存会绕过主机操作系统分页,在 RAM 中存储所分配的内存。在非默认流中异步传输内存时,必须使用锁页(或固定)内存。

- 固定内存会阻止将数据存储在某个备份存储设备上,因此是一个受限资源,请务必当心不要过度使用它。

固定主机内存通过 cudaMallocHost 进行分配:

const uint64_t num_entries = 1UL << 26;

uint64_t *data_cpu;

cudaMallocHost(&data_cpu, sizeof(uint64_t)*num_entries);

非默认流中主机到设备的内存传输

通过使用类似于 cudaMemcpy 的 cudaMemcpyAsync,您可在非默认流中将固定主机内存传输到 GPU 显存,但需提供第 5 个流标识符参数:

cudaStream_t stream;

cudaStreamCreate(&stream);

const uint64_t num_entries = 1UL << 26;

uint64_t *data_cpu, *data_gpu;

cudaMallocHost(&data_cpu, sizeof(uint64_t)*num_entries);

cudaMalloc(&data_gpu, sizeof(uint64_t)*num_entries);

cudaMemcpyAsync(data_gpu,

data_cpu,

sizeof(uint64_t)*num_entries,

cudaMemcpyHostToDevice,

stream);

非默认流中设备到主机的内存传输

通过使用 cudaMemcpyAsync,您也可在非默认流中将 GPU 显存传输到固定主机内存:

// Assume data is already present on the GPU, and that `data_cpu` is pinned.

cudaMemcpyAsync(data_cpu,

data_gpu,

sizeof(uint64_t)*num_entries,

cudaMemcpyDeviceToHost,

stream);

与所有现代 GPU 一样,具有 2 个或更多复制引擎的 GPU 设备可以同时在不同的非默认流中执行主机到设备和设备到主机的内存传输。

流同步

使用cudaStreamSynchronize可导致主机代码阻塞,直到给定的流完成其操作为止。 当需要保证完成流工作时,例如,当主机代码需要等待非默认流中的异步内存传输完成时,应使用流同步:

// Assume data is already present on the GPU, and that `data_cpu` is pinned.

cudaMemcpyAsync(data_cpu,

data_gpu,

sizeof(uint64_t)*num_entries,

cudaMemcpyDeviceToHost,

stream);

// Block until work (in this case memory transfer to host) in `stream` is complete.

cudaStreamSyncronize(stream);

// `data_cpu` transfer to host via `stream` is now guaranteed to be complete.

checkResultCpu(data_cpu);

小练习:在非默认流中执行内存传输

原始code如下:

#include <cstdint>

#include <iostream>

#include "helpers.cuh"

#include "encryption.cuh"

void encrypt_cpu(uint64_t * data, uint64_t num_entries,

uint64_t num_iters, bool parallel=true)

#pragma omp parallel for if (parallel)

for (uint64_t entry = 0; entry < num_entries; entry++)

data[entry] = permute64(entry, num_iters);

__global__

void decrypt_gpu(uint64_t * data, uint64_t num_entries,

uint64_t num_iters)

const uint64_t thrdID = blockIdx.x*blockDim.x+threadIdx.x;

const uint64_t stride = blockDim.x*gridDim.x;

for (uint64_t entry = thrdID; entry < num_entries; entry += stride)

data[entry] = unpermute64(data[entry], num_iters);

bool check_result_cpu(uint64_t * data, uint64_t num_entries,

bool parallel=true)

uint64_t counter = 0;

#pragma omp parallel for reduction(+: counter) if (parallel)

for (uint64_t entry = 0; entry < num_entries; entry++)

counter += data[entry] == entry;

return counter == num_entries;

int main (int argc, char * argv[])

Timer timer;

Timer overall;

const uint64_t num_entries = 1UL << 26;

const uint64_t num_iters = 1UL << 10;

const bool openmp = true;

timer.start();

uint64_t * data_cpu, * data_gpu;

cudaMallocHost(&data_cpu, sizeof(uint64_t)*num_entries);

cudaMalloc (&data_gpu, sizeof(uint64_t)*num_entries);

timer.stop("allocate memory");

check_last_error();

timer.start();

encrypt_cpu(data_cpu, num_entries, num_iters, openmp);

timer.stop("encrypt data on CPU");

overall.start();

timer.start();

cudaMemcpy(data_gpu, data_cpu,

sizeof(uint64_t)*num_entries, cudaMemcpyHostToDevice);

timer.stop("copy data from CPU to GPU");

check_last_error();

timer.start();

decrypt_gpu<<<80*32, 64>>>(data_gpu, num_entries, num_iters);

timer.stop("decrypt data on GPU");

check_last_error();

timer.start();

cudaMemcpy(data_cpu, data_gpu,

sizeof(uint64_t)*num_entries, cudaMemcpyDeviceToHost);

timer.stop("copy data from GPU to CPU");

overall以上是关于GPUNvidia CUDA 编程中级教程——数据复制与计算的重叠的主要内容,如果未能解决你的问题,请参考以下文章