Integrating video surveillance feeds with UE4

Posted by 北京天地创科技有限公司 (Beijing Tiandichuang Technology Co., Ltd.)


Preface: this article was compiled by the editors of 小常识网 (cha138.com). It covers combining video surveillance feeds with UE4, and we hope it is useful to you.

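Our company built an exhibition hall project in which the client wanted surveillance video brought into the application, 600 feeds of it. At first I had no idea where to start. Fortunately UE4 provides an official example, and with a few extra threads it works. Enough talk, here's the code.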

First, hook up the OpenCV plugin. Integrating other vendors' SDKs will be covered in the next article.

Usage in the Actor:

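If the main thread decodes the video, startup stalls for a while. A single camera can sometimes freeze things for half a minute, and six are even worse, so I simply gave each of the six streams its own thread.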

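    // ACamReader declarations (excerpt)
    UFUNCTION(BlueprintCallable, Category = Webcam)
    void OpenWebCamera(TArray<FString> urlAr);

    UFUNCTION(BlueprintCallable, Category = Webcam)
    void OpenLocalCamera(TArray<int32> IDArr);

    UFUNCTION(BlueprintCallable, Category = Webcam)
    void CloseCamera();

    // Implemented below but not declared in the original excerpt
    UTexture2D* GetTexture2D(int Index);
    TArray<UTexture2D*> GetAllTexture2D();
    void InitStream();

    TArray<RSTDataHandle*> Camer1RSTDataHandleArr;
    TArray<FRunnableThread*> m_RecvThreadArr;

    virtual void BeginDestroy() override;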

    // Create one receive thread per RTSP URL
    void ACamReader::OpenWebCamera(TArray<FString> urlAr)
    {
        // The original loop hard-coded 6 iterations; use the number of URLs actually passed in,
        // otherwise urlAr[i] goes out of bounds when fewer than 6 URLs are given
        for (int32 i = 0; i < urlAr.Num(); i++)
        {
            RSTDataHandle* tempSTDataHandle = new RSTDataHandle();
            Camer1RSTDataHandleArr.Add(tempSTDataHandle);

            FRunnableThread* tempRunnableThread = FRunnableThread::Create(
                new FReceiveThread(tempSTDataHandle, urlAr[i]), TEXT("RecvThread"),
                128 * 1024, TPri_AboveNormal, FPlatformAffinity::GetPoolThreadMask());
            m_RecvThreadArr.Add(tempRunnableThread);
        }
    }

    void ACamReader::OpenLocalCamera(TArray<int32> IDArr)
    {
        // Guard against IDArr being shorter than the handle array
        const int32 Count = FMath::Min(Camer1RSTDataHandleArr.Num(), IDArr.Num());
        for (int32 i = 0; i < Count; i++)
        {
            Camer1RSTDataHandleArr[i]->OpenLocalCamera(IDArr[i]);
        }
    }

    void ACamReader::CloseCamera()
    {
        for (RSTDataHandle* Handle : Camer1RSTDataHandleArr)
        {
            Handle->CloseCamera();
        }
    }

    // Get the texture holding the latest frame of stream Index, or nullptr if it does not exist yet
    UTexture2D* ACamReader::GetTexture2D(int Index)
    {
        if (Camer1RSTDataHandleArr.IsValidIndex(Index))
        {
            return Camer1RSTDataHandleArr[Index]->GetThisUTexture2D();
        }
        return nullptr;
    }

    TArray<UTexture2D*> ACamReader::GetAllTexture2D()
    {
        // Build a fresh array on every call; the original appended to the member CurrenTextureArr,
        // which therefore grew (with duplicates) every time this was called
        TArray<UTexture2D*> Textures;
        for (RSTDataHandle* Handle : Camer1RSTDataHandleArr)
        {
            if (UTexture2D* Tex = Handle->GetThisUTexture2D())
            {
                Textures.Add(Tex);
            }
        }
        return Textures;
    }

    // (The original alternates between ACamReader and AWebcamReader; one class name is used throughout here.)
    void ACamReader::InitStream()
    {
        for (RSTDataHandle* Handle : Camer1RSTDataHandleArr)
        {
            Handle->InitStream();
        }
    }

    // This part crashes randomly when run in the Editor; it's best to guard it with a macro and test under DebugGame.
    // What is shown here is old code, so I won't post the guarded version.

    void ACamReader::BeginDestroy()
    {
        Super::BeginDestroy();

        // Stop and join the receive threads before deleting the handles they tick; the original deleted
        // the handles first, so a still-running thread could call Tick() on a freed handle
        for (FRunnableThread*& Thread : m_RecvThreadArr)
        {
            if (Thread)
            {
                Thread->Kill(true); // calls FReceiveThread::Stop() and waits for Run() to return
                delete Thread;
                Thread = nullptr;
            }
        }
        m_RecvThreadArr.Reset();

        for (RSTDataHandle*& Handle : Camer1RSTDataHandleArr)
        {
            delete Handle;
            Handle = nullptr;
        }
        Camer1RSTDataHandleArr.Reset();
    }
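Safe teardown comes down to ordering: signal every receive thread to stop, wait for it to finish, and only then free the RSTDataHandle it ticks. The plain C++ sketch below models that ordering without the engine. `FrameSource` and `StreamOwner` are hypothetical names, and `std::thread` plus `std::atomic<bool>` stand in for FRunnableThread and the runnable's stop flag.

```cpp
#include <atomic>
#include <cassert>
#include <chrono>
#include <cstddef>
#include <memory>
#include <thread>
#include <vector>

// Hypothetical stand-in for RSTDataHandle: something the worker thread touches every tick.
struct FrameSource {
    std::atomic<int> ticks{0};
    void tick() { ticks.fetch_add(1); }
};

// Hypothetical stand-in for the actor: owns one source and one worker thread per stream.
class StreamOwner {
public:
    void start(int streamCount) {
        assert(streamCount >= 0);
        for (int i = 0; i < streamCount; ++i) {
            sources_.push_back(std::make_unique<FrameSource>());
            FrameSource* src = sources_.back().get();  // heap object, stable across vector growth
            workers_.emplace_back([this, src] {
                while (!stopping_.load()) {
                    src->tick();
                    std::this_thread::sleep_for(std::chrono::milliseconds(1));
                }
            });
        }
    }

    // Signal stop, join every worker, and only then free the sources.
    void shutdown() {
        stopping_.store(true);
        for (std::thread& t : workers_) {
            if (t.joinable()) t.join();
        }
        workers_.clear();
        sources_.clear();  // safe now: no thread can still be inside tick()
    }

    ~StreamOwner() { shutdown(); }

    std::size_t sourceCount() const { return sources_.size(); }
    std::size_t workerCount() const { return workers_.size(); }

private:
    std::atomic<bool> stopping_{false};
    std::vector<std::unique_ptr<FrameSource>> sources_;
    std::vector<std::thread> workers_;
};
```

If shutdown() cleared `sources_` before joining, a worker could still be inside `tick()` on a freed object. That is the window the ordering closes.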

4. Next, let's look at what the thread does.

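    // Construct the runnable with its data handle and stream URL
    FReceiveThread::FReceiveThread(RSTDataHandle* sTDataHandle, FString url)
        : STDataHandle(sTDataHandle), URL(url)
    {
    }

    FReceiveThread::~FReceiveThread()
    {
        stopping = true;
        // The original also called delete STDataHandle here. The actor already deletes every handle in
        // BeginDestroy, so deleting it here as well would be a double delete if this runnable is destroyed.
        STDataHandle = nullptr;
    }

    bool FReceiveThread::Init()
    {
        stopping = false;
        return true;
    }

    // FRunnableThread::Kill() calls Stop(); unless Stop() sets the flag, Kill(true) waits forever.
    // (Stop() is not shown in the original excerpt; stopping should be a thread-safe flag such as FThreadSafeBool.)
    void FReceiveThread::Stop()
    {
        stopping = true;
    }

    // Update the data
    uint32 FReceiveThread::Run()
    {
        STDataHandle->OpenWebCamera(URL);

        // Receive packets until the stop flag is set
        while (!stopping)
        {
            STDataHandle->Tick(0.01f);
            // Sleep briefly, otherwise CPU usage gets too high
            FPlatformProcess::Sleep(0.01f);
        }
        return 1;
    }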
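The FRunnable lifecycle can be modelled with standard threads to show why Stop() must set the flag that Run() polls. FRunnableThread runs Init() and then Run() on the new thread, and Kill() calls Stop() from outside. Below is a minimal sketch. `ReceiveLoop` is a hypothetical name, and `std::atomic<bool>` replaces a thread-safe UE flag such as FThreadSafeBool.

```cpp
#include <atomic>
#include <cassert>
#include <chrono>
#include <cstdint>
#include <thread>

// Hypothetical engine-free model of FReceiveThread: init() and run() on the worker, stop() from outside.
class ReceiveLoop {
public:
    bool init() { return true; }  // the stop flag is NOT reset here, see the member below

    std::uint32_t run() {
        while (!stopping_.load()) {
            ++ticks_;  // stands in for STDataHandle->Tick(0.01f)
            // Give the core back between polls, like FPlatformProcess::Sleep(0.01f)
            std::this_thread::sleep_for(std::chrono::milliseconds(10));
        }
        return 1;
    }

    void stop() { stopping_.store(true); }  // what FRunnableThread::Kill() triggers

    long ticks() const { return ticks_.load(); }

private:
    // Initialised once here: if stop() ran before init() and init() reset the flag,
    // the stop request would be lost and run() would never return.
    std::atomic<bool> stopping_{false};
    std::atomic<long> ticks_{0};
};
```

Usage: start a `std::thread` that calls `init()` then `run()`, call `stop()` from the owner, then `join()`. This works even if `stop()` lands before `run()` has started.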

5. The thread's job is simple. Next, let's see how the data class processes the data.

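    // Fill out your copyright notice in the Description page of Project Settings.

    #include "RSTDataHandle.h"

    RSTDataHandle::RSTDataHandle()
    {
        VideoTexture = nullptr;
        VideoUpdateTextureRegion = nullptr;
        CameraID = 0;
        isStreamOpen = false;
        VideoSize = FVector2D(0, 0);
        ShouldResize = false;
        ResizeDeminsions = FVector2D(320, 240);
        stream = cv::VideoCapture();
        frame = cv::Mat();
        Mutex = new FCriticalSection();
    }

    RSTDataHandle::~RSTDataHandle()
    {
        // The destructor is empty in the original; at least release the capture and the mutex allocated above
        if (stream.isOpened())
        {
            stream.release();
        }
        delete Mutex;
        Mutex = nullptr;
    }

    // Simple, right? That's all it does. Everything else is already done by UE's official plugin; you just call it.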

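    void RSTDataHandle::Tick(float DeltaTime)
    {
        UpdateFrame();
        DoProcessing();
        UpdateTexture();
        OnNextVideoFrame();
    }

    // Per-frame hook (empty here)
    void RSTDataHandle::OnNextVideoFrame()
    {
    }

    void RSTDataHandle::UpdateFrame()
    {
        if (stream.isOpened())
        {
            stream.read(frame);
            if (ShouldResize)
            {
                cv::resize(frame, frame, size);
            }
        }
        else
        {
            isStreamOpen = false;
        }
    }

    // Hook for custom image processing on frame (empty here)
    void RSTDataHandle::DoProcessing()
    {
    }

    void RSTDataHandle::UpdateTexture()
    {
        const int Width = (int)VideoSize.X;
        const int Height = (int)VideoSize.Y;
        // The copy assumes an 8-bit, 3-channel (BGR), continuous Mat of exactly the texture's size;
        // skip any frame that doesn't match instead of reading past the end of frame.data
        if (isStreamOpen && frame.data && frame.type() == CV_8UC3 && frame.isContinuous()
            && frame.cols == Width && frame.rows == Height)
        {
            // Copy Mat data to the Data array (alpha stays 255 from Data.Init)
            for (int y = 0; y < Height; y++)
            {
                for (int x = 0; x < Width; x++)
                {
                    int i = x + (y * Width);
                    Data[i].B = frame.data[i * 3 + 0];
                    Data[i].G = frame.data[i * 3 + 1];
                    Data[i].R = frame.data[i * 3 + 2];
                }
            }

            // Update texture 2D
            UpdateTextureRegions(VideoTexture, (int32)0, (uint32)1, VideoUpdateTextureRegion, (uint32)(4 * Width), (uint32)4, (uint8*)Data.GetData(), false);
        }
    }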
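The copy loop in UpdateTexture() assumes a continuous 3-channel BGR buffer. Below is an engine-free sketch of the same BGR to BGRA conversion that also honours a row stride, since OpenCV rows can be padded (the stride is what `Mat::step` reports). It assumes the destination is 4-byte B, G, R, A, which is the layout FColor uses on little-endian platforms and matches the default `PF_B8G8R8A8` transient texture. `BgraPixel` and `packBgrToBgra` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical 4-byte pixel laid out B, G, R, A.
struct BgraPixel {
    std::uint8_t b, g, r, a;
};

// Convert a packed 8-bit BGR image into BGRA with opaque alpha.
// rowStride is the byte distance between row starts (>= width * 3, larger if rows are padded).
std::vector<BgraPixel> packBgrToBgra(const std::uint8_t* src, int width, int height, std::size_t rowStride) {
    assert(src != nullptr && width >= 0 && height >= 0);
    assert(rowStride >= static_cast<std::size_t>(width) * 3);
    std::vector<BgraPixel> dst(static_cast<std::size_t>(width) * height);
    for (int y = 0; y < height; ++y) {
        const std::uint8_t* row = src + static_cast<std::size_t>(y) * rowStride;
        for (int x = 0; x < width; ++x) {
            const std::uint8_t* px = row + static_cast<std::size_t>(x) * 3;
            dst[static_cast<std::size_t>(y) * width + x] = BgraPixel{px[0], px[1], px[2], 255};
        }
    }
    return dst;
}
```

With an OpenCV frame you would pass `frame.data`, `frame.cols`, `frame.rows` and `frame.step[0]`. Then the loop no longer depends on `isContinuous()`.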

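    void RSTDataHandle::OpenWebCamera(FString url)
    {
        // TCHAR_TO_UTF8 replaces the article's TCHAR2STRING helper, which is not shown
        const std::string StreamUrl(TCHAR_TO_UTF8(*url));

        // The original retried by calling OpenWebCamera(url) recursively with no limit, which overflows the
        // stack if a camera stays offline. Retry in a loop instead (10 attempts / 1 s are illustrative values).
        for (int32 Attempt = 0; Attempt < 10; ++Attempt)
        {
            stream.open(StreamUrl);
            if (stream.isOpened())
            {
                InitStream();
                UE_LOG(LogTemp, Warning, TEXT("stream opened successfully"));
                return;
            }
            UE_LOG(LogTemp, Warning, TEXT("stream failed to open, retrying"));
            FPlatformProcess::Sleep(1.0f);
        }
    }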
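The original OpenWebCamera() retried by calling itself with no limit, so a camera that stays offline eventually overflows the stack. Here is the bounded-retry idea on its own as plain C++. `openWithRetry` is a hypothetical helper, and the attempt counts and delays are illustrative, not values from the project.

```cpp
#include <cassert>
#include <chrono>
#include <functional>
#include <thread>

// Call tryOpen() up to maxAttempts times, sleeping `delay` between failed attempts.
// Returns true as soon as one attempt succeeds; uses a loop, so stack depth stays constant.
bool openWithRetry(const std::function<bool()>& tryOpen, int maxAttempts, std::chrono::milliseconds delay) {
    assert(maxAttempts > 0);
    for (int attempt = 1; attempt <= maxAttempts; ++attempt) {
        if (tryOpen()) {
            return true;
        }
        if (attempt < maxAttempts) {
            std::this_thread::sleep_for(delay);
        }
    }
    return false;
}
```

In the engine code, `tryOpen` would wrap `stream.open(...)` followed by `stream.isOpened()`. Also checking the runnable's stop flag between attempts would let `Kill()` interrupt the wait.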

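    void RSTDataHandle::OpenLocalCamera(int32 ID)
    {
        CameraID = ID;
        stream.open(CameraID);
        InitStream();
    }

    void RSTDataHandle::CloseCamera()
    {
        if (stream.isOpened())
        {
            stream.release();
        }
    }

    UTexture2D* RSTDataHandle::GetThisUTexture2D()
    {
        FScopeLock ScopeLock(Mutex);
        return VideoTexture;
    }

    void RSTDataHandle::InitStream()
    {
        if (stream.isOpened() && !isStreamOpen)
        {
            // Initialize stream
            isStreamOpen = true;
            // Set the resize target before the first UpdateFrame(); the original set it afterwards,
            // so the first cv::resize() used an uninitialized size
            size = cv::Size(ResizeDeminsions.X, ResizeDeminsions.Y);
            UpdateFrame();
            // Take the size from the (possibly resized) frame so the texture matches what UpdateTexture() copies
            VideoSize = FVector2D(frame.cols, frame.rows);

            // Note: via OpenWebCamera() this runs on the receive thread. Creating UObjects such as transient
            // textures off the game thread is unsafe in UE4 and may be one cause of the Editor crashes mentioned above.
            VideoTexture = UTexture2D::CreateTransient(VideoSize.X, VideoSize.Y);
            VideoTexture->UpdateResource();
            VideoUpdateTextureRegion = new FUpdateTextureRegion2D(0, 0, 0, 0, VideoSize.X, VideoSize.Y);

            // Initialize data array
            Data.Init(FColor(0, 0, 0, 255), VideoSize.X * VideoSize.Y);

            // Do first frame
            DoProcessing();
            UpdateTexture();
            OnNextVideoFrame();
        }
    }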

    // Copies regions of SrcData into the texture on the render thread.
    // ENQUEUE_UNIQUE_RENDER_COMMAND_TWOPARAMETER is the old render-command macro; later engine versions use
    // ENQUEUE_RENDER_COMMAND with a lambda instead, and UTexture2D also offers an UpdateTextureRegions member.
    void RSTDataHandle::UpdateTextureRegions(UTexture2D* Texture, int32 MipIndex, uint32 NumRegions, FUpdateTextureRegion2D* Regions, uint32 SrcPitch, uint32 SrcBpp, uint8* SrcData, bool bFreeData)
    {
        if (Texture->Resource)
        {
            struct FUpdateTextureRegionsData
            {
                FTexture2DResource* Texture2DResource;
                int32 MipIndex;
                uint32 NumRegions;
                FUpdateTextureRegion2D* Regions;
                uint32 SrcPitch;
                uint32 SrcBpp;
                uint8* SrcData;
            };

            FUpdateTextureRegionsData* RegionData = new FUpdateTextureRegionsData;
            RegionData->Texture2DResource = (FTexture2DResource*)Texture->Resource;
            RegionData->MipIndex = MipIndex;
            RegionData->NumRegions = NumRegions;
            RegionData->Regions = Regions;
            RegionData->SrcPitch = SrcPitch;
            RegionData->SrcBpp = SrcBpp;
            RegionData->SrcData = SrcData;

            // The command runs later on the render thread. With bFreeData == false it reads SrcData in place,
            // so the caller must not overwrite that buffer until the command has executed.
            ENQUEUE_UNIQUE_RENDER_COMMAND_TWOPARAMETER(
                UpdateTextureRegionsData,
                FUpdateTextureRegionsData*, RegionData, RegionData,
                bool, bFreeData, bFreeData,
                {
                    for (uint32 RegionIndex = 0; RegionIndex < RegionData->NumRegions; ++RegionIndex)
                    {
                        int32 CurrentFirstMip = RegionData->Texture2DResource->GetCurrentFirstMip();
                        if (RegionData->MipIndex >= CurrentFirstMip)
                        {
                            RHIUpdateTexture2D(
                                RegionData->Texture2DResource->GetTexture2DRHI(),
                                RegionData->MipIndex - CurrentFirstMip,
                                RegionData->Regions[RegionIndex],
                                RegionData->SrcPitch,
                                RegionData->SrcData
                                + RegionData->Regions[RegionIndex].SrcY * RegionData->SrcPitch
                                + RegionData->Regions[RegionIndex].SrcX * RegionData->SrcBpp
                            );
                        }
                    }
                    if (bFreeData)
                    {
                        FMemory::Free(RegionData->Regions);
                        FMemory::Free(RegionData->SrcData);
                    }
                    delete RegionData;
                });
        }
    }
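As written, the receive thread rewrites `Data` for the next frame while a queued render command may still be reading the same buffer, because bFreeData is false and the render thread gets a raw pointer. One common fix is to hand each finished frame over under a lock, so the consumer owns its copy outright. Below is an engine-free sketch using a hypothetical `FrameMailbox` class, with pixels stored as `std::uint32_t`. The same principle applies to the render command: give it a buffer the worker no longer writes to.

```cpp
#include <cassert>
#include <cstdint>
#include <mutex>
#include <utility>
#include <vector>

// Hypothetical single-slot mailbox: the producer publishes whole frames, the consumer takes the newest one.
class FrameMailbox {
public:
    // Producer side (the receive thread): publish a finished frame, replacing any unread one.
    void publish(std::vector<std::uint32_t> frame) {
        std::lock_guard<std::mutex> lock(mutex_);
        latest_ = std::move(frame);
        hasNew_ = true;
    }

    // Consumer side: take the newest frame if there is one. The buffer is moved out,
    // so the producer can never write into memory the consumer is reading.
    bool take(std::vector<std::uint32_t>& out) {
        std::lock_guard<std::mutex> lock(mutex_);
        if (!hasNew_) {
            return false;
        }
        out = std::move(latest_);
        latest_.clear();
        hasNew_ = false;
        return true;
    }

private:
    std::mutex mutex_;
    std::vector<std::uint32_t> latest_;
    bool hasNew_ = false;
};
```

Unread frames are dropped in favour of the newest one. For live surveillance video, showing the latest picture usually matters more than showing every picture.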

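6. Hooking up surveillance cameras isn't hard either, but there are a lot of details. I won't post camera screenshots to go through them here.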

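7. One more thing: if a camera is configured with a high resolution and you pull its main stream at a high bitrate, connecting several cameras drives the CPU straight to 100% and the machine becomes very sluggish. I first tested one camera, then six, and the computer lagged so badly I almost had to shut it down. I assumed my code was at fault and agonized over it for a long time before realizing it was the camera settings. If you don't need HD video, six simultaneous feeds look no worse than in VLC. Another pitfall: a single camera may only allow five or six simultaneous streams.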
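Here is a rough sense of why high-resolution main streams saturate the CPU. Even after decoding, the per-pixel copy in UpdateTexture() touches every byte of every frame. The helper below does the back-of-the-envelope arithmetic. `rawBgrBytesPerSecond` is a hypothetical name, and the resolutions, 25 fps and six streams are illustrative values, not measurements from this project.

```cpp
#include <cassert>
#include <cstdint>

// Bytes of decoded 8-bit BGR pixels produced per second across all streams
// (this ignores decoding cost itself, which is usually the larger share).
std::uint64_t rawBgrBytesPerSecond(std::uint64_t width, std::uint64_t height,
                                   std::uint64_t fps, std::uint64_t streams) {
    const std::uint64_t bytesPerPixel = 3;  // B, G, R
    return width * height * bytesPerPixel * fps * streams;
}
```

For six 1920×1080 streams at 25 fps this gives 933,120,000 bytes/s, roughly 0.93 GB/s. At 640×360, an example of a lower sub-stream resolution, it drops to 103,680,000 bytes/s, nine times less.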

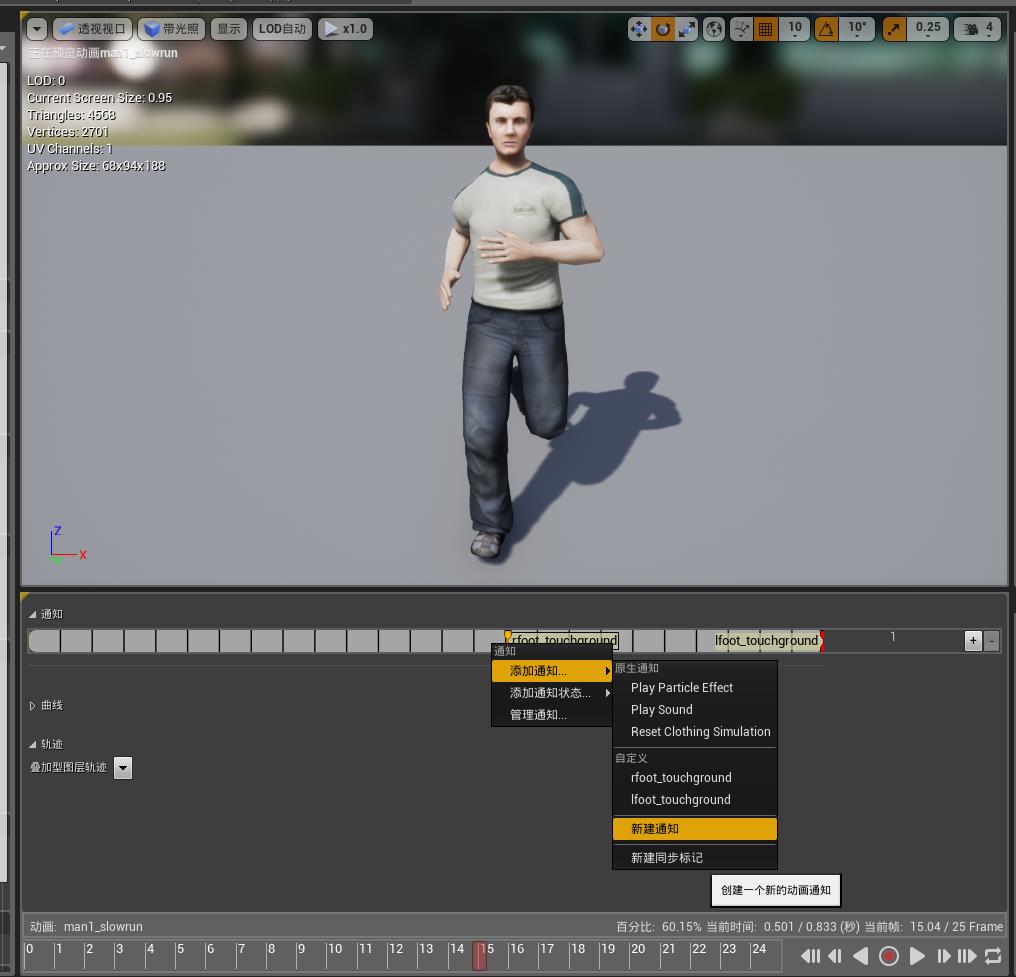

A UE4 example: syncing sound effects with animation

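In games, many sound effects must play at exactly the right moment in an animation. Footsteps while walking or running, for example, should play the instant the foot touches the ground.

AnimNotify handles this kind of problem very conveniently.

AnimNotify, as the name suggests, is an animation notification: it "sends a message" when a given animation sequence reaches a given point in its playback.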
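Conceptually, a notify fires on the frame where playback crosses its trigger time. The sketch below is a simplified model of that check, not the engine's implementation. `notifyFires` is a hypothetical name, and times are in seconds. A looping animation that wraps past its end is handled by checking both pieces of the interval.

```cpp
#include <cassert>

// True if a notify placed at triggerTime should fire when playback advances from prevTime
// to currTime in a looping animation of the given length. The interval is half-open,
// (prevTime, currTime], so a notify sitting exactly on a frame boundary fires once, not twice.
bool notifyFires(double prevTime, double currTime, double triggerTime, double length) {
    assert(length > 0.0);
    if (currTime >= prevTime) {
        return triggerTime > prevTime && triggerTime <= currTime;
    }
    // Playback wrapped past the end of the loop: check (prevTime, length] and [0, currTime].
    return (triggerTime > prevTime && triggerTime <= length) ||
           (triggerTime >= 0.0 && triggerTime <= currTime);
}
```

For a run cycle, the left-foot and right-foot notifies are simply two trigger times in the same sequence. Each one fires once per loop, on the frame whose time step crosses it.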

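Our project currently has eight-directional run animations: forward, backward, left, right, forward-left, forward-right, backward-left and backward-right.

Taking the forward run as an example, I right-clicked to add two custom notifies, lfoot_touchground and rfoot_touchground, at the frames where the left foot and the right foot land, respectively.

You could also add a PlaySound notify directly, which lets you set the sound right there, but for extensibility we use custom notifies.

So how do we receive these notifies?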

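    if (mesh)
    {
        UAnimInstance* anim = mesh->GetAnimInstance();
        if (anim)
        {
            // In the engine version used here NotifyQueue.AnimNotifies holds const FAnimNotifyEvent*;
            // later UE4 versions store FAnimNotifyEventReference instead (GetNotify() returns the event)
            TArray<const struct FAnimNotifyEvent*> AnimNotifies = anim->NotifyQueue.AnimNotifies;
            // The original used range(i, 0, AnimNotifies.Num()), a project macro not shown in the article
            for (int32 i = 0; i < AnimNotifies.Num(); i++)
            {
                FString NotifyName = AnimNotifies[i]->NotifyName.ToString();
                //GEngine->AddOnScreenDebugMessage(-1, 5.f, FColor::Red, FString::FromInt(i) + " " + NotifyName);
                if (NotifyName == "lfoot_touchground")
                {
                    audio_lfoot->SetSound(sb_walks[0]);
                    audio_lfoot->Play();
                }
                else if (NotifyName == "rfoot_touchground")
                {
                    audio_rfoot->SetSound(sb_walks[1]);
                    audio_rfoot->Play();
                }
            }
        }
    }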

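That's really all there is to it. anim->NotifyQueue is the animation notify queue, and AnimNotifies gives you all notify events for the current frame. Uncommenting the debug line in the code above prints the names of every notify fired in the current frame.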
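The if/else chain over notify names grows with every new notify. Below is a sketch of the same dispatch as a name-to-handler table, written in plain C++ with `std::string` standing in for FName/FString. `NotifyDispatcher` and the handler bodies are hypothetical. In the component, each handler would do the `SetSound(...)` / `Play()` pair for its foot.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <unordered_map>
#include <utility>
#include <vector>

// Maps a notify name to the action it should trigger (e.g. playing a footstep sound).
class NotifyDispatcher {
public:
    void bind(const std::string& notifyName, std::function<void()> handler) {
        handlers_[notifyName] = std::move(handler);
    }

    // Run the handler for every notify fired this frame. Unknown names are ignored,
    // like the empty else branch in the original. Returns how many notifies were handled.
    int dispatch(const std::vector<std::string>& firedThisFrame) const {
        int handled = 0;
        for (const std::string& name : firedThisFrame) {
            auto it = handlers_.find(name);
            if (it != handlers_.end()) {
                it->second();
                ++handled;
            }
        }
        return handled;
    }

private:
    std::unordered_map<std::string, std::function<void()>> handlers_;
};
```

Adding a new notify (say, a landing sound) then means one more `bind` call instead of another branch in TickComponent.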

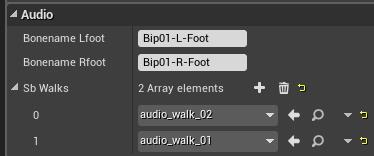

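To actually play the sounds, we also need an AudioComponent attached to each foot. If the current notify queue contains the matching notify, we first call SetSound to choose the sound asset and then call Play.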

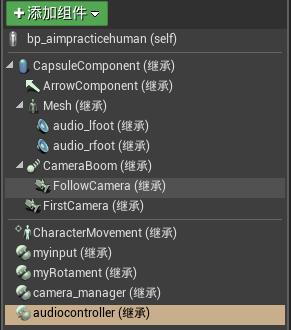

I "packaged" all the AudioComponents that need to be attached to the Character's Mesh into a class called AudioController. The Character's constructor creates the AudioController and attaches each of its audio components to the corresponding socket.

AudioController.h:

    // Fill out your copyright notice in the Description page of Project Settings.
    #pragma once

    #include "Components/ActorComponent.h"
    #include "Components/AudioComponent.h"
    #include "Components/SkeletalMeshComponent.h"
    #include "AudioController.generated.h"

    UCLASS(ClassGroup = (Custom), meta = (BlueprintSpawnableComponent))
    class MYSOUNDANDEFFECTS_API UAudioController : public UActorComponent
    {
        GENERATED_BODY()

    public:
        // Sets default values for this component's properties
        UAudioController();
        //UAudioController(USkeletalMeshComponent* mesh_);

    protected:
        // Called when the game starts
        virtual void BeginPlay() override;

    public:
        // Called every frame
        virtual void TickComponent(float DeltaTime, ELevelTick TickType, FActorComponentTickFunction* ThisTickFunction) override;

        UPROPERTY(Category = Audio, VisibleDefaultsOnly, BlueprintReadOnly, meta = (AllowPrivateAccess = "true"))
        UAudioComponent* audio_lfoot = NULL;

        UPROPERTY(Category = Audio, EditDefaultsOnly, BlueprintReadOnly, meta = (AllowPrivateAccess = "true"))
        FString bonename_lfoot = "Bip01-L-Foot";

        UPROPERTY(Category = Audio, VisibleDefaultsOnly, BlueprintReadOnly, meta = (AllowPrivateAccess = "true"))
        UAudioComponent* audio_rfoot = NULL;

        UPROPERTY(Category = Audio, EditDefaultsOnly, BlueprintReadOnly, meta = (AllowPrivateAccess = "true"))
        FString bonename_rfoot = "Bip01-R-Foot";

        USkeletalMeshComponent* mesh = NULL;

        void my_init(USkeletalMeshComponent* mesh_);

        UPROPERTY(Category = Audio, EditDefaultsOnly, BlueprintReadOnly, meta = (AllowPrivateAccess = "true"))
        TArray<class USoundBase*> sb_walks;
    };

AudioController.cpp:

    // Fill out your copyright notice in the Description page of Project Settings.
    #include "MySoundAndEffects.h"
    #include "Animation/AnimInstance.h"
    #include "AudioController.h"

    // Sets default values for this component's properties
    UAudioController::UAudioController()
    {
        // Set this component to be initialized when the game starts, and to be ticked every frame. You can turn these features
        // off to improve performance if you don't need them.
        PrimaryComponentTick.bCanEverTick = true;

        // ...
        audio_lfoot = CreateDefaultSubobject<UAudioComponent>("audio_lfoot");
        audio_rfoot = CreateDefaultSubobject<UAudioComponent>("audio_rfoot");
    }

    //UAudioController::UAudioController(USkeletalMeshComponent* mesh_)
    //{
    //    // Set this component to be initialized when the game starts, and to be ticked every frame. You can turn these features
    //    // off to improve performance if you don't need them.
    //    PrimaryComponentTick.bCanEverTick = true;
    //    // ...
    //}

    void UAudioController::my_init(USkeletalMeshComponent* mesh_)
    {
        this->mesh = mesh_;
        //UAudioController();
        audio_lfoot->SetupAttachment(mesh_, FName(*bonename_lfoot));
        audio_rfoot->SetupAttachment(mesh_, FName(*bonename_rfoot));
    }

    // Called when the game starts
    void UAudioController::BeginPlay()
    {
        Super::BeginPlay();
        // ...
    }

    // Called every frame
    void UAudioController::TickComponent(float DeltaTime, ELevelTick TickType, FActorComponentTickFunction* ThisTickFunction)
    {
        Super::TickComponent(DeltaTime, TickType, ThisTickFunction);
        // ...
        if (mesh)
        {
            UAnimInstance* anim = mesh->GetAnimInstance();
            if (anim)
            {
                TArray<const struct FAnimNotifyEvent*> AnimNotifies = anim->NotifyQueue.AnimNotifies;
                // The original used range(i, 0, AnimNotifies.Num()), a project macro not shown in the article
                for (int32 i = 0; i < AnimNotifies.Num(); i++)
                {
                    FString NotifyName = AnimNotifies[i]->NotifyName.ToString();
                    //GEngine->AddOnScreenDebugMessage(-1, 5.f, FColor::Red, FString::FromInt(i) + " " + NotifyName);
                    if (NotifyName == "lfoot_touchground")
                    {
                        audio_lfoot->SetSound(sb_walks[0]);
                        audio_lfoot->Play();
                    }
                    else if (NotifyName == "rfoot_touchground")
                    {
                        audio_rfoot->SetSound(sb_walks[1]);
                        audio_rfoot->Play();
                    }
                }
            }
        }
        else
        {
            // The original threw std::exception("mesh not exist!!"). That constructor is an MSVC extension,
            // and UE modules build with C++ exceptions disabled by default, so log instead
            UE_LOG(LogTemp, Warning, TEXT("mesh not exist!!"));
        }
    }

Here is how the character class declares and creates audiocontroller:

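    // Requires #include "AudioController.h" in the character's .cpp
    AAimPraticeHuman::AAimPraticeHuman()
    {
        // The original wrapped these two lines in #ifdef AudioController_h. The header shown above uses
        // #pragma once and defines no such macro, so that block would compile to nothing; it is dropped here.
        audiocontroller = CreateDefaultSubobject<UAudioController>("audiocontroller");
        audiocontroller->my_init(GetMesh());
    }

    // In the character's header:
    UPROPERTY(Category = Audio, VisibleDefaultsOnly, BlueprintReadOnly, meta = (AllowPrivateAccess = "true"))
    class UAudioController* audiocontroller = NULL;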

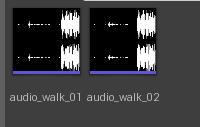

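The last step is importing the sound effects. It looks simple, but there are many details.

I recommend dragging .wav files in directly. If the import fails, the cause is most likely one of the WAV file's parameters.

(I used Audition to cut two footfall sounds out of a long running sound effect. Importing them straight into UE4 gave an error, but converting them with Format Factory (格式工厂) first made it work.)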
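When a .wav refuses to import, it helps to compare the header parameters of the file that fails with the re-converted file that works. Below is a small reader for the RIFF/WAVE `fmt ` chunk that reports format tag, channel count, sample rate and bits per sample. `WavFormat` and `readWavFormat` are hypothetical names, and the sketch makes no claim about which combinations UE4 accepts.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <optional>
#include <vector>

struct WavFormat {
    std::uint16_t audioFormat;  // 1 = integer PCM, 3 = IEEE float, 0xFFFE = WAVE_FORMAT_EXTENSIBLE
    std::uint16_t channels;
    std::uint32_t sampleRate;
    std::uint16_t bitsPerSample;
};

// Read a little-endian unsigned integer of 2 or 4 bytes.
static std::uint32_t readLE(const std::uint8_t* p, int bytes) {
    std::uint32_t v = 0;
    for (int i = bytes - 1; i >= 0; --i) v = (v << 8) | p[i];
    return v;
}

// Walk the RIFF chunks and decode the first "fmt " chunk. Returns nullopt if the buffer
// is not RIFF/WAVE or the fmt chunk is missing or truncated.
std::optional<WavFormat> readWavFormat(const std::vector<std::uint8_t>& bytes) {
    if (bytes.size() < 12 || std::memcmp(bytes.data(), "RIFF", 4) != 0 ||
        std::memcmp(bytes.data() + 8, "WAVE", 4) != 0) {
        return std::nullopt;
    }
    std::size_t pos = 12;
    while (pos + 8 <= bytes.size()) {
        const std::uint8_t* chunk = bytes.data() + pos;
        const std::uint32_t size = readLE(chunk + 4, 4);
        if (std::memcmp(chunk, "fmt ", 4) == 0) {
            if (size < 16 || pos + 8 + 16 > bytes.size()) return std::nullopt;
            const std::uint8_t* f = chunk + 8;
            return WavFormat{static_cast<std::uint16_t>(readLE(f, 2)),
                             static_cast<std::uint16_t>(readLE(f + 2, 2)),
                             readLE(f + 4, 4),
                             static_cast<std::uint16_t>(readLE(f + 14, 2))};
        }
        pos += 8 + static_cast<std::size_t>(size) + (size & 1);  // chunks are padded to even length
    }
    return std::nullopt;
}
```

Load each file into a byte vector and print the four fields side by side. The parameter that differs between the working and failing file is the first thing to change on export.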

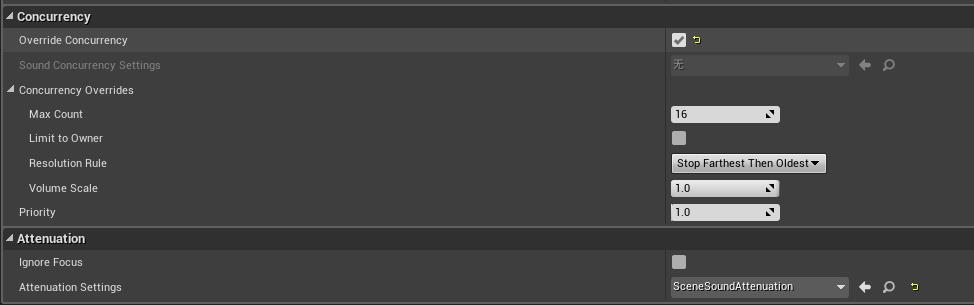

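Of course, the sounds still can't be used as in-game footsteps yet. First you need to set up concurrency and 3D (attenuation) settings.

For Concurrency, just tick Override. Using the "Stop Farthest then Oldest" resolution rule works fine.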
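To make "Stop Farthest then Oldest" concrete, here is a simplified model of the choice. When the concurrency limit is reached, the voice farthest from the listener is stopped, and among equally distant voices the one that started earliest. `Voice` and `pickVoiceToStop` are hypothetical, and this is an illustration of the rule, not the engine's code.

```cpp
#include <cassert>
#include <cstddef>
#include <optional>
#include <vector>

struct Voice {
    double distanceToListener;  // world units
    double startTime;           // seconds; smaller means older
};

// Index of the voice to stop: the farthest one, and the oldest among equally far voices.
std::optional<std::size_t> pickVoiceToStop(const std::vector<Voice>& active) {
    if (active.empty()) return std::nullopt;
    std::size_t best = 0;
    for (std::size_t i = 1; i < active.size(); ++i) {
        const Voice& v = active[i];
        const Voice& b = active[best];
        if (v.distanceToListener > b.distanceToListener ||
            (v.distanceToListener == b.distanceToListener && v.startTime < b.startTime)) {
            best = i;
        }
    }
    return best;
}
```

For footsteps this means the most distant instances are cut first when too many overlap, and those are usually the least audible.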

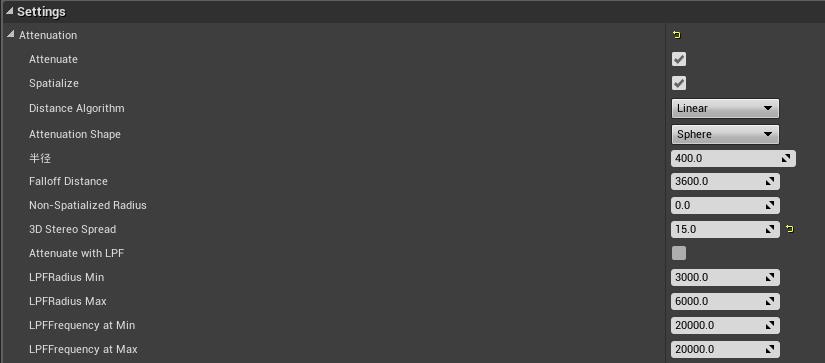

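For Attenuation, I created a new Sound Attenuation asset called SceneSoundAttenuation, with the following settings:

Most of these options are self-explanatory. 3D Stereo Spread is the world-space distance between the left and right channels of a stereo sound when it is spatialized, which you can think of as the spacing between the two ears.

Finally, don't forget to set the values of the sb_walks array in the character blueprint, as well as the socket names for the left and right foot.

And that's it!

As for the result... well, you can't hear it anyway.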

------------------------------------------------

Next time: the wonderful world of IK (inverse kinematics)
