Android Multimedia Framework Summary (26): Decoding Live Streams with FFmpeg

Posted by 码农突围


Preface: This article was compiled by the editors of 小常识网 (cha138.com). It covers Android Multimedia Framework Summary (26): Decoding Live Streams with FFmpeg, and we hope you find it useful.

When reposting, please include both the source link at the top and the QR code at the bottom. This article is by 逆流的鱼yuiop: http://blog.csdn.net/hejjunlin/article/details/59225373

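Back in September last year, I wrote a post titled "A Step-by-Step Illustrated Guide to Compiling and Porting the FFmpeg Library with Android Studio". Today I will use the FFmpeg 3.1.3 build I compiled back then to decode a live stream on Android. Here is the agenda: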

- Environment

- Java code

- NDK code

- Decoding and running

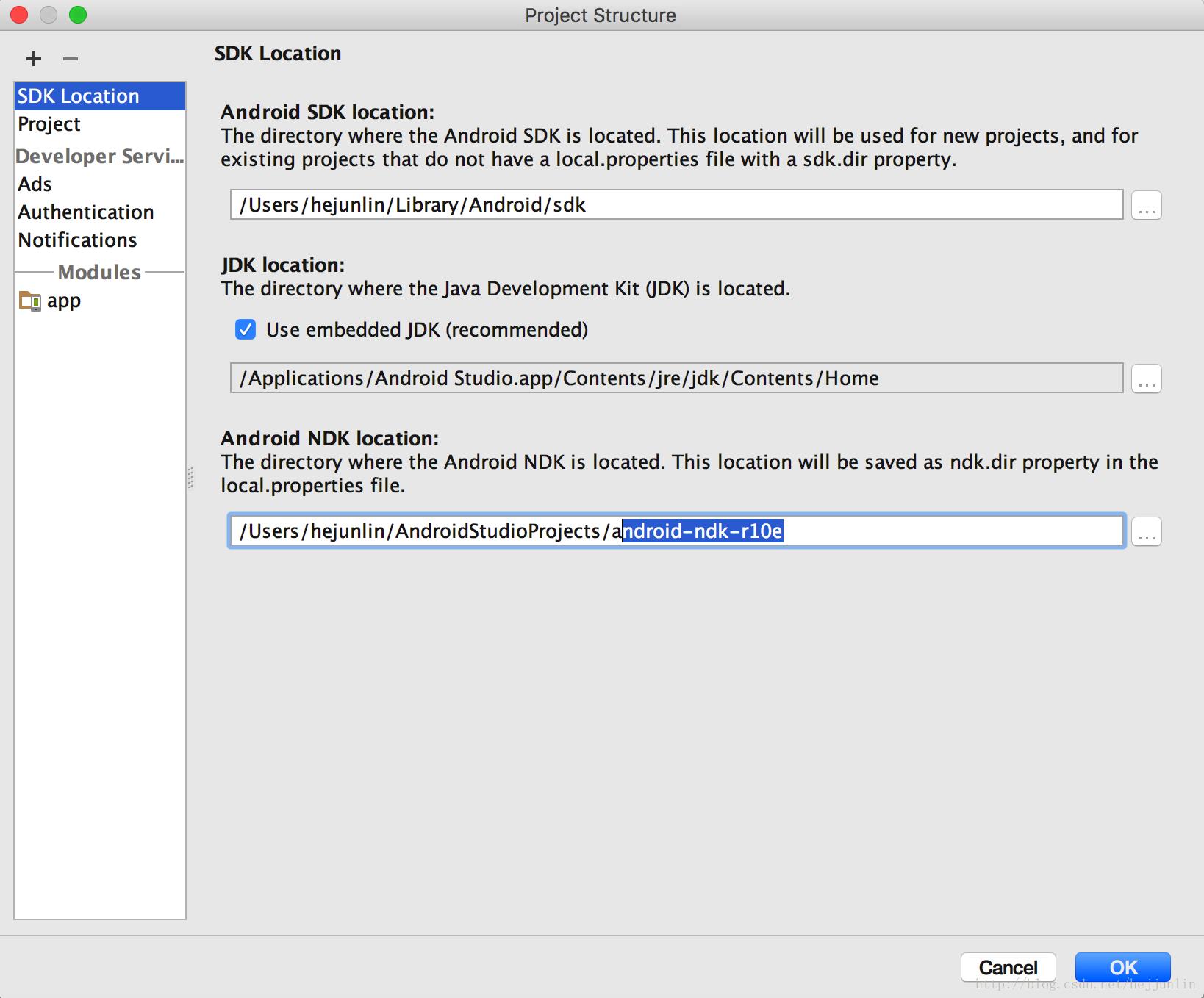

Environment:

- Mac OS X

- Android Studio 2.2

- android-ndk-r10e

- FFmpeg 3.1.3

Setting up Android Studio with the NDK is simple, so I won't go over it here.

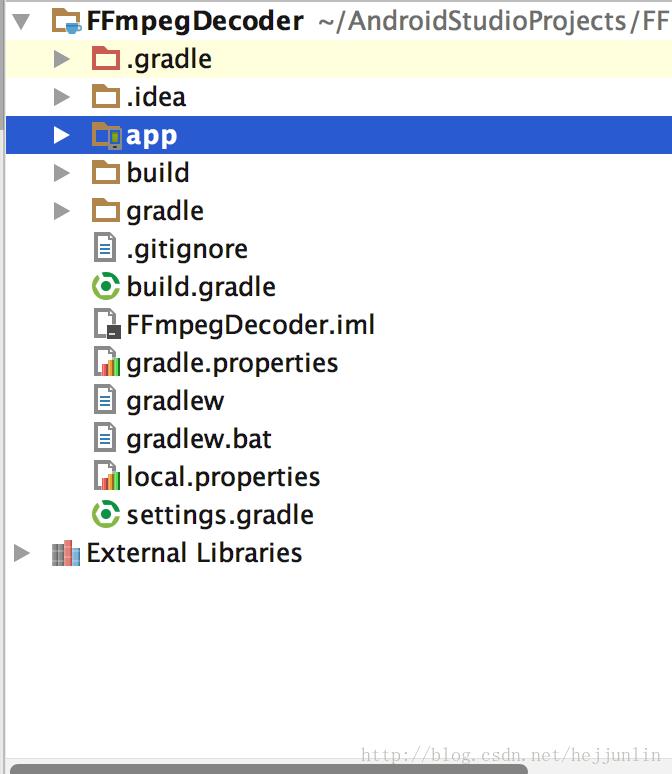

Create a project:

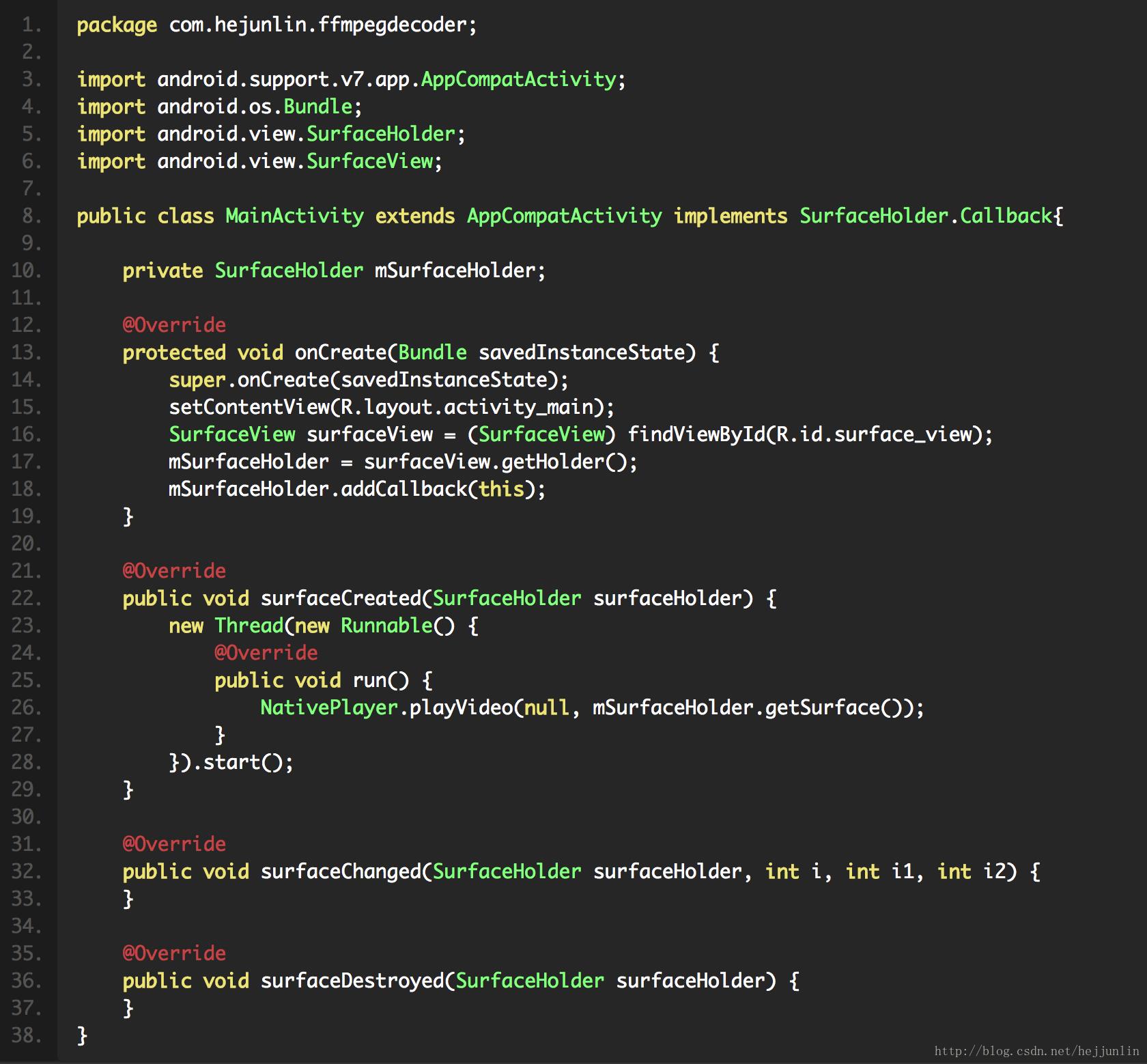

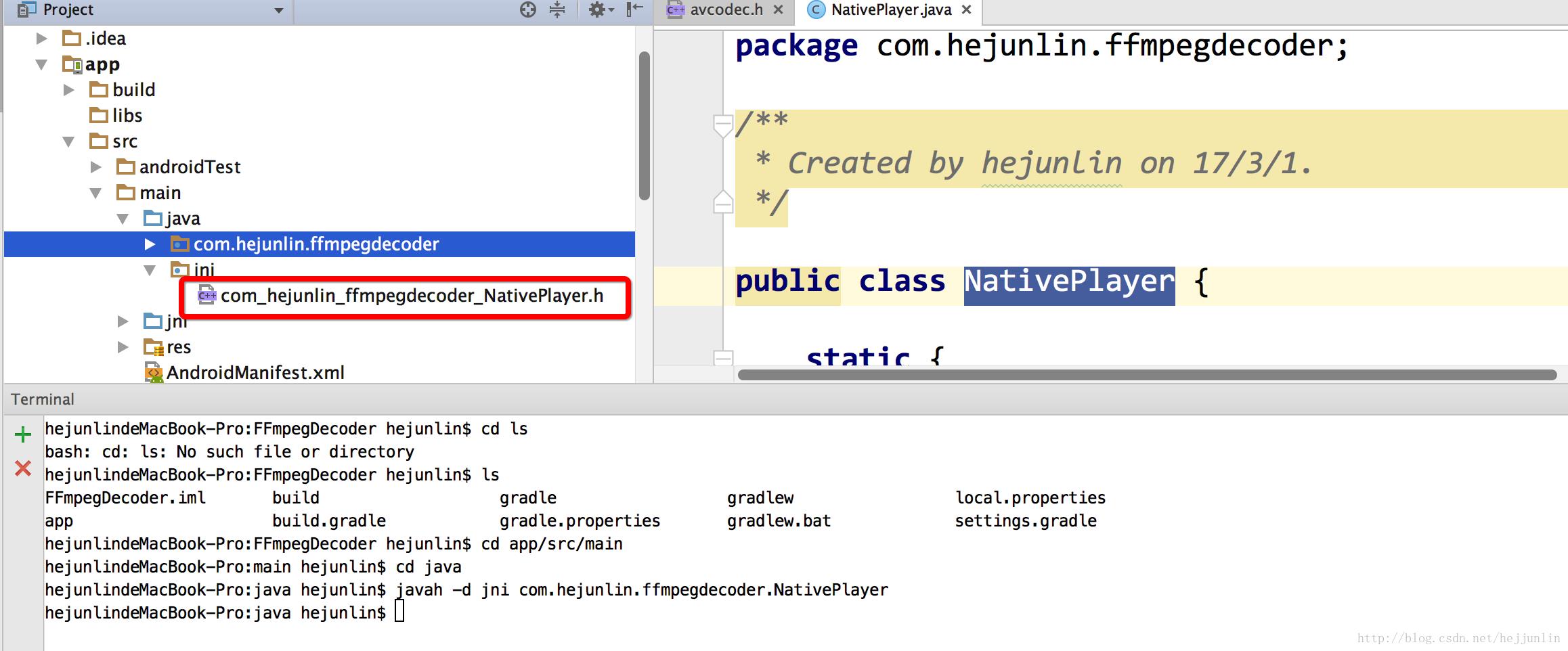

Write the Java code:

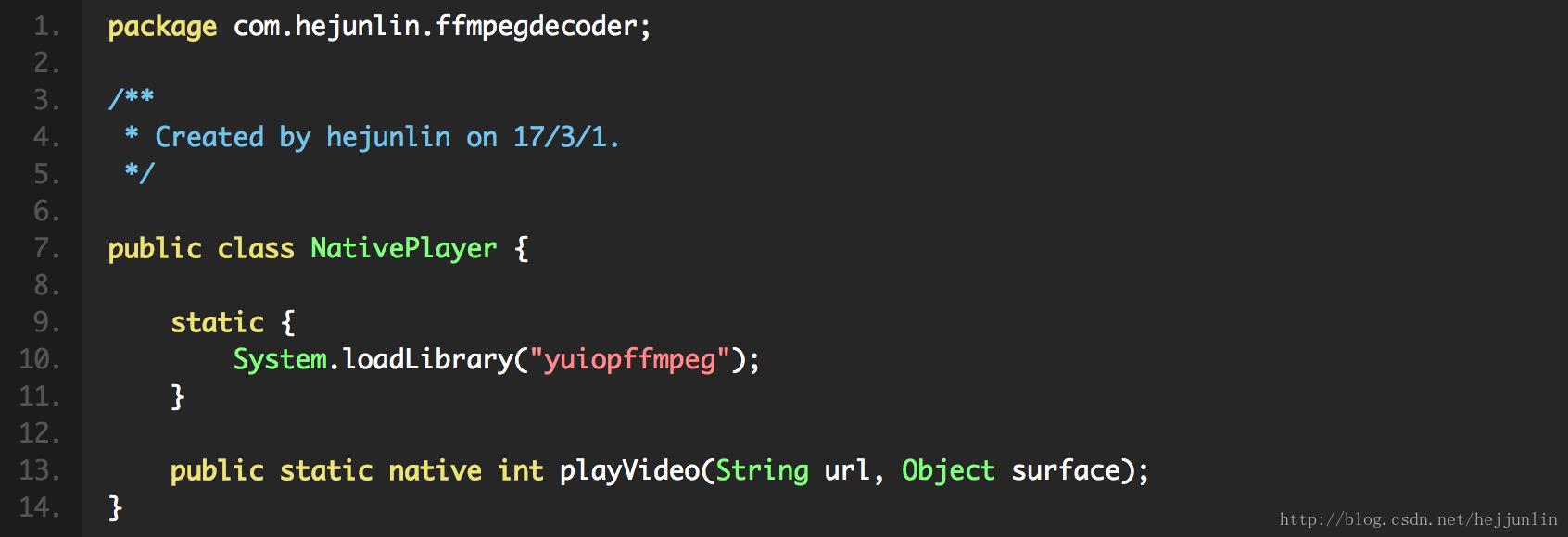

NativePlayer:

Generate the JNI header file: `javah -d jni com.hejunlin.ffmpegdecoder.NativePlayer`
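javah derives the C function name from the Java class and method names using the JNI "short name" mangling rule, which is why the generated header declares `Java_com_hejunlin_ffmpegdecoder_NativePlayer_playVideo`. The sketch below is a hypothetical helper (`jni_short_name` is not part of any JDK tool) that applies that rule to plain ASCII names: package separators become `_`, and a literal underscore is escaped as `_1`. It leaves out the escapes for other characters and the long names used for overloaded methods.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Append string s to out (capacity cap, current length *len).
 * Returns 0 on success, -1 if it would not fit (including the NUL). */
static int append(char *out, size_t cap, size_t *len, const char *s) {
    size_t n = strlen(s);
    if (*len + n + 1 > cap) return -1;
    memcpy(out + *len, s, n + 1);
    *len += n;
    return 0;
}

/* Append name with JNI mangling for ASCII identifiers:
 * '.' or '/' -> "_", '_' -> "_1", everything else copied as-is. */
static int append_mangled(char *out, size_t cap, size_t *len, const char *name) {
    for (const char *p = name; *p != '\0'; p++) {
        char one[2] = { *p, '\0' };
        const char *piece = one;
        if (*p == '.' || *p == '/') piece = "_";
        else if (*p == '_') piece = "_1";
        if (append(out, cap, len, piece) != 0) return -1;
    }
    return 0;
}

/* Hypothetical helper: build "Java_<mangled class>_<mangled method>".
 * Returns 0 on success, -1 if out is too small. */
int jni_short_name(const char *class_name, const char *method,
                   char *out, size_t cap) {
    size_t len = 0;
    assert(class_name != NULL && method != NULL && out != NULL && cap > 0);
    out[0] = '\0';
    if (append(out, cap, &len, "Java_") != 0) return -1;
    if (append_mangled(out, cap, &len, class_name) != 0) return -1;
    if (append(out, cap, &len, "_") != 0) return -1;
    return append_mangled(out, cap, &len, method);
}
```

For example, `jni_short_name("com.hejunlin.ffmpegdecoder.NativePlayer", "playVideo", buf, sizeof buf)` fills `buf` with the same name that appears in the generated header.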

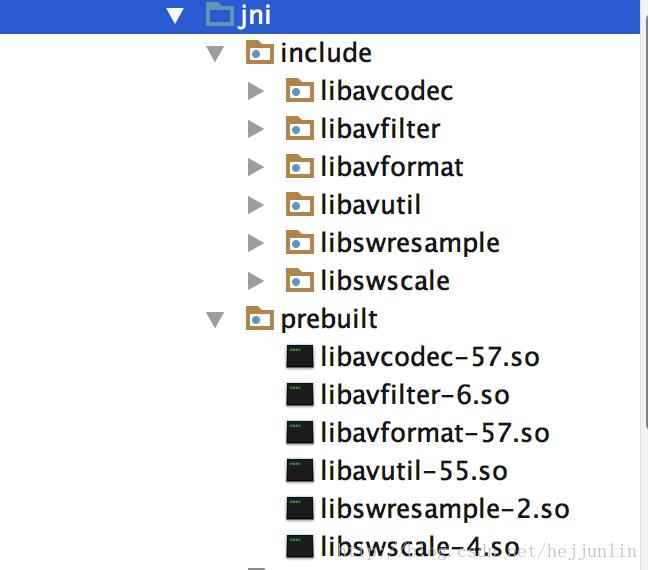

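From the android output directory of the FFmpeg 3.1.3 build, copy the include directory (5 subfolders in total) into include, and the corresponding 5 .so files into prebuilt. You can create the include and prebuilt folders yourself.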

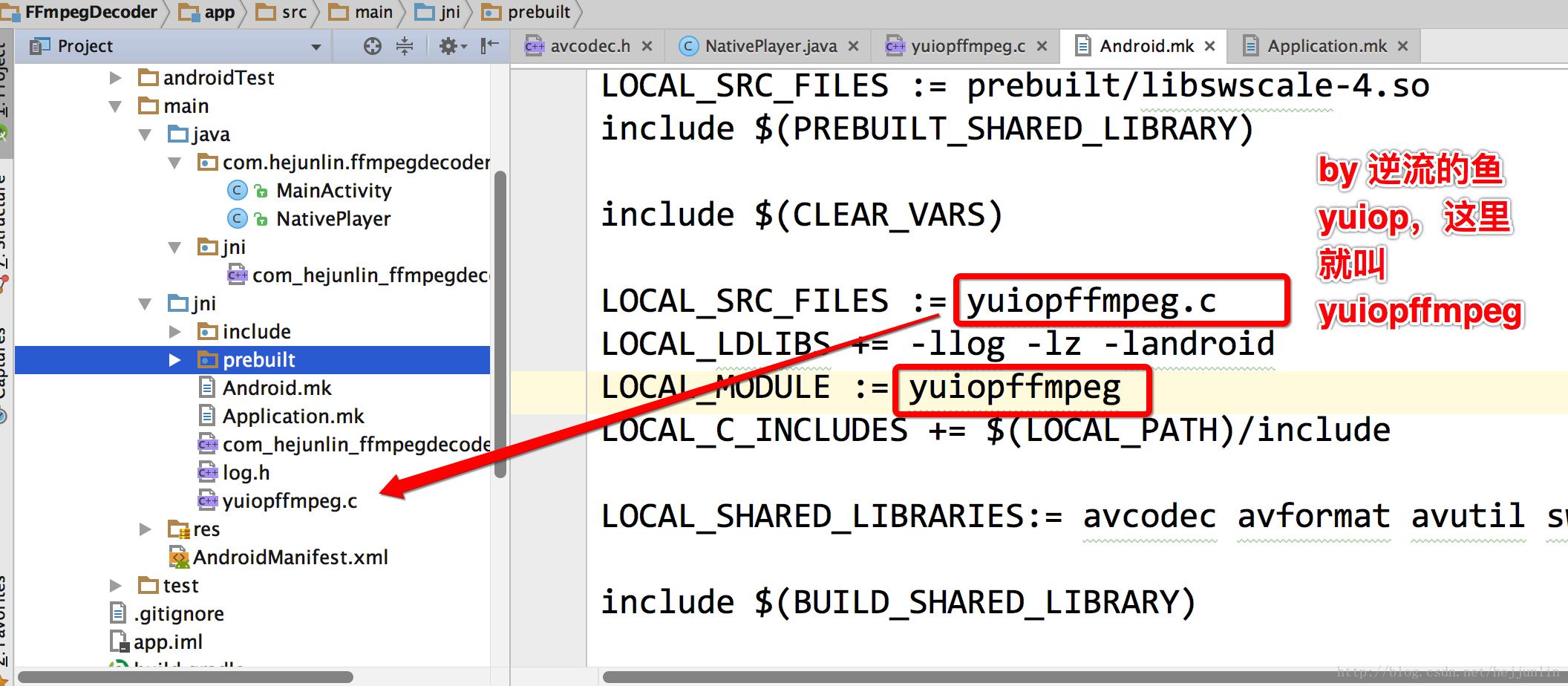

Then write the makefile, Android.mk:

```makefile
LOCAL_PATH := $(call my-dir)

include $(CLEAR_VARS)
LOCAL_MODULE := avcodec
LOCAL_SRC_FILES := prebuilt/libavcodec-57.so
include $(PREBUILT_SHARED_LIBRARY)

include $(CLEAR_VARS)
LOCAL_MODULE := avformat
LOCAL_SRC_FILES := prebuilt/libavformat-57.so
include $(PREBUILT_SHARED_LIBRARY)

include $(CLEAR_VARS)
LOCAL_MODULE := avutil
LOCAL_SRC_FILES := prebuilt/libavutil-55.so
include $(PREBUILT_SHARED_LIBRARY)

include $(CLEAR_VARS)
LOCAL_MODULE := swresample
LOCAL_SRC_FILES := prebuilt/libswresample-2.so
include $(PREBUILT_SHARED_LIBRARY)

include $(CLEAR_VARS)
LOCAL_MODULE := swscale
LOCAL_SRC_FILES := prebuilt/libswscale-4.so
include $(PREBUILT_SHARED_LIBRARY)

include $(CLEAR_VARS)
LOCAL_SRC_FILES := yuiopffmpeg.c
LOCAL_LDLIBS += -llog -lz -landroid
LOCAL_MODULE := yuiopffmpeg
LOCAL_C_INCLUDES += $(LOCAL_PATH)/include
LOCAL_SHARED_LIBRARIES:= avcodec avformat avutil swresample swscale
include $(BUILD_SHARED_LIBRARY)
```

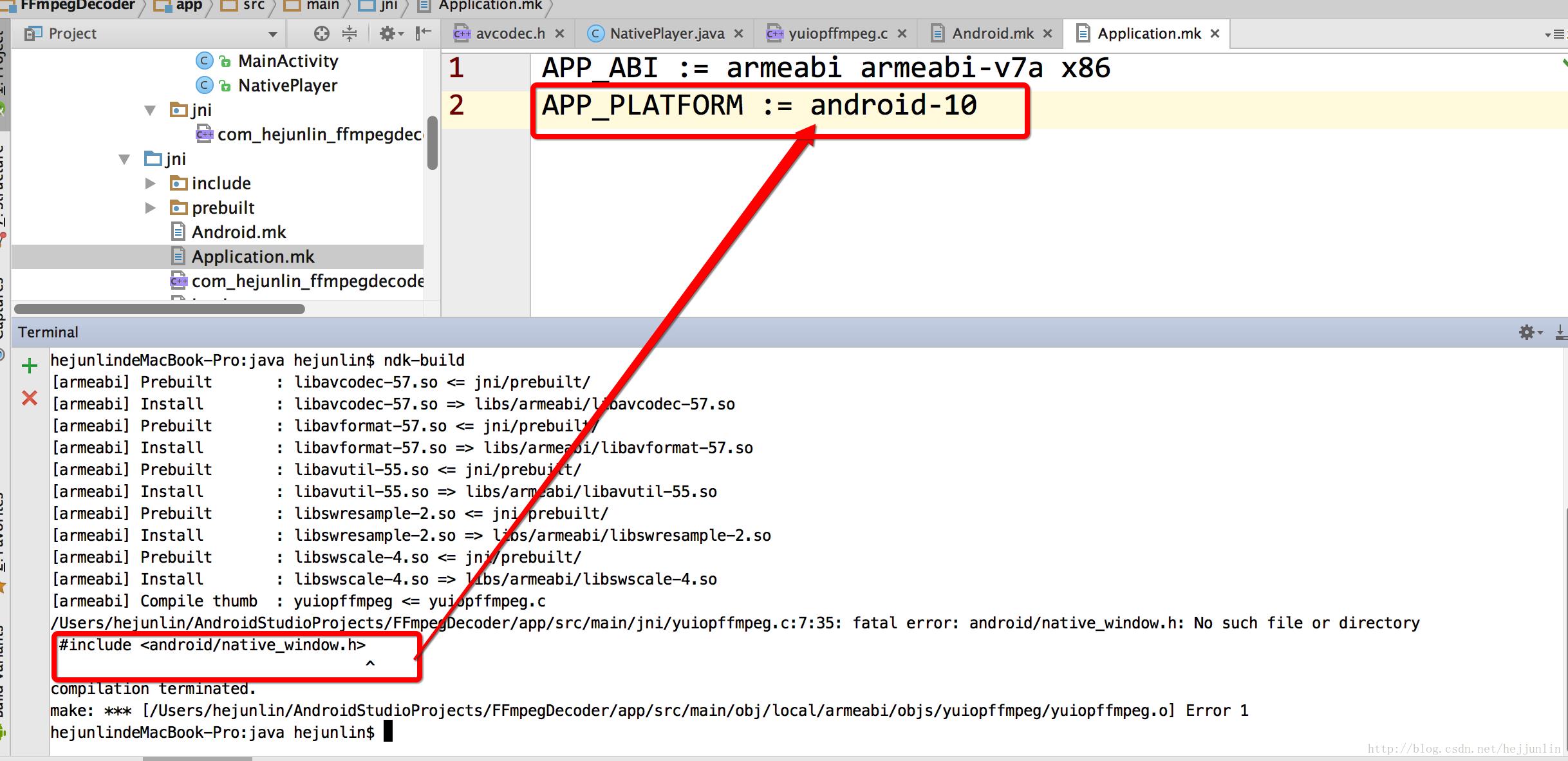

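Also write Application.mk, which mainly selects the ABIs (platforms) the libraries are built for. Its contents are below. Because the code includes native_window.h, you must add the line APP_PLATFORM := android-10:

```makefile
APP_ABI := armeabi armeabi-v7a x86
APP_PLATFORM := android-10
```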
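APP_ABI determines which per-ABI folders ndk-build produces, and the app's jniLibs directory must use those same folder names. As a small illustration, the sketch below maps common GCC/Clang predefined architecture macros to the matching Android ABI name and checks a name against the APP_ABI list above. The helpers `abi_dir_name` and `abi_is_built` are hypothetical and not part of the NDK, and the macro checks cover only the usual NDK targets.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* ABIs listed in the APP_ABI line of Application.mk above. */
static const char *const kAppAbis[] = { "armeabi", "armeabi-v7a", "x86" };

/* Map the compiler's predefined architecture macros to the Android ABI
 * name, which is also the folder name used under libs/ and jniLibs/. */
const char *abi_dir_name(void) {
#if defined(__aarch64__)
    return "arm64-v8a";
#elif defined(__arm__) && defined(__ARM_ARCH_7A__)
    return "armeabi-v7a";
#elif defined(__arm__)
    return "armeabi";
#elif defined(__x86_64__)
    return "x86_64";
#elif defined(__i386__)
    return "x86";
#else
    return "unknown";
#endif
}

/* Returns 1 if abi is one of the ABIs Application.mk builds, else 0. */
int abi_is_built(const char *abi) {
    assert(abi != NULL);
    for (size_t i = 0; i < sizeof kAppAbis / sizeof kAppAbis[0]; i++) {
        if (strcmp(abi, kAppAbis[i]) == 0) return 1;
    }
    return 0;
}
```

Compiled for a desktop x86_64 machine, `abi_dir_name()` returns "x86_64", and `abi_is_built` reports that this ABI is not in the list above.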

Next comes the main part: writing the JNI code in yuiopffmpeg.c. Copy the method name from the header generated earlier and paste it here:

//

// Created by 何俊林 on 17/3/1.

//

#include "libavcodec/avcodec.h"

#include "libavformat/avformat.h"

#include "libswscale/swscale.h"

#include <android/native_window.h>

#include <android/native_window_jni.h>

#include "com_hejunlin_ffmpegdecoder_NativePlayer.h"

#include "log.h"

JNIEXPORT jint JNICALL Java_com_hejunlin_ffmpegdecoder_NativePlayer_playVideo

(JNIEnv * env, jclass clazz, jstring url, jobject surface)

{

LOGD("start playvideo... url");

const char * file_name = (*env)->GetStringUTFChars(env, url, JNI_FALSE);

av_register_all();

AVFormatContext * pFormatCtx = avformat_alloc_context();

// Open video file

if(avformat_open_input(&pFormatCtx, file_name, NULL, NULL)!=0) {

LOGE("Couldn't open file:%s\\n", file_name);

return -1; // Couldn't open file

}

// Retrieve stream information

if(avformat_find_stream_info(pFormatCtx, NULL)<0) {

LOGE("Couldn't find stream information.");

return -1;

}

// Find the first video stream

int videoStream = -1, i;

for (i = 0; i < pFormatCtx->nb_streams; i++) {

if (pFormatCtx->streams[i]->codec->codec_type == AVMEDIA_TYPE_VIDEO

&& videoStream < 0) {

videoStream = i;

}

}

if(videoStream==-1) {

LOGE("Didn't find a video stream.");

return -1; // Didn't find a video stream

}

// Get a pointer to the codec context for the video stream

AVCodecContext * pCodecCtx = pFormatCtx->streams[videoStream]->codec;

// Find the decoder for the video stream

AVCodec * pCodec = avcodec_find_decoder(pCodecCtx->codec_id);

if(pCodec==NULL) {

LOGE("Codec not found.");

return -1; // Codec not found

}

if(avcodec_open2(pCodecCtx, pCodec, NULL) < 0) {

LOGE("Could not open codec.");

return -1; // Could not open codec

}

// 获取native window

ANativeWindow* nativeWindow = ANativeWindow_fromSurface(env, surface);

// 获取视频宽高

int videoWidth = pCodecCtx->width;

int videoHeight = pCodecCtx->height;

// 设置native window的buffer大小,可自动拉伸

ANativeWindow_setBuffersGeometry(nativeWindow, videoWidth, videoHeight, WINDOW_FORMAT_RGBA_8888);

ANativeWindow_Buffer windowBuffer;

if(avcodec_open2(pCodecCtx, pCodec, NULL)<0) {

LOGE("Could not open codec.");

return -1; // Could not open codec

}

// Allocate video frame

AVFrame * pFrame = av_frame_alloc();

// 用于渲染

AVFrame * pFrameRGBA = av_frame_alloc();

if(pFrameRGBA == NULL || pFrame == NULL) {

LOGE("Could not allocate video frame.");

return -1;

}

// Determine required buffer size and allocate buffer

// buffer中数据就是用于渲染的,且格式为RGBA

int numBytes=av_image_get_buffer_size(AV_PIX_FMT_RGBA, pCodecCtx->width, pCodecCtx->height, 1);

uint8_t * buffer=(uint8_t *)av_malloc(numBytes*sizeof(uint8_t));

av_image_fill_arrays(pFrameRGBA->data, pFrameRGBA->linesize, buffer, AV_PIX_FMT_RGBA,

pCodecCtx->width, pCodecCtx->height, 1);

// 由于解码出来的帧格式不是RGBA的,在渲染之前需要进行格式转换

struct SwsContext *sws_ctx = sws_getContext(pCodecCtx->width,

pCodecCtx->height,

pCodecCtx->pix_fmt,

pCodecCtx->width,

pCodecCtx->height,

AV_PIX_FMT_RGBA,

SWS_BILINEAR,

NULL,

NULL,

NULL);

int frameFinished;

AVPacket packet;

while(av_read_frame(pFormatCtx, &packet)>=0) {

// Is this a packet from the video stream?

if(packet.stream_index==videoStream) {

// Decode video frame

avcodec_decode_video2(pCodecCtx, pFrame, &frameFinished, &packet);

// 并不是decode一次就可解码出一帧

if (frameFinished) {

// lock native window buffer

ANativeWindow_lock(nativeWindow, &windowBuffer, 0);

// 格式转换

sws_scale(sws_ctx, (uint8_t const * const *)pFrame->data,

pFrame->linesize, 0, pCodecCtx->height,

pFrameRGBA->data, pFrameRGBA->linesize);

// 获取stride

uint8_t * dst = windowBuffer.bits;

int dstStride = windowBuffer.stride * 4;

uint8_t * src = (uint8_t*) (pFrameRGBA->data[0]);

int srcStride = pFrameRGBA->linesize[0];

// 由于window的stride和帧的stride不同,因此需要逐行复制

int h;

for (h = 0; h < videoHeight; h++) {

memcpy(dst + h * dstStride, src + h * srcStride, srcStride);

}

ANativeWindow_unlockAndPost(nativeWindow);

}

}

av_packet_unref(&packet);

}

av_free(buffer);

av_free(pFrameRGBA);

// Free the YUV frame

av_free(pFrame);

// Close the codecs

avcodec_close(pCodecCtx);

// Close the video file

avformat_close_input(&pFormatCtx);

return 0;

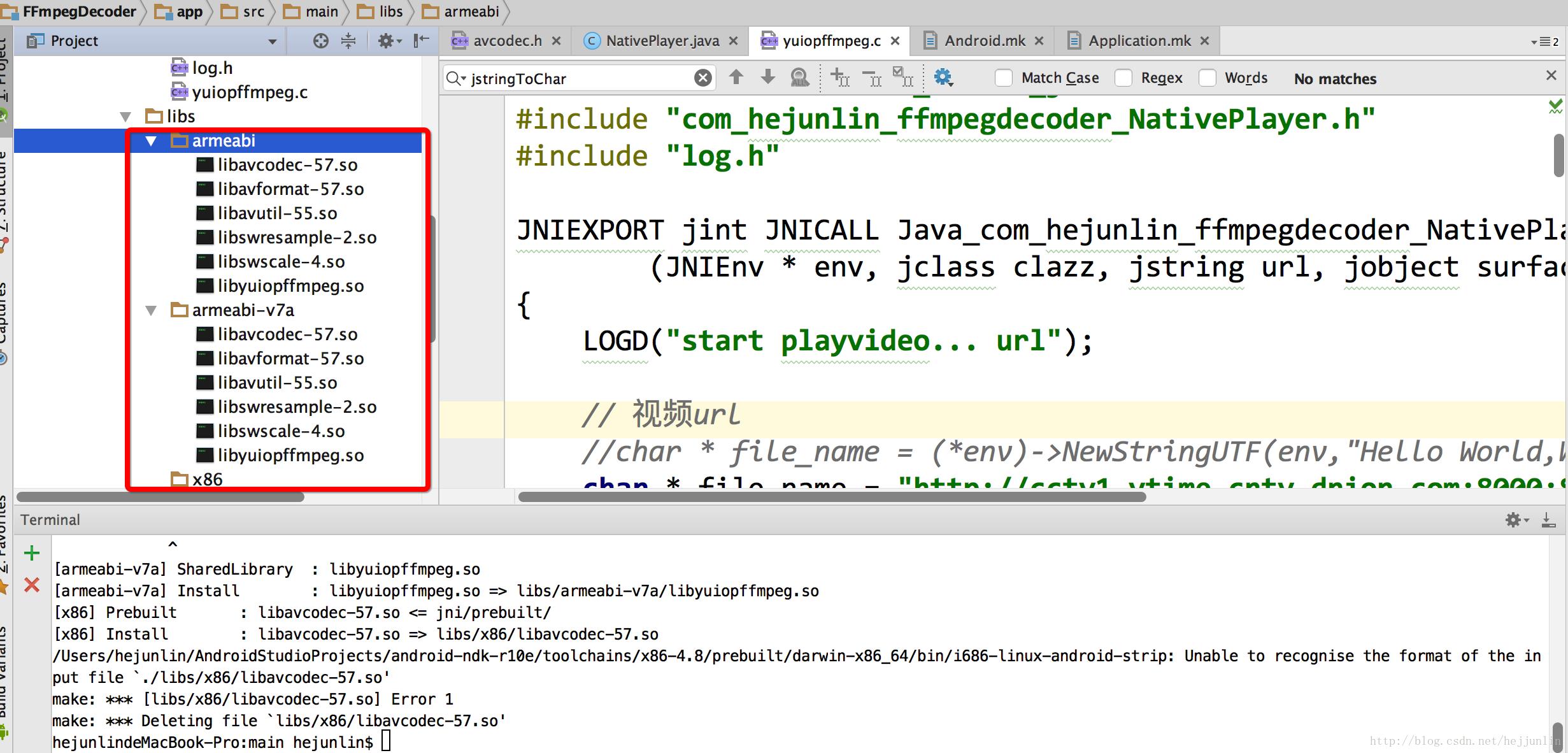

}这时就可以执行编译了,完成就能发现会多一个so,就是那个libyuiopffmpeg.so,这就是我编译出来的。
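The row-by-row copy at the end of the render loop is needed because the two buffers lay out their rows differently. With align 1, the RGBA frame's linesize is exactly width × 4 bytes and `av_image_get_buffer_size` returns width × height × 4. In contrast, `ANativeWindow_Buffer.stride` is counted in pixels and can be wider than the image (the GraphicBuffer lines in the log show w:1080 with s:1088, for example). The sketch below is a hypothetical plain-C helper, `copy_rgba_rows`, which runs without FFmpeg or the NDK. It copies width × 4 bytes per row and leaves the destination's padding untouched.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Copy height rows of width RGBA pixels (4 bytes each) between buffers
 * whose row strides (in bytes) may differ. In playVideo the equivalents are
 * dst_stride = windowBuffer.stride * 4 and src_stride = pFrameRGBA->linesize[0]. */
void copy_rgba_rows(uint8_t *dst, int dst_stride,
                    const uint8_t *src, int src_stride,
                    int width, int height) {
    int row_bytes = width * 4;
    /* Both strides must hold at least one full row of pixels. */
    assert(dst_stride >= row_bytes && src_stride >= row_bytes);
    for (int h = 0; h < height; h++) {
        memcpy(dst + (size_t)h * dst_stride,
               src + (size_t)h * src_stride,
               (size_t)row_bytes);
    }
}
```

Copying only width × 4 bytes per row stays correct even if the source rows were padded by a larger alignment, and it never writes past the visible part of a destination row.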

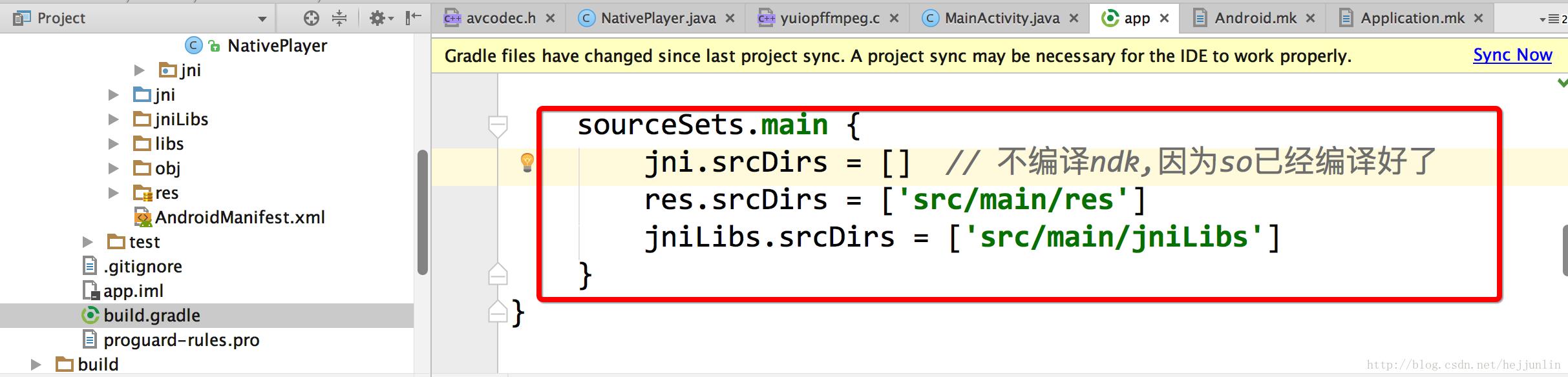

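Now create a jniLibs folder under main in Android Studio and copy the generated libraries into it, keeping the per-ABI subfolders. libyuiopffmpeg.so is the library we built, but it links against the five FFmpeg libraries, so those must be packaged alongside it (the linker lines in the log below show all of them being loaded). The per-ABI folder names, such as armeabi and x86, must not be renamed. Remember to also add the following configuration to build.gradle.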

Next, run the project. At runtime, there was another problem.

It turned out the network permission had not been added. Add the following to AndroidManifest.xml:

```xml
<uses-permission android:name="android.permission.INTERNET" />
```

Finally, the app runs. The result is shown below:

log:

03-02 00:23:59.363 4117-4117/? W/Zygote: MzIsRooted false

03-02 00:23:59.366 4117-4117/? I/art: Late-enabling -Xcheck:jni

03-02 00:23:59.410 4117-4132/? E/art: Failed sending reply to debugger: Broken pipe

03-02 00:23:59.410 4117-4132/? I/art: Debugger is no longer active

03-02 00:23:59.530 4117-4117/? D/ActivityThread: hoder:android.app.IActivityManager$ContentProviderHolder@3001a389,provider,holder.Provider:android.content.ContentProviderProxy@357c8f8e

03-02 00:23:59.541 4117-4117/? D/Proxy: setHttpRequestCheckHandler

03-02 00:23:59.571 4117-4117/? I/InstantRun: Instant Run Runtime started. Android package is com.hejunlin.ffmpegdecoder, real application class is null.

03-02 00:23:59.640 4117-4117/? D/FlymeTrafficTracking: tag (29) com.hejunlin.ffmpegdecoder main uid 10087

03-02 00:23:59.641 4117-4117/? D/NetworkManagementSocketTagger: tagSocket(29) with statsTag=0xffffffff, statsUid=-1

03-02 00:23:59.652 4117-4117/? D/ActivityThread: BIND_APPLICATION handled : 0 / AppBindData{appInfo=ApplicationInfo{9d7ef45 com.hejunlin.ffmpegdecoder}}

03-02 00:23:59.653 4117-4117/? V/ActivityThread: Handling launch of ActivityRecord{1036169a token=android.os.BinderProxy@3706bbcb {com.hejunlin.ffmpegdecoder/com.hejunlin.ffmpegdecoder.MainActivity}}

03-02 00:23:59.788 4117-4117/? V/ActivityThread: ActivityRecord{1036169a token=android.os.BinderProxy@3706bbcb {com.hejunlin.ffmpegdecoder/com.hejunlin.ffmpegdecoder.MainActivity}}: app=android.app.Application@1f0d3ac1, appName=com.hejunlin.ffmpegdecoder, pkg=com.hejunlin.ffmpegdecoder, comp={com.hejunlin.ffmpegdecoder/com.hejunlin.ffmpegdecoder.MainActivity}, dir=/data/app/com.hejunlin.ffmpegdecoder-1/base.apk

03-02 00:23:59.863 4117-4117/? W/art: Before Android 4.1, method android.graphics.PorterDuffColorFilter android.support.graphics.drawable.VectorDrawableCompat.updateTintFilter(android.graphics.PorterDuffColorFilter, android.content.res.ColorStateList, android.graphics.PorterDuff$Mode) would have incorrectly overridden the package-private method in android.graphics.drawable.Drawable

03-02 00:23:59.973 4117-4117/? W/Resources: Converting to string: TypedValue{t=0x12/d=0x0 a=4 r=0x7f0b0045}

03-02 00:23:59.983 4117-4117/? W/Resources: Converting to string: TypedValue{t=0x12/d=0x0 a=4 r=0x7f0b0046}

03-02 00:24:00.022 4117-4117/? W/Resources: Converting to string: TypedValue{t=0x12/d=0x0 a=4 r=0x7f0b0047}

03-02 00:24:00.022 4117-4117/? W/Resources: Converting to string: TypedValue{t=0x5/d=0x1 a=4 r=0x7f07000f}

03-02 00:24:00.022 4117-4117/? W/Resources: Converting to string: TypedValue{t=0x5/d=0x1 a=4 r=0x7f07000e}

03-02 00:24:00.022 4117-4117/? W/Resources: Converting to string: TypedValue{t=0x5/d=0x3001 a=1 r=0x10500cc}

03-02 00:24:00.032 4117-4117/? W/Resources: Converting to string: TypedValue{t=0x5/d=0x1 a=1 r=0x10500d3}

03-02 00:24:00.042 4117-4117/? W/Resources: Converting to string: TypedValue{t=0x1/d=0x7f080114 a=4 r=0x7f080114}

03-02 00:24:00.042 4117-4117/? W/Resources: Converting to string: TypedValue{t=0x12/d=0x0 a=4 r=0x7f0b0048}

03-02 00:24:00.071 4117-4117/? W/Resources: Converting to string: TypedValue{t=0x12/d=0x0 a=4 r=0x7f0b0056}

03-02 00:24:00.073 4117-4117/? W/Resources: Converting to string: TypedValue{t=0x12/d=0x0 a=4 r=0x7f0b0057}

03-02 00:24:00.074 4117-4117/? D/SurfaceView: checkSurfaceViewlLogProperty get invalid command

03-02 00:24:00.075 4117-4117/? V/ActivityThread: Performing resume of ActivityRecord{1036169a token=android.os.BinderProxy@3706bbcb {com.hejunlin.ffmpegdecoder/com.hejunlin.ffmpegdecoder.MainActivity}}

03-02 00:24:00.090 4117-4117/? D/ActivityThread: ACT-AM_ON_RESUME_CALLED ActivityRecord{1036169a token=android.os.BinderProxy@3706bbcb {com.hejunlin.ffmpegdecoder/com.hejunlin.ffmpegdecoder.MainActivity}}

03-02 00:24:00.090 4117-4117/? V/ActivityThread: Resume ActivityRecord{1036169a token=android.os.BinderProxy@3706bbcb {com.hejunlin.ffmpegdecoder/com.hejunlin.ffmpegdecoder.MainActivity}} started activity: false, hideForNow: false, finished: false

03-02 00:24:00.090 4117-4117/? V/PhoneWindow: DecorView setVisiblity: visibility = 4 ,Parent =null, this =com.android.internal.policy.impl.PhoneWindow$DecorView{4f1c1fd I.E..... R.....ID 0,0-0,0}

03-02 00:24:00.102 4117-4142/? D/OpenGLRenderer: initialize DisplayEventReceiver 0xf4a03fb8

03-02 00:24:00.102 4117-4142/? D/OpenGLRenderer: Use EGL_SWAP_BEHAVIOR_PRESERVED: true

03-02 00:24:00.105 4117-4117/? D/ViewRootImpl: hardware acceleration is enabled, this = ViewRoot{2c1b2da1 com.hejunlin.ffmpegdecoder/com.hejunlin.ffmpegdecoder.MainActivity,ident = 0}

03-02 00:24:00.116 4117-4117/? V/ActivityThread: Resuming ActivityRecord{1036169a token=android.os.BinderProxy@3706bbcb {com.hejunlin.ffmpegdecoder/com.hejunlin.ffmpegdecoder.MainActivity}} with isForward=false

03-02 00:24:00.117 4117-4117/? V/PhoneWindow: DecorView setVisiblity: visibility = 0 ,Parent =ViewRoot{2c1b2da1 com.hejunlin.ffmpegdecoder/com.hejunlin.ffmpegdecoder.MainActivity,ident = 0}, this =com.android.internal.policy.impl.PhoneWindow$DecorView{4f1c1fd V.E..... R.....ID 0,0-0,0}

03-02 00:24:00.117 4117-4117/? V/ActivityThread: Scheduling idle handler for ActivityRecord{1036169a token=android.os.BinderProxy@3706bbcb {com.hejunlin.ffmpegdecoder/com.hejunlin.ffmpegdecoder.MainActivity}}

03-02 00:24:00.117 4117-4117/? D/ActivityThread: ACT-LAUNCH_ACTIVITY handled : 0 / ActivityRecord{1036169a token=android.os.BinderProxy@3706bbcb {com.hejunlin.ffmpegdecoder/com.hejunlin.ffmpegdecoder.MainActivity}}

03-02 00:24:00.118 4117-4117/? I/SurfaceView: updateWindow -- onWindowVisibilityChanged, visibility = 0, this = android.view.SurfaceView{3d6c7208 V.E..... ......I. 0,0-0,0 #7f0b0057 app:id/surface_view}

03-02 00:24:00.198 4117-4142/? D/MALI: eglInitialize:1479: [+]

03-02 00:24:00.199 4117-4117/? I/SurfaceView: updateWindow -- setFrame, this = android.view.SurfaceView{3d6c7208 V.E..... ......ID 0,0-1920,861 #7f0b0057 app:id/surface_view}

03-02 00:24:00.202 4117-4142/? D/MALI: eglInitialize:1850: [-]

[ 03-02 00:24:00.202 4117: 4142 I/ ]

elapse(include ctx switch):3374 (ms), eglInitialize

03-02 00:24:00.202 4117-4142/? I/OpenGLRenderer: Initialized EGL, version 1.4

03-02 00:24:00.202 4117-4142/? D/MALI: eglCreateContext:206: [MALI] eglCreateContext display 0xf4a28280, share context 0x0 here.

03-02 00:24:00.210 4117-4142/? D/MALI: gles_context_new:248: Create GLES ctx 0xe0ce2008 successfully

03-02 00:24:00.210 4117-4142/? D/MALI: eglCreateContext:543: [MALI] eglCreateContext end. Created context 0xe0c53b68 here.

03-02 00:24:00.211 4117-4142/? D/OpenGLRenderer: TaskManager() 0xf48b0f48, cpu = 8, thread = 4

03-02 00:24:00.215 4117-4142/? D/OpenGLRenderer: Enabling debug mode 0

03-02 00:24:00.215 4117-4142/? D/Surface: Surface::connect(this=0xf49b0e00,api=1)

03-02 00:24:00.216 4117-4142/? D/mali_winsys: new_window_surface returns 0x3000

03-02 00:24:00.216 4117-4142/? D/Surface: Surface::allocateBuffers(this=0xf49b0e00)

03-02 00:24:00.219 4117-4157/? W/linker: libyuiopffmpeg.so: unused DT entry: type 0x6ffffffe arg 0x1058

03-02 00:24:00.219 4117-4157/? W/linker: libyuiopffmpeg.so: unused DT entry: type 0x6fffffff arg 0x4

03-02 00:24:00.225 4117-4157/? W/linker: libavcodec-57.so: unused DT entry: type 0x6ffffffe arg 0x5da4

03-02 00:24:00.225 4117-4157/? W/linker: libavcodec-57.so: unused DT entry: type 0x6fffffff arg 0x2

03-02 00:24:00.226 4117-4157/? W/linker: libavformat-57.so: unused DT entry: type 0x6ffffffe arg 0x6408

03-02 00:24:00.226 4117-4157/? W/linker: libavformat-57.so: unused DT entry: type 0x6fffffff arg 0x2

03-02 00:24:00.227 4117-4157/? W/linker: libswresample-2.so: unused DT entry: type 0x6ffffffe arg 0xcd4

03-02 00:24:00.228 4117-4157/? W/linker: libswresample-2.so: unused DT entry: type 0x6fffffff arg 0x1

03-02 00:24:00.228 4117-4117/? I/SurfaceView: Punch a hole(dispatchDraw), w = 1920, h = 861, this = android.view.SurfaceView{3d6c7208 V.E..... ........ 0,0-1920,861 #7f0b0057 app:id/surface_view}

03-02 00:24:00.228 4117-4157/? W/linker: libswscale-4.so: unused DT entry: type 0x6ffffffe arg 0xd70

03-02 00:24:00.229 4117-4157/? W/linker: libswscale-4.so: unused DT entry: type 0x6fffffff arg 0x1

03-02 00:24:00.235 4117-4157/? D/jni/yuiopffmpeg.c: Java_com_hejunlin_ffmpegdecoder_NativePlayer_playVideo:start playvideo... url

03-02 00:24:00.236 4117-4157/? D/libc-netbsd: [getaddrinfo]: hostname=cctv1.vtime.cntv.dnion.com; servname=(null); cache_mode=(null), netid=0; mark=0

03-02 00:24:00.236 4117-4157/? D/libc-netbsd: [getaddrinfo]: ai_addrlen=0; ai_canonname=(null); ai_flags=4; ai_family=0

03-02 00:24:00.236 4117-4157/? D/libc-netbsd: [getaddrinfo]: hostname=cctv1.vtime.cntv.dnion.com; servname=(null); cache_mode=(null), netid=0; mark=0

03-02 00:24:00.236 4117-4157/? D/libc-netbsd: [getaddrinfo]: ai_addrlen=0; ai_canonname=(null); ai_flags=4; ai_family=0

03-02 00:24:00.236 4117-4157/? D/libc-netbsd: [getaddrinfo]: hostname=cctv1.vtime.cntv.dnion.com; servname=8000; cache_mode=(null), netid=0; mark=0

03-02 00:24:00.236 4117-4157/? D/libc-netbsd: [getaddrinfo]: ai_addrlen=0; ai_canonname=(null); ai_flags=0; ai_family=0

03-02 00:24:00.241 4117-4142/? D/Surface: Surface::setBuffersDimensions(this=0xf49b0e00,w=1080,h=1920)

03-02 00:24:00.256 4117-4142/? D/GraphicBuffer: register, handle(0xe0c03980) (w:1080 h:1920 s:1088 f:0x1 u:0x000b00)

03-02 00:24:00.261 4117-4142/? W/MALI: glDrawArrays:714: [MALI] glDrawArrays takes more than 5ms here. Total elapse time(us): 20304

03-02 00:24:00.262 4117-4157/? D/libc-netbsd: getaddrinfo: cctv1.vtime.cntv.dnion.com get result from proxy >>

03-02 00:24:00.268 4117-4142/? D/Surface: Surface::setBuffersDimensions(this=0xf49b0e00,w=1080,h=1920)

03-02 00:24:00.273 4117-4142/? D/GraphicBuffer: register, handle(0xe0c04380) (w:1080 h:1920 s:1088 f:0x1 u:0x000b00)

03-02 00:24:00.324 4117-4117/? W/Resources: Converting to string: TypedValue{t=0x5/d=0x2401 a=1 r=0x10500d7}

03-02 00:24:00.324 4117-4117/? W/Resources: Converting to string: TypedValue{t=0x5/d=0x3001 a=1 r=0x10500d9}

03-02 00:24:00.326 4117-4117/? W/Resources: Converting to string: TypedValue{t=0x5/d=0x601 a=1 r=0x10500d4}

03-02 00:24:00.326 4117-4117/? W/Resources: Converting to string: TypedValue{t=0x5/d=0xa01 a=1 r=0x10500d5}

03-02 00:24:00.335 4117-4117/? D/STATUS_BAR_TINT: isBlackColor=false,color=argb(255,245,72,76)

03-02 00:24:00.336 4117-4117/? D/STATUS_BAR_TINT: isSimilarColor,red=0,green=0,blue=0

03-02 00:24:00.338 4117-4117/? I/SurfaceView: updateWindow -- UPDATE_WINDOW_MSG, this = Handler (android.view.SurfaceView$1) {18557a20}

03-02 00:24:00.346 4117-4117/? I/SurfaceView: updateWindow -- setFrame, this = android.view.SurfaceView{3d6c7208 V.E..... ......I. 0,0-1920,861 #7f0b0057 app:id/surface_view}

03-02 00:24:00.347 4117-4117/? I/SurfaceView: Punch a hole(dispatchDraw), w = 1920, h = 861, this = android.view