Programming: what does "exited with exitcode = 1" mean, and how do I fix it? Urgent!


Preface: This article was compiled by the editors of 小常识网 (cha138.com). It covers what "exited with exitcode = 1" means and how to fix it. We hope it is useful to you.

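DOS exit codes (error codes):

1 Invalid DOS function number

2 File not found

3 Path not found

4 Too many open files

5 File access denied

6 Invalid file handle

12 Invalid file access code

15 Invalid drive number

16 Cannot remove the current directory

17 Cannot rename a file across drives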
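If you check these codes often, the list can be kept as a small lookup table. The Python sketch below is illustrative only: the dictionary is copied from the list above, `describe_exit_code` is a hypothetical helper name, and codes that are not in the list are simply reported as unknown rather than guessed.

```python
# Lookup table copied from the DOS exit code list above.
DOS_EXIT_CODES = {
    1: "Invalid DOS function number",
    2: "File not found",
    3: "Path not found",
    4: "Too many open files",
    5: "File access denied",
    6: "Invalid file handle",
    12: "Invalid file access code",
    15: "Invalid drive number",
    16: "Cannot remove the current directory",
    17: "Cannot rename a file across drives",
}


def describe_exit_code(code: int) -> str:
    """Return a readable description; codes missing from the list are flagged, not guessed."""
    message = DOS_EXIT_CODES.get(code)
    if message is None:
        return f"exitcode={code}: not in this list"
    return f"exitcode={code}: {message}"


if __name__ == "__main__":
    print(describe_exit_code(1))  # exitcode=1: Invalid DOS function number
```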

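exitcode=1 means "invalid DOS function number". It is usually caused by fp (Free Pascal) itself being unstable or by a memory overflow; if your program uses arrays, try making them smaller. It can also be a bug in your own program: try stepping through it with F7 to see where it goes wrong.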

Reference: http://zhidao.baidu.com/question/287867160.html

Reference answer A: invalid file access code, or path not found.

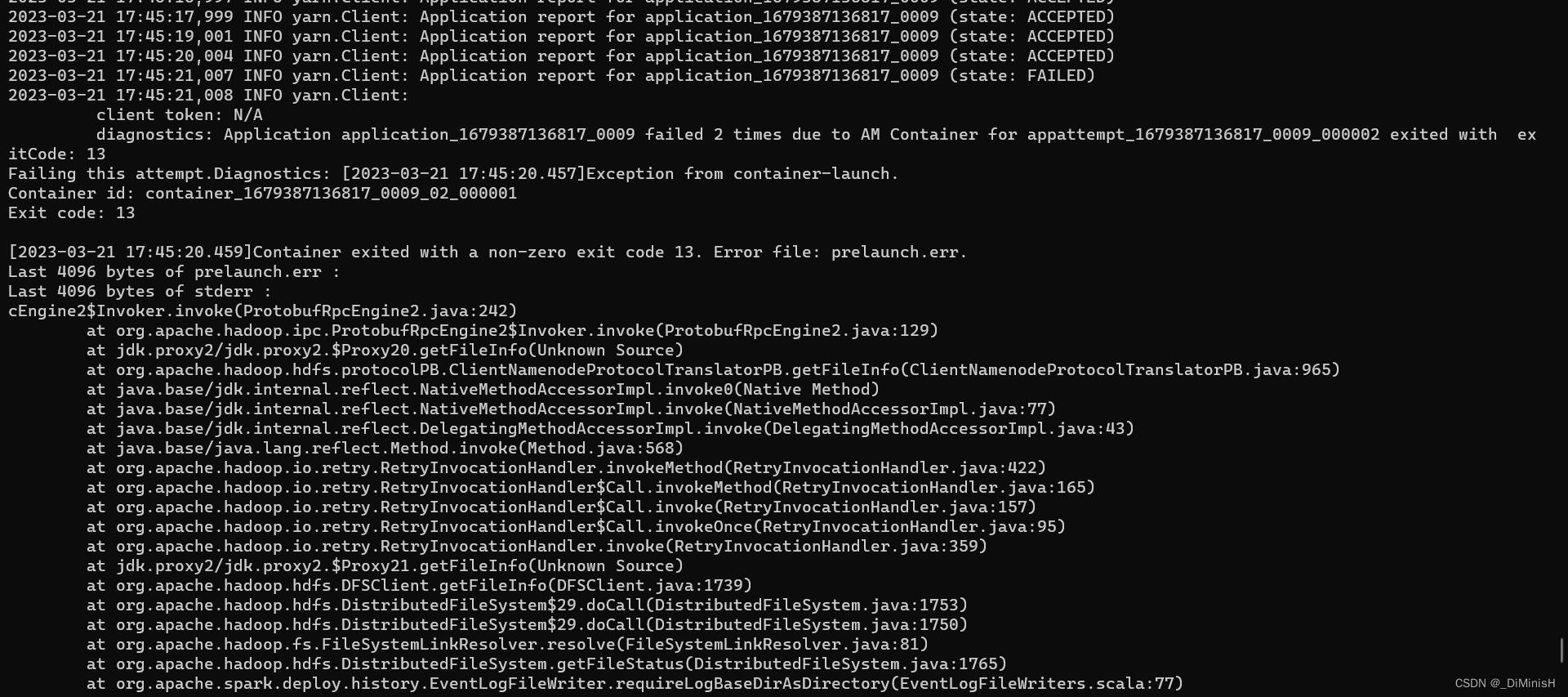

Fixing "Application xxx failed 2 times due to AM Container for xxx exited with exitCode: 13"

Fixing "Spark Application application_1679387136817_0009 failed 2 times due to AM Container for appattempt_1679387136817_0009_000002 exited with exitCode: 13"

Problem

My setup: Hadoop 3.3.4, Java 17 and Spark 3.3.2.

1. Error when submitting a job after starting Hadoop and Spark

Once everything had started, I ran the following command in the console:

spark-submit --master yarn --deploy-mode cluster --class org.apache.spark.examples.SparkPi $SPARK_HOME/examples/jars/spark-examples_2.12-3.3.2.jar 100

It failed with the following output:

2023-03-21 17:45:04,392 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2023-03-21 17:45:04,460 INFO client.DefaultNoHARMFailoverProxyProvider: Connecting to ResourceManager at master/192.168.186.141:8032

2023-03-21 17:45:04,968 INFO conf.Configuration: resource-types.xml not found

2023-03-21 17:45:04,968 INFO resource.ResourceUtils: Unable to find 'resource-types.xml'.

2023-03-21 17:45:04,980 INFO yarn.Client: Verifying our application has not requested more than the maximum memory capability of the cluster (8192 MB per container)

2023-03-21 17:45:04,981 INFO yarn.Client: Will allocate AM container, with 1408 MB memory including 384 MB overhead

2023-03-21 17:45:04,982 INFO yarn.Client: Setting up container launch context for our AM

2023-03-21 17:45:04,982 INFO yarn.Client: Setting up the launch environment for our AM container

2023-03-21 17:45:04,994 INFO yarn.Client: Preparing resources for our AM container

2023-03-21 17:45:05,019 WARN yarn.Client: Neither spark.yarn.jars nor spark.yarn.archive is set, falling back to uploading libraries under SPARK_HOME.

2023-03-21 17:45:05,626 INFO yarn.Client: Uploading resource file:/tmp/spark-300fccc5-6f53-48f7-b289-43938b5170d1/__spark_libs__10348349781677306272.zip -> hdfs://master:9000/user/root/.sparkStaging/application_1679387136817_0009/__spark_libs__10348349781677306272.zip

2023-03-21 17:45:07,612 INFO yarn.Client: Uploading resource file:/opt/spark/examples/jars/spark-examples_2.12-3.3.2.jar -> hdfs://master:9000/user/root/.sparkStaging/application_1679387136817_0009/spark-examples_2.12-3.3.2.jar

2023-03-21 17:45:07,817 INFO yarn.Client: Uploading resource file:/tmp/spark-300fccc5-6f53-48f7-b289-43938b5170d1/__spark_conf__14133738159966771512.zip -> hdfs://master:9000/user/root/.sparkStaging/application_1679387136817_0009/__spark_conf__.zip

2023-03-21 17:45:07,887 INFO spark.SecurityManager: Changing view acls to: root

2023-03-21 17:45:07,887 INFO spark.SecurityManager: Changing modify acls to: root

2023-03-21 17:45:07,887 INFO spark.SecurityManager: Changing view acls groups to:

2023-03-21 17:45:07,888 INFO spark.SecurityManager: Changing modify acls groups to:

2023-03-21 17:45:07,888 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); groups with view permissions: Set(); users with modify permissions: Set(root); groups with modify permissions: Set()

2023-03-21 17:45:07,934 INFO yarn.Client: Submitting application application_1679387136817_0009 to ResourceManager

2023-03-21 17:45:07,972 INFO impl.YarnClientImpl: Submitted application application_1679387136817_0009

2023-03-21 17:45:08,976 INFO yarn.Client: Application report for application_1679387136817_0009 (state: ACCEPTED)

2023-03-21 17:45:08,978 INFO yarn.Client:

client token: N/A

diagnostics: AM container is launched, waiting for AM container to Register with RM

ApplicationMaster host: N/A

ApplicationMaster RPC port: -1

queue: default

start time: 1679391907944

final status: UNDEFINED

tracking URL: http://master:8088/proxy/application_1679387136817_0009/

user: root

2023-03-21 17:45:09,981 INFO yarn.Client: Application report for application_1679387136817_0009 (state: ACCEPTED)

2023-03-21 17:45:10,983 INFO yarn.Client: Application report for application_1679387136817_0009 (state: ACCEPTED)

2023-03-21 17:45:11,987 INFO yarn.Client: Application report for application_1679387136817_0009 (state: ACCEPTED)

2023-03-21 17:45:12,989 INFO yarn.Client: Application report for application_1679387136817_0009 (state: ACCEPTED)

2023-03-21 17:45:13,991 INFO yarn.Client: Application report for application_1679387136817_0009 (state: ACCEPTED)

2023-03-21 17:45:14,993 INFO yarn.Client: Application report for application_1679387136817_0009 (state: ACCEPTED)

2023-03-21 17:45:15,995 INFO yarn.Client: Application report for application_1679387136817_0009 (state: ACCEPTED)

2023-03-21 17:45:16,997 INFO yarn.Client: Application report for application_1679387136817_0009 (state: ACCEPTED)

2023-03-21 17:45:17,999 INFO yarn.Client: Application report for application_1679387136817_0009 (state: ACCEPTED)

2023-03-21 17:45:19,001 INFO yarn.Client: Application report for application_1679387136817_0009 (state: ACCEPTED)

2023-03-21 17:45:20,004 INFO yarn.Client: Application report for application_1679387136817_0009 (state: ACCEPTED)

2023-03-21 17:45:21,007 INFO yarn.Client: Application report for application_1679387136817_0009 (state: FAILED)

2023-03-21 17:45:21,008 INFO yarn.Client:

client token: N/A

diagnostics: Application application_1679387136817_0009 failed 2 times due to AM Container for appattempt_1679387136817_0009_000002 exited with exitCode: 13

Failing this attempt.Diagnostics: [2023-03-21 17:45:20.457]Exception from container-launch.

Container id: container_1679387136817_0009_02_000001

Exit code: 13

[2023-03-21 17:45:20.459]Container exited with a non-zero exit code 13. Error file: prelaunch.err.

Last 4096 bytes of prelaunch.err :

Last 4096 bytes of stderr :

cEngine2$Invoker.invoke(ProtobufRpcEngine2.java:242)

at org.apache.hadoop.ipc.ProtobufRpcEngine2$Invoker.invoke(ProtobufRpcEngine2.java:129)

at jdk.proxy2/jdk.proxy2.$Proxy20.getFileInfo(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.getFileInfo(ClientNamenodeProtocolTranslatorPB.java:965)

at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:77)

at java.base/jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.base/java.lang.reflect.Method.invoke(Method.java:568)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:422)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeMethod(RetryInvocationHandler.java:165)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invoke(RetryInvocationHandler.java:157)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeOnce(RetryInvocationHandler.java:95)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:359)

at jdk.proxy2/jdk.proxy2.$Proxy21.getFileInfo(Unknown Source)

at org.apache.hadoop.hdfs.DFSClient.getFileInfo(DFSClient.java:1739)

at org.apache.hadoop.hdfs.DistributedFileSystem$29.doCall(DistributedFileSystem.java:1753)

at org.apache.hadoop.hdfs.DistributedFileSystem$29.doCall(DistributedFileSystem.java:1750)

at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)

at org.apache.hadoop.hdfs.DistributedFileSystem.getFileStatus(DistributedFileSystem.java:1765)

at org.apache.spark.deploy.history.EventLogFileWriter.requireLogBaseDirAsDirectory(EventLogFileWriters.scala:77)

at org.apache.spark.deploy.history.SingleEventLogFileWriter.start(EventLogFileWriters.scala:221)

at org.apache.spark.scheduler.EventLoggingListener.start(EventLoggingListener.scala:83)

at org.apache.spark.SparkContext.<init>(SparkContext.scala:622)

at org.apache.spark.SparkContext$.getOrCreate(SparkContext.scala:2714)

at org.apache.spark.sql.SparkSession$Builder.$anonfun$getOrCreate$2(SparkSession.scala:953)

at scala.Option.getOrElse(Option.scala:189)

at org.apache.spark.sql.SparkSession$Builder.getOrCreate(SparkSession.scala:947)

at org.apache.spark.examples.SparkPi$.main(SparkPi.scala:30)

at org.apache.spark.examples.SparkPi.main(SparkPi.scala)

at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:77)

at java.base/jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.base/java.lang.reflect.Method.invoke(Method.java:568)

at org.apache.spark.deploy.yarn.ApplicationMaster$$anon$2.run(ApplicationMaster.scala:739)

Caused by: java.net.ConnectException: Connection refused

at java.base/sun.nio.ch.Net.pollConnect(Native Method)

at java.base/sun.nio.ch.Net.pollConnectNow(Net.java:672)

at java.base/sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:946)

at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:205)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:586)

at org.apache.hadoop.ipc.Client$Connection.setupConnection(Client.java:711)

at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:833)

at org.apache.hadoop.ipc.Client$Connection.access$3800(Client.java:414)

at org.apache.hadoop.ipc.Client.getConnection(Client.java:1677)

at org.apache.hadoop.ipc.Client.call(Client.java:1502)

... 35 more

2023-03-21 17:45:20,318 INFO yarn.ApplicationMaster: Deleting staging directory hdfs://master:9000/user/root/.sparkStaging/application_1679387136817_0009

2023-03-21 17:45:20,405 INFO util.ShutdownHookManager: Shutdown hook called

2023-03-21 17:45:20,406 INFO util.ShutdownHookManager: Deleting directory /opt/localdir/usercache/root/appcache/application_1679387136817_0009/spark-b46475ca-fe68-435b-b528-d6a235d0f5c4
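The stderr tail ends in `Caused by: java.net.ConnectException: Connection refused`, and the frames above it show the call came from `EventLogFileWriter.requireLogBaseDirAsDirectory`, i.e. the ApplicationMaster failed while asking HDFS about the Spark event log directory (the one set by `spark.eventLog.dir`). So the AM could not open a TCP connection to whatever NameNode address that directory points to. One plausible thing to compare is that address against `hdfs://master:9000`, which the client used successfully for the staging upload earlier in the log. The Python sketch below is a hypothetical helper, not part of Spark or Hadoop; it assumes the event log directory is an `hdfs://host:port/...` URI with an explicit port, and the `/spark-logs` path in the example is made up. Run it on a cluster node to see whether that host:port accepts connections.

```python
from __future__ import annotations

import socket
from urllib.parse import urlparse


def namenode_address(uri: str) -> tuple[str, int]:
    """Extract (host, port) from an hdfs:// URI such as a spark.eventLog.dir value.

    Assumption: the URI carries an explicit port; no default port is guessed.
    """
    parsed = urlparse(uri)
    if parsed.scheme != "hdfs" or not parsed.hostname or parsed.port is None:
        raise ValueError(f"expected hdfs://host:port/..., got {uri!r}")
    return parsed.hostname, parsed.port


def is_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # connection refused, timeout, unknown host, ...
        return False


if __name__ == "__main__":
    # Hypothetical event log directory; replace with your spark.eventLog.dir value.
    uri = "hdfs://master:9000/spark-logs"
    host, port = namenode_address(uri)
    print(uri, "reachable" if is_reachable(host, port) else "NOT reachable")
```

A "NOT reachable" result for the event log address while `master:9000` works would point at a host or port mismatch in the configuration rather than at Spark itself; this is a diagnostic aid under the stated assumptions, not a confirmed fix.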

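The log line `Will allocate AM container, with 1408 MB memory including 384 MB overhead` can be checked by hand, and it does not appear to be related to the failure (it is well under the 8192 MB cap the log reports). In cluster mode the AM hosts the driver, and the command passes no `--driver-memory`, so Spark's defaults apply: 1024 MB of driver memory plus an overhead of max(0.10 × 1024, 384) = 384 MB. The sketch below assumes Spark 3.3 defaults (`spark.driver.memory` = 1g, overhead factor 0.10, minimum overhead 384 MiB); `am_container_mb` is a hypothetical name.

```python
from __future__ import annotations

# Assumed Spark 3.3 defaults for the driver/AM container in YARN cluster mode.
MIN_OVERHEAD_MB = 384
DEFAULT_OVERHEAD_FACTOR = 0.10


def am_container_mb(driver_memory_mb: int = 1024,
                    overhead_factor: float = DEFAULT_OVERHEAD_FACTOR) -> tuple[int, int]:
    """Return (total_mb, overhead_mb): overhead is factor * memory, floored at 384 MB."""
    overhead = max(int(overhead_factor * driver_memory_mb), MIN_OVERHEAD_MB)
    return driver_memory_mb + overhead, overhead


if __name__ == "__main__":
    print(am_container_mb())  # (1408, 384), matching the log line
```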
