High-Availability Clusters: Resource Management in heartbeat with the CRM

Posted zouqingyun

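I. High-availability clusters: resource management in heartbeat with the CRM

1. Cluster operating models:

A/P: two nodes working in an active/passive (primary/standby) model

N-M: N nodes, M services, with N > M

N-N: N nodes, N services

A/A: active/active (dual-primary) model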

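2. How resources fail over

rgmanager: failover domain, priority

pacemaker:

Resource stickiness: how strongly a resource prefers to stay on the node where it is currently running

Resource constraints (three types):

Location constraint: which node a resource prefers to run on

inf: positive infinity, the resource runs on this node whenever the node is available

n: a positive score, the resource prefers this node

-n: a negative score, the resource prefers to stay away from this node

-inf: negative infinity, the resource never runs on this node

Colocation constraint: how strongly resources tend to run on the same node

inf: the resources must run on the same node

-inf: the resources must never run on the same node

Order constraint: the order in which resources are started and stopped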
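How stickiness and location scores interact can be illustrated with a small model. The sketch below is a simplification, not the CRM's actual placement code. It assumes the Pacemaker conventions that INFINITY is 1,000,000, that -INFINITY wins when it is added to +INFINITY, and that a node with a negative total cannot run the resource. `add_scores` and `pick_node` are hypothetical helper names.

```python
INFINITY = 1000000  # assumption: Pacemaker's numeric value for "inf"


def add_scores(a, b):
    """Add two scores, saturating at +/-INFINITY (-INFINITY always wins)."""
    if a <= -INFINITY or b <= -INFINITY:
        return -INFINITY
    if a >= INFINITY or b >= INFINITY:
        return INFINITY
    return max(-INFINITY, min(INFINITY, a + b))


def pick_node(location_scores, current_node=None, stickiness=0):
    """Return the node with the highest total score, or None if none is eligible."""
    totals = {}
    for node, score in location_scores.items():
        # Stickiness only counts for the node the resource is already on.
        bonus = stickiness if node == current_node else 0
        totals[node] = add_scores(score, bonus)
    # Assumption: a negative total means the resource may not run there.
    eligible = {node: total for node, total in totals.items() if total >= 0}
    if not eligible:
        return None
    return max(eligible, key=eligible.get)


prefs = {"snn.abc.com": 100, "datanode4.abc.com": 0}
print(pick_node(prefs))
print(pick_node(prefs, "datanode4.abc.com", stickiness=200))
```

With no stickiness the resource goes to the node with the higher location score. With a stickiness of 200 it stays on datanode4.abc.com, because 0 + 200 outweighs the 100 that snn.abc.com offers.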

3. How to keep the three resources of a web service (VIP, httpd, and filesystem) on the same node

1. Colocation constraints

2. A resource group
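A group is often the simpler of the two options, because by default a group both keeps its members on one node and orders them: members start in the order listed and stop in the reverse order. A minimal sketch of that ordering follows; the member names and the two helper functions are hypothetical and only model the behaviour.

```python
def start_order(group):
    """Members of a group start in the order they are listed."""
    return list(group)


def stop_order(group):
    """Members stop in the reverse of the start order."""
    return list(reversed(group))


# Hypothetical group: the filesystem and the address come up before httpd.
webservice = ["filesystem", "VIP", "httpd"]
print(start_order(webservice))
print(stop_order(webservice))
```

Listing httpd last means it starts only after its document root is mounted and its address is up, and it is the first member to be stopped.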

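4. How to handle the resources running on a node when that node is no longer a member of the cluster

stop: stop the resources

ignore: carry on as if nothing had happened

freeze: keep running what is already active, but take on nothing new

suicide: kill the server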

5. Whether a resource is started as soon as it has been configured

target-role?

6. Resource agent (RA) types

heartbeat legacy

LSB

OCF

STONITH

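7. Resource types

primitive (native): a primitive resource, which can run on only one node

group: a resource group

clone: a cloned resource

Total number of clones, and the maximum number of clones that may run on each node

Examples: STONITH, cluster filesystem

master/slave: a master/slave resource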

8. Distributed locks:

/usr/lib64/heartbeat

haresources2cib.py

9. Graphical configuration

ha.cf

crm on

/usr/lib64/heartbeat/ha_propagate copies the configuration files to the other nodes

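10. Installing the GUI

heartbeat v2 uses the CRM as its cluster resource manager. To enable it, add the following to ha.cf:

crm on

The CRM listens on 5560/tcp through mgmtd

On the host where hb_gui will be run, set a password for the hacluster user, then start the GUI with hb_gui

with quorum: the partition holds a quorum of votes

without quorum: the partition does not hold a quorum of votes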
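The usual meaning of "holding a quorum" is a strict majority of the votes. The sketch below shows that rule only; it assumes one vote per node, and heartbeat's own membership layer may apply additional tie-breaking that is not modelled here. `has_quorum` is a hypothetical helper.

```python
def has_quorum(votes_present, total_votes):
    """A partition has quorum when it holds more than half of all votes."""
    return 2 * votes_present > total_votes


# Three-node cluster: two surviving nodes keep quorum, one does not.
print(has_quorum(2, 3))
print(has_quorum(1, 3))
# Two nodes under a plain majority rule: a lone survivor holds 1 of 2 votes.
print(has_quorum(1, 2))
```

Under a plain majority rule a two-node cluster can never keep quorum after losing a node, which is why the policy for partitions without quorum matters most in small clusters.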

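11. Defining a highly available web service

VIP

httpd

from

to: the resource the constraint is based on

web service

VIP

httpd

NFS

Note that haresources is not compatible with the CRM and is not read by it.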

II. Configuration

1. ha.cf

[root@snn heartbeat]# vim /etc/ha.d/ha.cf

mcast eth0 225.0.100.19 694 1 0

crm on

[root@snn heartbeat]# /usr/lib64/heartbeat/ha_propagate

Propagating HA configuration files to node datanode4.abc.com.

ha.cf 100% 10KB 10.4KB/s 00:00

authkeys 100% 694 0.7KB/s 00:00

Setting HA startup configuration on node datanode4.abc.com.
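The `mcast` directive in ha.cf takes five positional fields: device, multicast group, UDP port, TTL, and loop. To make the line used above easier to read, here is a minimal sketch that names each field. `parse_mcast` is a hypothetical helper, not part of heartbeat.

```python
def parse_mcast(line):
    """Split an ha.cf 'mcast' directive into its five named fields."""
    parts = line.split()
    if len(parts) != 6 or parts[0] != "mcast":
        raise ValueError("expected: mcast <dev> <group> <port> <ttl> <loop>")
    _, dev, group, port, ttl, loop = parts
    return {
        "dev": dev,         # interface that carries the heartbeats
        "group": group,     # multicast group address
        "port": int(port),  # UDP port
        "ttl": int(ttl),    # 1 means routers do not forward the packets
        "loop": int(loop),  # 0 disables multicast loopback
    }


print(parse_mcast("mcast eth0 225.0.100.19 694 1 0"))
```

Both nodes must use the same group and port, which is one reason for distributing ha.cf with ha_propagate rather than editing it by hand on each node.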

2. haresources is not compatible with the CRM and is not read by it, so move it out of the way

[root@snn heartbeat]# mv /etc/ha.d/haresources /root

The mv below is run on the datanode4 host

[root@datanode4 ha.d]# mv haresources /root/

[root@snn heartbeat]# service heartbeat start

logd is already running

Starting High-Availability services:

Done.

[root@snn heartbeat]# ssh datanode4 'service heartbeat start'

logd is already running

Starting High-Availability services:

Done.

3. Check the log

[root@snn heartbeat]# tail -f /var/log/messages

Jun 19 16:00:29 snn crmd: [2223]: notice: populate_cib_nodes: Node: datanode4.abc.com (uuid: 0862d824-047e-4826-9e26-21a7603f53c8)

Jun 19 16:00:30 snn crmd: [2223]: notice: populate_cib_nodes: Node: snn.abc.com (uuid: 6009ca6a-56eb-4d35-872e-3b8dc0fc9851)

Jun 19 16:00:30 snn crmd: [2223]: info: do_ha_control: Connected to Heartbeat

Jun 19 16:00:30 snn crmd: [2223]: info: do_ccm_control: CCM connection established... waiting for first callback

Jun 19 16:00:30 snn crmd: [2223]: info: do_started: Delaying start, CCM (0000000000100000) not connected

Jun 19 16:00:30 snn crmd: [2223]: info: crmd_init: Starting crmd's mainloop

Jun 19 16:00:30 snn crmd: [2223]: notice: crmd_client_status_callback: Status update: Client snn.abc.com/crmd now has status [online]

Jun 19 16:00:30 snn crmd: [2223]: notice: crmd_client_status_callback: Status update: Client snn.abc.com/crmd now has status [online]

Jun 19 16:00:30 snn crmd: [2223]: notice: crmd_client_status_callback: Status update: Client datanode4.abc.com/crmd now has status [online]

Jun 19 16:00:30 snn cib: [2219]: info: mem_handle_event: Got an event OC_EV_MS_NEW_MEMBERSHIP from ccm

Jun 19 16:00:30 snn cib: [2219]: info: mem_handle_event: instance=5, nodes=2, new=2, lost=0, n_idx=0, new_idx=0, old_idx=4

Jun 19 16:00:30 snn cib: [2219]: info: cib_ccm_msg_callback: PEER: datanode4.abc.com

Jun 19 16:00:30 snn cib: [2219]: info: cib_ccm_msg_callback: PEER: snn.abc.com

Jun 19 16:00:31 snn crmd: [2223]: info: do_started: Delaying start, CCM (0000000000100000) not connected

Jun 19 16:00:31 snn crmd: [2223]: info: mem_handle_event: Got an event OC_EV_MS_NEW_MEMBERSHIP from ccm

Jun 19 16:00:31 snn crmd: [2223]: info: mem_handle_event: instance=5, nodes=2, new=2, lost=0, n_idx=0, new_idx=0, old_idx=4

Jun 19 16:00:31 snn crmd: [2223]: info: crmd_ccm_msg_callback: Quorum (re)attained after event=NEW MEMBERSHIP (id=5)

Jun 19 16:00:31 snn crmd: [2223]: info: ccm_event_detail: NEW MEMBERSHIP: trans=5, nodes=2, new=2, lost=0 n_idx=0, new_idx=0, old_idx=4

Jun 19 16:00:31 snn crmd: [2223]: info: ccm_event_detail: #011CURRENT: datanode4.abc.com [nodeid=0, born=3]

Jun 19 16:00:31 snn crmd: [2223]: info: ccm_event_detail: #011CURRENT: snn.abc.com [nodeid=1, born=5]

Jun 19 16:00:31 snn crmd: [2223]: info: ccm_event_detail: #011NEW: datanode4.abc.com [nodeid=0, born=3]

Jun 19 16:00:31 snn crmd: [2223]: info: ccm_event_detail: #011NEW: snn.abc.com [nodeid=1, born=5]

Jun 19 16:00:31 snn crmd: [2223]: info: do_started: The local CRM is operational

Jun 19 16:00:31 snn crmd: [2223]: info: do_state_transition: State transition S_STARTING -> S_PENDING [ input=I_PENDING cause=C_CCM_CALLBACK origin=do_started ]

4. Check the cluster status

// To print the status once and exit, use crm_mon --one-shot

[root@snn heartbeat]# crm_mon

Refresh in 6s...

============

Last updated: Fri Jun 19 16:11:34 2015

Current DC: snn.abc.com (6009ca6a-56eb-4d35-872e-3b8dc0fc9851)

2 Nodes configured.

0 Resources configured.

============

Node: datanode4.abc.com (0862d824-047e-4826-9e26-21a7603f53c8): online

Node: snn.abc.com (6009ca6a-56eb-4d35-872e-3b8dc0fc9851): online
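For scripted checks, the node lines of this status output can be parsed. The sketch below assumes the `Node: <name> (<uuid>): <status>` layout shown above; other crm_mon versions print a different layout. `parse_nodes` is a hypothetical helper.

```python
import re

# Matches lines such as: Node: snn.abc.com (6009ca6a-...): online
NODE_LINE = re.compile(r"^Node:\s+(\S+)\s+\(([0-9a-f-]+)\):\s+(\S+)\s*$")


def parse_nodes(crm_mon_output):
    """Map each node name to the status reported for it."""
    status = {}
    for line in crm_mon_output.splitlines():
        match = NODE_LINE.match(line.strip())
        if match:
            name, _uuid, state = match.groups()
            status[name] = state
    return status


sample = """\
Node: datanode4.abc.com (0862d824-047e-4826-9e26-21a7603f53c8): online
Node: snn.abc.com (6009ca6a-56eb-4d35-872e-3b8dc0fc9851): online
"""
print(parse_nodes(sample))
```

On a cluster node the input would be the text printed by a one-shot run of crm_mon rather than the sample string.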

4、crm的命令工具

[root@snn heartbeat]# crm_sh

/usr/sbin/crm_sh:31: DeprecationWarning: The popen2 module is deprecated. Use the subprocess module.

from popen2 import Popen3

crm # help

Usage: crm (nodes|config|resources)

crm # nodes

crm nodes # help

Usage: nodes (status|list)

crm nodes # list

<node id="0862d824-047e-4826-9e26-21a7603f53c8" uname="datanode4.abc.com" type="normal"/>

<node id="6009ca6a-56eb-4d35-872e-3b8dc0fc9851" uname="snn.abc.com" type="normal"/>

crm nodes #

5、安装heartbeat的时候自动创建一个用户hacluster,但没有密码,需要创建

[root@snn heartbeat]# cat /etc/passwd |grep hacluster

hacluster:x:498:498:heartbeat user:/var/lib/heartbeat/cores/hacluster:/sbin/nologin

[root@snn heartbeat]# passwd hacluster

更改用户 hacluster 的密码 。

新的 密码:

无效的密码: WAY 过短

无效的密码: 过于简单

重新输入新的 密码:

passwd: 所有的身份验证令牌已经成功更新。

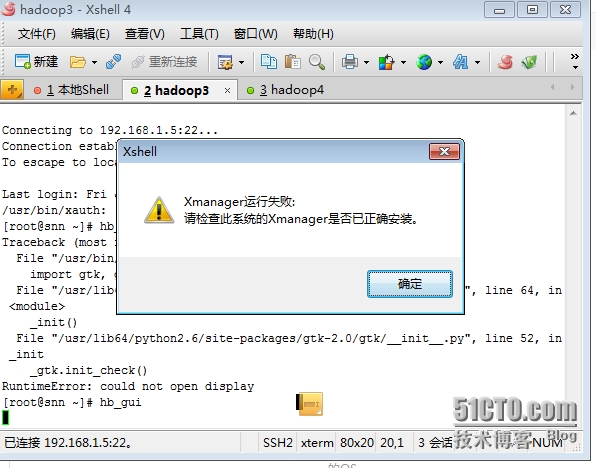

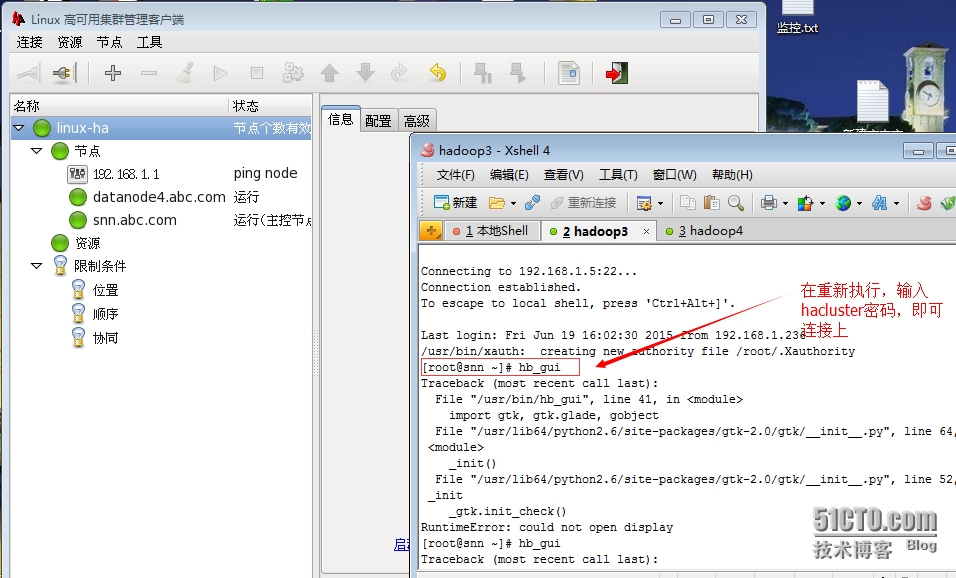

6、直接运行hb_gui

[root@snn ~]# hb_gui

Traceback (most recent call last):

File "/usr/bin/hb_gui", line 41, in <module>

import gtk, gtk.glade, gobject

File "/usr/lib64/python2.6/site-packages/gtk-2.0/gtk/__init__.py", line 64, in <module>

_init()

File "/usr/lib64/python2.6/site-packages/gtk-2.0/gtk/__init__.py", line 52, in _init

_gtk.init_check()

RuntimeError: could not open display

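The command fails with the error shown above.

Installing Xmanager (an X server) on the client machine fixes this.

Then run the command again.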

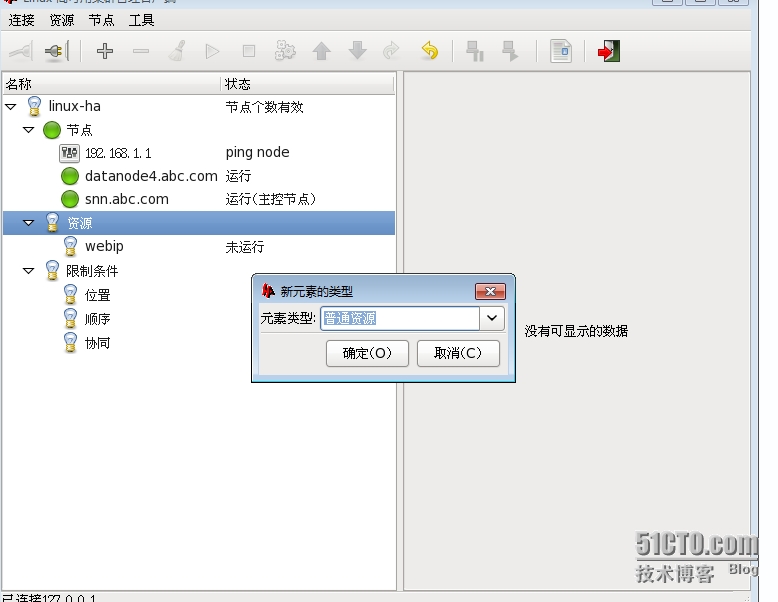

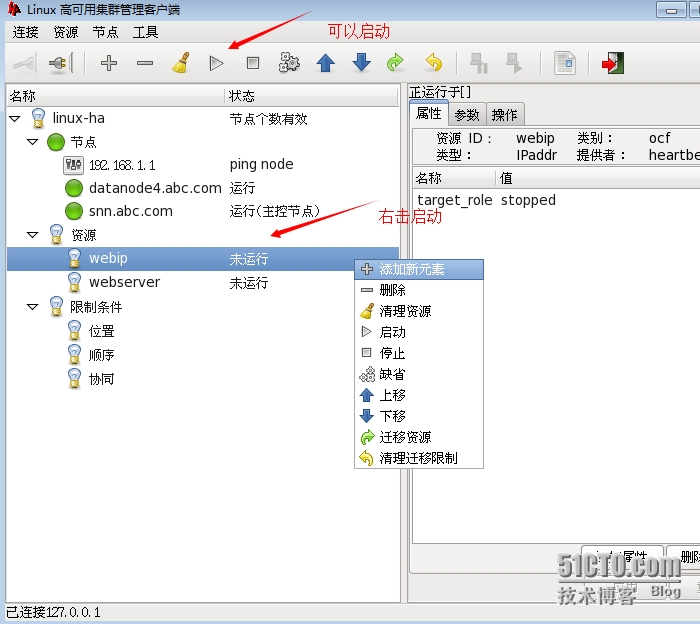

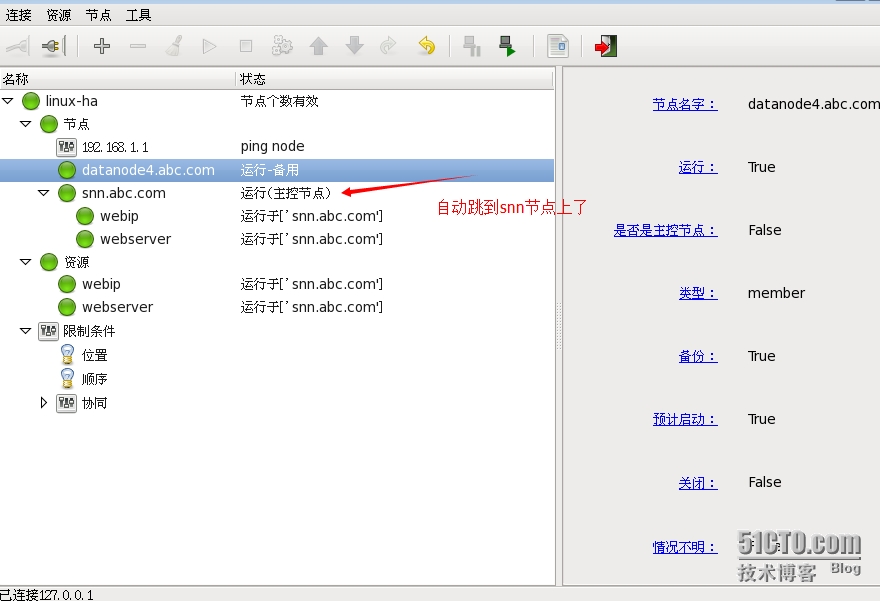
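hb_gui is a GTK program, so `could not open display` means it found no X server to draw on. A quick check before launching it is whether DISPLAY is set at all. The sketch below only tests that the variable exists, not that the X server is reachable, and `display_problem` is a hypothetical helper.

```python
import os


def display_problem(environ):
    """Return a hint if no X display is configured, otherwise None."""
    if not environ.get("DISPLAY"):
        return ("DISPLAY is not set: log in with X forwarding (ssh -X) "
                "or point DISPLAY at a running X server")
    return None


print(display_problem(os.environ))
```

An X server on the client, such as the Xmanager mentioned above, is what gives a remote hb_gui somewhere to draw its window.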

三、ha_gui定义

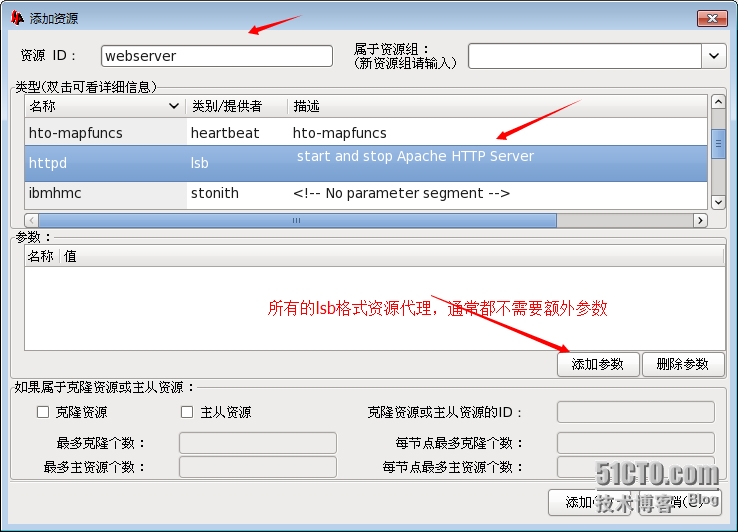

1、定义主资源名称

2、继继定义主资源

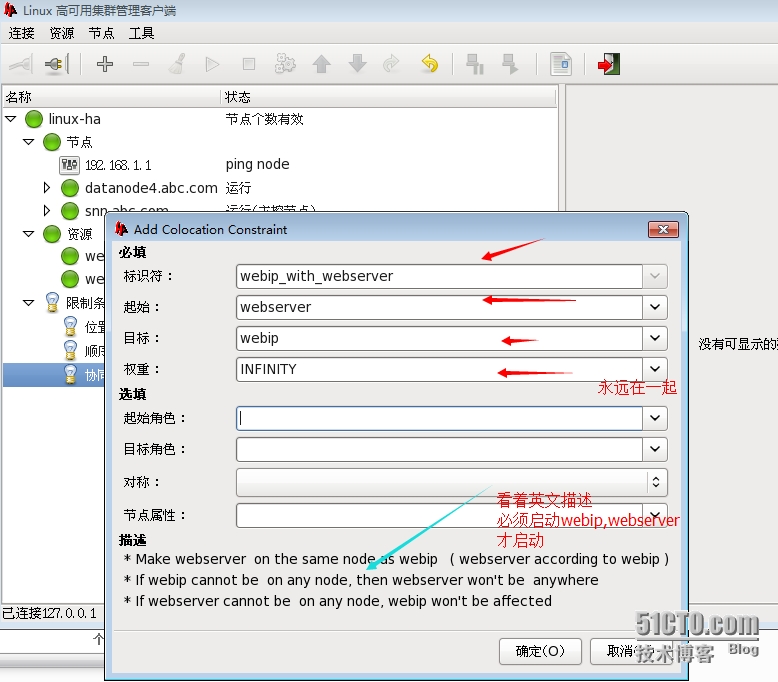

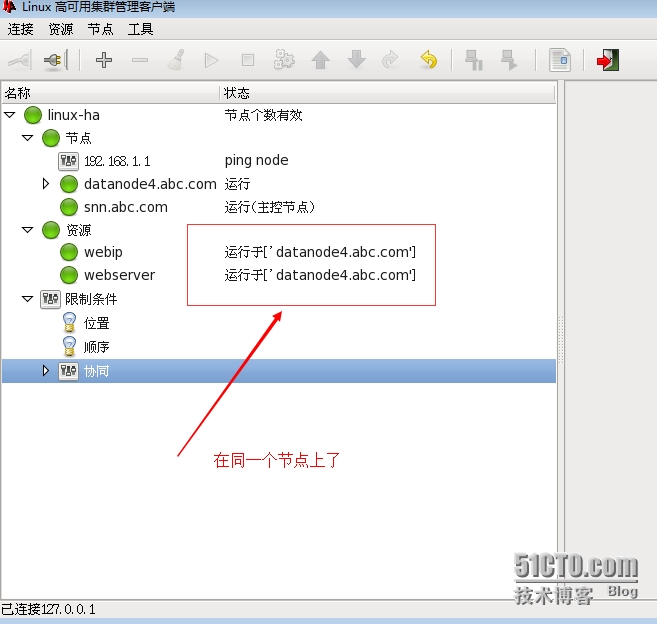

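3. Make the two resources run on the same node. There are two ways: (1) define a colocation constraint, (2) define a resource group

(1) Define a colocation constraint

4. Put the snn node into standby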

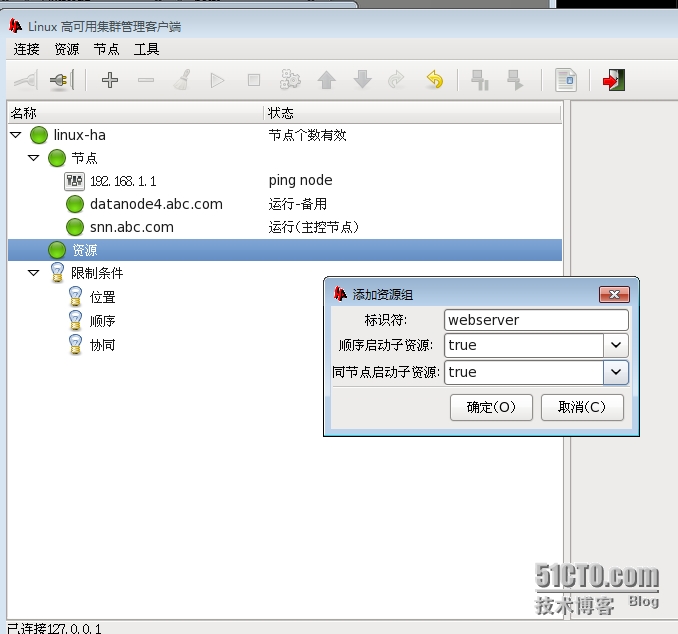

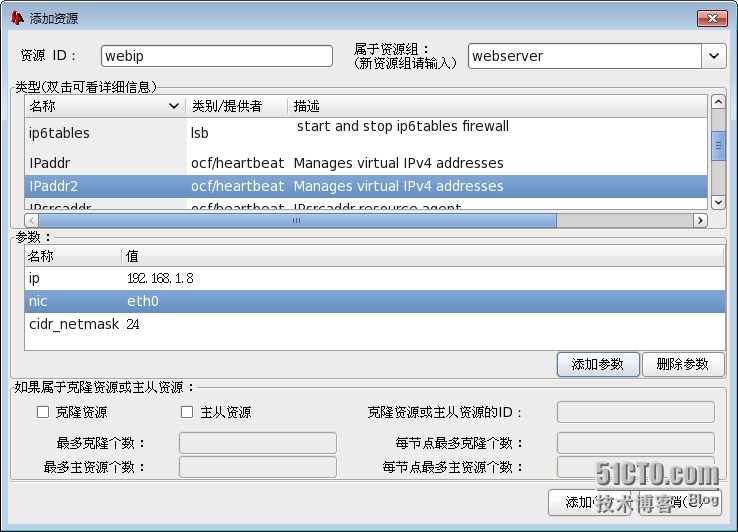

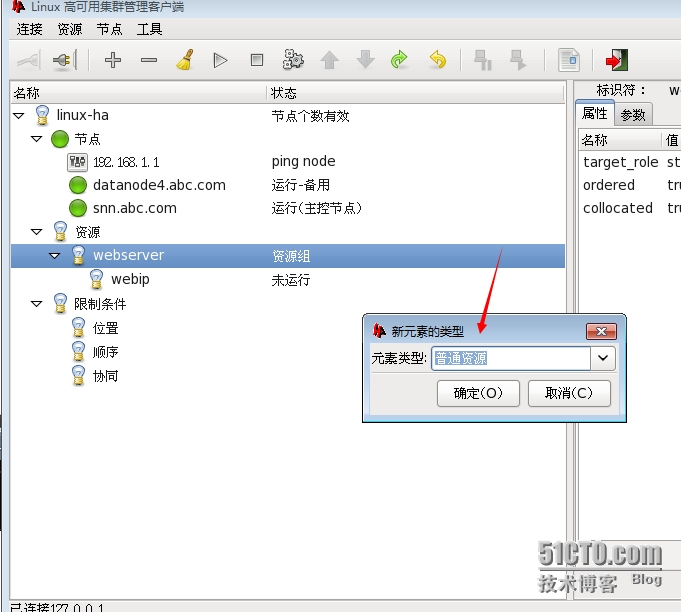

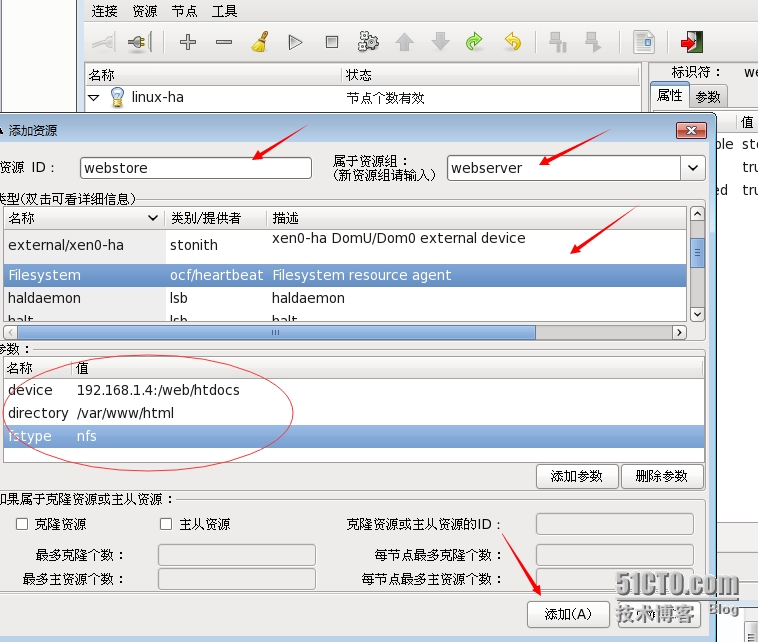

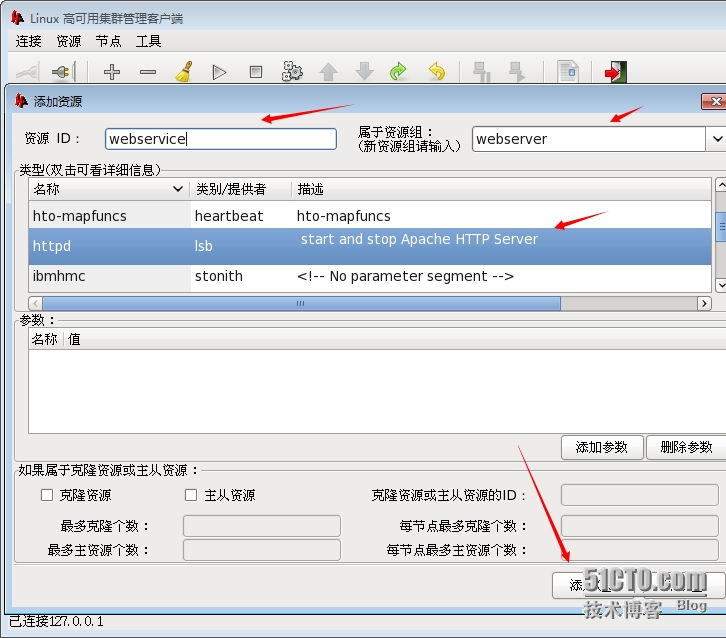

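IV. Using a resource group

web server:

vip: 192.168.1.8

httpd

nfs: 192.168.1.4:/web/htdocs, mounted on /var/www/html

1. Delete the original primitive resources

2. Define the group resource

3. httpd fails to start; check the log

According to the log, NFS was mounted correctly on the datanode4 host, but httpd started and then stopped again, which is odd.

4. On datanode4, try starting httpd on its own; it fails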

[root@datanode4 ~]# /etc/init.d/httpd restart

Stopping httpd: [FAILED]

Starting httpd: Syntax error on line 292 of /etc/httpd/conf/httpd.conf:

5. Check the SELinux status; this turns out to be where the problem lies

[root@datanode4 conf]# getenforce

Enforcing

[root@datanode4 conf]# setenforce 0

[root@datanode4 conf]# getenforce

Permissive

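setenforce 0 only lasts until the next reboot. To make the change permanent, set the configuration file to disabled: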

[root@datanode4 conf]# vim /etc/selinux/config

SELINUX=disabled
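`setenforce` changes the running mode, while /etc/selinux/config decides the mode at the next boot, so the two can disagree. A minimal sketch for reading the configured value follows; `configured_selinux_mode` is a hypothetical helper, and the sample text is an assumed excerpt of the file, not a copy of it.

```python
def configured_selinux_mode(config_text):
    """Return the SELINUX= value from /etc/selinux/config text, or None."""
    for raw in config_text.splitlines():
        line = raw.strip()
        # Skip comments and anything that is not a key=value pair.
        if line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        if key.strip() == "SELINUX":
            return value.strip()
    return None


sample = """\
# This file controls the state of SELinux on the system.
SELINUX=disabled
SELINUXTYPE=targeted
"""
print(configured_selinux_mode(sample))
```

Comparing this value with the output of getenforce on both cluster nodes shows whether a node will return to Enforcing after a reboot.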

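6. Start httpd on its own again

[root@datanode4 conf]# /etc/init.d/httpd start

Starting httpd: [  OK  ]

[root@datanode4 conf]# /etc/init.d/httpd stop

Stopping httpd:

7. Back on snn, run hb_gui again. The resource used to be called webservice. The name is arbitrary, so this makes no difference. Since I had deleted it, I created a new resource and named it httpd so that it is easy to recognize.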

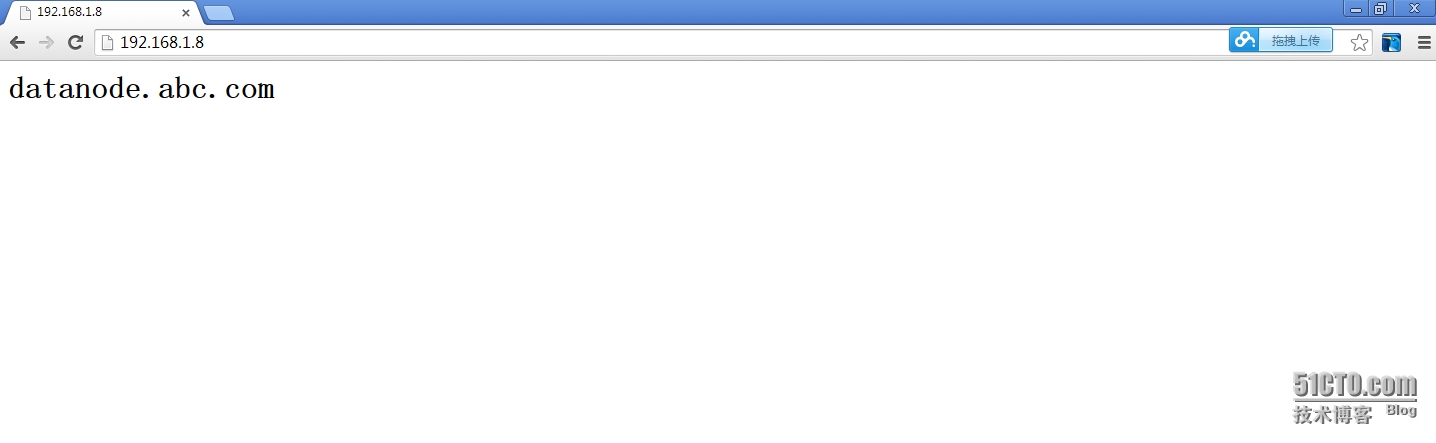

V. Verification

1. Contents of index.html on the NFS export

[root@datanode ~]# cat /web/htdocs/index.html

<h1>datanode.abc.com</h1>

[root@datanode ~]# ifconfig eth0

eth0 Link encap:Ethernet HWaddr 00:0C:29:50:AC:6E

inet addr:192.168.1.4 Bcast:192.168.1.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fe50:ac6e/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:83505 errors:0 dropped:0 overruns:0 frame:0

TX packets:2037 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:7403212 (7.0 MiB) TX bytes:228350 (222.9 KiB)

2. The VIP on the datanode4 host. Plain ifconfig does not show it, because the address is not configured as an interface alias, so use ip addr show instead

[root@datanode4 html]# ifconfig eth0

eth0 Link encap:Ethernet HWaddr 00:0C:29:E1:2F:66

inet addr:192.168.1.6 Bcast:192.168.1.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fee1:2f66/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:147365 errors:0 dropped:0 overruns:0 frame:0

TX packets:66651 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:20284443 (19.3 MiB) TX bytes:14571080 (13.8 MiB)

[root@datanode4 html]# ip addr show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:e1:2f:66 brd ff:ff:ff:ff:ff:ff

inet 192.168.1.6/24 brd 192.168.1.255 scope global eth0

inet 192.168.1.8/24 brd 192.168.1.255 scope global secondary eth0 // the VIP

inet6 fe80::20c:29ff:fee1:2f66/64 scope link

valid_lft forever preferred_lft forever

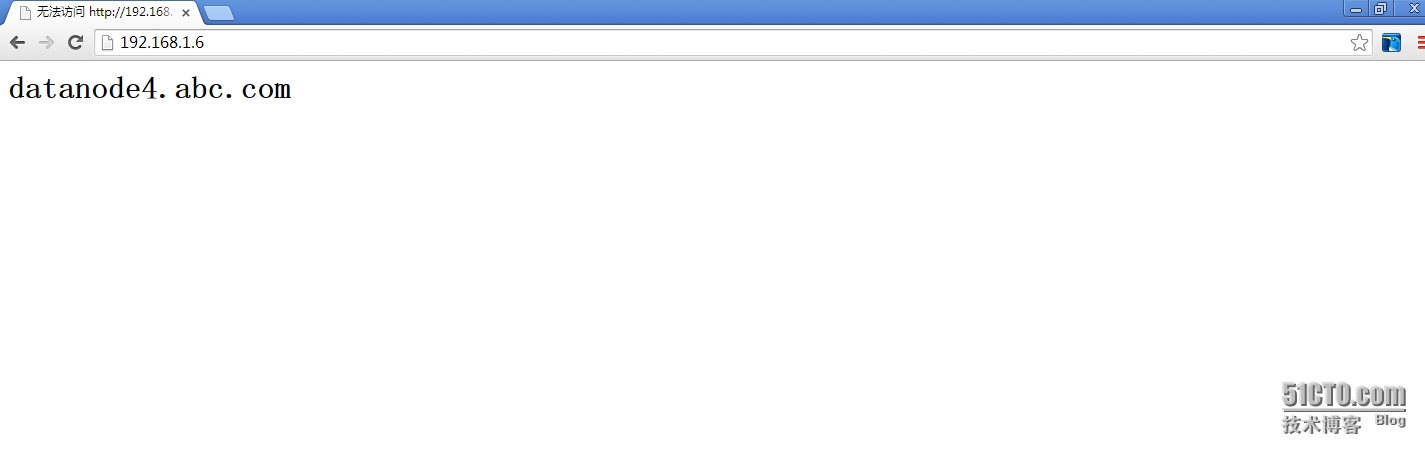
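To check from a script which node currently holds the VIP, the `secondary` flag in the `ip addr show` output can be used. The sketch below assumes the VIP shares a subnet with the node's own address, as it does here, so that the kernel marks it as secondary. `secondary_addresses` is a hypothetical helper.

```python
import re

# Matches IPv4 lines only: "inet6" does not match because "inet" must be followed by whitespace.
INET_LINE = re.compile(r"^\s*inet\s+(\d+\.\d+\.\d+\.\d+)/(\d+)\b(.*)$")


def secondary_addresses(ip_addr_output):
    """Return the IPv4 addresses that `ip addr show` marks as secondary."""
    found = []
    for line in ip_addr_output.splitlines():
        match = INET_LINE.match(line)
        if match and "secondary" in match.group(3).split():
            found.append(match.group(1))
    return found


sample = """\
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:0c:29:e1:2f:66 brd ff:ff:ff:ff:ff:ff
    inet 192.168.1.6/24 brd 192.168.1.255 scope global eth0
    inet 192.168.1.8/24 brd 192.168.1.255 scope global secondary eth0
    inet6 fe80::20c:29ff:fee1:2f66/64 scope link
"""
print(secondary_addresses(sample))
```

Running the same check on both nodes before and after a failover shows the address moving from one node to the other.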

3、在浏览器输入,注意这里输入的是vip地址

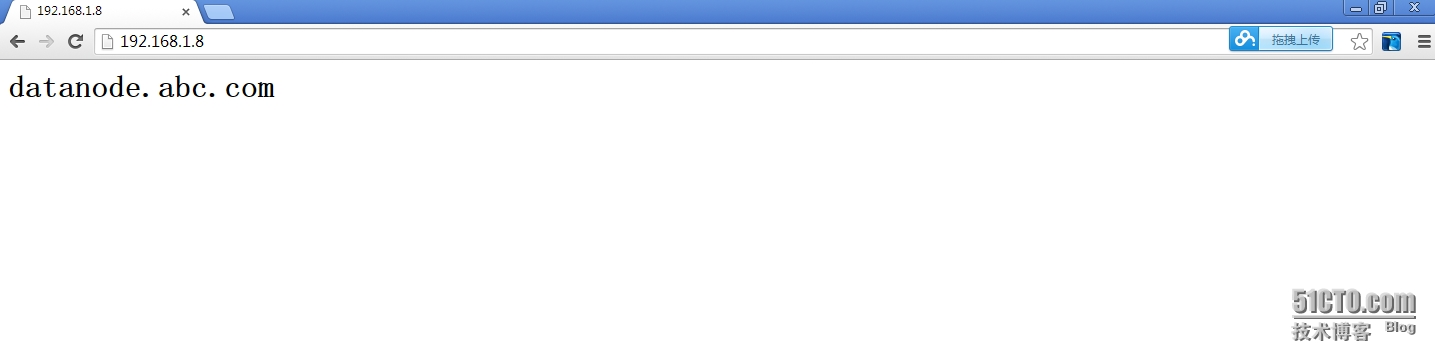

4、如果datanode4成为备用

到snn主机上看,转移成功

[root@snn ~]# ip addr show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:b1:89:48 brd ff:ff:ff:ff:ff:ff

inet 192.168.1.5/24 brd 192.168.1.255 scope global eth0

inet 192.168.1.8/24 brd 192.168.1.255 scope global secondary eth0

inet6 fe80::20c:29ff:feb1:8948/64 scope link

valid_lft forever preferred_lft forever

Refresh the browser: the page content is unchanged.
