Android Multimedia Framework Summary (23): MediaCodec Follow-up and Introducing MediaMuxer (with Example)

Posted 码农突围


Please respect the author's work and credit the source when reposting. This article is by 逆流的鱼yuiop; original link: http://blog.csdn.net/hejjunlin/article/details/53729575

Foreword: The previous chapters analyzed the MediaCodec source code, and a reader asked whether MediaCodec does hardware or software decoding. Here is today's agenda:

- Is MediaCodec hardware or software decoding?

- A first look at MediaMuxer

- Clipping a video with MediaMuxer and MediaExtractor

- Screenshots

- Layout

- Logic

- Log output

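Is MediaCodec hardware or software decoding?

MediaCodec calls whichever decoders are registered in the system. Hardware vendors register their own hardware decoders, and using one of those is hardware decoding; if a vendor registers a software decoder, it is software decoding.

MediaCodec is not the actual codec; the real codecs sit behind OpenMAX. To make sure you get hardware decoding, use the framework interface that enumerates all available decoders (MediaCodecList): each encoding may have several decoders, and you only need to tell the software ones from the hardware ones.

MediaCodec mediaCodec = MediaCodec.createDecoderByType("video/avc");

My application receives H.264-encoded data, so I chose video/avc. Let's look at the MIME types listed in the documentation of MediaCodec.createDecoderByType():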

> /**

> * Instantiate a decoder supporting input data of the given mime type.

> *

> * The following is a partial list of defined mime types and their semantics:

> * <ul>

> * <li>"video/x-vnd.on2.vp8" - VP8 video (i.e. video in .webm)

> * <li>"video/x-vnd.on2.vp9" - VP9 video (i.e. video in .webm)

> * <li>"video/avc" - H.264/AVC video

> * <li>"video/mp4v-es" - MPEG4 video

> * <li>"video/3gpp" - H.263 video

> * <li>"audio/3gpp" - AMR narrowband audio

> * <li>"audio/amr-wb" - AMR wideband audio

> * <li>"audio/mpeg" - MPEG1/2 audio layer III

> * <li>"audio/mp4a-latm" - AAC audio (note, this is raw AAC packets, not packaged in LATM!)

> * <li>"audio/vorbis" - vorbis audio

> * <li>"audio/g711-alaw" - G.711 alaw audio

> * <li>"audio/g711-mlaw" - G.711 ulaw audio

> * </ul>

> *

> * @param type The mime type of the input data.

> */

> public static MediaCodec createDecoderByType(String type) {

> return new MediaCodec(type, true /* nameIsType */, false /* encoder */);

> }

As you can see, the "video/avc" type I chose selects H.264/AVC decoding, but that alone does not prove that a hardware decoder is being used.

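First, let's look at how decoders are named on Android. Software decoders usually have names starting with OMX.google., while hardware decoders usually start with OMX.[hardware_vendor]; TI's decoders, for example, start with OMX.TI. Some decoders do not follow this convention and do not start with OMX. at all; those are also treated as software decoders.

The rule is implemented in frameworks/av/media/libstagefright/OMXCodec.cpp: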

static bool IsSoftwareCodec(const char *componentName) {

if (!strncmp("OMX.google.", componentName, 11)) {

return true;

}

if (!strncmp("OMX.", componentName, 4)) {

return false;

}

return true;

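}

In fact, MediaCodec calls the decoders registered in the system. Many decoders may exist in the system, but which ones applications can use is determined by a configuration file, /system/etc/media_codecs.xml. This file is usually supplied by the hardware or system vendor when the system image is built, and typically lives under device/[company]/[codename] in the source tree, for example device/samsung/tuna/media_codecs.xml. It declares which codecs are available and which media types each one handles. Every codec the system provides, software or hardware, has to be listed in it.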

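In other words, if the system actually contains a codec that is not declared in this file, applications cannot use it.

When several codecs in this file handle the same media type, all of them are kept, and the system picks the first matching one unless software or hardware decoding is explicitly requested. However, AwesomePlayer, which serves the upper layers from Android's framework layer, does not set that parameter when it handles audio and video. So although the lower layers support the choice, a Java program using MediaPlayer has to accept the default codec selection.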
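The two rules described above, the name-based software/hardware test and the first-match selection, can be sketched in plain Java. This is only an illustration: `CodecPicker`, `Preference`, and `pick` are hypothetical names rather than framework APIs, and the decoder names in `main` are sample values standing in for whatever a device's media_codecs.xml declares.

```java
import java.util.Arrays;
import java.util.List;

/** Illustrative only: mirrors the naming rule from OMXCodec.cpp plus a first-match pick. */
final class CodecPicker {
    /** Hypothetical preference; DEFAULT means "take the first match". */
    enum Preference { DEFAULT, SOFTWARE_ONLY, HARDWARE_ONLY }

    /**
     * Same three-way rule as IsSoftwareCodec(): "OMX.google." is software,
     * any other "OMX." prefix is hardware, everything else counts as software.
     */
    static boolean isSoftwareCodec(String componentName) {
        if (componentName.startsWith("OMX.google.")) {
            return true;
        }
        if (componentName.startsWith("OMX.")) {
            return false;
        }
        return true;
    }

    /**
     * Walks the candidates in configuration order and returns the first one
     * that satisfies the preference, or null if none does.
     */
    static String pick(List<String> candidatesInConfigOrder, Preference pref) {
        for (String name : candidatesInConfigOrder) {
            boolean software = isSoftwareCodec(name);
            if (pref == Preference.DEFAULT
                    || (pref == Preference.SOFTWARE_ONLY && software)
                    || (pref == Preference.HARDWARE_ONLY && !software)) {
                return name;
            }
        }
        return null;
    }

    public static void main(String[] args) {
        // Sample H.264 decoder names (assumed), in the order a config file might list them.
        List<String> avcDecoders = Arrays.asList(
                "OMX.TI.DUCATI1.VIDEO.DECODER", "OMX.google.h264.decoder");
        System.out.println(pick(avcDecoders, Preference.DEFAULT));
        System.out.println(pick(avcDecoders, Preference.SOFTWARE_ONLY));
    }
}
```

With no preference the hardware entry wins simply because it is listed first, which is the default behavior a MediaPlayer-based app ends up with.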

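Android's command-line tool /system/bin/stagefright, on the other hand, can choose between software and hardware decoding through a parameter when playing audio files, but the tool only supports audio playback, not video.

Generally, if the system has a hardware decoder for a media type, the system developers will declare it in media_codecs.xml, so in most cases an available hardware decoder is the one that gets used. In the rare case that a hardware decoder exists but is not declared, my guess is that it still has bugs and is not ready for release, so it is left disabled.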

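A first look at MediaMuxer

Today's main topic is MediaMuxer. Among Android's multimedia classes, MediaMuxer mixes audio and video into a single media file. Its drawbacks are that it currently supports only one audio track and one video track, and that MP4 is the only output format.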

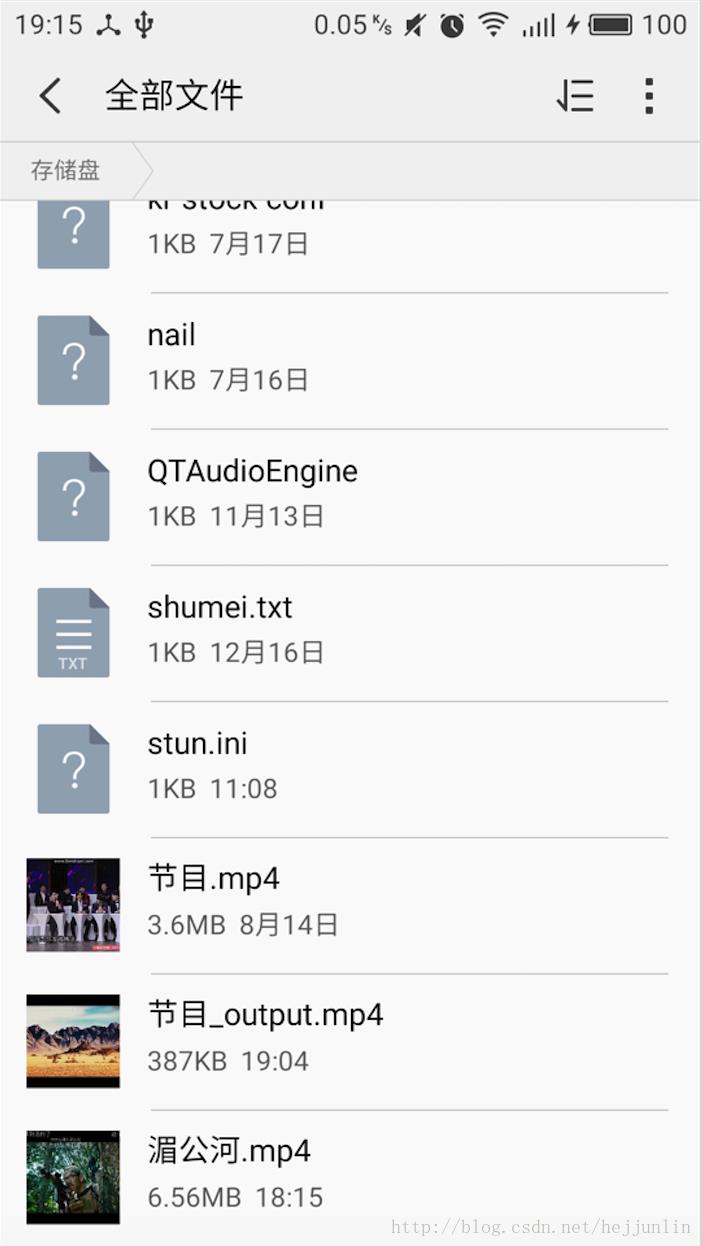

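Let's get to know MediaMuxer through an example, which also prepares the ground for later analysis. The example clips audio and video: it takes an ordinary audio/video file and cuts out one segment. My phone has a file named 节目.mp4, and I want to cut out its best part, the video from second 2 to second 12. The screenshots below show the result.

Screenshots:

The clip start point is entered by the user rather than hard-coded, and the clip duration can be set the same way.

节目_output.mp4 is the clipped audio/video file.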
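The two inputs are entered in seconds, while the extractor timestamps used later are in microseconds. Here is a minimal sketch of that conversion and of the range check, assuming a clip duration of 0 means "until the end of the file". `ClipWindow` and its methods are hypothetical helper names, and 125266666 is the video duration reported in the log further down.

```java
import java.util.concurrent.TimeUnit;

/** Illustrative only: a clip window expressed in microseconds. */
final class ClipWindow {
    final long startUs;
    final long durationUs;

    private ClipWindow(long startUs, long durationUs) {
        this.startUs = startUs;
        this.durationUs = durationUs;
    }

    /**
     * Converts user input in seconds to microseconds and rejects windows that
     * do not fit inside the source. A duration of 0 is taken to mean "no end limit".
     */
    static ClipWindow fromSeconds(long startSec, long durationSec, long sourceDurationUs) {
        if (startSec < 0 || durationSec < 0) {
            throw new IllegalArgumentException("negative clip values");
        }
        long startUs = TimeUnit.SECONDS.toMicros(startSec);
        long durationUs = TimeUnit.SECONDS.toMicros(durationSec);
        if (startUs >= sourceDurationUs) {
            throw new IllegalArgumentException("clip start is past the end of the source");
        }
        if (durationUs != 0 && startUs + durationUs >= sourceDurationUs) {
            throw new IllegalArgumentException("clip runs past the end of the source");
        }
        return new ClipWindow(startUs, durationUs);
    }

    /** Timestamp at which copying should stop; only meaningful when durationUs != 0. */
    long endUs() {
        return startUs + durationUs;
    }

    public static void main(String[] args) {
        // Second 2 to second 12 of a source that lasts 125266666 microseconds.
        ClipWindow window = ClipWindow.fromSeconds(2, 10, 125_266_666L);
        System.out.println(window.startUs + " .. " + window.endUs());
    }
}
```

Doing the multiplication in `long` avoids overflow for long sources, and failing early keeps an invalid window from producing an empty output file.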

Layout:

<LinearLayout xmlns:android="http://schemas.android.com/apk/res/android"

android:id="@+id/container"

android:layout_width="match_parent"

android:layout_height="match_parent"

android:orientation="vertical">

<LinearLayout

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:padding="10dp"

android:orientation="horizontal">

<TextView

android:layout_width="wrap_content"

android:layout_height="wrap_content"

android:textSize="18sp"

android:text="Clip start point (second to start from):"/>

<EditText

android:id="@+id/et_cutpoint"

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:background="@null"

android:textSize="18sp"

android:layout_marginLeft="5dp"

android:hint="2"/>

</LinearLayout>

<LinearLayout

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:padding="10dp"

android:orientation="horizontal">

<TextView

android:layout_width="wrap_content"

android:layout_height="wrap_content"

android:textSize="18sp"

android:text="Clip duration (seconds to keep):"/>

<EditText

android:id="@+id/et_cutduration"

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:background="@null"

android:textSize="18sp"

android:layout_marginLeft="5dp"

android:hint="10"/>

</LinearLayout>

<Button

android:layout_width="wrap_content"

android:layout_height="wrap_content"

android:id="@+id/button"

android:padding="10dp"

android:background="@drawable/selector_green_bg"

android:layout_gravity="center"

android:textColor="@color/white"

android:text="Clip video" />

</LinearLayout>

Logic:

package com.hejunlin.videoclip;

import android.media.MediaCodec;

import android.media.MediaExtractor;

import android.media.MediaFormat;

import android.media.MediaMuxer;

import android.util.Log;

import java.nio.ByteBuffer;

/**

* Created by 逆流的鱼yuiop on 16/12/18.

* blog : http://blog.csdn.net/hejjunlin

*/

public class VideoClip {

private final static String TAG = "VideoClip";

private MediaExtractor mMediaExtractor;

private MediaFormat mMediaFormat;

private MediaMuxer mMediaMuxer;

private String mime = null;

public boolean clipVideo(String url, long clipPoint, long clipDuration) {

int videoTrackIndex = -1;

int audioTrackIndex = -1;

int videoMaxInputSize = 0;

int audioMaxInputSize = 0;

int sourceVTrack = 0;

int sourceATrack = 0;

long videoDuration, audioDuration;

Log.d(TAG, ">> url : " + url);

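// Create the extractor (demuxer)

mMediaExtractor = new MediaExtractor();

try {

// Set the source file path

mMediaExtractor.setDataSource(url);

// Create the muxer; the output is written next to the source as <name>_output.mp4

mMediaMuxer = new MediaMuxer(url.substring(0, url.lastIndexOf(".")) + "_output.mp4", MediaMuxer.OutputFormat.MUXER_OUTPUT_MPEG_4);

} catch (Exception e) {

Log.e(TAG, "error path" + e.getMessage());

// Nothing can be clipped without a readable source and a writable output

return false;

}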

// Inspect the format of each track

for (int i = 0; i < mMediaExtractor.getTrackCount(); i++) {

try {

mMediaFormat = mMediaExtractor.getTrackFormat(i);

mime = mMediaFormat.getString(MediaFormat.KEY_MIME);

if (mime.startsWith("video/")) {

sourceVTrack = i;

int width = mMediaFormat.getInteger(MediaFormat.KEY_WIDTH);

int height = mMediaFormat.getInteger(MediaFormat.KEY_HEIGHT);

videoMaxInputSize = mMediaFormat.getInteger(MediaFormat.KEY_MAX_INPUT_SIZE);

videoDuration = mMediaFormat.getLong(MediaFormat.KEY_DURATION);

// Validate the clip start point and the clip duration

if (clipPoint >= videoDuration) {

Log.e(TAG, "clip point is error!");

return false;

}

if ((clipDuration != 0) && ((clipDuration + clipPoint) >= videoDuration)) {

Log.e(TAG, "clip duration is error!");

return false;

}

Log.d(TAG, "width and height is " + width + " " + height

+ ";maxInputSize is " + videoMaxInputSize

+ ";duration is " + videoDuration

);

// Add the video track to the muxer

videoTrackIndex = mMediaMuxer.addTrack(mMediaFormat);

} else if (mime.startsWith("audio/")) {

sourceATrack = i;

int sampleRate = mMediaFormat.getInteger(MediaFormat.KEY_SAMPLE_RATE);

int channelCount = mMediaFormat.getInteger(MediaFormat.KEY_CHANNEL_COUNT);

audioMaxInputSize = mMediaFormat.getInteger(MediaFormat.KEY_MAX_INPUT_SIZE);

audioDuration = mMediaFormat.getLong(MediaFormat.KEY_DURATION);

Log.d(TAG, "sampleRate is " + sampleRate

+ ";channelCount is " + channelCount

+ ";audioMaxInputSize is " + audioMaxInputSize

+ ";audioDuration is " + audioDuration

);

// Add the audio track to the muxer

audioTrackIndex = mMediaMuxer.addTrack(mMediaFormat);

}

Log.d(TAG, "file mime is " + mime);

} catch (Exception e) {

Log.e(TAG, " read error " + e.getMessage());

}

}

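// Allocate one buffer large enough for samples from either track

ByteBuffer inputBuffer = ByteBuffer.allocate(Math.max(videoMaxInputSize, audioMaxInputSize));

// Per the official documentation, MediaMuxer.start() must be called after addTrack()

mMediaMuxer.start();

// Video part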

mMediaExtractor.selectTrack(sourceVTrack);

MediaCodec.BufferInfo videoInfo = new MediaCodec.BufferInfo();

videoInfo.presentationTimeUs = 0;

long videoSampleTime;

// Measure the time interval between adjacent frames of the source video. (1)

{

mMediaExtractor.readSampleData(inputBuffer, 0);

//skip first I frame

if (mMediaExtractor.getSampleFlags() == MediaExtractor.SAMPLE_FLAG_SYNC)

mMediaExtractor.advance();

mMediaExtractor.readSampleData(inputBuffer, 0);

long firstVideoPTS = mMediaExtractor.getSampleTime();

mMediaExtractor.advance();

mMediaExtractor.readSampleData(inputBuffer, 0);

long SecondVideoPTS = mMediaExtractor.getSampleTime();

videoSampleTime = Math.abs(SecondVideoPTS - firstVideoPTS);

Log.d(TAG, "videoSampleTime is " + videoSampleTime);

}

// Seek to the clip start point (snaps to the previous sync frame)

mMediaExtractor.seekTo(clipPoint, MediaExtractor.SEEK_TO_PREVIOUS_SYNC);

while (true) {

int sampleSize = mMediaExtractor.readSampleData(inputBuffer, 0);

if (sampleSize < 0) {

// Be sure to unselect this track here, so that the other track can be selected on its own afterwards

mMediaExtractor.unselectTrack(sourceVTrack);

break;

}

int trackIndex = mMediaExtractor.getSampleTrackIndex();

// Get the presentation timestamp

long presentationTimeUs = mMediaExtractor.getSampleTime();

// Get the sample flags; they only tell whether this is a sync (I) frame

int sampleFlag = mMediaExtractor.getSampleFlags();

Log.d(TAG, "trackIndex is " + trackIndex

+ ";presentationTimeUs is " + presentationTimeUs

+ ";sampleFlag is " + sampleFlag

+ ";sampleSize is " + sampleSize);

// Stop once the end of the clip has been reached

if ((clipDuration != 0) && (presentationTimeUs > (clipPoint + clipDuration))) {

mMediaExtractor.unselectTrack(sourceVTrack);

break;

}

mMediaExtractor.advance();

videoInfo.offset = 0;

videoInfo.size = sampleSize;

videoInfo.flags = sampleFlag;

mMediaMuxer.writeSampleData(videoTrackIndex, inputBuffer, videoInfo);

videoInfo.presentationTimeUs += videoSampleTime;//presentationTimeUs;

}

// Audio part

mMediaExtractor.selectTrack(sourceATrack);

MediaCodec.BufferInfo audioInfo = new MediaCodec.BufferInfo();

audioInfo.presentationTimeUs = 0;

long audioSampleTime;

// Measure the duration of one audio frame

{

mMediaExtractor.readSampleData(inputBuffer, 0);

//skip first sample

if (mMediaExtractor.getSampleTime() == 0)

mMediaExtractor.advance();

mMediaExtractor.readSampleData(inputBuffer, 0);

long firstAudioPTS = mMediaExtractor.getSampleTime();

mMediaExtractor.advance();

mMediaExtractor.readSampleData(inputBuffer, 0);

long SecondAudioPTS = mMediaExtractor.getSampleTime();

audioSampleTime = Math.abs(SecondAudioPTS - firstAudioPTS);

Log.d(TAG, "AudioSampleTime is " + audioSampleTime);

}

mMediaExtractor.seekTo(clipPoint, MediaExtractor.SEEK_TO_CLOSEST_SYNC);

while (true) {

int sampleSize = mMediaExtractor.readSampleData(inputBuffer, 0);

if (sampleSize < 0) {

mMediaExtractor.unselectTrack(sourceATrack);

break;

}

int trackIndex = mMediaExtractor.getSampleTrackIndex();

long presentationTimeUs = mMediaExtractor.getSampleTime();

Log.d(TAG, "trackIndex is " + trackIndex

+ ";presentationTimeUs is " + presentationTimeUs);

if ((clipDuration != 0) && (presentationTimeUs > (clipPoint + clipDuration))) {

mMediaExtractor.unselectTrack(sourceATrack);

break;

}

mMediaExtractor.advance();

audioInfo.offset = 0;

audioInfo.size = sampleSize;

mMediaMuxer.writeSampleData(audioTrackIndex, inputBuffer, audioInfo);

audioInfo.presentationTimeUs += audioSampleTime;//presentationTimeUs;

}

// Release MediaMuxer and MediaExtractor once everything has been written

mMediaMuxer.stop();

mMediaMuxer.release();

mMediaExtractor.release();

mMediaExtractor = null;

return true;

}

}

ClipActivity

package com.hejunlin.videoclip;

import android.annotation.TargetApi;

import android.os.Build;

import android.os.Bundle;

import android.os.Environment;

import android.support.v7.app.AppCompatActivity;

import android.view.View;

import android.widget.Button;

import android.widget.EditText;

/**

* Created by 逆流的鱼yuiop on 16/12/18.

* blog : http://blog.csdn.net/hejjunlin

*/

public class ClipActivity extends AppCompatActivity implements View.OnClickListener {

private Button mButton;

private EditText mCutDuration;

private EditText mCutPoint;

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

setContentView(R.layout.activity_main1);

mButton = (Button) findViewById(R.id.button);

mCutDuration = (EditText) findViewById(R.id.et_cutduration);

mCutPoint = (EditText)findViewById(R.id.et_cutpoint);

mButton.setOnClickListener(this);

}

@TargetApi(Build.VERSION_CODES.LOLLIPOP)

@Override

public void onClick(View v) {

new VideoClip().clipVideo(

Environment.getExternalStorageDirectory() + "/" + "节目.mp4",

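// The fields hold seconds; clipVideo() expects microseconds, computed as long to avoid int overflow

Long.parseLong(mCutPoint.getText().toString()) * 1000 * 1000,

Long.parseLong(mCutDuration.getText().toString()) * 1000 * 1000);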

}

}

Log output

12-18 19:04:49.212 22409-22409/com.hejunlin.videoclip

D/VideoClip: >> url : /storage/emulated/0/节目.mp4

12-18 19:04:49.364 22409-22409/com.hejunlin.videoclip

D/VideoClip: width and height is 480 272;maxInputSize is 25326;duration is 125266666

12-18 19:04:49.365 22409-22409/com.hejunlin.videoclip

D/VideoClip: file mime is video/avc

12-18 19:04:49.366 22409-22409/com.hejunlin.videoclip

D/VideoClip: sampleRate is 24000;channelCount is 2;audioMaxInputSize is 348;audioDuration is 125440000

12-18 19:04:49.366 22409-22409/com.hejunlin.videoclip

D/VideoClip: file mime is audio/mp4a-latm

12-18 19:04:49.370 22409-22409/com.hejunlin.videoclip

D/VideoClip: videoSampleTime is 66667

12-18 19:04:49.372 22409-22409/com.hejunlin.videoclip

D/VideoClip: trackIndex is 0;presentationTimeUs is 0;sampleFlag is 1;sampleSize is 19099

12-18 19:04:49.373 22409-22409/com.hejunlin.videoclip

D/VideoClip: trackIndex is 0;presentationTimeUs is 66666;sampleFlag is 0;sampleSize is 451

12-18 19:04:49.374 22409-22409/com.hejunlin.videoclip

D/VideoClip: trackIndex is 0;presentationTimeUs is 133333;sampleFlag is 0;sampleSize is 521

12-18 19:04:49.374 22409-22409/com.hejunlin.videoclip

D/VideoClip: trackIndex is 0;presentationTimeUs is 200000;sampleFlag is 0;sampleSize is 738

12-18 19:04:49.375 22409-22409/com.hejunlin.videoclip

D/VideoClip: trackIndex is 0;presentationTimeUs is 266666;sampleFlag is 0;sampleSize is 628

12-18 19:04:49.376 22409-22409/com.hejunlin.videoclip

D/VideoClip: trackIndex is 0;presentationTimeUs is 333333;sampleFlag is 0;sampleSize is 267

12-18 19:04:49.376 22409-22409/com.hejunlin.videoclip

D/VideoClip: trackIndex is 0;presentationTimeUs is 400000;sampleFlag is 0;sampleSize is 4003

12-18 19:04:49.377 22409-22409/com.hejunlin.videoclip

D/VideoClip: trackIndex is 0;presentationTimeUs is 466666;sampleFlag is 0;sampleSize is 2575

12-18 19:04:49.377 22409-22409/com.hejunlin.videoclip

D/VideoClip: trackIndex is 0;presentationTimeUs is 533333;sampleFlag is 0;sampleSize is 1364

12-18 19:04:49.378 22409-22409/com.hejunlin.videoclip

D/VideoClip: trackIndex is 0;presentationTimeUs is 600000;sampleFlag is 0;sampleSize is 3019

12-18 19:04:49.379 22409-22409/com.hejunlin.videoclip

D/VideoClip: trackIndex is 0;presentationTimeUs is 666666;sampleFlag is 0;sampleSize is 4595

12-18 19:04:49.379 22409-22409/com.hejunlin.videoclip

D/VideoClip: trackIndex is 0;presentationTimeUs is 733333;sampleFlag is 0;sampleSize is 3689

... (log omitted)

12-18 19:04:49.467 22409-22409/com.hejunlin.videoclip

D/VideoClip: AudioSampleTime is 42667

12-18 19:04:49.468 22409-22409/com.hejunlin.videoclip

D/VideoClip: trackIndex is 1;presentationTimeUs is 2005333

12-18 19:04:49.469 22409-22409/com.hejunlin.videoclip

D/VideoClip: trackIndex is 1;presentationTimeUs is 2048000

12-18 19:04:49.469 22409-22409/com.hejunlin.videoclip

D/VideoClip: trackIndex is 1;presentationTimeUs is 2090666

12-18 19:04:49.470 22409-22409/com.hejunlin.videoclip

D/VideoClip: trackIndex is 1;presentationTimeUs is 2133333

12-18 19:04:49.470 22409-22409/com.hejunlin.videoclip

... (log omitted)

D/VideoClip: trackIndex is 1;presentationTimeUs is 12032000
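The intervals printed in this log can be checked with a little arithmetic. The sketch below is plain Java with hypothetical helper names (`SampleTiming` and its methods). It assumes an AAC frame carries 1024 samples, which holds for AAC-LC but is not stated by the log itself.

```java
/** Illustrative only: arithmetic behind the intervals that appear in the log. */
final class SampleTiming {
    /** Duration of one audio frame in microseconds, rounded to the nearest value. */
    static long audioFrameDurationUs(int samplesPerFrame, int sampleRateHz) {
        return Math.round(samplesPerFrame * 1_000_000.0 / sampleRateHz);
    }

    /** Frame rate implied by a constant interval between video frames. */
    static double framesPerSecond(long frameIntervalUs) {
        return 1_000_000.0 / frameIntervalUs;
    }

    /** Time span covered between the first and the last copied sample. */
    static long elapsedUs(long firstPtsUs, long lastPtsUs) {
        return lastPtsUs - firstPtsUs;
    }

    public static void main(String[] args) {
        // sampleRate is 24000 in the log; 1024 samples per frame is an assumption.
        System.out.println(audioFrameDurationUs(1024, 24000));
        // videoSampleTime is 66667 in the log.
        System.out.println(framesPerSecond(66667));
        // First and last audio timestamps shown in the log.
        System.out.println(elapsedUs(2_005_333L, 12_032_000L));
    }
}
```

At 24000 Hz one audio frame lasts about 42667 µs, which matches the logged AudioSampleTime, and a 66667 µs video interval corresponds to roughly 15 frames per second. The audio timestamps span about 10.03 seconds, consistent with the requested 10-second clip.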
